Thanks for your request! Everything looks good. :ok_hand:

A Governance Team member will review the information provided and contact you back pretty soon.

Closed torans closed 1 year ago

- What is the relationship between you and the organization? Can you provide supporting materials?

- Your company was founded in December 2021. Can you explain the source of the training data (videos, images, files) for finance, agriculture, industry, etc.? How did you gather it?

- The data samples you provided are all small icons of cockroaches. Can you provide more samples of the training data (videos, images, files) for finance, agriculture, industry, etc.? How many copies will you store?

- Can you provide more detail about the other storage providers participating in this program, for example a list of the SPs you have contacted so far?

@Sunnyiscoming I am a member of the company's technical staff and have been entrusted to submit the company's application from my GitHub account. The authorization document is attached here: https://drive.google.com/file/d/1FJivNAlOysYIS1IJfsm2rk6eXHPsa69_/view?usp=sharing

Yes. Although the company has not been established for long, we have many high-quality customers through network cooperation. As for the data, many of these customers provide publicly available data, or data according to actual needs. From time to time we use copies of the data provided by customers to train more accurate models.

Related data samples are listed below.

- (Finance) Loan default forecast: https://drive.google.com/drive/folders/1x70wNRnmA_80VG7cx01Uaj-7T8etmQiV?usp=sharing
- (Industrial) Hard hat wearing prediction: https://drive.google.com/drive/folders/1MGN_Xlu3I4ZnDXbCRoofYaFStdxtnmlI?usp=sharing
- (Agriculture) Pest identification training: https://drive.google.com/drive/folders/1owpmTFIWwg5vF6V9q3bG7oO-UYIRwA2G?usp=sharing
- (Agriculture) Image monitoring of leafy vegetable pests and diseases: https://drive.google.com/drive/folders/1irnBDmfB28CdmM3SCcbRZ8FVb6IxIbqZ?usp=sharing
- (Industrial) Floor tile crack detection sample data: https://drive.google.com/drive/folders/1txb-wA5XHyMXoWF9dM59Zm8k0taSYw2q?usp=sharing

The SPs we are contacting include miner nodes in Hong Kong and Singapore. Each SP will store less than 25% of the total data. Once the quota is approved, we will coordinate with them in more detail. We hope you can review the application first.

In addition, we have revised the usage and resubmitted the application with a total DataCap of 2PiB and a weekly DataCap allocation of 40TiB. We hope it can be approved. Thanks again.

Could you send an email to filplus-app-review@fil.org from your official domain to confirm your identity? The email subject should include the issue ID #702. Can you list the SP nodes here? Do they support data retrieval?

@Sunnyiscoming We have sent the email to filplus-app-review@fil.org from our official email address; please confirm. We will store the data on the following SP nodes: f095334, f098743, f0509981, f01085801, f0818625. All of these SP nodes support data retrieval.

Hi @Sunnyiscoming. Is there anything else we need to add or improve?

Hey @Sunnyiscoming. As shown in the figure, we have sent an email to filplus-app-review@fil.org from the company's official domain email address and look forward to your review. Thanks.

If there are any issues we need to supplement or improve, we will actively cooperate with the SPs. We urgently need a safe and reliable platform to back up our existing data, and we no longer plan to keep using our self-built NAS server. If the application is approved, we will still need time to coordinate with the SPs on whether to transfer the data online or offline. We look forward to your reply. Thanks again.

Email received.

@Sunnyiscoming . Thanks for your reply, what should we do next?

Total DataCap requested

2PiB

Expected weekly DataCap usage rate

40TiB

Client address

f1xts6y3lb7mmcjelapapjsvso7yv2cvwip7pozay

f02049625

f1xts6y3lb7mmcjelapapjsvso7yv2cvwip7pozay

20TiB

Triggering because the client has answered questions. Notaries are requested to do further due diligence to ascertain the dataset. Thanks

@raghavrmadya Thanks. what should we do next?

This identification technique is very interesting and I will support and do due diligence

Your Datacap Allocation Request has been proposed by the Notary

bafy2bzaceaikhw3vmo2osmh454nvzyikgkpzb3gqzo63snvcwwqphbzysp3x4

Address

f1xts6y3lb7mmcjelapapjsvso7yv2cvwip7pozay

Datacap Allocated

20.00TiB

Signer Address

f1fg6jkxsr3twfnyhdlatmq36xca6sshptscds7xa

You can check the status of the message here: https://filfox.info/en/message/bafy2bzaceaikhw3vmo2osmh454nvzyikgkpzb3gqzo63snvcwwqphbzysp3x4

Your Datacap Allocation Request has been approved by the Notary

bafy2bzacea5qeuwr4nipz5saimmwqxrzudcaly2pa53xs32g4l6pncoxtfeeu

Address

f1xts6y3lb7mmcjelapapjsvso7yv2cvwip7pozay

Datacap Allocated

20.00TiB

Signer Address

f1qdko4jg25vo35qmyvcrw4ak4fmuu3f5rif2kc7i

You can check the status of the message here: https://filfox.info/en/message/bafy2bzacea5qeuwr4nipz5saimmwqxrzudcaly2pa53xs32g4l6pncoxtfeeu

@torans Hi! Great to see you have gotten approval for DataCap and advancing the mission of preserving humanity’s most important information. If you are looking for storage providers to store these data or have any questions, please visit #bigdata-exchange on Filecoin Slack or reply here.

We have strong demand from a diverse group of SPs, who are actively looking to onboard more data.

f02049625

f1xts6y3lb7mmcjelapapjsvso7yv2cvwip7pozay

40TiB

c72fd5ed-1925-45b1-a49c-440b8d49308e

f01858410

f1xts6y3lb7mmcjelapapjsvso7yv2cvwip7pozay

psh0691 & not found

100% of weekly dc amount requested

40TiB

20TiB

1.98PiB

| Number of deals | Number of storage providers | Previous DC Allocated | Top provider | Remaining DC |
|---|---|---|---|---|
| 293 | 3 | 20TiB | 49.57 | 4.31TiB |

Your Datacap Allocation Request has been proposed by the Notary

bafy2bzacedaofrd3ryol3uj3w6wfv736qd6vrukhgjcptaovdwinkqoryebke

Address

f1xts6y3lb7mmcjelapapjsvso7yv2cvwip7pozay

Datacap Allocated

40.00TiB

Signer Address

f1zffqhxwq2rrg7rtot6lmkl6hb2xyrrseawprzsq

Id

c72fd5ed-1925-45b1-a49c-440b8d49308e

You can check the status of the message here: https://filfox.info/en/message/bafy2bzacedaofrd3ryol3uj3w6wfv736qd6vrukhgjcptaovdwinkqoryebke

Your Datacap Allocation Request has been approved by the Notary

bafy2bzaceaufbpwt4zg3umvwpevxjl5qxezxztzzw6piz2ptkwxaju4rewm34

Address

f1xts6y3lb7mmcjelapapjsvso7yv2cvwip7pozay

Datacap Allocated

40.00TiB

Signer Address

f1e77zuityhvvw6u2t6tb5qlnsegy2s67qs4lbbbq

Id

c72fd5ed-1925-45b1-a49c-440b8d49308e

You can check the status of the message here: https://filfox.info/en/message/bafy2bzaceaufbpwt4zg3umvwpevxjl5qxezxztzzw6piz2ptkwxaju4rewm34

f02049625

f1xts6y3lb7mmcjelapapjsvso7yv2cvwip7pozay

80TiB

3ea4955d-8f99-4f00-a7f0-3bc0f69bcc64

f01858410

f1xts6y3lb7mmcjelapapjsvso7yv2cvwip7pozay

newwebgroup & GaryGJG

200% of weekly dc amount requested

80TiB

20TiB

1.98PiB

| Number of deals | Number of storage providers | Previous DC Allocated | Top provider | Remaining DC |
|---|---|---|---|---|
| 752 | 6 | 40TiB | 20.32 | 364GiB |
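The tranche sizes in this thread (20TiB, 40TiB, 80TiB) can be related to the requested weekly rate of 40TiB with a little arithmetic. The following is a rough Python sketch, not the bot's actual logic; the function names are hypothetical, and reading the reported 1.98PiB as "2PiB minus the first 20TiB tranche" is an assumption.

```python
TIB_PER_PIB = 1024

# Figures taken from the application: 2PiB total, 40TiB per week.
TOTAL_REQUESTED_TIB = 2 * TIB_PER_PIB
WEEKLY_RATE_TIB = 40


def percent_of_weekly(tranche_tib, weekly_tib=WEEKLY_RATE_TIB):
    """Express a tranche as a percentage of the weekly DataCap rate."""
    return 100.0 * tranche_tib / weekly_tib


def remaining_pib(total_tib, allocated_tib):
    """Return the DataCap left (in PiB) after the given tranches (in TiB)."""
    return (total_tib - sum(allocated_tib)) / TIB_PER_PIB


if __name__ == "__main__":
    for tranche in (20, 40, 80):
        print(f"{tranche}TiB is {percent_of_weekly(tranche):.0f}% of the weekly rate")
    # Assumption: the remaining figure is computed after the first tranche only.
    print(f"Remaining: {remaining_pib(TOTAL_REQUESTED_TIB, [20]):.2f}PiB")
```

Under these assumptions the 40TiB and 80TiB tranches correspond to the "100%" and "200% of weekly dc amount requested" lines in the bot comments.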

Chuangshengyun Technology: f1xts6y3lb7mmcjelapapjsvso7yv2cvwip7pozay

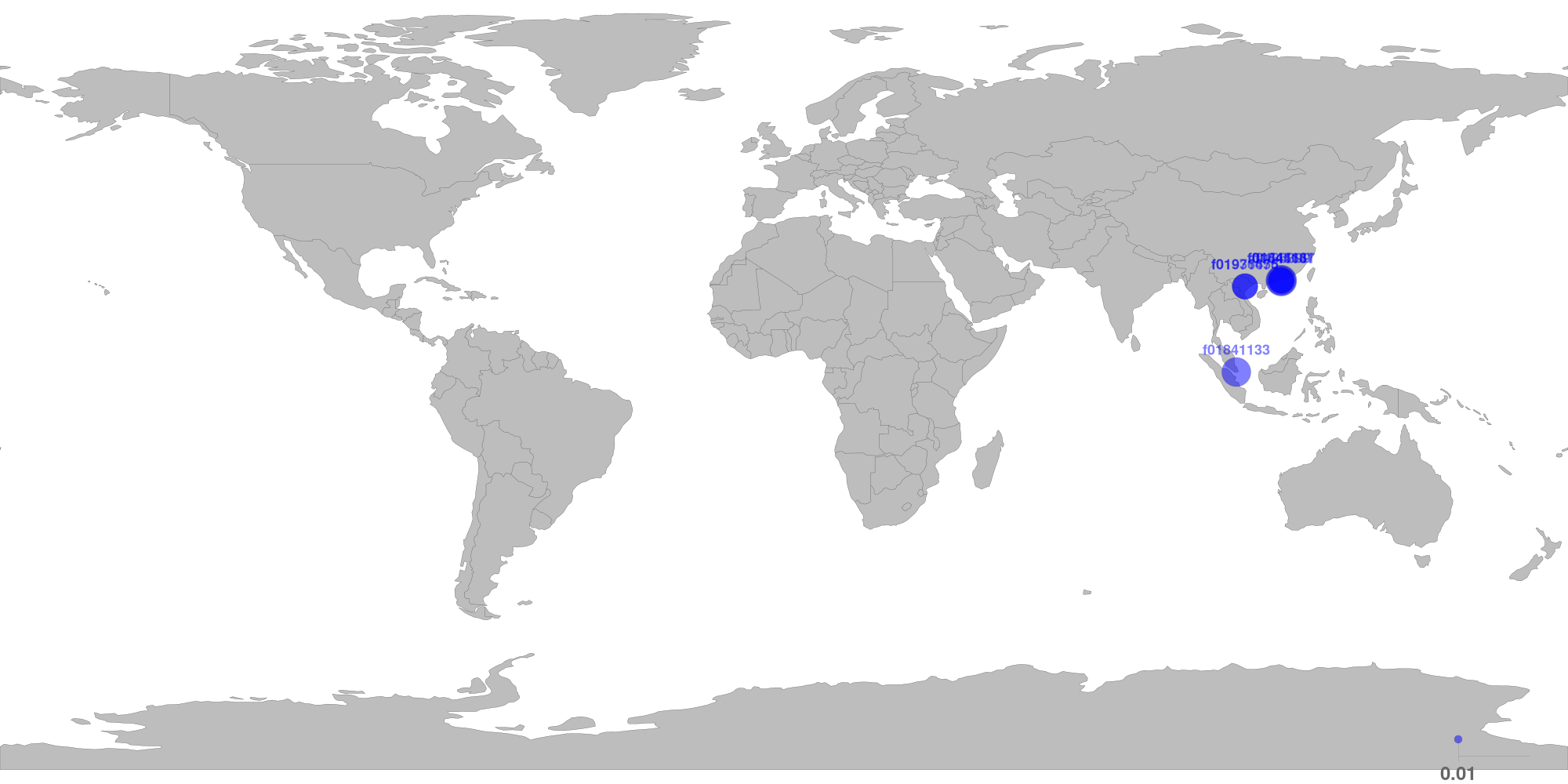

The below table shows the distribution of storage providers that have stored data for this client.

If this is the first time a provider takes verified deal, it will be marked as new.

For most of the datacap application, below restrictions should apply.

⚠️ f054464 has sealed 27.39% of total datacap.

| Provider | Location | Total Deals Sealed | Percentage | Unique Data | Duplicate Deals |
|---|---|---|---|---|---|
| f054464 | Hong Kong, Central and Western, HK | 11.97 TiB | 27.39% | 11.97 TiB | 0.00% |
| f01445167 | Hong Kong, Central and Western, HK | 8.03 TiB | 18.38% | 8.03 TiB | 0.00% |
| f01841133 | Singapore, Singapore, SG | 8.02 TiB | 18.34% | 8.02 TiB | 0.00% |
| f01841131 | Hong Kong, Central and Western, HK | 4.02 TiB | 9.20% | 3.99 TiB | 0.78% |
| f01936456 (new) | Văn Điển, Hanoi, VN | 3.93 TiB | 8.98% | 3.93 TiB | 0.00% |
| f01971675 (new) | Văn Điển, Hanoi, VN | 3.93 TiB | 8.98% | 3.93 TiB | 0.00% |
| f0136399 | Hong Kong, Central and Western, HK | 3.81 TiB | 8.72% | 3.81 TiB | 0.00% |
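The percentage column in the provider table can be approximated from the sealed sizes. The following is a minimal Python sketch, not the checker bot's actual implementation; the names `provider_shares` and `over_threshold` are hypothetical, and the 25% limit is an assumption taken from the client's own statement that each SP would store less than 25% of the data.

```python
# Sealed deal sizes in TiB, copied from the provider table.
SEALED_TIB = {
    "f054464": 11.97,
    "f01445167": 8.03,
    "f01841133": 8.02,
    "f01841131": 4.02,
    "f01936456": 3.93,
    "f01971675": 3.93,
    "f0136399": 3.81,
}

# Assumed limit: the client stated each SP would hold less than 25%.
MAX_SHARE_PERCENT = 25.0


def provider_shares(sealed_tib):
    """Return each provider's share of the total sealed size, in percent."""
    total = sum(sealed_tib.values())
    return {provider: 100.0 * size / total for provider, size in sealed_tib.items()}


def over_threshold(sealed_tib, limit=MAX_SHARE_PERCENT):
    """Return the providers whose share exceeds the limit, sorted by ID."""
    shares = provider_shares(sealed_tib)
    return sorted(provider for provider, pct in shares.items() if pct > limit)


if __name__ == "__main__":
    for provider, pct in provider_shares(SEALED_TIB).items():
        flag = " (over limit)" if pct > MAX_SHARE_PERCENT else ""
        print(f"{provider}: {pct:.2f}%{flag}")
```

Because the table sizes are already rounded to two decimals, shares computed this way may differ from the bot's figures in the last digit.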

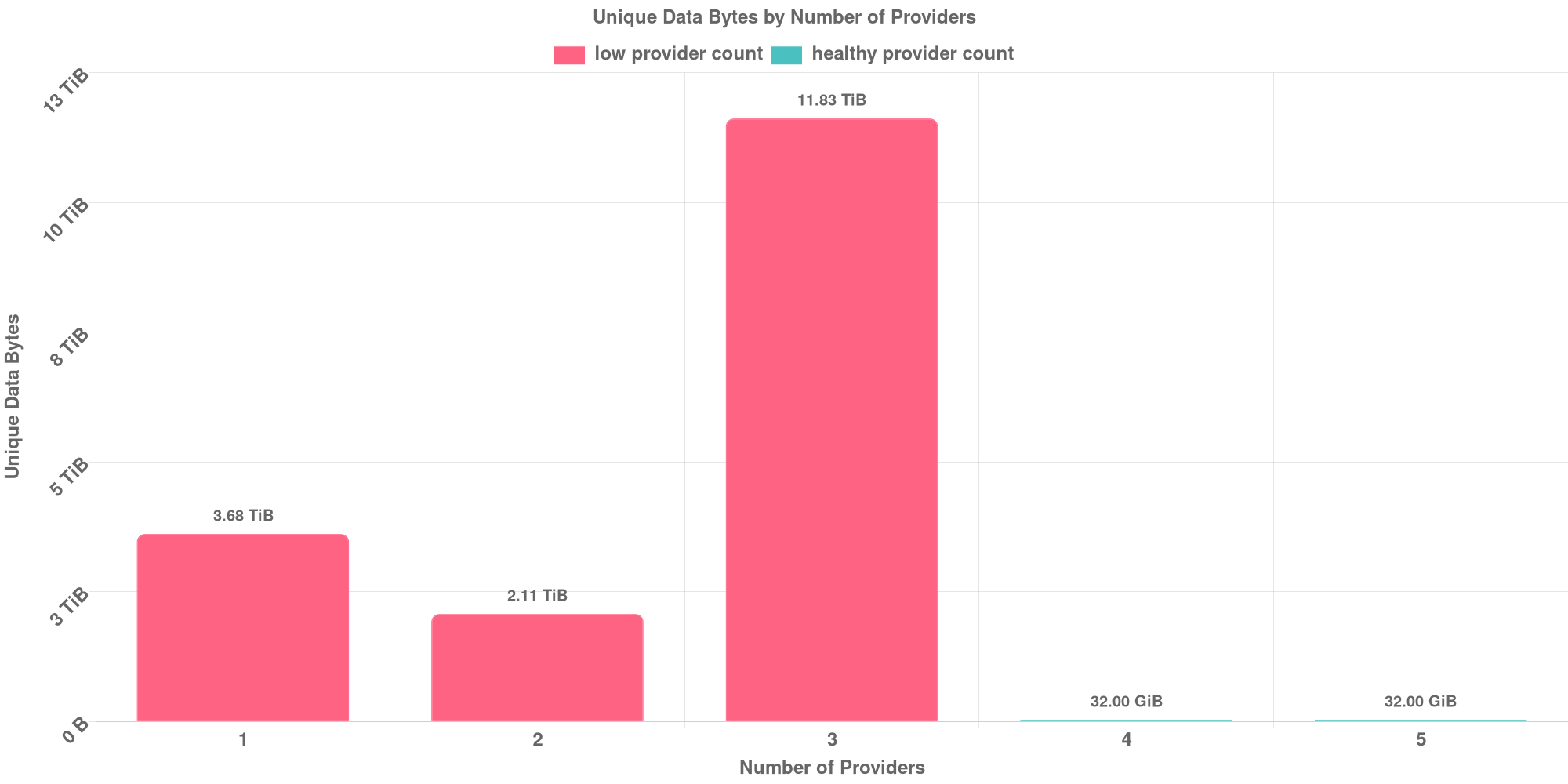

The table below shows how much unique data is replicated across storage providers.

⚠️ 99.36% of deals are for data replicated across less than 4 storage providers.

| Unique Data Size | Total Deals Made | Number of Providers | Deal Percentage |
|---|---|---|---|
| 3.68 TiB | 3.71 TiB | 1 | 8.49% |
| 2.11 TiB | 4.22 TiB | 2 | 9.65% |
| 11.83 TiB | 35.50 TiB | 3 | 81.22% |
| 32.00 GiB | 128.00 GiB | 4 | 0.29% |
| 32.00 GiB | 160.00 GiB | 5 | 0.36% |
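The 99.36% figure in the replication warning can be checked against the replication table. This is a small Python sketch under the assumption that the figure is the share of total deal size held in rows with fewer than 4 providers; the function name `under_replicated_percent` is hypothetical and this is not the bot's own code.

```python
# (unique data TiB, total deals TiB, number of providers), from the table.
# The two GiB rows are converted to TiB by dividing by 1024.
REPLICATION_ROWS = [
    (3.68, 3.71, 1),
    (2.11, 4.22, 2),
    (11.83, 35.50, 3),
    (32 / 1024, 128 / 1024, 4),
    (32 / 1024, 160 / 1024, 5),
]


def under_replicated_percent(rows, min_providers=4):
    """Percent of total deal size stored on fewer than min_providers SPs."""
    total = sum(deals for _, deals, _ in rows)
    low = sum(deals for _, deals, count in rows if count < min_providers)
    return 100.0 * low / total


if __name__ == "__main__":
    print(f"{under_replicated_percent(REPLICATION_ROWS):.2f}%")
```

The same result follows from adding the first three entries of the Deal Percentage column: 8.49% + 9.65% + 81.22% = 99.36%.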

The table below shows how much unique data is shared with other clients. Different applications usually own different data, which should not resolve to the same CID.

✔️ No CID sharing has been observed.

[^1]: To manually trigger this report, add a comment with text checker:manualTrigger

Hello @torans

I have been looking at your LDN to approve it for DataCap, but I have some questions.

Are you doing the data preparation on behalf of a client, or are you the client who owns this data and prepares it yourself?

I see that the SPs you work with all belong to one organization. In addition, they do not have a clean track record and have been involved in non-compliant storage of FIL+ data. Can you provide me with their contact information? If you prefer, you can send it by email to kyc@dcent.nl.

That brings me to my third question. Can you provide a list of SPs for your new DataCap allocation? FYI, the SPs should not be controlled by one organization; you need 3 independent organizations in 3 different regions. If you want me to sign a new tranche of DataCap, we need an understanding that you won't use it with the existing organization's SPs. I know this might be difficult. If you need help finding SPs with a good reputation, or any other help, let us know.

I see that the SPs you work with all belong to one organization

Happy New Year, great to hear from you. Our company provides customers with artificial intelligence algorithm services and uses the data for AI model training. We have therefore collected a lot of application-related data together with our customers. You mentioned that the SPs we work with all belong to the same organization; that is not the case. As a Filecoin DataCap storage client, we contacted our partner SPs immediately after receiving this information and found no compliance violations. If you believe there has been a violation, please provide the relevant proof, and we will investigate further upon receipt. If a violation is confirmed, we will rectify it according to the compliance requirements. To promote the development of Filecoin, we are willing to cooperate actively with official arrangements. Let us know if you have questions.

Hi there, happy new year to you too!

I take it you can work with this? Machines in the same subnets belong to the same organization. Machines on VPN, etc.

Hello @torans

You asked me a question and I provided you with the relevant proof. Can you let me know what your plan is to rectify the situation?

We discussed this with the SPs, who replied that the VPN is mainly used to stabilize overseas network bandwidth and does not affect DataCap data access at all. In addition, the official requirement is four different copies, but there appears to be no requirement that the four copies be at IPs in different cities. The SPs we found are located in three different countries and cities (Hong Kong, Vietnam, and Singapore) and are not the same organization. Finding SPs for all four copies in different cities would take us a lot of effort.

@torans At a minimum, we expect all applicants to exert effort towards advancing the Filecoin network. In recognition of such efforts, successful applicants will be rewarded with datacap. Good luck with finding more storage providers!

Dear @herrehesse, thank you for your reply. The Filecoin network is a great discovery for mankind. Yes, we will all do more to help the Filecoin network advance human progress and witness the development of history.

checker:manualTrigger

✔️ Storage provider distribution looks healthy.

✔️ Data replication looks healthy.

✔️ No CID sharing has been observed.

[^1]: To manually trigger this report, add a comment with text checker:manualTrigger

[^2]: Deals from those addresses are combined into this report as they are specified with checker:manualTrigger

[^3]: To manually trigger this report with deals from other related addresses, add a comment with text checker:manualTrigger <other_address_1> <other_address_2> ...

@torans I read the bot's report. The number of SPs you have meets the requirements, but they are concentrated in two regions, which is still slightly out of line with the LDN packaging specification. You should look for several SPs in different regions to store your data; otherwise it will be difficult to obtain community support in the future.

In addition, I tried connecting to some of your SPs, and they still meet the requirements in terms of packaging specifications. For example, see the following results.

$./bin/lotus client query-ask f01445167

Ask: f01445167

Price per GiB: 0.0000000005 FIL

Verified Price per GiB: 0 FIL

Max Piece size: 64 GiB

Min Piece size: 256 B

$

$

$./bin/lotus client query-ask f01841131

Ask: f01841131

Price per GiB: 0.0000000005 FIL

Verified Price per GiB: 0 FIL

Max Piece size: 32 GiB

Min Piece size: 256 B

$

$

$./bin/lotus client query-ask f0136399

Ask: f0136399

Price per GiB: 0.0000000005 FIL

Verified Price per GiB: 0 FIL

Max Piece size: 64 GiB

Min Piece size: 256 B

$

$

$./bin/lotus client query-ask f01841133

Ask: f01841133

Price per GiB: 0.0000000005 FIL

Verified Price per GiB: 0 FIL

Max Piece size: 32 GiB

Min Piece size: 256 B

$

$

$./bin/lotus client query-ask f01936456

Ask: f01936456

Price per GiB: 0 FIL

Verified Price per GiB: 0 FIL

Max Piece size: 64 GiB

Min Piece size: 1 GiB

$

$

$./bin/lotus client query-ask f01971675

Ask: f01971675

Price per GiB: 0 FIL

Verified Price per GiB: 0 FIL

Max Piece size: 64 GiB

Min Piece size: 1 GiB

$./bin/lotus client retrieval-ask f01445167 baga6ea4seaqn2oydbf3qxkkpa46x3ob4ypoj36uorkcsnywa7ko6qhwwabgzgfi

Ask: f01445167

Unseal price: 0 FIL

Price per byte: 0 FIL

Payment interval: 0 B

Payment interval increase: 0 B

Mar 4 09:35:03 proposals dealscanner-f01904773-f01936456: Error: Failed to retrieve content with candidate miner f01936456: data transfer failed: deal errored: getting pieces for cid bafybeifqa4bpq3y2ud6r3rgwyoxstxsbqdk7hvrdeidcoarhlbmkpk3qca: getting pieces containing block bafybeifqa4bpq3y2ud6r3rgwyoxstxsbqdk7hvrdeidcoarhlbmkpk3qca: failed to lookup index for mh 1220b00702f86f1aa0fd1dc4d6c3af29de4180d5f3d62322062702275858a7ab7010, err: datastore: key not found

Mar 4 21:22:35 proposals dealscanner-f01904773-f01936456: ERROR: offer error: retrieval query offer errored: failed to fetch piece to retrieve from: getting pieces for cid bafybeignteljnwvn426jxnefei2tj2l6r3cstcme46d7pjdigab4yhtpzy: getting pieces containing block bafybeignteljnwvn426jxnefei2tj2l6r3cstcme46d7pjdigab4yhtpzy: failed to lookup index for mh 1220cd991696daade6bc9bb485223534e97e8ec5298984e787f7a4683003cc1e6fce, err: datastore: key not found

Mar 4 21:22:35 proposals dealscanner-f01904773-f01936456: Error: Failed to retrieve content with candidate miner f01936456: data transfer failed: deal errored: getting pieces for cid bafybeifqa4bpq3y2ud6r3rgwyoxstxsbqdk7hvrdeidcoarhlbmkpk3qca: getting pieces containing block bafybeifqa4bpq3y2ud6r3rgwyoxstxsbqdk7hvrdeidcoarhlbmkpk3qca: failed to lookup index for mh 1220b00702f86f1aa0fd1dc4d6c3af29de4180d5f3d62322062702275858a7ab7010, err: datastore: key not found

Mar 4 21:22:35 proposals dealscanner-f01904773-f01936456-19659250: ERROR: offer error: retrieval query offer errored: failed to fetch piece to retrieve from: getting pieces for cid bafybeidk2glqisdrnq7vzmiu4io2hjlg5ioxtogaif4tflzebupw5k22ri: getting pieces containing block bafybeidk2glqisdrnq7vzmiu4io2hjlg5ioxtogaif4tflzebupw5k22ri: failed to lookup index for mh 12206ad1970448716c3f5cb114e21da3a566ea1d79b8c0417932af240d1f6eab5a8a, err: datastore: key not found

Mar 4 21:22:35 proposals dealscanner-f01904773-f01936456-19662920: ERROR: offer error: retrieval query offer errored: failed to fetch piece to retrieve from: getting pieces for cid bafybeifqa4bpq3y2ud6r3rgwyoxstxsbqdk7hvrdeidcoarhlbmkpk3qca: getting pieces containing block bafybeifqa4bpq3y2ud6r3rgwyoxstxsbqdk7hvrdeidcoarhlbmkpk3qca: failed to lookup index for mh 1220b00702f86f1aa0fd1dc4d6c3af29de4180d5f3d62322062702275858a7ab7010, err: datastore: key not found

Per the above, retrievals are not working. Can you please fix this so that we can do our due diligence?
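To see which payload CIDs are failing, the retrieval log entries can be grouped by CID. The following is a small Python sketch for illustration only; the regular expression is an assumption based on the message format visible in the pasted log, and `failed_cids` is a hypothetical helper, not part of Lotus or the deal scanner.

```python
import re
from collections import Counter

# Assumed message shape: "... getting pieces for cid <CID>: getting pieces ..."
CID_PATTERN = re.compile(r"getting pieces for cid (\w+):")

# Two entries copied from the log in this thread.
SAMPLE_LOG = (
    "Mar 4 09:35:03 proposals dealscanner-f01904773-f01936456: Error: Failed to "
    "retrieve content with candidate miner f01936456: data transfer failed: deal "
    "errored: getting pieces for cid "
    "bafybeifqa4bpq3y2ud6r3rgwyoxstxsbqdk7hvrdeidcoarhlbmkpk3qca: getting pieces "
    "containing block bafybeifqa4bpq3y2ud6r3rgwyoxstxsbqdk7hvrdeidcoarhlbmkpk3qca: "
    "failed to lookup index for mh "
    "1220b00702f86f1aa0fd1dc4d6c3af29de4180d5f3d62322062702275858a7ab7010, "
    "err: datastore: key not found\n"
    "Mar 4 21:22:35 proposals dealscanner-f01904773-f01936456: ERROR: offer error: "
    "retrieval query offer errored: failed to fetch piece to retrieve from: "
    "getting pieces for cid "
    "bafybeignteljnwvn426jxnefei2tj2l6r3cstcme46d7pjdigab4yhtpzy: getting pieces "
    "containing block bafybeignteljnwvn426jxnefei2tj2l6r3cstcme46d7pjdigab4yhtpzy: "
    "failed to lookup index for mh "
    "1220cd991696daade6bc9bb485223534e97e8ec5298984e787f7a4683003cc1e6fce, "
    "err: datastore: key not found\n"
)


def failed_cids(log_text):
    """Count how often each CID appears in a 'getting pieces for cid' error."""
    return Counter(match.group(1) for match in CID_PATTERN.finditer(log_text))


if __name__ == "__main__":
    for cid, count in failed_cids(SAMPLE_LOG).most_common():
        print(f"{cid}: {count} failed lookup(s)")
```

A summary like this makes it easier to tell whether a provider fails on every piece or only on specific CIDs, which is the distinction the due-diligence request depends on.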

This application has not seen any responses in the last 10 days. This issue will be marked with Stale label and will be closed in 4 days. Comment if you want to keep this application open.

This application has not seen any responses in the last 14 days, so for now it is being closed. Please feel free to contact the Fil+ Gov team to re-open the application if it is still being processed. Thank you!

Large Dataset Notary Application

To apply for DataCap to onboard your dataset to Filecoin, please fill out the following.

Core Information

Please respond to the questions below by replacing the text saying "Please answer here". Include as much detail as you can in your answer.

Project details

Share a brief history of your project and organization.

What is the primary source of funding for this project?

What other projects/ecosystem stakeholders is this project associated with?

Use-case details

Describe the data being stored onto Filecoin

Where was the data in this dataset sourced from?

Can you share a sample of the data? A link to a file, an image, a table, etc., are good ways to do this.

Confirm that this is a public dataset that can be retrieved by anyone on the Network (i.e., no specific permissions or access rights are required to view the data).

What is the expected retrieval frequency for this data?

For how long do you plan to keep this dataset stored on Filecoin?

DataCap allocation plan

In which geographies (countries, regions) do you plan on making storage deals?

How will you be distributing your data to storage providers? Is there an offline data transfer process?

How do you plan on choosing the storage providers with whom you will be making deals? This should include a plan to ensure the data is retrievable in the future both by you and others.

How will you be distributing deals across storage providers?

Do you have the resources/funding to start making deals as soon as you receive DataCap? What support from the community would help you onboard onto Filecoin?