Thanks for your request!

Heads up, you’re requesting more than the typical weekly onboarding rate of DataCap!

Thanks for your request! Everything looks good. :ok_hand:

A Governance Team member will review the information provided and contact you back pretty soon.

@galen-mcandrew @raghavrmadya Hello, official team. Is there any update? We want to join Filecoin! Thank you.

Is your organization name 甘肃阡陌网网络科技有限公司 (https://www.qcc.com/firm/b6edfe23092c0615247cdb5902159bd3.html)? Can you explain your data composition and provide sufficient data samples separately? How many copies will you store? What is the relationship between you and the organization? Can you provide more detailed information about the other storage providers participating in this program, for example by listing the SPs you have contacted so far?

@Sunnyiscoming Thank you. Great to hear from you. Regards!

Hi @galen-mcandrew @raghavrmadya, hoping for an update, thanks. If you still have questions, I am willing to answer them.

Can you explain your data composition and provide sufficient data samples separately?

Any update here?

Any update here?

Hi, sorry for the late reply. I have added a data example (https://github.com/qianmonetwork/data). Our data comes from our day-to-day business and consists mostly of accumulated business materials. We have a lot of video material: we serve different customers in different scenarios and need many different types of material. @Sunnyiscoming

@raghavrmadya @Sunnyiscoming Hello, the application was submitted a long time ago and we hope to make progress. If there are any doubts, I will explain. Once it passes, it will greatly help me find the right SPs. Thank you very much.

The data sample provided is not enough to substantiate 5PB of data. Could you explain your business and the corresponding data types and sizes in more detail, and provide the corresponding data samples?

Hi @Sunnyiscoming, thank you for your careful review. Because of you, the application will be better.

We undertake business in product photography, advertising shooting, marketing planning, graphic design, brand promotion, and scenic advertising. For example, in the photography business line, experienced photographers first communicate fully with customers during pre-planning to pin down their needs accurately. This is followed by professional production, and finally by post-production compositing by photographers and artists to ensure customer satisfaction.

The vast majority of our data is video. We have accumulated a lot of high-definition video material, which can be used for customer demonstrations, self-promotion, and post-production. As the business has grown, so have our video resources. Much of this material is not used often, so storing it was a headache for us before we learned about Filecoin.

I made preliminary statistics on the existing data. Our server stores 700T+ of video material that is not frequently used. To save costs, it is kept as a single copy, which is easily damaged. We want to store multiple copies of our data in Filecoin, so we applied for a 5P quota. We plan to store more than 5 copies. On that basis, a quota of 3.5P would meet most of our needs, but considering that data keeps accumulating in the business, we are applying for 5P. Of course, if you think that is not possible, we can change it to 3.5P. We have reorganized all of the company's data, including new data that needs to be archived, and we will store more than 1P of data in Filecoin.
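
The sizing described in this comment can be checked with a few lines of arithmetic. This is only a sketch: the function name is hypothetical, and it assumes that "700T" means 700 TiB, that "5P" means 5 PiB, and that exactly 5 copies are stored.

```python
TIB_PER_PIB = 1024  # binary units; an assumption about what "T" and "P" mean here


def required_datacap_tib(source_tib: float, copies: int) -> float:
    """DataCap needed to store `copies` replicas of `source_tib` of source data."""
    if source_tib < 0 or copies < 1:
        raise ValueError("source size must be non-negative and copies at least 1")
    return source_tib * copies


needed_tib = required_datacap_tib(700, 5)
requested_tib = 5 * TIB_PER_PIB
headroom_tib = requested_tib - needed_tib

print(f"needed:   {needed_tib:.0f} TiB ({needed_tib / TIB_PER_PIB:.2f} PiB)")
print(f"headroom: {headroom_tib:.0f} TiB left for future data")
```

In binary units, 5 copies of 700 TiB come to 3500 TiB, slightly under the "3.5P" quoted in the comment, which reads as a decimal figure.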

I am still sorting out more sample data and uploading it somewhere convenient for viewing: https://pan.baidu.com/s/1ud3mxEvIA60X8Q8TR5Ovaw?pwd=mmuo

Thank you.

@galen-mcandrew @raghavrmadya Hope for new progress, thanks!

@simonkim0515 Thanks for your attention and for assigning it. Looking forward to good progress, thank you very much!

Total DataCap requested

5PiB

Expected weekly DataCap usage rate

400TiB

Client address

f1nn5ocwfcwotrtr4wjzy7fwfqeid23vl2cshprea

f02049625

f1nn5ocwfcwotrtr4wjzy7fwfqeid23vl2cshprea

200TiB

25a1db27-e560-4c35-96ad-471825c98b5f

Hi @qianmonetwork ,

I checked and found that your company was registered not long ago, yet you state that you have 700T of original data, which is confusing. Please prove your actual data storage capacity, for example with screenshots of your storage. Also, if you have selected SPs, can you list them? Finally, you should send a confirmation email to filplus-app-review@fil.org from an email address on your company domain.

Hi @YuanHeHK

I checked that your company registration time is not very long, but you declare that you have 700T of original data, which will make people more confused. Hopefully, you can prove your real data storage capacity, such as storage capacity through screenshots, etc.

Thank you for your question and attention. First of all, we have multiple business lines, and we continually accumulate high-quality material to enrich our library. In addition, we already had some business and accumulated data before qianmowang was established. Here is a screenshot of our storage server (https://user-images.githubusercontent.com/64911932/206461963-417ce3a7-d0b6-4975-8af2-67b764ec1ac5.png).

Also, if you have selected SPs, can you list them?

Yes, after searching, we found cooperating SPs. They are: f01954294 f01901351 f01870888 f01922865

Finally, you should send a confirmation email to filplus-app-review@fil.org using the email address of your company domain.

We sent a verification email to filplus-app-review@fil.org from our email address (https://user-images.githubusercontent.com/64911932/206461819-4d0dfef3-3455-477a-b39a-48599353380e.png).

@qianmonetwork Thank you for your patient reply. In future, please upload images directly in your reply on the issue, which makes them easier to view. Also, 4 SPs is still too few, and I recommend working with more SPs in the future. For now there seem to be no problems. Given how long this application has been open, I recommend supporting approval first and continuing to check on it afterwards.

Your Datacap Allocation Request has been proposed by the Notary

bafy2bzacec6cfkf6jgvrdywegxplbq5igpvirkxeidss3w24dvqyrjxwm57du

Address

f1nn5ocwfcwotrtr4wjzy7fwfqeid23vl2cshprea

Datacap Allocated

200.00TiB

Signer Address

f1fg6jkxsr3twfnyhdlatmq36xca6sshptscds7xa

Id

25a1db27-e560-4c35-96ad-471825c98b5f

You can check the status of the message here: https://filfox.info/en/message/bafy2bzacec6cfkf6jgvrdywegxplbq5igpvirkxeidss3w24dvqyrjxwm57du

Thanks for your support

The information and email confirmation have been completed and everything looks good. I am willing to support and will continue to pay attention.

Your Datacap Allocation Request has been approved by the Notary

bafy2bzacea4tjtf3sliy6hyeticaagfihvb5mjjogts5oicenvgqsz6s2imzm

Address

f1nn5ocwfcwotrtr4wjzy7fwfqeid23vl2cshprea

Datacap Allocated

200.00TiB

Signer Address

f1yayfsv6whu3rheviucvventj3y6t542xfpb47ei

Id

25a1db27-e560-4c35-96ad-471825c98b5f

You can check the status of the message here: https://filfox.info/en/message/bafy2bzacea4tjtf3sliy6hyeticaagfihvb5mjjogts5oicenvgqsz6s2imzm

Thanks for your support

f02049625

f1nn5ocwfcwotrtr4wjzy7fwfqeid23vl2cshprea

400TiB

0e0b9bc0-2b81-4ea3-b60d-060f70211860

f01858410

f1nn5ocwfcwotrtr4wjzy7fwfqeid23vl2cshprea

not found & fireflyHZ

100% of weekly dc amount requested

400TiB

200TiB

4.80PiB

| Number of deals | Number of storage providers | Previous DC Allocated | Top provider | Remaining DC |
|---|---|---|---|---|
| 4747 | 4 | 200TiB | 37.6 | 46.90TiB |

QianMoWang f1nn5ocwfcwotrtr4wjzy7fwfqeid23vl2cshprea

1 NDLABS-OFFICE, 1 YuanHeHK

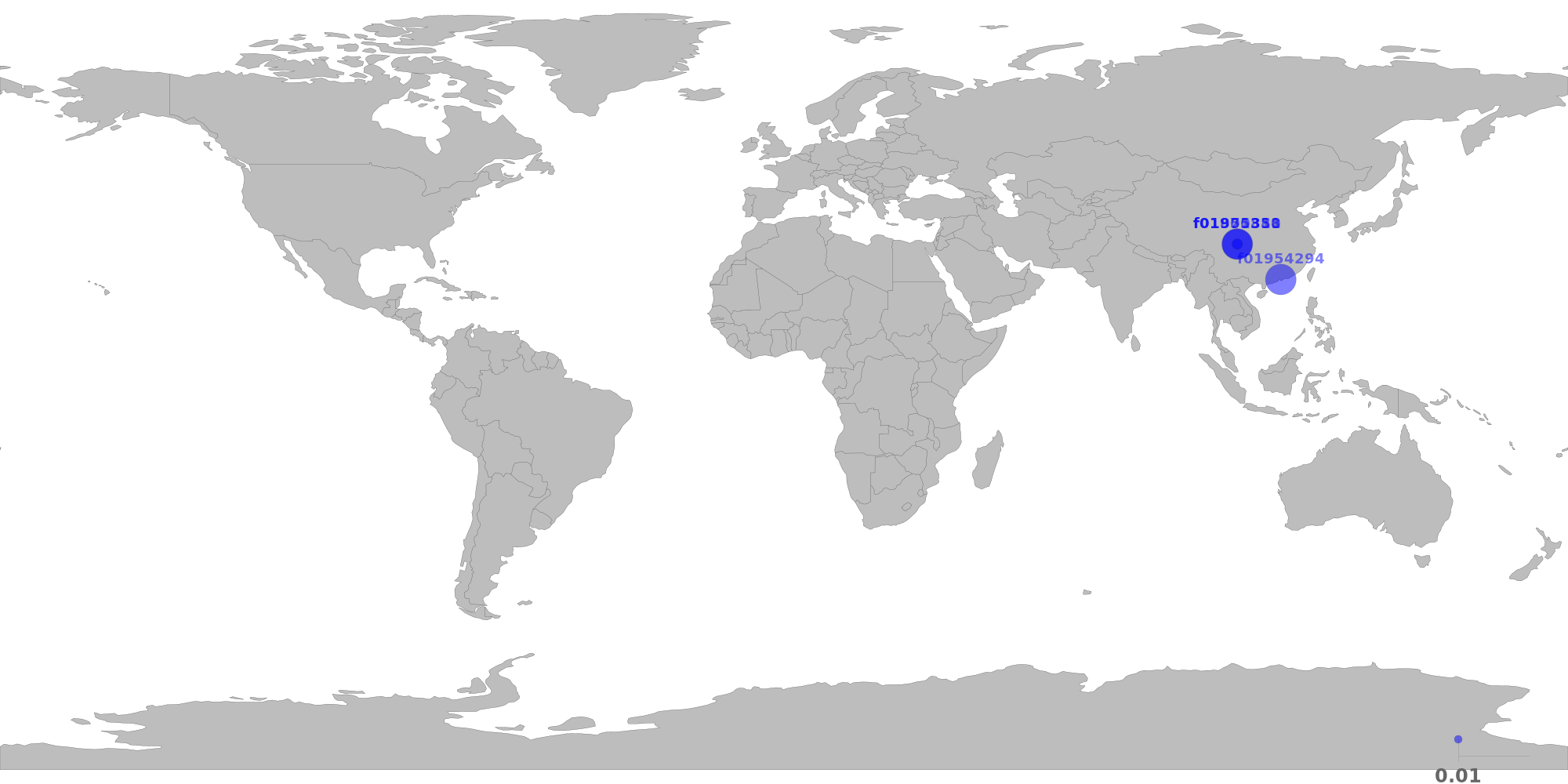

The below table shows the distribution of storage providers that have stored data for this client.

If this is the first time a provider takes verified deal, it will be marked as new.

For most of the datacap application, below restrictions should apply.

Since this is the 3rd allocation, the following restrictions have been relaxed:

⚠️ 60.16% of total deal sealed by f01901351 are duplicate data.

⚠️ 35.85% of total deal sealed by f01870888 are duplicate data.

⚠️ 62.78% of total deal sealed by f01954294 are duplicate data.

| Provider | Location | Total Deals Sealed | Percentage | Unique Data | Duplicate Deals |
|---|---|---|---|---|---|
| f01901351 | Chengdu, Sichuan, CN (CHINA UNICOM China169 Backbone) | 46.13 TiB | 36.11% | 18.38 TiB | 60.16% |
| f01870888 | Chengdu, Sichuan, CN (CHINA UNICOM China169 Backbone) | 35.56 TiB | 27.84% | 22.81 TiB | 35.85% |
| f01955310 (new) | Chengdu, Sichuan, CN (CHINA UNICOM China169 Backbone) | 1.56 TiB | 1.22% | 1.56 TiB | 0.00% |
| f01954294 | Shenzhen, Guangdong, CN (China Unicom Guangdong IP network) | 44.50 TiB | 34.83% | 16.56 TiB | 62.78% |
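
The Percentage and Duplicate Deals columns can be reproduced from the sealed and unique sizes. The sketch below assumes the checker computes each provider's share as sealed / total sealed and the duplicate rate as (sealed - unique) / sealed. That formula is inferred from the numbers in the report, not taken from the checker's source, and the function name is hypothetical.

```python
# (total deals sealed in TiB, unique data in TiB), copied from the report above
PROVIDERS = {
    "f01901351": (46.13, 18.38),
    "f01870888": (35.56, 22.81),
    "f01955310": (1.56, 1.56),
    "f01954294": (44.50, 16.56),
}


def provider_stats(rows):
    """Return {provider: (share of all sealed deals %, duplicate data %)}."""
    total_sealed = sum(sealed for sealed, _ in rows.values())
    stats = {}
    for provider, (sealed, unique) in rows.items():
        share = 100 * sealed / total_sealed
        duplicate = 100 * (sealed - unique) / sealed
        stats[provider] = (share, duplicate)
    return stats


for provider, (share, duplicate) in provider_stats(PROVIDERS).items():
    print(f"{provider}: {share:.2f}% of deals, {duplicate:.2f}% duplicate")
```

Because the report rounds sizes to two decimals, recomputed values can differ from the published ones in the last digit.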

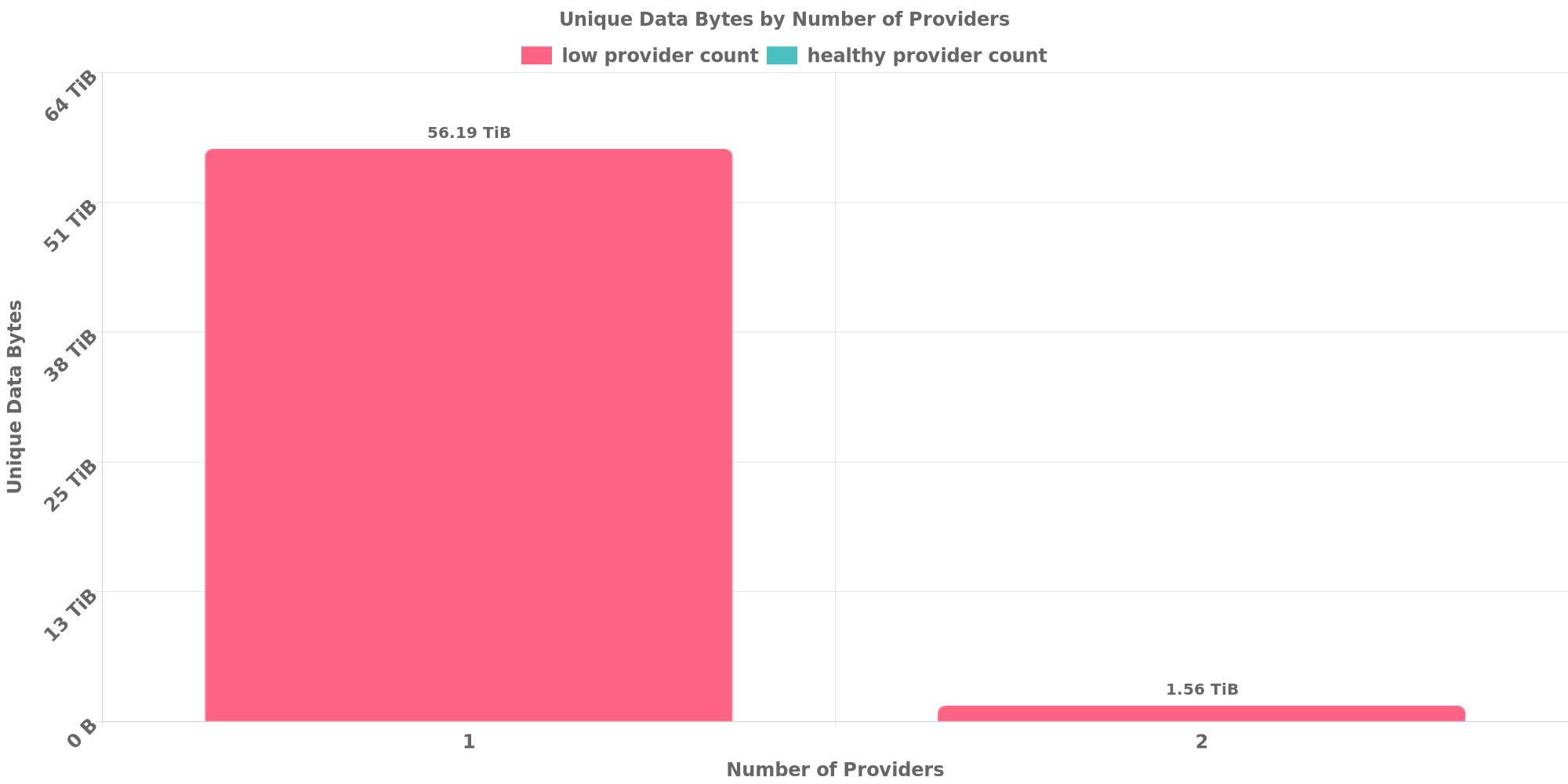

The table below shows how much unique data is replicated across storage providers.

Since this is the 3rd allocation, the following restrictions have been relaxed:

⚠️ 100.00% of deals are for data replicated across less than 3 storage providers.

| Unique Data Size | Total Deals Made | Number of Providers | Deal Percentage |
|---|---|---|---|
| 56.19 TiB | 122.78 TiB | 1 | 96.11% |
| 1.56 TiB | 4.97 TiB | 2 | 3.89% |
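
The replication warning above follows directly from this table. As a rough sketch (the function name is hypothetical, and the threshold of 3 providers is taken from the wording of the warning), the share of deals below the threshold can be computed like this:

```python
# (number of providers holding the data, total deals made in TiB), from the table above
REPLICATION_ROWS = [(1, 122.78), (2, 4.97)]


def share_below_threshold(rows, min_providers=3):
    """Percentage of deal volume whose data sits on fewer than `min_providers` SPs."""
    total = sum(size for _, size in rows)
    below = sum(size for providers, size in rows if providers < min_providers)
    return 100 * below / total


print(f"{share_below_threshold(REPLICATION_ROWS):.2f}% of deals are below 3 providers")
```

Every row in the table has fewer than 3 providers, so the whole deal volume falls below the threshold.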

The table below shows how much unique data is shared with other clients. Usually, different applications own different data, which should not resolve to the same CID.

However, this can happen if all of the clients below use the same software to prepare the exact same dataset, or if they belong to a series of LDN applications for the same dataset.

⚠️ CID sharing has been observed.

| Other Client | Application | Total Deals Affected | Unique CIDs | Approvers |
|---|---|---|---|---|
| f1o3c4uaoioq6xwzqrvdw2juzps3eyujbtys2mvpq | Duo Mu Media (Shenzhen) LTD | 11.63 TiB | 372 | 1 NDLABS-OFFICE, 1 newwebgroup |

[^1]: To manually trigger this report, add a comment with text checker:manualTrigger

@qianmonetwork dear applicant,

Your CID report looks unhealthy: all your storage is in the same region and spread over too few miners. Can you tell me how you are going to solve this for the next batch?

@NDLABS-OFFICE I hope you are also checking this before signing again. FIL+ rules must be met!

Yup, this does not look healthy.

There is also CID sharing taking place. We should investigate what has been put on chain, what belongs to whom, and whether the data is valid.

PS: You are self-dealing here. All your miners are on one IP address and belong to the same organisation. This is against FIL+ rules.

**_We can't continue with this LDN, unfortunately.

I advise all notaries not to sign subsequent requests._**

    root@chain2 ~ # lotus net connect f01870888
    f01870888 -> {12D3KooWGfVu6xihkABCFsdG97GLbwKFLHWSg8YnnRGqKymMPj88: [/ip4/101.206.185.216/tcp/4001]}
    ERROR: failed to parse multiaddr "f01870888": must begin with /

    root@chain2 ~ # lotus net connect f01901351
    f01901351 -> {12D3KooWLtRoMtfUUAhK4Q25GVoqvB9Wb15CCi1eZ5sVeyjDyEca: [/ip4/101.206.185.216/tcp/54001]}
    ERROR: failed to parse multiaddr "f01901351": must begin with /

    root@chain2 ~ # lotus net connect f01955310
    f01955310 -> {12D3KooWAgqQJJkzbE66aZheH3xQCdxDQTKJD7jVHqNDDT1Ns4FP: [/ip4/101.206.185.217/tcp/4001]}
    ERROR: failed to parse multiaddr "f01955310": must begin with /

    root@chain2 ~ # lotus net connect f01954294
    f01954294 -> {12D3KooWCT9VLTy5WeWcCepJ9Xikf4NRYNnUFbtwLj8DB7MisBCC: [/ip4/122.13.138.46/tcp/44001]}
    ERROR: failed to parse multiaddr "f01954294": must begin with /

    root@chain2 ~ #
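
To make the address overlap in this output easier to see, the resolved multiaddrs can be grouped by network range. This is an illustrative sketch, not the reviewer's actual method: the regular expression only covers the `/ip4/.../tcp/...` form shown above, the function name is hypothetical, and grouping by /24 is an assumption chosen for illustration.

```python
import re
from collections import defaultdict
from ipaddress import ip_network

# Resolution lines copied from the terminal output above
LOTUS_OUTPUT = """\
f01870888 -> {12D3KooWGfVu6xihkABCFsdG97GLbwKFLHWSg8YnnRGqKymMPj88: [/ip4/101.206.185.216/tcp/4001]}
f01901351 -> {12D3KooWLtRoMtfUUAhK4Q25GVoqvB9Wb15CCi1eZ5sVeyjDyEca: [/ip4/101.206.185.216/tcp/54001]}
f01955310 -> {12D3KooWAgqQJJkzbE66aZheH3xQCdxDQTKJD7jVHqNDDT1Ns4FP: [/ip4/101.206.185.217/tcp/4001]}
f01954294 -> {12D3KooWCT9VLTy5WeWcCepJ9Xikf4NRYNnUFbtwLj8DB7MisBCC: [/ip4/122.13.138.46/tcp/44001]}
"""

# miner ID -> {peer ID: [/ip4/<address>/tcp/<port>]}
LINE = re.compile(r"^(f0\d+) -> \{\w+: \[/ip4/([\d.]+)/tcp/\d+\]\}$")


def group_miners_by_subnet(text, prefix=24):
    """Map each IPv4 subnet of the given prefix length to the miner IDs resolved in it."""
    groups = defaultdict(list)
    for line in text.splitlines():
        match = LINE.match(line.strip())
        if match is None:
            continue  # skip prompts, errors, and anything in another format
        miner, address = match.groups()
        subnet = ip_network(f"{address}/{prefix}", strict=False)
        groups[str(subnet)].append(miner)
    return dict(groups)


for subnet, miners in group_miners_by_subnet(LOTUS_OUTPUT).items():
    print(subnet, miners)
```

On this input, three of the four miner IDs resolve into the 101.206.185.0/24 range, and f01954294 resolves to a separate range.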

@herrehesse @cryptowhizzard Hello, thank you. The reason for the storage distribution is that we mailed the data separately to each SP we cooperate with, as negotiated with them. Once the CAR files are generated and packaged, the SPs mail them to each other in the subsequent stage, which improves efficiency and avoids generating the CAR files repeatedly. As far as I know, generating CAR files is also resource-intensive.

@cryptowhizzard @herrehesse Regarding the CID sharing, I am not sure of the cause. My guess is that one of the SPs packaged something incorrectly. I will investigate to make sure the rules are met.

@cryptowhizzard @herrehesse Any problem can be solved, but no one should jump to conclusions. Various problems may arise in your LDNs too. When something unexpected happens, what we should do is investigate and correct it, rather than hand down a death penalty. I will proceed to identify the problem, thanks.

checker:manualTrigger

QianMoWang f1nn5ocwfcwotrtr4wjzy7fwfqeid23vl2cshprea

1 NDLABS-OFFICE, 1 YuanHeHK

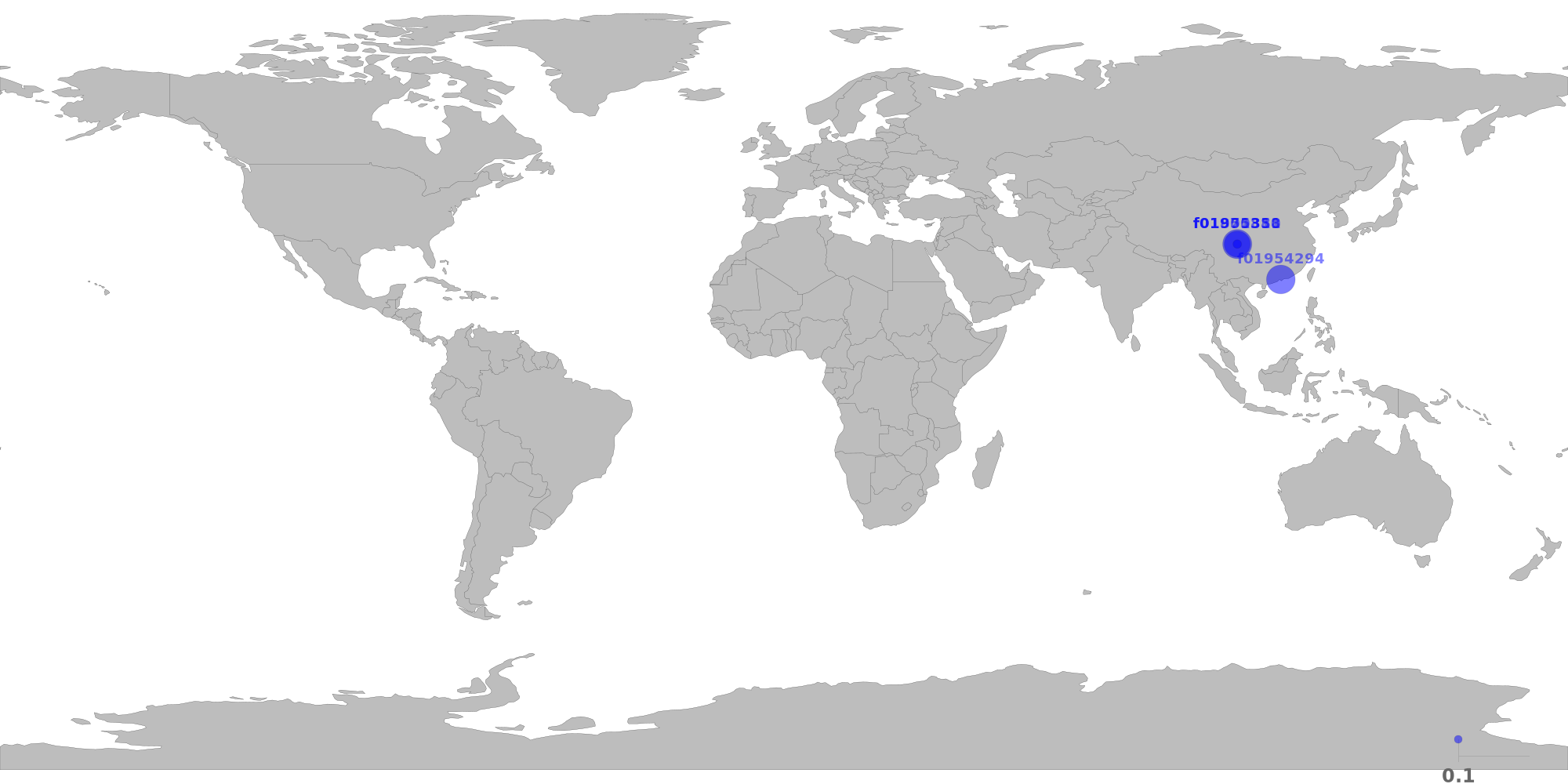

The below table shows the distribution of storage providers that have stored data for this client.

If this is the first time a provider takes verified deal, it will be marked as new.

For most of the datacap application, below restrictions should apply.

Since this is the 3rd allocation, the following restrictions have been relaxed:

⚠️ 60.16% of total deal sealed by f01901351 are duplicate data.

⚠️ 35.85% of total deal sealed by f01870888 are duplicate data.

⚠️ 62.78% of total deal sealed by f01954294 are duplicate data.

| Provider | Location | Total Deals Sealed | Percentage | Unique Data | Duplicate Deals |
|---|---|---|---|---|---|
| f01901351 | Chengdu, Sichuan, CN (CHINA UNICOM China169 Backbone) | 46.13 TiB | 32.83% | 18.38 TiB | 60.16% |
| f01870888 | Chengdu, Sichuan, CN (CHINA UNICOM China169 Backbone) | 35.56 TiB | 25.31% | 22.81 TiB | 35.85% |
| f01955310 (new) | Chengdu, Sichuan, CN (CHINA UNICOM China169 Backbone) | 14.31 TiB | 10.19% | 14.31 TiB | 0.00% |
| f01954294 | Shenzhen, Guangdong, CN (China Unicom Guangdong IP network) | 44.50 TiB | 31.67% | 16.56 TiB | 62.78% |

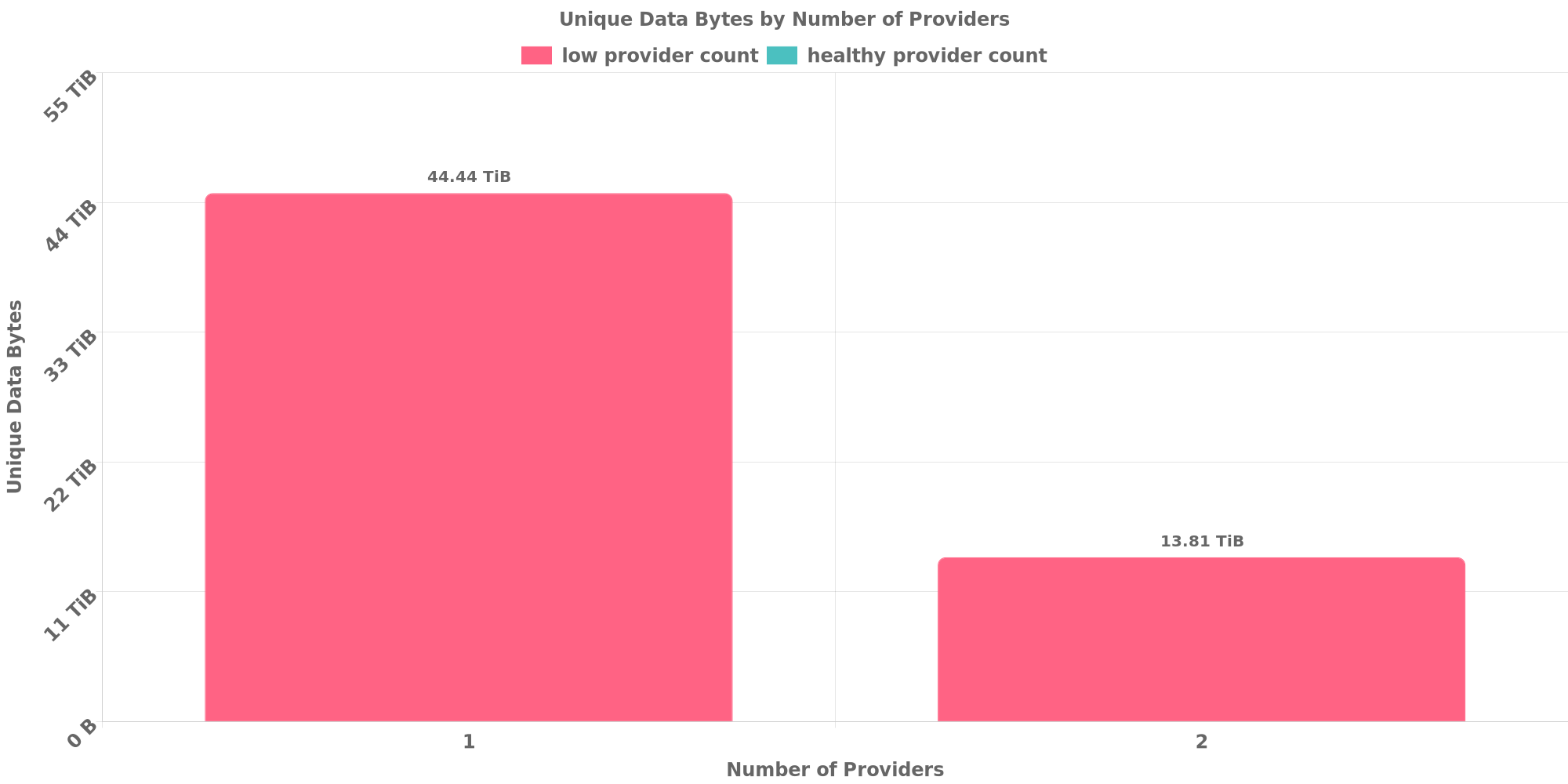

The table below shows how much unique data is replicated across storage providers.

Since this is the 3rd allocation, the following restrictions have been relaxed:

⚠️ 100.00% of deals are for data replicated across less than 3 storage providers.

| Unique Data Size | Total Deals Made | Number of Providers | Deal Percentage |
|---|---|---|---|
| 44.44 TiB | 101.34 TiB | 1 | 72.13% |
| 13.81 TiB | 39.16 TiB | 2 | 27.87% |

The table below shows how much unique data is shared with other clients. Usually, different applications own different data, which should not resolve to the same CID.

However, this can happen if all of the clients below use the same software to prepare the exact same dataset, or if they belong to a series of LDN applications for the same dataset.

⚠️ CID sharing has been observed.

| Other Client | Application | Total Deals Affected | Unique CIDs | Approvers |
|---|---|---|---|---|
| f1o3c4uaoioq6xwzqrvdw2juzps3eyujbtys2mvpq | Duo Mu Media (Shenzhen) LTD | 11.69 TiB | 374 | 1 NDLABS-OFFICE, 1 newwebgroup |

[^1]: To manually trigger this report, add a comment with text checker:manualTrigger

@cryptowhizzard @herrehesse Status report: we urgently contacted the SPs, and our initial finding is that f01901351 also seals for LDN #951. During sealing, a technician made an operational error when using the CAR file data to send deals. I am sorry for the situation. I will continue to investigate and share more specific information. Thank you.

@cryptowhizzard @herrehesse Thank you for your guidance. We will study the FIL+ rules again and negotiate with the SPs. Our application is for storing our own data, and there is only one application. FIL+ can greatly help us reach storage cooperation with SPs.

I am sorry for the problems that have occurred, but whatever the problem, there should be a chance to correct it. Next, we will study the FIL+ rules carefully and continue to investigate the problem.

If the community requests it, we will stop cooperating with the SP that behaved abnormally. However, we believe that both the applicant and the SP should have the opportunity to correct the problem, because correcting it will increase the market share and usage of Filecoin. If every application and SP with a problem is abandoned by the community, that is not good for Filecoin.

We know that Speedium does a good job, but there is no guarantee that Speedium will never make a small mistake.

Just as no one can guarantee that they will never make a mistake, someone who makes a mistake should not be killed off outright.

What should we do? Will we be allowed to continue working with the existing SPs, or will we have to find new SPs? We will strictly follow the FIL+ rules, but can anyone tell us what we should do? Thanks.

How can we continue, please? @cryptowhizzard @herrehesse Can we continue to work with the existing SPs, or do we have to find new SPs?

Hello @qianmonetwork

Given that this FIL+ LDN went wrong, we need some time to do due diligence and get it back on track.

Can you show us some visible proof of the size of your data and the storage systems you have there?

Please fill out this form to provide me with the information needed to make an educated decision on your LDN request. The SP part is very important, since the SPs above went wrong.

This application has not seen any responses in the last 10 days. This issue will be marked with Stale label and will be closed in 4 days. Comment if you want to keep this application open.

This application has not seen any responses in the last 14 days, so for now it is being closed. Please feel free to contact the Fil+ Gov team to re-open the application if it is still being processed. Thank you!

Large Dataset Notary Application

To apply for DataCap to onboard your dataset to Filecoin, please fill out the following.

Core Information

Please respond to the questions below by replacing the text saying "Please answer here". Include as much detail as you can in your answer.

Project details

Share a brief history of your project and organization.

What is the primary source of funding for this project?

What other projects/ecosystem stakeholders is this project associated with?

Use-case details

Describe the data being stored onto Filecoin

Where was the data in this dataset sourced from?

Can you share a sample of the data? A link to a file, an image, a table, etc., are good ways to do this.

Confirm that this is a public dataset that can be retrieved by anyone on the Network (i.e., no specific permissions or access rights are required to view the data).

What is the expected retrieval frequency for this data?

For how long do you plan to keep this dataset stored on Filecoin?

DataCap allocation plan

In which geographies (countries, regions) do you plan on making storage deals?

How will you be distributing your data to storage providers? Is there an offline data transfer process?

How do you plan on choosing the storage providers with whom you will be making deals? This should include a plan to ensure the data is retrievable in the future both by you and others.

How will you be distributing deals across storage providers?

Do you have the resources/funding to start making deals as soon as you receive DataCap? What support from the community would help you onboard onto Filecoin?