This exact same issue is affecting us with our Fluent Bit Kubernetes DaemonSet. We are using IMDSv2 on our EKS nodes, and Fluent Bit is unable to communicate with our Elasticsearch cluster. As a result, we have had to turn off the `AWS_Auth` parameter.
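For context, fetching instance-role credentials under IMDSv2 is a two-step exchange. The client first sends a `PUT` to `/latest/api/token` with an `X-aws-ec2-metadata-token-ttl-seconds` header to get a session token. It then sends `GET` requests under `/latest/meta-data/iam/security-credentials/` with that token in an `X-aws-ec2-metadata-token` header. When an instance requires IMDSv2, an IMDSv1-style `GET` without the token is rejected with HTTP 401. The Python sketch below illustrates that flow only. It is not Fluent Bit's code, and the helper names are hypothetical. `open_fn` is injectable so the flow can be exercised without a real instance.

```python
import json
import urllib.request

# IMDS endpoint and paths as documented by AWS for IMDSv2.
IMDS_BASE = "http://169.254.169.254"
TOKEN_PATH = "/latest/api/token"
CREDS_PATH = "/latest/meta-data/iam/security-credentials/"


def build_token_request(ttl_seconds=21600):
    """Step 1: PUT request asking IMDS for a session token (TTL of at most 21600 s)."""
    return urllib.request.Request(
        IMDS_BASE + TOKEN_PATH,
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": str(ttl_seconds)},
    )


def build_metadata_request(path, token):
    """Step 2: GET request for a metadata path, authenticated with the session token."""
    return urllib.request.Request(
        IMDS_BASE + path,
        method="GET",
        headers={"X-aws-ec2-metadata-token": token},
    )


def fetch_role_credentials(open_fn=urllib.request.urlopen, timeout=2):
    """Hypothetical helper: return the instance role's temporary credentials as a dict."""
    # Obtain the session token.
    with open_fn(build_token_request(), timeout=timeout) as resp:
        token = resp.read().decode()
    # List the role name attached to the instance profile (first line of the listing).
    with open_fn(build_metadata_request(CREDS_PATH, token), timeout=timeout) as resp:
        role = resp.read().decode().splitlines()[0].strip()
    # Fetch the credentials document for that role and parse its JSON body.
    with open_fn(build_metadata_request(CREDS_PATH + role, token), timeout=timeout) as resp:
        return json.loads(resp.read().decode())
```

On an affected instance, `fetch_role_credentials()` should return a dict containing keys such as `AccessKeyId`, `SecretAccessKey` and `Token`. A client that skips step 1 and sends only the `GET` requests would instead receive the 401.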

This should be a high priority, as working around it (e.g. by disabling `AWS_Auth`) is a security risk for many users.

Bug Report

Describe the bug

Credentials are not retrieved from the AWS Instance Metadata Service v2 (IMDSv2) when running on EC2. This causes plugins that require credentials to fail (e.g. `cloudwatch`).

To Reproduce

Steps to reproduce the problem:

Create an EC2 instance with metadata version 2 only selected on the

Advanced Detailssection of theConfigure Instancestep. NB: I have used Amazon Linux 2 AMI (HVM), SSD Volume Type - ami-09f765d333a8ebb4b (64-bit x86) in this exampleAs I will be using the

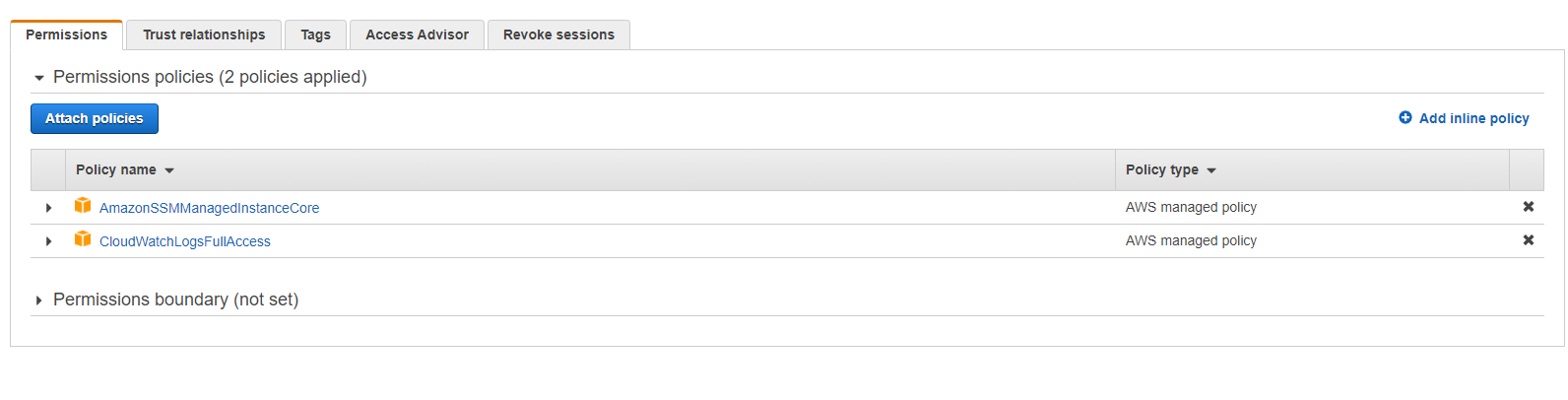

cloudwatchoutput to demonstrate this issue I have assigned a very loose role to the instance:I created and assigned fully open security group to remove that as a potential issue.
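The console step above can also be expressed with boto3's EC2 `run_instances` call, where IMDSv2-only corresponds to `MetadataOptions` with `HttpTokens` set to `"required"`. The sketch below only builds the keyword arguments. The AMI ID and region come from this report, while the instance type and the helper name are hypothetical. Attaching the role and security group is left out.

```python
def imds_v2_only_launch_params(image_id, instance_type, hop_limit=1):
    """Hypothetical helper: kwargs for boto3 EC2 run_instances that require IMDSv2."""
    return {
        "ImageId": image_id,
        "InstanceType": instance_type,
        "MinCount": 1,
        "MaxCount": 1,
        "MetadataOptions": {
            # "required" disables IMDSv1: metadata requests without a token are rejected.
            "HttpTokens": "required",
            "HttpEndpoint": "enabled",
            # AWS documentation recommends 2 when the caller runs in a container (e.g. a pod).
            "HttpPutResponseHopLimit": hop_limit,
        },
    }
```

To launch, assuming boto3 is installed and AWS credentials are configured, pass the result as `boto3.client("ec2", region_name="ap-southeast-2").run_instances(**imds_v2_only_launch_params("ami-09f765d333a8ebb4b", "t3.micro"))`.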

Install Fluent Bit as per https://docs.fluentbit.io/manual/installation/linux/amazon-linux

Apply the following configuration:

```
[INPUT]
    Name              systemd
    Path              /var/log/journal
    Buffer_Chunk_Size 32000
    Buffer_Max_Size   64000

[OUTPUT]
    Name              cloudwatch_logs
    Match             *
    region            ap-southeast-2
    log_group_name    testing
    log_stream_name   bazz
    auto_create_group true
```