Codes for HKU CS Final Year Program 21011: Applying the differentiable physics on deformable objects to enhance the performance of robot learning tasks

The visual logger includes three parts: the Blender side for rendering, the PlasticineLab side for telling the renderer what to visualise, and the protocol between the two sides.

The blender side codes are available here. The protocol is embedded into the blender side codes and the PlasticineLab codes as submodules. The image below gives an overview of the system architecture.

Description

According to the above architecture, the PlasticineLab side codes are designed with the "event-driven" model. The robot controller, the primitive controller, the MPM simulator and the collision manager are registered as the event publisher. Here, the event refers to the updates of the primitive, particle and robot poses and other environment-level events (e.g., collision or visualisation complete). Whenever an event occurs, one message defined in theprotocol will be distributed through a TCP socket. The publisher side is the TCP client, and the TCP server shall be the event subscriber (i.e., the Blender side codes.

How to use

Step One: prepare the callback and publishers

In the class expected to be registered as a publisher, make the class inherit the plb.utils.VisRecordable.

Then, the following three methods are to be provided:

an initialising callback method: the method will be invoked once the global visualisation is turned on.

an event publishing method: the method initialises a message and then calls the message.send() method.

[OPTIONAL] an ending callback method: the method will be invoked once the global visualisation is turned off.

_Please note that the visualisation is not by default turned on. Users must manually call VisRecordable.turn_on() to start the visualization globally. For example, in an RL training scenario, one may turn on the visualisation after a certain number of epochs if he wants to skip the training process but only visualise the final outcomes._

Important Notes:

_The VisRecordable.turn_on() must only be called once._

_The VisRecordable.turn_off() must be called if the visualisation has been turned on._

No more events must be ever published once the visualisation is turned off.

Step Two: register the callbacks

Then, call self.register_scene_init_callback to register the initializing callback. call self.register_scene_end_callback to register the ending callback.

At the places where the poses are updated (such as forward kinematics or collision detection methods), first, check self.is_recording(), and call the event publishing method if self.is_recording() returns True. The self.is_recording() returns True means the visualisation has been turned on already.

Step three: turn on/off the visualization

At the proper time point, call VisRecordable.turn_on() or VisRecordable.turn_off() to start or end the visualization.

An example: plb.engine.primitive.primitive

Step one: prepare the callbacks:

def _init_object_in_visualizer(self):

""" Callback when the visualizer is turned on

Send a message to the visualizer to initialize the primitive

object in the scene.

NOTE: despite rigid-body primitives, the default representation

of their spacial shape is as well the SDF values. Hence, the

initialization borrows the factory method from the

`DeformableMeshesMessage` to transfer SDF to meshes.

"""

sdf_ = np.zeros((256, 256, 256))

self.get_global_sdf_kernel(0, sdf_)

sdf_ = self.plb_sdf_2_z_up_rhs(sdf_)

DeformableMeshesMessage.Factory(

self.unique_name,

0,

sdf = sdf_,

scale = (256, 256, 256)

).message.send()

def _update_pose_in_vis_recorder(self, frame_idx, is_init = False):

state_idx = frame_idx + (0 if is_init else 1)

if is_init or self.is_recording():

UpdateRigidBodyPoseMessage(

self.unique_name,

plb_pose_2_z_up_rhs(self.get_state(state_idx, False)),

frame_idx * self.STEP_INTERVAL

).send()

The forward_kinematics and the apply_robot_forward_kinemtaics are two occasions where the poses of a primitive might be altered. Thus, we check self.is_recording() here and publish the message if it is recording.

def forward_kinematics(self, f):

self.forward_kinematics_kernel(f)

self._update_pose_in_vis_recorder(f)

@ti.complex_kernel

def apply_robot_forward_kinemtaics(self, frame_idx: ti.i32, xyz_quat: torch.Tensor):

""" The robot's forward kinematics computes the target position and

rotation of each primitive geometry for each substep. The method

applies this computation results to the primitive geometries.

Parameters

----------

frame_idx: the time index

xyz_quat: the position and global rotation of the primitive geometry

should be moved to

"""

if xyz_quat.shape[-1] != 7:

raise ValueError(f"XYZ expecting Tensor of shape (..., 7), got {xyz_quat.shape}")

xyz_quat = z_up_rhs_pose_2_plb(

pose = xyz_quat.detach().cpu().numpy(),

is_cylinder = self.cfg.shape == "Cylinder",

cylinder_h = getattr(self, 'h') if hasattr(self, 'h') else 0

)

targetXYZ = xyz_quat[:3]

targetQuat = xyz_quat[3:]

self.position[frame_idx + 1] = np.clip(

targetXYZ,

self.xyz_limit[0].value,

self.xyz_limit[1].value

)

self.rotation[frame_idx + 1] = targetQuat

self._update_pose_in_vis_recorder(frame_idx)

Adds a new debugger config to demo the visualisation

Dockerfile

Fixes the pip install issue

plb/engine/controller/

The

plb/engine/controller_facade.py and plb/engine/primitives_facade.py

Adds documentations

plb/engine/primitive/primitive.py

Makes the Primitive class a publisher.

plb/engine/taichi_env.py

Publishes the particle movements in MPMSimulator.

plb/envs/rope_robot.yml, tests/data/ur5/ur5_primitive_offset.urdf and tests/data/ur5/ur5_primitive.urdf

The rope_robot env now has no offset of the robot (robot initialised at the origin). If wanting to have offset, change the URDF to tests/data/ur5/ur5_primitive_offset.urdf

plb/utils/

Create the VisRecordable class.

plb/urdfpy/

Publishes the URDF robots' poses changes.

Tests

Unit tests

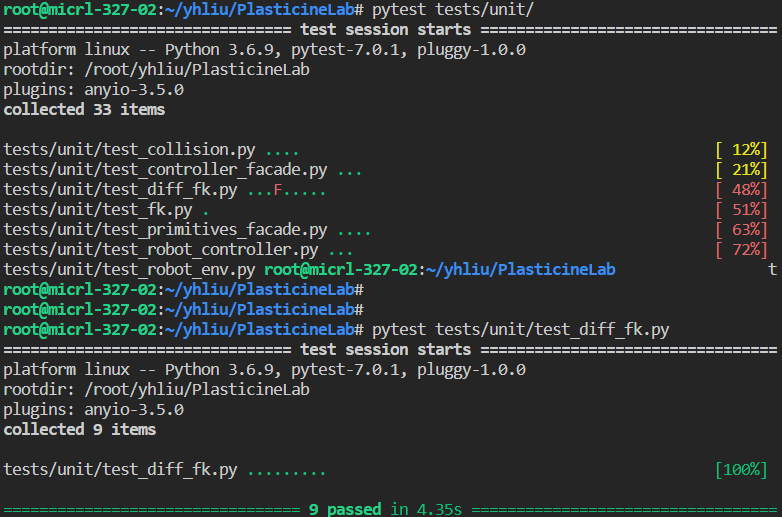

Unit tests are updated according to the changes in source codes. All are passed:

_NOTE: the weird thing is one of the tests in tests/unit/test_diff_fk.py fails if running all UT together, but it is passed if we only run the test_diff_fk.py._

Functional tests

A new functional test is added to demonstrate the visualisation: tests/vis_recording.py. To run the demo,

Start the blender side codes, following the instructions here

Run the test

python -m tests.unit.vis_recording

Or using the VSCode debugger

Logistics

The pull request closes #14 and #15, finally completing #17.

Visual Logger (PlasticineLab side)

The visual logger includes three parts: the Blender side for rendering, the PlasticineLab side for telling the renderer what to visualise, and the protocol between the two sides.

The blender side codes are available here. The protocol is embedded into the blender side codes and the PlasticineLab codes as submodules. The image below gives an overview of the system architecture.

Description

According to the above architecture, the PlasticineLab side codes are designed with the "event-driven" model. The robot controller, the primitive controller, the MPM simulator and the collision manager are registered as the event publisher. Here, the event refers to the updates of the primitive, particle and robot poses and other environment-level events (e.g., collision or visualisation complete). Whenever an event occurs, one message defined in theprotocol will be distributed through a TCP socket. The publisher side is the TCP client, and the TCP server shall be the event subscriber (i.e., the Blender side codes.

How to use

Step One: prepare the callback and publishers

In the class expected to be registered as a publisher, make the class inherit the

plb.utils.VisRecordable.Then, the following three methods are to be provided:

message.send()method._Please note that the visualisation is not by default turned on. Users must manually call

VisRecordable.turn_on()to start the visualization globally. For example, in an RL training scenario, one may turn on the visualisation after a certain number of epochs if he wants to skip the training process but only visualise the final outcomes._Important Notes:

VisRecordable.turn_on()must only be called once._VisRecordable.turn_off()must be called if the visualisation has been turned on._Step Two: register the callbacks

Then, call

self.register_scene_init_callbackto register the initializing callback. callself.register_scene_end_callbackto register the ending callback. At the places where the poses are updated (such as forward kinematics or collision detection methods), first, checkself.is_recording(), and call the event publishing method ifself.is_recording()returnsTrue. Theself.is_recording()returnsTruemeans the visualisation has been turned on already.Step three: turn on/off the visualization

At the proper time point, call

VisRecordable.turn_on()orVisRecordable.turn_off()to start or end the visualization.An example:

plb.engine.primitive.primitiveStep one: prepare the callbacks:

The

forward_kinematicsand theapply_robot_forward_kinemtaicsare two occasions where the poses of a primitive might be altered. Thus, we checkself.is_recording()here and publish the message if it is recording.Patch description

.gitmodulesIncludes the protocol as a submodule.

.vscode/launch.jsonAdds a new debugger config to demo the visualisation

DockerfileFixes the pip install issue

plb/engine/controller/The

plb/engine/controller_facade.pyandplb/engine/primitives_facade.pyAdds documentations

plb/engine/primitive/primitive.pyMakes the

Primitiveclass a publisher.plb/engine/taichi_env.pyPublishes the particle movements in

MPMSimulator.plb/envs/rope_robot.yml,tests/data/ur5/ur5_primitive_offset.urdfandtests/data/ur5/ur5_primitive.urdfThe

rope_robotenv now has no offset of the robot (robot initialised at the origin). If wanting to have offset, change the URDF totests/data/ur5/ur5_primitive_offset.urdfplb/utils/Create the

VisRecordableclass.plb/urdfpy/Publishes the URDF robots' poses changes.

Tests

Unit tests

Unit tests are updated according to the changes in source codes. All are passed:

_NOTE: the weird thing is one of the tests in

tests/unit/test_diff_fk.pyfails if running all UT together, but it is passed if we only run thetest_diff_fk.py._Functional tests

A new functional test is added to demonstrate the visualisation:

tests/vis_recording.py. To run the demo,Or using the VSCode debugger

Logistics

The pull request closes #14 and #15, finally completing #17.

Therefore, the pull request finishes milestone 2.