Hmm that's not a lot of info to go on.. can you post an example repo that creates the error you're seeing?

Closed jp887 closed 4 years ago

Had the same issue. Doing a manual npm install git+https://github.com/lovell/sharp.git to install the latest unreleased Sharp package seems to have fixed it for me. This might not be a good idea, however I'm not sure what else to do until a fixed version of Sharp is released.

I can confirm this bug. In my case it started happening, when I added some large images, 5mb or more.

I had the same problem;

Using older versions of gatsby-transformer-sharp (2.1.1) and gatsby-plugin-sharp (2.0.5) fixed it for me. In case anyone is debugging this, that might be useful additional information.

Note that I also have a lot of images on my build.

@m-allanson I've started working on a repro here: https://github.com/jp887/gatsby-issue6291-repro Basically generate a large number of random images with a script and then query for them in a basic gatsby 2 site.

I'm building the project on CircleCI so build outputs can be inspected without having to run the project locally.

The most recent build reproduced the issue: https://circleci.com/gh/jp887/gatsby-issue6291-repro/3

@jp887 would you mind trying to take a build on your repro with these gatsby-transformer-sharp (2.1.1) gatsby-plugin-sharp (2.0.5) exact versions, on a new branch maybe? I have downgraded to these versions, and I have taken more than 10 successful builds since.

@btk OK done. Builds on the 'downgraded' branch can be found here: https://circleci.com/gh/jp887/workflows/gatsby-issue6291-repro/tree/downgraded

Seems it's still reproducing the issue on the new downgraded branch: https://circleci.com/gh/jp887/gatsby-issue6291-repro/4

I also have the problem in a recent project.

I also get a lot of vips warning: exif: unknown EXIF resolution unit errors.

I'm running into this as well, but with far fewer images.

For me it happens when I'm changing maxWidth and quality on a fluid graphql query

fluid(maxWidth: 400, quality: 100) {

...GatsbyImageSharpFluid_withWebp_tracedSVG

}

@TylerBarnes ohhh the dreaded segmentation fault error, it brings me back to when I was using C as a development tool. It looks like somewhere deep down in the execution of that plugin, something is trying to access memory that it isn't allowed to touch, or memory that was never properly allocated or freed. That is not a Gatsby plugin issue per se; it is more likely the underlying vips/sharp, the part written in C.

Hiya!

This issue has gone quiet. Spooky quiet. 👻

We get a lot of issues, so we currently close issues after 30 days of inactivity. It’s been at least 20 days since the last update here.

If we missed this issue or if you want to keep it open, please reply here. You can also add the label "not stale" to keep this issue open!

Thanks for being a part of the Gatsby community! 💪💜

I am having the same issue at the same build step.

My plugin versions are:

"bourbon-neat": "3.0.1",

"dotenv": "^6.2.0",

"gatsby": "^2.0.112",

"gatsby-image": "^2.0.29",

"gatsby-plugin-manifest": "^2.0.17",

"gatsby-plugin-offline": "^2.0.22",

"gatsby-plugin-react-helmet": "^3.0.6",

"gatsby-plugin-sass": "^2.0.10",

"gatsby-plugin-sharp": "^2.0.20",

"gatsby-source-wordpress": "^3.0.32",

"gatsby-transformer-sharp": "^2.1.13",

"node-sass": "^4.11.0",

"normalize-scss": "^7.0.1",

"react": "^16.7.0",

"react-dom": "^16.7.0",

"react-helmet": "^5.2.0"

I seem to have encountered this issue as well. I'll add more to this thread if I discover any additional clues.

Also... I haven't been able to figure out how to apply the "not stale" label to this issue. I'm guessing that I need to be granted a permission?

In either case: the issue does appear to be somewhat random. I just reran the same build without encountering the problem this time. (In both cases, I cleared all cached files before starting the build.)

@briandamaged added it for you.

@btk : Thx! So far, the issue has not reoccurred.

I still see this when building on Netlify:

10:08:33 AM: success extract queries from components — 0.324 s

10:08:51 AM: (sharp:1324): GLib-GObject-WARNING **: 09:08:51.843: gtype.c:4265: type id '0' is invalid

10:08:51 AM: (sharp:1324): GLib-GObject-WARNING **: 09:08:51.843: can't peek value table for type '<invalid>' which is not currently referenced

10:08:51 AM: (sharp:1324): GLib-GObject-WARNING **: 09:08:51.843: gvalue.c:188: cannot initialize GValue with type '(null)', this type has no GTypeValueTable implementation

10:08:51 AM: (sharp:1324): GLib-GObject-CRITICAL **: 09:08:51.843: g_value_transform: assertion 'G_IS_VALUE (dest_value)' failed

10:08:54 AM: /usr/local/bin/build: line 32: 1324 Segmentation fault (core dumped) gatsby build

10:08:54 AM: Caching artifacts

…

10:08:55 AM: failed during stage 'building site': Build script returned non-zero exit code: 139

It randomly happens using exactly the same git revision:

See http://filez.foxel.org/4dec3e172673 for version Information.

This seems to be unrelated and does not lead to segfaults / failed builds (same repository):

12:04:08 AM: success Building production JavaScript and CSS bundles — 41.675 s

12:04:10 AM: success Building static HTML for pages — 2.570 s — 109/109 87.88 pages/second

12:04:16 AM: info Done building in 661.124 sec

12:04:16 AM: (sharp:1506): GLib-CRITICAL **: 23:04:16.327: g_hash_table_lookup: assertion 'hash_table != NULL' failed

12:04:16 AM: (sharp:1506): GLib-CRITICAL **: 23:04:16.750: g_hash_table_lookup: assertion 'hash_table != NULL' failed

12:04:17 AM: (sharp:1506): GLib-CRITICAL **: 23:04:17.106: g_hash_table_lookup: assertion 'hash_table != NULL' failed

12:04:17 AM: Caching artifacts

12:04:17 AM: Started saving node modules

12:04:17 AM: Finished saving node modules

Regarding the segfaults, I have found this in gatsby-plugin-sharp:

// Try to enable the use of SIMD instructions. Seems to provide a smallish

// speedup on resizing heavy loads (~10%). Sharp disables this feature by

// default as there's been problems with segfaulting in the past but we'll be

// adventurous and see what happens with it on.

sharp.simd(true);

So far locally I haven't seen any segfaults after commenting out sharp.simd(true);.

That would make sense. I've only seen this on sites with a lot of images and then it's still rare on those sites. Maybe once every 20 - 30 builds.

@mdornseif @TylerBarnes Thanks for the discovery! I went ahead and opened a PR, removing the lines: https://github.com/gatsbyjs/gatsby/pull/11925 😌

Thank you so much for the awesome finds @mdornseif & the PR @niklasravnsborg. 😄

I am also running into these gatsby-plugin-sharp-inspired segmentation faults. Unfortunately, deactivating the SIMD functionality did not seem to improve the frequency of these segmentation faults. 😢

Something else we could do to improve stability is to move Sharp to a child process, so that if it crashes we can just restart it.

That would be a cool approach, I like it! 👍

Despite this probably slowing down a build or two, I think that this would definitely satisfy a majority of the folks who run into this issue. 😄
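For anyone who wants to experiment before something like this lands in the plugin, here is a rough sketch of a coarser variant of the same idea: run a whole command (for example the build) in a child process and start it again if it dies. This is not how gatsby-plugin-sharp works, and runWithRetries is a hypothetical helper name, not an existing API. It only uses Node's built-in child_process module.

```javascript
const { spawnSync } = require('node:child_process');

// Hypothetical helper (an assumption for illustration, not part of Gatsby or sharp).
// Runs a command in a child process and retries when it is killed by a signal
// (e.g. SIGSEGV) or exits with a non-zero code.
function runWithRetries(command, args, maxAttempts = 3) {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const result = spawnSync(command, args, { stdio: 'inherit' });

    // The command could not be started at all (e.g. binary not found).
    if (result.error) throw result.error;

    if (result.status === 0) {
      return { ok: true, attempts: attempt };
    }

    // When the child is killed by a signal, status is null and signal is set.
    const reason = result.signal
      ? `signal ${result.signal}`
      : `exit code ${result.status}`;
    console.error(`attempt ${attempt}/${maxAttempts} failed (${reason})`);
  }
  return { ok: false, attempts: maxAttempts };
}

// Possible usage (assumes gatsby is installed in the project):
//   const outcome = runWithRetries('npx', ['gatsby', 'build'], 5);
//   process.exit(outcome.ok ? 0 : 1);
```

Since people in this thread report that each local retry gets further because already processed images stay in the cache, a retry loop like this may be enough for unattended builds, as long as the cache directory survives between attempts.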

Disabling the use of SIMD instructions solved it for me, but it means modifying node_modules/gatsby-plugin-sharp/process-file.js:30, which isn't a portable solution for cloud building.

Could we make that a configurable option?

I have a Gatsby site with 900+ images (all < 30kb). For the last few weeks my build has consistently failed with the same segmentation fault errors from sharp as described here.

E.g.

(sharp:1194): GLib-GObject-WARNING **: 22:25:05.263: gtype.c:4265: type id '0' is invalid

(sharp:11672): GLib-CRITICAL **: g_hash_table_lookup: assertion 'hash_table != NULL' failed

Segmentation fault: 11

After retrying the build up to 5-6 times locally, it would eventually succeed (each time the number of images sharp processed would drop, as images from the last failed build would be in the cache).

I just tried updating the following:

.nvmrc:

node 9.3.1 --> node 10.2.1

package.json:

"gatsby-plugin-sharp": "^2.0.30" --> "gatsby-plugin-sharp": "^2.0.35"

"gatsby-transformer-sharp": "^2.1.17" --> "gatsby-transformer-sharp": "^2.1.18"

I deleted node_modules, .cache and public folders and ran npm i, gatsby build and for the first time, I got a successful build with all 900 images, on the first try!

Would be interested to see if this works for others?

Edit: But.. sadly still only failures with Netlify build, getting the same Segmentation fault as before :(

@adam-foster if deleting the cache solved it for you locally, you can clear your cache in netlify.

Go to the deploys list page /sites/[site-name]/deploys?filter=master, click the "Trigger deploy" dropdown in the top right and click to clear cache and redeploy.

Thanks @TylerBarnes not sure how I missed that. It worked 🎉 - I still get the occasional build failing locally but this is the first successful build I have had with Netlify for this number of images.

I have seen a few suggestions of having an automatic retry in gatsby-plugin-sharp on sharp error. I'd hope that would resolve any remaining issues of builds failing in this case

Just curious, how should I go about deploying a site with an insane number of images?

@ancashoria you'll have better luck if the images are stored locally in your gatsby repo instead of remote images. Which source plugin are you using?

@TylerBarnes I'm using createRemoteFileNode from gatsby-source-filesystem because I need to fetch the images from a remote location. Then I also try to transform them using childImageSharp which also takes a lot of time.

I was optimistic about deploying to Netlify, but I hear it has a build runtime limit of 15 minutes and by my calculations, I need a few hours for the initial build. Do you have any tips on how to approach this monster build? :)

Also, can I count on gatsby to cache the processed images from the previous build?

@ancashoria if you haven't already you should install the netlify cache plugin (while you're at it the netlify plugin is great for some easy performance gains). As long as you're caching your remote file downloads in your code I believe the netlify cache plugin will cache the image downloads, it definitely caches the image sharp transforms.

FYI the error in the OP still occurs randomly with a high number of local high-res images. Building from scratch for me involves generating around 200,000 thumbnails and it seems to randomly produce this error and fail. Retrying each time works, but it's inconvenient because it means I can't just walk away and leave it to build for a few hours.

https://github.com/gatsbyjs/gatsby/issues/6291#issuecomment-466202250 sounds like a nice solution for this and other Sharp related issues.

Still happens to me randomly (both locally and on Netlify, about 20-30% of the time). I have about 5,000 not that big images (mostly 500x500 pixels).

4:25:52 PM: (sharp:1393): GLib-GObject-WARNING **: 16:25:52.645: gtype.c:4265: type id '0' is invalid

4:25:52 PM: (sharp:1393): GLib-GObject-WARNING **: 16:25:52.645: can't peek value table for type '<invalid>' which is not currently referenced

4:25:52 PM: (sharp:1393): GLib-GObject-WARNING **: 16:25:52.645: gvalue.c:188: cannot initialize GValue with type '(null)', this type has no GTypeValueTable implementation

4:25:57 PM: /usr/local/bin/build: line 34: 1393 Segmentation fault (core dumped) gatsby build

I ran into the same error. I'm running Node v10 on Netlify, with all packages on the latest version. I hit the issue with ~1.5GB of images to resize. Deleting .cache, public and node_modules didn't help, neither on Netlify nor locally.

If you can share links to code where we can reproduce this, that would be very helpful.

I ran into this issue running the latest versions of gatsby-plugin-sharp and gatsby-transformer-sharp. Could not fix it at all until i downgraded to gatsby-transformer-sharp 2.1.1 and gatsby-plugin-sharp 2.0.5 as @btk suggested. Built locally first try after that.

@KyleAMathews I've updated the dependencies in the original repro and I'm still seeing the same error. After upgrading the first build succeeded but the next one reproduced the error.

For whatever reason, my experience when building locally is that each time I run the build it gets a bit further before failing. I don't see this pattern when building in CircleCI (which spins up a new container each time), so I assume some kind of caching is causing this behaviour locally.

In case it helps, here is a pull request on a public repo with the issue: https://github.com/browniebroke/chez-nicole-web/pull/351

The Netlify logs: https://app.netlify.com/sites/chez-nicole/deploys/5cff6ca75c28e2000917dfc4

Update: the above pull request was later retried by Renovate and ended up working fine. The issue still appears intermittently.

For me, my local build is working and my Netlify build has the same 1393 Segmentation fault (core dumped) gatsby build issue.

It's a hard nut to crack... You could try setting the env variable GATSBY_CPU_COUNT=logical_cores. I'm unsure if this even helps but might be worth the try
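To make it clearer what a setting like this is meant to control, here is a small sketch of how a worker count could be derived from an environment variable. It is an illustration only and not Gatsby's actual implementation: resolveCpuCount is a hypothetical helper, and falling back to the logical core count when the variable is unset is an assumption made for this example.

```javascript
const os = require('node:os');

// Hypothetical helper, NOT Gatsby's real code: turns a GATSBY_CPU_COUNT-style
// value into a number of workers.
// Assumption: unset/empty falls back to the logical core count.
function resolveCpuCount(envValue, logicalCores = os.cpus().length) {
  if (envValue === undefined || envValue === '' || envValue === 'logical_cores') {
    return logicalCores;
  }
  const parsed = Number.parseInt(envValue, 10);
  if (Number.isNaN(parsed) || parsed < 1) {
    throw new Error(`Unsupported CPU count value: ${envValue}`);
  }
  return parsed;
}

// Possible usage:
//   const workers = resolveCpuCount(process.env.GATSBY_CPU_COUNT);
```

The point of an explicit number is that containers on CI services can report more cores than the build is actually allowed to use, so a fixed low value sidesteps whatever the environment reports.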

I have the same issue. Running good on my local machine but getting the 1393 Segmentation fault (core dumped) gatsby build message on Netlify.

Any update on this?

This might be helpful...

The segfault happens to me when running the gatsby build in an alpine based container, but not in a debian based container.

To reproduce, try using this Dockerfile based on Alpine, and then this other Dockerfile using Debian. The error never happens on the Debian-based one. Notice that both are based on official Node images.

This probably means Netlify is using an Alpine version, or another container with a similar problem.
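Rather than guessing which base image a build service uses, it can be checked from inside the build. A minimal sketch, assuming the container ships the standard /etc/os-release file (Alpine and Debian both do); parseOsReleaseId and detectDistro are hypothetical helper names:

```javascript
const fs = require('node:fs');

// Extract the ID field (e.g. "alpine", "debian") from os-release style text.
function parseOsReleaseId(text) {
  for (const line of text.split('\n')) {
    const match = /^ID=(.*)$/.exec(line.trim());
    if (match) {
      // Values may be wrapped in single or double quotes; strip them.
      return match[1].replace(/^["']|["']$/g, '');
    }
  }
  return null;
}

// Read the distro ID of the current machine, or null when the file is
// missing (for example on macOS or Windows).
function detectDistro(path = '/etc/os-release') {
  try {
    return parseOsReleaseId(fs.readFileSync(path, 'utf8'));
  } catch {
    return null;
  }
}

// Possible usage, e.g. at the top of gatsby-node.js:
//   console.log('Building on distro:', detectDistro());
```

Logging this at the start of a build would show in the deploy log whether the failing environment is Alpine-based or not.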

Let me see if I can get this reproduced on alpine docker container.

FWIW we worked with @KyleAMathews & the author of sharp to debug this on our internal ~large/~5,000 image gatsby build and the fixes were:

Setting GATSBY_CPU_COUNT=1 in our build environment to ignore the bad CPU count, plus a fix to gatsby-plugin-sharp so that it respects GATSBY_CPU_COUNT instead of ignoring it (https://github.com/gatsbyjs/gatsby/pull/14624).

Adding sharp.cache(false) and sharp.simd(false) to our project's ./gatsby-node.js to further turn off other "too much concurrency" behavior in sharp's implementation, again due to the misreported cpu/etc. stats from the CircleCI environment.

With these changes, our build went from failing ~80% of the time to stable for a ~week. Props to @lovell for all of this work, I'm just the messenger. Also defer to @KyleAMathews and the other Gatsby maintainers for how these lessons learned/etc. can get worked into the main Gatsby code.

Hi @stephenh,

How did you add sharp.simd(false) and sharp.cache(false) to gatsby-node.js?

We have a website with 9K images. For one month we cannot get the build to work, it always fails with segmentation fault!

@MWalid I assume that a file named gatsby-node.js in the root of your project is just auto-included by gatsby, i.e. see https://www.gatsbyjs.org/tutorial/part-seven/ and search for gatsby-node.js.

Thank you so much @stephenh.

Actually I figured it out the same day I asked :)

Your comment resolved the issue for me, builds now take 4.5 hours to complete, but that's totally fine with a daily build.

We ran into a similar issue: building on Netlify started to fail consistently after updating something related to gatsby-image. Our errors look like:

11:15:13 AM: (sharp:1397): GLib-CRITICAL **: 18:15:13.593: g_hash_table_lookup: assertion 'hash_table != NULL' failed

11:15:13 AM: (sharp:1397): GLib-CRITICAL **: 18:15:13.593: g_hash_table_insert_internal: assertion 'hash_table != NULL' failed

11:15:13 AM: (sharp:1397): GLib-CRITICAL **: 18:15:13.593: g_hash_table_lookup: assertion 'hash_table != NULL' failed

11:15:21 AM: /usr/local/bin/build: line 34: 1397 Segmentation fault (core dumped) gatsby build

We implemented the approaches above, adding

const sharp = require('sharp')

sharp.simd(false)

sharp.cache(false)

to our gatsby-node file. We also added a NODE_VERSION environment variable set to 12 in Netlify. Node 12 has an inherently higher memory cap. We also added NODE_ENV as production.

Now in Netlify our builds don't immediately succeed, we have to clear the cache and rebuild

Description

I'm processing around 1500 images with gatsby-plugin-sharp/gatsby-transformer-sharp and GraphQL, to display with gatsby-image as per this page in the docs.

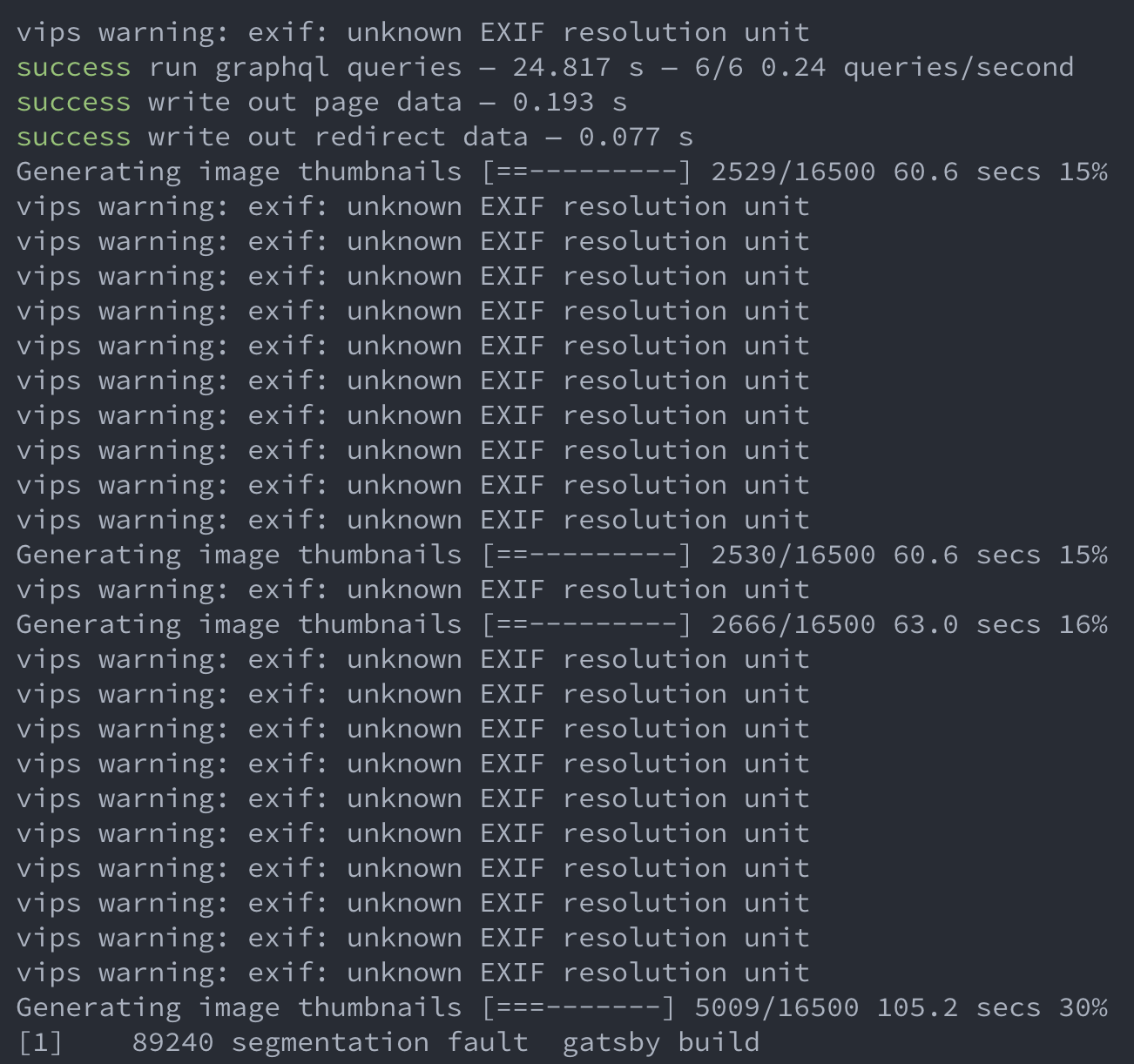

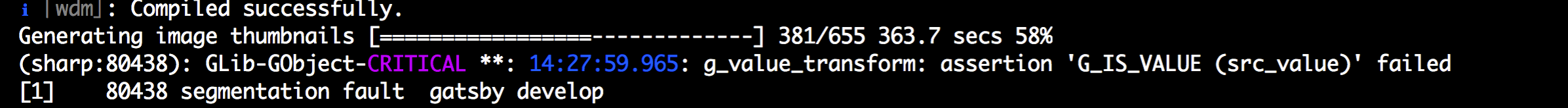

For smaller numbers of images I have had no issues, but now that I'm processing 1500 images the build randomly fails with the following error:

This happens during the "Generating image thumbnails" step in the build process and it occurs apparently randomly (sometimes 10% of the way through the images, sometimes at 80%, sometimes not at all). Therefore I do not believe it is caused by a "faulty image".