Feedback regarding image size (uncompressed): glcr.b-data.ch/jupyterlab/r/tidyverse (3.18 GB) vs. rocker/tidyverse (2.38 GB):

glcr.b-data.ch/jupyterlab/r/tidyverse is about 800 MB bigger because:

- code-server is bigger than RStudio Server OSE[^1]

- JupyterLab (incl. dependencies) is installed

- some R packages have been moved up the build chain

[^1]: There are many Code extensions pre-installed.
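
For anyone who wants to reproduce the comparison locally, here is a minimal sketch. It assumes Docker is installed, that both images have already been pulled under their default tag, and that `docker image inspect` reports `Size` in bytes. The helper names `image_size_bytes` and `size_gap_mb` are made up for illustration, and the numbers on your machine may differ from the ones quoted above depending on tag and platform.

```python
import subprocess


def image_size_bytes(image: str) -> int:
    """Return the uncompressed size in bytes of a locally available image.

    Assumes the Docker CLI is on PATH and the image has been pulled.
    """
    result = subprocess.run(
        ["docker", "image", "inspect", "--format", "{{.Size}}", image],
        check=True,
        capture_output=True,
        text=True,
    )
    return int(result.stdout.strip())


def size_gap_mb(larger_bytes: int, smaller_bytes: int) -> float:
    """Difference between two sizes in decimal megabytes (1 MB = 10**6 bytes)."""
    return (larger_bytes - smaller_bytes) / 1e6


if __name__ == "__main__":
    # Image names as quoted in the comment above; tags are left at the default.
    bdata = image_size_bytes("glcr.b-data.ch/jupyterlab/r/tidyverse")
    rocker = image_size_bytes("rocker/tidyverse")
    print(f"Difference: {size_gap_mb(bdata, rocker):.0f} MB")
```

With the sizes quoted above, `size_gap_mb(3_180_000_000, 2_380_000_000)` gives the 800 MB difference.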

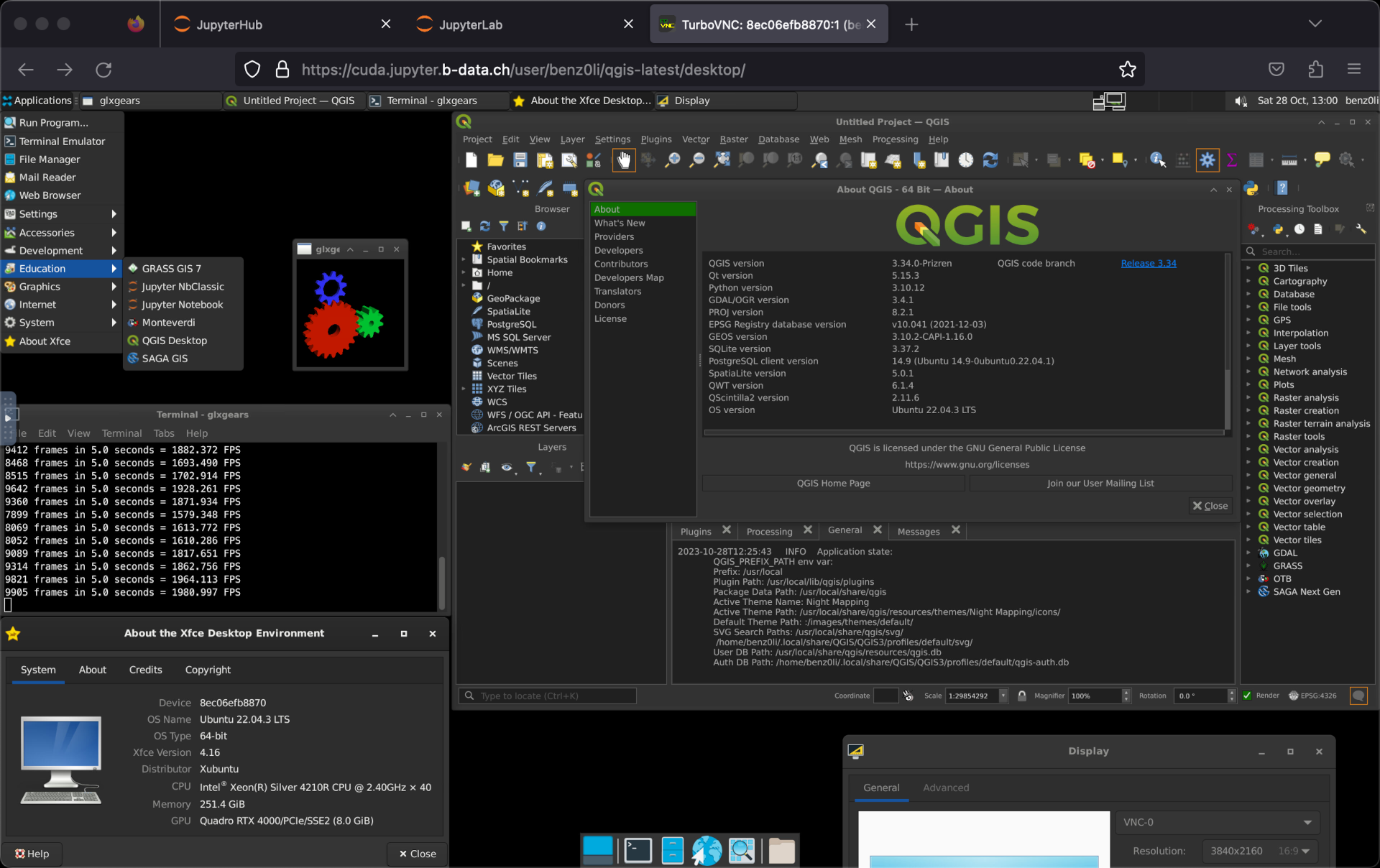

As discussed with @benz0li, we could use the b-datastack throughout, for more consistency and good R/Python support. First we would need to think about similarities/differences and pros/cons, so I'm leaving this as an open question. I'm a bit out of my depth, so any advice / PRs welcome : )