@geoffwoollard

What do you think about this image?

geoffwoollard opened this issue 5 years ago


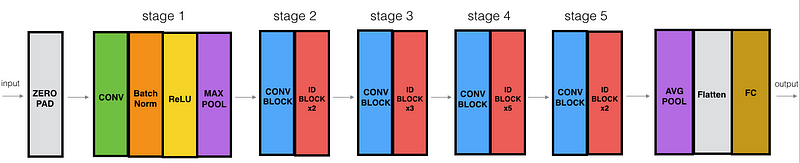

@Davjes15 I was thinking something more like Fig 2a in https://arxiv.org/pdf/1803.08207.pdf

@geoffwoollard OK, I am making a figure like 2a. The image I sent you was made with Keras, and I am not sure whether I can produce an image like 2a with Keras, but I am looking for a solution. If I cannot do it in Keras, I will do it by hand.

@geoffwoollard

I plotted the ROC curve for a model with 100% categorical accuracy, using the original Deep Consensus network and the synthetic images (1000 images, 380x380).

As expected, it looks perfect, since the accuracy is 100%.

@geoffwoollard For the same model, the precision-recall curve is:
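For reference, here is a minimal, dependency-free sketch of how ROC and precision-recall points can be computed from a classifier's scores. For a multi-class softmax model like this one, the usual approach is one-vs-rest: mark one class as positive (`y_true = label == c`) and use that class's softmax column as the score. In practice `sklearn.metrics.roc_curve`, `precision_recall_curve` and `auc` would normally be used; the helper names below (`roc_points`, `pr_points`, `trapezoid_auc`) are hypothetical, and grouping tied scores together is my own choice.

```python
def _cumulative_counts(y_true, scores):
    """Sweep the decision threshold from the highest score down and yield the
    running (true positives, false positives) after each distinct score."""
    pairs = sorted(zip(scores, y_true), key=lambda pair: pair[0], reverse=True)
    tp = fp = 0
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Samples with tied scores cross the threshold together.
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        yield tp, fp


def roc_points(y_true, scores):
    """False-positive rate and true-positive rate at every threshold, starting at (0, 0)."""
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    fpr, tpr = [0.0], [0.0]
    for tp, fp in _cumulative_counts(y_true, scores):
        fpr.append(fp / n_neg)
        tpr.append(tp / n_pos)
    return fpr, tpr


def pr_points(y_true, scores):
    """Precision and recall at every threshold."""
    n_pos = sum(y_true)
    precision, recall = [], []
    for tp, fp in _cumulative_counts(y_true, scores):
        precision.append(tp / (tp + fp))
        recall.append(tp / n_pos)
    return precision, recall


def trapezoid_auc(xs, ys):
    """Area under a piecewise-linear curve whose points are sorted by x."""
    return sum((xs[k] - xs[k - 1]) * (ys[k] + ys[k - 1]) / 2 for k in range(1, len(xs)))


# One-vs-rest for one class: y_true marks that class, scores are its softmax outputs.
y_true = [1, 1, 0, 0]
scores = [0.9, 0.8, 0.3, 0.1]
fpr, tpr = roc_points(y_true, scores)
print(trapezoid_auc(fpr, tpr))  # 1.0 for a perfectly separated set
```

A perfectly separated set gives an area of 1.0, which is why a 100%-accuracy model produces a "perfect" ROC curve.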

@geoffwoollard I asked my professor how to draw the neural network architecture.

He recommended using alexlenail's NN-SVG tool: http://alexlenail.me/NN-SVG/LeNet.html

This is the final result

Please let me know if I need to change something


Fantastic. I was going to recommend something like this, but I like what you came up with better.


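Also see the following, at the end of this notebook:

```python
from keras.utils.vis_utils import plot_model, model_to_dot  # both require pydot and graphviz
from IPython.display import SVG

plot_model(model, to_file='model.png')  # write the architecture diagram to a PNG file
SVG(model_to_dot(model).create(prog='dot', format='svg'))  # render the same diagram inline
```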

@geoffwoollard Based on your comments about the dense layer and the number of epochs needed to reach 100% accuracy, I trained a model with a 1024-unit dense layer for 13 epochs on the noisy images you used in the refactor notebook. However, I cannot get an accuracy higher than 48%.

@geoffwoollard I changed crop_n to 128x128, kept the 1024-unit dense layer, and trained for 39 epochs (three consecutive training runs of 13 epochs each), and I got better performance. The performance above is for 380x380 images, a 1024-unit dense layer, and 13 epochs.
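Going from 380x380 to 128x128 reduces the number of pixels per image from 144,400 to 16,384, roughly 8.8 times fewer. As an illustration only, assuming crop_n takes a centered window (I have not checked the notebook; `center_crop` below is a hypothetical helper, not the notebook's function), the crop starts at offset (380 - 128) // 2 = 126 on each axis:

```python
def center_crop(image, n):
    """Return the central n x n window of a 2-D image stored as a list of rows.

    Hypothetical helper for illustration; the notebook's crop_n may pick a
    different (e.g. random) offset.
    """
    height, width = len(image), len(image[0])
    if n > height or n > width:
        raise ValueError(f"crop size {n} exceeds image size {height}x{width}")
    top = (height - n) // 2
    left = (width - n) // 2
    return [row[left:left + n] for row in image[top:top + n]]


# A 380 x 380 test image whose pixel value encodes its (row, column) position.
image = [[r * 1000 + c for c in range(380)] for r in range(380)]
crop = center_crop(image, 128)
print(len(crop), len(crop[0]))  # 128 128
print(crop[0][0])               # 126126, i.e. row 126, column 126
```

With a NumPy array the same crop is simply `image[top:top + n, left:left + n]`.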

```text
Epoch 1/13
72/72 [==============================] - 7s 95ms/step - loss: 0.2736 - categorical_accuracy: 0.8956
Epoch 2/13
72/72 [==============================] - 7s 97ms/step - loss: 0.2558 - categorical_accuracy: 0.8978
Epoch 3/13
72/72 [==============================] - 7s 99ms/step - loss: 0.2540 - categorical_accuracy: 0.8867
Epoch 4/13
72/72 [==============================] - 7s 98ms/step - loss: 0.2515 - categorical_accuracy: 0.9011
Epoch 5/13
72/72 [==============================] - 7s 97ms/step - loss: 0.2380 - categorical_accuracy: 0.8978
Epoch 6/13
72/72 [==============================] - 7s 98ms/step - loss: 0.2297 - categorical_accuracy: 0.9100
Epoch 7/13
72/72 [==============================] - 7s 97ms/step - loss: 0.1887 - categorical_accuracy: 0.9311
Epoch 8/13
72/72 [==============================] - 7s 97ms/step - loss: 0.2308 - categorical_accuracy: 0.9078
Epoch 9/13
72/72 [==============================] - 7s 97ms/step - loss: 0.1872 - categorical_accuracy: 0.9317
Epoch 10/13
72/72 [==============================] - 7s 97ms/step - loss: 0.1667 - categorical_accuracy: 0.9433
Epoch 11/13
72/72 [==============================] - 7s 97ms/step - loss: 0.1863 - categorical_accuracy: 0.9267
Epoch 12/13
72/72 [==============================] - 7s 98ms/step - loss: 0.1728 - categorical_accuracy: 0.9361
Epoch 13/13
72/72 [==============================] - 7s 97ms/step - loss: 0.1975 - categorical_accuracy: 0.9217
200/200 [==============================] - 0s 984us/step
categorical_accuracy: 81.00%
```

These are the ROC and precision-recall graphs:

@geoffwoollard These are the accuracy-vs-epoch and loss-vs-epoch graphs for the model above:
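If the History object from training is no longer available, the per-epoch loss and accuracy can be recovered from a printed Keras log like the ones in this thread. A minimal sketch, assuming each training line contains `loss: <value> - categorical_accuracy: <value>` exactly as printed here; `parse_keras_log` is a hypothetical helper:

```python
import re

# Matches the training summary Keras prints for each epoch, e.g.
# "72/72 [=====] - 7s 95ms/step - loss: 0.2736 - categorical_accuracy: 0.8956".
# In these logs the evaluation lines ("categorical_accuracy: 81.00%") carry no loss,
# so they are skipped.
EPOCH_PATTERN = re.compile(
    r"loss: (?P<loss>\d+(?:\.\d+)?) - categorical_accuracy: (?P<acc>\d+(?:\.\d+)?)"
)


def parse_keras_log(text):
    """Return (losses, accuracies) with one entry per training epoch, in order.

    Works on both line-by-line logs and logs flattened into a single line.
    """
    losses, accuracies = [], []
    for match in EPOCH_PATTERN.finditer(text):
        losses.append(float(match.group("loss")))
        accuracies.append(float(match.group("acc")))
    return losses, accuracies


log = (
    "Epoch 1/13 72/72 [==============================] - 7s 95ms/step - loss: 0.2736 - categorical_accuracy: 0.8956 "
    "Epoch 2/13 72/72 [==============================] - 7s 97ms/step - loss: 0.2558 - categorical_accuracy: 0.8978 "
    "200/200 [==============================] - 0s 984us/step categorical_accuracy: 81.00%"
)
losses, accs = parse_keras_log(log)
print(losses)  # [0.2736, 0.2558]
print(accs)    # [0.8956, 0.8978]
```

When the model is still in memory, `model.fit` returns a History object whose `history['loss']` and `history['categorical_accuracy']` lists hold the same numbers; with three separate 13-epoch runs, the three lists have to be concatenated to cover all 39 epochs. Either way, the lists can be plotted with matplotlib, e.g. `plt.plot(range(1, len(accs) + 1), accs)`.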

@geoffwoollard In conclusion, I would say that the input image size matters a lot when the images are noisy. The model did not reach a high accuracy with 380x380 images. However, with the same network and a 128x128 input, I got 90% accuracy after 39 epochs.

Let's make a figure like this (with the noisy data) to see how much the dataset size matters.

@geoffwoollard The accuracy-vs-epoch graph shows the same thing as the image you sent me.

By using your fit_generator function, the model gets more data in every epoch, so the graph is similar to accuracy vs training set size.

For instance, the batch size in this graph is 100, so the 1st epoch takes 100 images, the 2nd 200 images, and so on.

I am not sure if that is what fit_generator does.
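As I understand Keras's `fit_generator`, each epoch pulls exactly `steps_per_epoch` batches from the generator, so every epoch sees the same number of images; what grows (100, 200, 300, ...) is the cumulative number of images seen. If the generator synthesizes new images on every call, each of those is a fresh image, which would explain why accuracy vs epoch looks like accuracy vs training set size. The sketch below simulates only that bookkeeping in plain Python; `batch_generator` and `simulate_epochs` are hypothetical, and `steps_per_epoch=1` with a batch size of 100 is an assumption chosen to match the numbers above.

```python
import itertools


def batch_generator(batch_size):
    """Hypothetical stand-in for the notebook's generator: an endless stream of
    batches, each holding batch_size newly numbered "images"."""
    image_ids = itertools.count()
    while True:
        yield [next(image_ids) for _ in range(batch_size)]


def simulate_epochs(generator, steps_per_epoch, epochs):
    """Mimic fit_generator's bookkeeping: every epoch pulls exactly
    steps_per_epoch batches. Returns (images per epoch, cumulative images, all ids)."""
    per_epoch, cumulative, seen_ids = [], [], []
    for _ in range(epochs):
        batches = [next(generator) for _ in range(steps_per_epoch)]
        per_epoch.append(sum(len(batch) for batch in batches))
        for batch in batches:
            seen_ids.extend(batch)
        cumulative.append(len(seen_ids))
    return per_epoch, cumulative, seen_ids


per_epoch, cumulative, seen_ids = simulate_epochs(batch_generator(100), steps_per_epoch=1, epochs=3)
print(per_epoch)   # [100, 100, 100]
print(cumulative)  # [100, 200, 300]
```

If the real generator instead cycles over a fixed set of images, the cumulative count still grows, but the number of distinct images does not, and the analogy with training set size breaks down.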


@Davjes15 Please make ROC and PR figures for the data in J145 and J151. See the `more_layers.ipynb` I committed and the model I shared, `model-missing-2cytc-J145-J151-256dense-5epochs-20190328-2123`.

@geoffwoollard I just produced the new graphs for J145 and J151. I did not get 100% accuracy, even though I am using the same more_layers model. I got a categorical accuracy of 47% on the validation set the first time and 57% the second time.

```text
epoch 0
WARNING:tensorflow:From /usr/local/lib/python3.6/dist-packages/tensorflow/python/ops/math_ops.py:3066: to_int32 (from tensorflow.python.ops.math_ops) is deprecated and will be removed in a future version.
Instructions for updating:
Use tf.cast instead.
Epoch 1/1
18/18 [==============================] - 450s 25s/step - loss: 0.9439 - categorical_accuracy: 0.5511
200/200 [==============================] - 31s 155ms/step
categorical_accuracy: 57.50%
epoch 1
Epoch 1/1
18/18 [==============================] - 361s 20s/step - loss: 0.6431 - categorical_accuracy: 0.6344
200/200 [==============================] - 13s 66ms/step
categorical_accuracy: 57.50%
epoch 2
Epoch 1/1
18/18 [==============================] - 362s 20s/step - loss: 0.2320 - categorical_accuracy: 0.9061
200/200 [==============================] - 13s 66ms/step
categorical_accuracy: 57.50%
epoch 3
Epoch 1/1
18/18 [==============================] - 362s 20s/step - loss: 0.0091 - categorical_accuracy: 1.0000
200/200 [==============================] - 13s 66ms/step
categorical_accuracy: 57.50%
epoch 4
Epoch 1/1
18/18 [==============================] - 362s 20s/step - loss: 0.0046 - categorical_accuracy: 1.0000
200/200 [==============================] - 13s 66ms/step
categorical_accuracy: 57.50%
```

I have the notebook on my Google Drive and I am going to run it once again.

@geoffwoollard I do not have access to the model files on your Google Drive. Could you please commit them to git so I can download and use them?

- @Davjes15: figure for the architecture
- @geoffwoollard: figures for the intro
- @geoffwoollard: figures for pixel dropout
- @Davjes15: train/test vs epoch
- @Davjes15: ROC
- @Davjes15: precision-recall curve