Thanks for your review. You did nothing wrong. I am still looking for the cause of this behaviour. I have updated the code to support 5-shot learning with miniImagenet, but I still get low accuracy for both 1-shot and 5-shot on miniImagenet, while the Omniglot dataset works fine. I will look into it as soon as possible. If you find any possible fix for the code, just let me know.

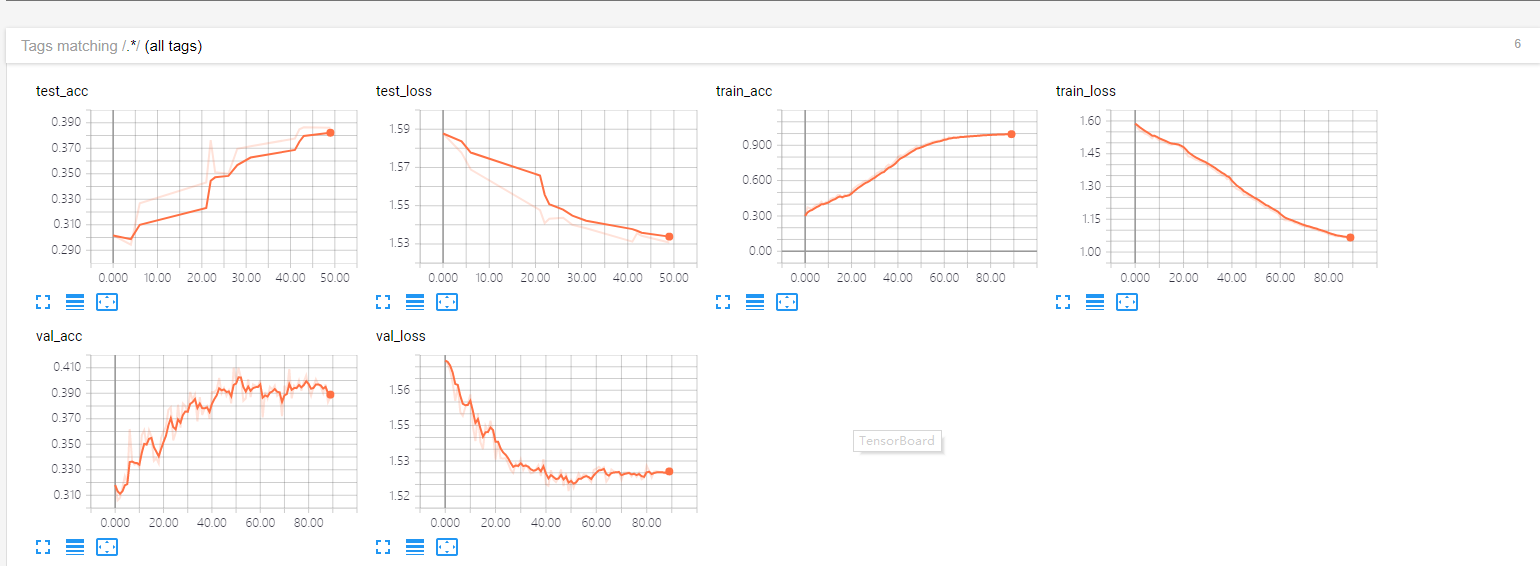

First, thank you very much for your implementation of Matching-Networks in PyTorch. I followed your setup to run the miniImagenet example. The training accuracy reaches about 100%, but the validation and test accuracy is only about 40%, whereas the original paper reports about 57%. So I wonder whether I ran your code incorrectly, or could you tell me your results on miniImagenet?

These are my logs: