I have the same issue with Alpine 3.5 and Docker 1.13.1-cs2. The ping round trip takes 0.211 ms, but the whole command takes 2.5 s:

/ # time ping -c 1 dev11

PING dev11 (10.1.100.11): 56 data bytes

64 bytes from 10.1.100.11: seq=0 ttl=63 time=0.211 ms

--- dev11 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.211/0.211/0.211 ms

real 0m 2.50s

user 0m 0.00s

sys 0m 0.00s
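To see how often the stall happens rather than catching it by hand, something like the following could help. This is only a minimal sketch: `time_lookups` and `slow_lookups` are hypothetical helper names, and the 1-second threshold is an assumption chosen to sit well below the 2.5 s delay seen above. It uses Python's standard `socket.getaddrinfo`, which goes through the container's libc resolver.

```python
import socket
import time


def time_lookups(hostname, attempts=20,
                 resolver=socket.getaddrinfo, clock=time.monotonic):
    """Resolve `hostname` `attempts` times.

    Returns a list of (elapsed_seconds, succeeded) tuples, one per attempt.
    `resolver` and `clock` are injectable so the function can be tested
    without touching the network.
    """
    results = []
    for _ in range(attempts):
        start = clock()
        try:
            resolver(hostname, None)
            succeeded = True
        except socket.gaierror:
            # Resolution failed outright (e.g. NXDOMAIN or final timeout).
            succeeded = False
        results.append((clock() - start, succeeded))
    return results


def slow_lookups(results, threshold=1.0):
    """Return only the attempts that took at least `threshold` seconds."""
    return [r for r in results if r[0] >= threshold]
```

Running `slow_lookups(time_lookups("dev11", attempts=100))` inside the affected container should list the attempts that hit the delay. An empty list would suggest the stall did not occur during that run.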

Hi,

We are running Alpine (3.4) in a Docker container on a Kubernetes cluster (GCP).

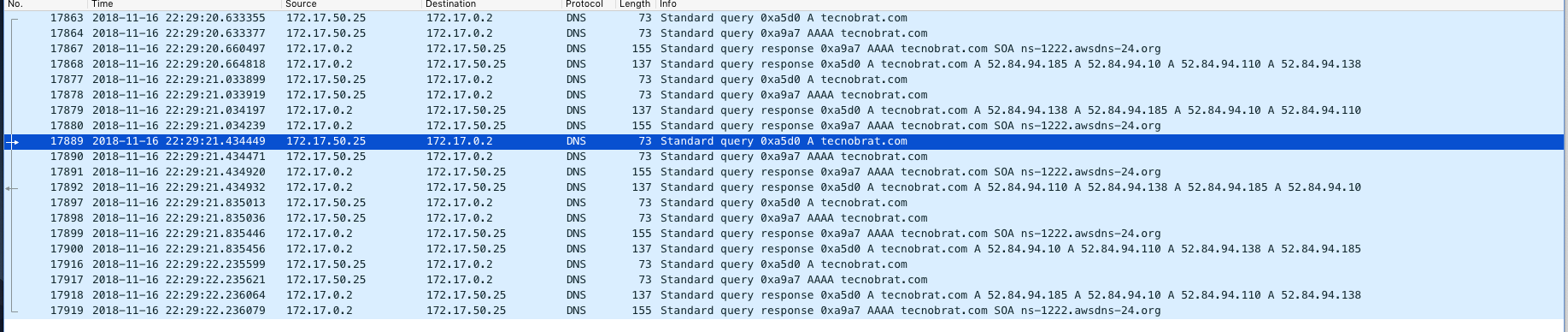

We have been seeing anomalies where our thread is stuck for 2.5 seconds. After investigating with strace, we saw that DNS resolution times out once in a while.

Here are some examples:

And a good example:

We already had some issues with DNS resolution in an older version (3.3), which were resolved when we moved to 3.4 (or so we thought).

Is this a known issue? Does anybody have a solution, workaround, or suggestion for what to do?

Thanks a lot.