Can you show us a complete, standalone example program? And provide your input file, or at least tell us about it?

What are the actual performance numbers?

Thanks.

Open 243083df opened 7 years ago

Can you show us a complete, standalone example program? And provide your input file, or at least tell us about it?

What are the actual performance numbers?

Thanks.

File is very big, around 10-50 millions records. The xml file looks like this:

<?xml version="1.0" encoding="utf-8"?>

<LIST>

<ELEMENT ATTRIBUTE1="" ATTRIBUTE2="" /><ELEMENT ATTRIBUTE1="" ATTRIBUTE2="" />

</LIST>I was curious, since I use .NET and Go regularly, so I turned this into a reproduction at https://github.com/a-h/sax

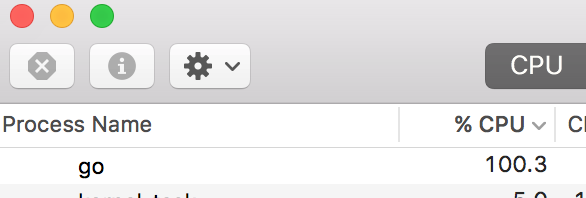

On my MacOS machine, I found that the Go version was much slower (around 20 seconds) than the .NET Core 2.0 one (around 3 seconds) for a file with 10 million elements in it laid out as per the example above.

I put the timing output in the README.md. I noted that the CPU was at 100% during Go execution.

I added an SVG of the Go CPU profile output to the repo.

I think, @a-h's benchmark enough to investigate. I used same code.

Change https://golang.org/cl/63390 mentions this issue: unicode: speed-up is16/is32

By the way. Is call len(p) in https://github.com/golang/go/blob/master/src/bufio/bufio.go#L196 necessarily?

xml.NewDecoder already create bufio.Reader.

@mattn Yes, but its create bufio.Reader without minimum read value. bufio.Reader with minimum read value around 64Mb, speed up parsing 2Gb xml from 2m40s to 2m5s(around 20%, but its not stable) on HDD.

A lot of speed could be gained if the parser was referring to the xml byte slice instead of copying everything. I am working on such an parser for go, based on the rapidxml library in c++

I am experiencing same issue. Parsing 1 GB XML takes minutes which C# version completes in few seconds ( < 10)

So the go sax parser is about 6~7 times slower than the C#, i.e., much room to improve. Watching the development on this...

Anyone can confirm, whether unmarshaling into defined data structures, if possible, can speed things up? I always use the sax parser way, maybe it is time to go with the DOM parser way, as my files are not terribly big. I went with the sax parser way only because intuitively thinking, it should be faster than the DOM parser way.

Just checked the performance of various versions using Docker containers to see if 1.10beta1 is likely to improve performance (I thought I saw a few perf improvements to Unicode handling in the changelog).

docker run -it --rm -v `pwd`:/go/src/github.com/a-h/sax golang:1.10beta1 /bin/bash

docker run -it --rm -v `pwd`:/go/src/github.com/a-h/sax golang:1.9.2 /bin/bash

docker run -it --rm -v `pwd`:/go/src/github.com/a-h/sax golang:1.8 /bin/bash1.10: 0m21.383s 1.9.2: 0m21.281s 1.8: 0m27.370s

So, looks like no improvement this version.

Well done!

Has anyone done a profile to see exactly where the pain-point is? Maybe that might help pushing things a bit?

@suntong - see https://github.com/a-h/sax/ and in particular the profile output at https://github.com/a-h/sax/blob/master/pprof001.svg

It's possible to reduce the amount of calls to the UTF8 DecodeRune etc. by keeping a cache of the names the decoder has already seen, on the basis that it's very likely those names will be seen again. This could be limited to a reasonable level, e.g. 10,000 XML names to stop it using too much RAM.

Over 1,000,000 XML elements, I saw an improvement from 1.632s to 1.431s.

$ go test -bench=Decoder -cpuprofile profile_cpu.out

processed authors: 1000000

goos: darwin

goarch: amd64

pkg: encoding/xml

BenchmarkDecoder-4 1 1437269859 ns/op 518663400 B/op 8000059 allocs/op

PASS

ok encoding/xml 1.632s

$ go test -bench=Decoder -cpuprofile profile_cpu.out

processed authors: 1000000

goos: darwin

goarch: amd64

pkg: encoding/xml

BenchmarkDecoder-4 1 1211802156 ns/op 518656056 B/op 8000053 allocs/op

PASS

ok encoding/xml 1.431sfunc (d *Decoder) isName(s []byte) bool {

// Check the cache first.

if _, ok := d.names[string(s)]; ok {

return true

}

// If it's not in the cache, add it if it's valid.

v := isName(s)

if v {

d.names[string(s)] = true

}

return v

}

// Get name: /first(first|second)*/

// Do not set d.err if the name is missing (unless unexpected EOF is received):

// let the caller provide better context.

func (d *Decoder) name() (s string, ok bool) {

d.buf.Reset()

if !d.readName() {

return "", false

}

// Now we check the characters.

b := d.buf.Bytes()

if !d.isName(b) {

d.err = d.syntaxError("invalid XML name: " + string(b))

return "", false

}

return string(b), true

}@ianlancetaylor - is it worth me pursuing that as a change?

My benchmark is:

func BenchmarkDecoder(b *testing.B) {

b.ReportAllocs()

count := 1000000

buffer := strings.NewReader(`<authors>` + authors(count) + `</authors`)

for i := 0; i < b.N; i++ {

buffer.Seek(0, io.SeekStart)

decoder := NewDecoder(buffer)

var authors = 0

for {

tok, _ := decoder.Token()

if tok == nil {

break

}

switch se := tok.(type) {

case StartElement:

if se.Name.Local == "author" {

authors++

}

}

}

if authors != count {

panic("failed to report correct number of authors")

}

fmt.Println("processed authors: ", authors)

}

}

func authors(count int) string {

buf := bytes.NewBufferString("")

for i := 0; i < count; i++ {

buf.WriteString(`<author name="Alan Watt" />`)

}

return buf.String()

}@a-h I think it would be better to use slice cache isead of Read|Unread byte

Do you mean something that reads into a buffer like this? https://github.com/a-h/lexical/blob/master/input/stream.go

@a-h The code could be further optimized like this:

// Get name: /first(first|second)*/

// Do not set d.err if the name is missing (unless unexpected EOF is received):

// let the caller provide better context.

func (d *Decoder) name() (s string, ok bool) {

d.buf.Reset()

if !d.readName() {

return "", false

}

// Now we check the characters.

b := d.buf.Bytes()

if s, ok = d.names[string(b)]; ok {

return s, ok

}

if !isName(b) {

d.err = d.syntaxError("invalid XML name: " + string(b))

return "", false

}

s = string(b)

d.names[s] = s

return s, true

}This way we can avoid the isName check and reuse the string from the d.names map without allocation.

For the existing unmarshal benchmark this gives me

name old time/op new time/op delta

Unmarshal-8 11.6µs ± 1% 11.7µs ± 3% ~ (p=0.990 n=12+14)

name old alloc/op new alloc/op delta

Unmarshal-8 8.27kB ± 0% 9.13kB ± 0% +10.40% (p=0.000 n=15+15)

name old allocs/op new allocs/op delta

Unmarshal-8 190 ± 0% 166 ± 0% -12.63% (p=0.000 n=15+15)No change on the time, but at work we have a tool that parses multiple XML files, each around 250MB+, and reusing the allocated names saves about 2 seconds per file (14s -> 12s).

In my case I shaved off another second by making copyValue accept both []byte and string, avoiding an allocation for each attribute value.

But again, this doesn't reflect in the existing benchmark:

name old time/op new time/op delta

Unmarshal-8 11.6µs ± 1% 11.4µs ± 1% -1.72% (p=0.000 n=12+12)

name old alloc/op new alloc/op delta

Unmarshal-8 8.27kB ± 0% 8.11kB ± 0% -1.93% (p=0.000 n=15+15)

name old allocs/op new allocs/op delta

Unmarshal-8 190 ± 0% 186 ± 0% -2.11% (p=0.000 n=15+15)Hi, any update on this or workaround solution? i also observed that it is slower than java.

I have also found my way here after seeing a very large box in my profile for encoding/xml (*Decoder) unmarshal. Golang XML 'feels' a lot slower than C++ rapidxml, pugixml and expat, though I have not benchmarked.

Hi all and @243083df, @l-we, @webern, @ajruckman, @qwantix, @evt

Regarding this issue you can try my library

Any progress on this issue?

Actually, programmer who coded this library is long gone so we are clueless.

Disclaimer: this is not official excuse, I made it up since community is mysteriously silent on this issue

@saleem-mirza this begs the question, what are the plans for Golang to continue supporting XML natively as part of the standard library?

Currently the XML implementation suffers from several issues that make it substandard (when compared with Xerces, Java or libxml2, for example)

1) It is very slow. 2) It is non-validating (we need xsd validation) 3) There is no support for loading XML when the schema is not known. i.e. we can load any json and we get a map[string]interface. But we cannot do this with xml.

I would love to work on something like this, but it would definitely be a full-time job, i.e. someone would have to be paid to build this.

Hi all and @243083df, @l-we, @webern, @ajruckman, @qwantix, @evt

Regarding this issue you can try my library

For people who are interested in streaming parsing i have refactored the library to improve code quality, error handling and now it is more than 30% faster compare to previous version.Let me know if you have suggestions/feedbacks.

Change https://golang.org/cl/218658 mentions this issue: encoding/xml: decoder to use buffered start attr

What version of Go are you using (

go version)?1.9

Does this issue reproduce with the latest release?

True

What operating system and processor architecture are you using (

go env)?Windows

What did you do?

I trying to parse large files with SAX with go, and get decadent performance. I rewrite code in C#, and get maximum performance.

What did you expect to see?

Mature and fast xml parser in golang.

What did you see instead?

The bottleneck in SAX xml parsing with go is CPU, instead of low HDD io performance.