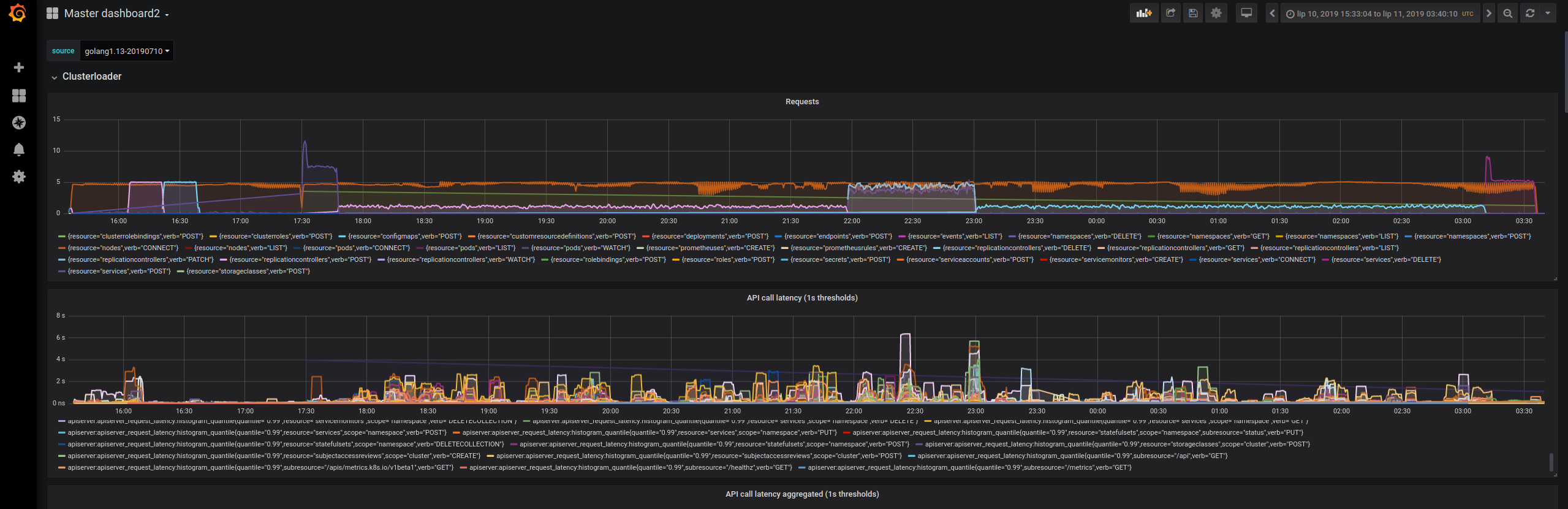

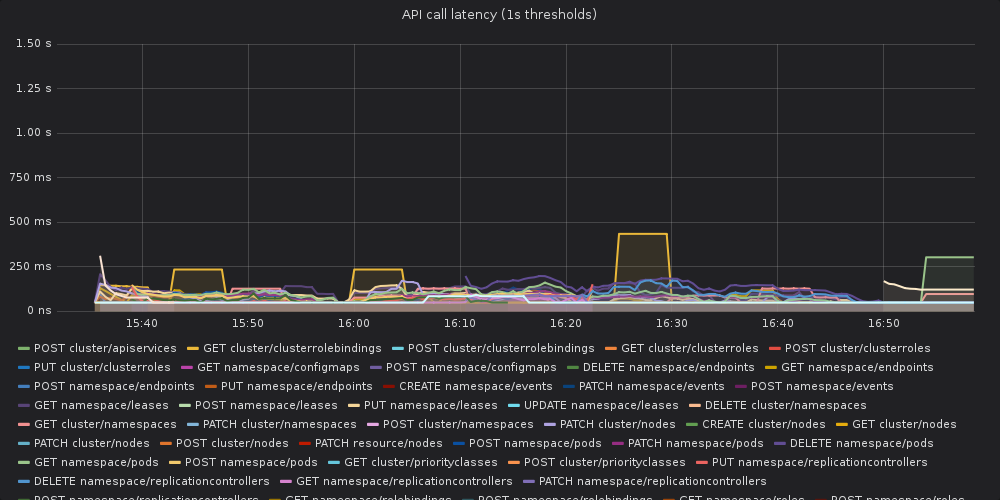

As asked by @mknyszek, we re-run the tests from golang head with patched https://go-review.googlesource.com/c/go/+/183857 Unfortunately, it didn't help much, the api-call latency was still visibly higher than in the baseline.

Closed mm4tt closed 5 years ago

As asked by @mknyszek, we re-run the tests from golang head with patched https://go-review.googlesource.com/c/go/+/183857 Unfortunately, it didn't help much, the api-call latency was still visibly higher than in the baseline.

CC @aclements @mknyszek

Interesting! The fact that the regression is still there indicates that it has little to do with that code snippet. This is a little surprising to me but it's definitely progress.

Also, by removing that code snippet, it seems we lost virtually nothing in terms of physical memory utilization for the load test, based on graphs which were shared out-of-band. This is particularly interesting because I ran a bunch of benchmarks against that patch, of which 2 worsened significantly in terms of average and peak RSS when applying this patch (others retained their RSS usage improvements). This indicates to me that leaving it in is probably makes the most sense.

My next best guess as to the cause of the regression (assuming it's the result of my changes, related to #30333) is the background scavenger existing in the first place, since it is a source of additional lock contention. If this is actually the cause, I have a new patch which might help. https://go-review.googlesource.com/c/go/+/184497

This patch makes it so that the background scavenger should rarely block all allocations from making progress. This patch is basically just a performance improvement, but it could be enough to help the 99th percentile API latency. If this patch doesn't help at all, then I'm out of guesses. I think we should consider bisecting at that point to try to find the source of the regression, though given that these scalability tests take a very long time to run, I imagine it'll take quite a few days to get to the bottom of that.

There's a more aggressive version of this patch (that's slightly trickier) where we only block the one allocation which conflicts with the background scavenger. It's a very minor change to this patch but what makes it tricky is all the consequences. For now, let's try this patch.

Out of curiosity, do you run the load test with GODEBUG=madvdontneed=1 set? In my experimentation, using MADV_FREE over MADV_DONTNEED does help here, but I understand that may not work if you need to track memory usage via any of the Linux-reported statistics.

This patch makes it so that the background scavenger should rarely block all allocations from making progress.

Sorry, re-reading this I realized it's slightly inaccurate. It doesn't just block allocations, it blocks frees, and anything else that needs to acquire the heap lock (which is a lot of things).

So, it's a good bit worse today than what I originally said, but even the common case is fairly uncommon when we're talking about these code-paths.

Thanks @mknyszek!

Just to confirm, do you want us to run the tests from golang head with just the new patch applied and forget about the previous patch, right?

Our scalability test resources are under high demand recently, but I will see what I can do to run the tests today or tomorrow. Will post an update here when I have the results.

Regarding the GODEBUG=madvdontneed=1, AFAIR when debugging the go1.12 performance regression we agreed that it shouldn't matter as we're using 4.14+ kernel version. Let me know if the situation has changed here in any way. Given that it would make impossible to track memory usage via Linux-reported statistics, I think we can try it as an experiment if it can provide you with any meaningful insights, but it wouldn't work for us as a long term solution.

Thanks @mknyszek!

No problem! Just to confirm, do you want us to run the tests from golang head with just the new patch applied and forget about the previous patch, right?

That's correct.

Our scalability test resources are under high demand recently, but I will see what I can do to run the tests today or tomorrow. Will post an update here when I have the results.

I realize this all takes a while to set up and run, so I appreciate you taking the time.

Regarding the GODEBUG=madvdontneed=1, AFAIR when debugging the go1.12 performance regression we agreed that it shouldn't matter as we're using 4.14+ kernel version. Let me know if the situation has changed here in any way. Given that it would make impossible to track memory usage via Linux-reported statistics, I think we can try it as an experiment if it can provide you with any meaningful insights, but it wouldn't work for us as a long term solution.

Ahhh right, I remember now. I forgot you're already using MADV_FREE. In that case you can ignore this, like last time.

The bottleneck here is not my time but the scalability test resources, i.e. the gcp projects where we run the tests. The test uses 5K+ CPUs and takes over 10+h, there is only a few projects where I can run it and all of them are in high demand, other teams use them to run their scalability tests. We'll need to figure out something for long term plan, especially if we'd like to test new golang releases automatically / continuously.

Nevertheless, I managed to find a free project and run the tests. I'm running the tests at the golang commit fbde753a58e286c405a04388816ed044486151bb (current head) with reverted https://go-review.googlesource.com/c/go/+/184098 (which apparently broke k8s build again, I asked for help in https://github.com/kubernetes/kubernetes/issues/78845#issuecomment-507736424) and with https://go-review.googlesource.com/c/go/+/184497 patched. Will post the results tomorrow morning.

The test passed, but the api-call latency is still visibly worse than in the baseline:

If we don't have any other ideas, I can try re-running the baseline to verify that we don't have any external infrastructure issues impacting the results.

@mm4tt when you say it "passed" do you mean there's some threshold that average 99th percentile latency needs to stay under? If it did better this time around, then that's good news, and I think I have a next step.

I have one more patch which is basically taking the idea from the previous patch further. It's a slightly riskier change to land for Go 1.13, but it might be enough to bring it back to expected levels.

Can you please do another run with the following two patches applied to HEAD in the following order?

@mknyszek - yes, that's exactly the case. We have a threshold that the 99th percentile of various groups of requests needs to stay under. It was the first run compiled with golang1.13 that passed, so there is an improvement, but the SLI is still much worse than in the baseline.

I'm rerunning the tests with your patches. I still needed to revert https://go-review.googlesource.com/c/go/+/184098, as I'm not able to compile k8s with it in, but I don't think it should have any performance implications. Will update the thread when I have the results.

I've run the tests twice over the weekend and both times it made our api-server explode during the load test.

From a cursory look at the graphs I found that there is a big increase in the memory usage comparing to the previous run (head + https://go-review.googlesource.com/c/go/+/184497)

Jul 2nd run:

Jul 5th: run

@mknyszek, any ideas how to interpret that?

In the meantime, I'm re-running the tests compiled with golang1.12 to get a better baseline.

That's unfortunate, but thanks for confirming. It's possible the second change is broken in some way (not correctness, but in breaking some invariant), so I'll do some more testing.

When you say "exploded" do you mean crashed in some way? OoM? What exactly went wrong?

Can you please clarify what you mean by "memory usage"? Is it RSS, or virtual memory footprint? Or is it computed from MemStats and if so, which MemStats?

By exploded I meant that it became overloaded (due to other components crashlooping) and eventually also started crashlooping, most likely because the load on it was so high that it wasn't able to reply on liveness probe in time and was restarted by kubelet. Hard to say what was the exact cause here, but there is a know vicious circle problem here. If the api-server becomes too overloaded, it slows down, other components may start restarting as they're not able to renew the "master lease" on time. Restarting components in turn introduce higher load on the apiserver as they issue more requests during initialization than during normal operation. Then api-server becomes even more overloaded and so the vicious circle continuous...

"memory usage" is based on container_memory_usage_bytes metrics which I think is a sum of all kinds of memory, e.g. container_memory_rss., container_memory_cache, container_memory_swap

I modified the graphs to see which one went up and it's the container_memory_rss:

Jul 2nd:

Jul 5th:

Thanks for looking into the source of the memory increase.

I'm having a hard time figuring out what could be going wrong that's related to my patch, especially since memstats seem to cut off a good amount (between 10:50 and 10:55) before memory actually spikes:

Based on this, it seems like the load spike comes in before RSS actually increases, and so the memory blow-up seems like it's just a symptom. The first stat, btw (heap_sys - heap_released) is what the runtime thinks the heap's contribution to RSS is, and I think it's fairly accurate. Naturally though it gets cut off when metrics start to disappear.

My patch definitely seems to be involved though; I tried to run a bunch of benchmarks with the latest patch and managed to OOM a machine (at least, as far as I can tell, I can't SSH back into it even though it's on, which is usually a symptom of accidentally OOMing it).

On the plus side, it seems like the latency is much better compared to before, at least while the server is alive? I think this is going in the right direction, I just need to figure out what went wrong here.

Thanks Michael,

Let me know if your benchmarks found anything.

We almost have a ready PR for fixing the disappearing metric problem under high load, I can try re-running the test with it patched if you think that it might be helpful in debugging.

I've re-run the baseline, will add it to Grafana shortly.

Well, I'm not sure now if I actually OOM'd the machine I mentioned, but I'm pretty sure I found a deadlock bug in that last patch that (I think if left sitting for long enough) has the potential to OOM. Is it possible that the api-server just deadlocked in the last run and got overloaded when it woke up, so much so that it started crash looping?

In any case, I rewrote the patch to be more "obviously correct," meaning the synchronization should be much less funky, and this specific deadlock should be gone.

To be safe and not waste valuable resources, I'm going to run my benchmarks overnight with those patches and make sure they don't OOM/deadlock/etc. I'll let you know tomorrow how it turns out, and if all goes well, could you please try applying the latest patch and trying it out again?

Just to be completely clear, I mean the following two patches, at their latest versions:

Sounds good, will run the tests once we get a green light from you. Thanks Michael!

The benchmarks didn't explode, which is a good sign. I think the patches are good to go.

Well, I took a longer look at everything and it seems like the fact that the scavenger tries to re-acquire the heap lock can actually make things worse in some cases.

I have one more patch which makes things a little better, but it still seems like it's not much better than the Go 1.13 baseline (without the patches) for the benchmarks I've been running. It seems like it's helping y'all at least, given that the test passed? Do you think it's possible it was a fluke? Do you know how much it failed by?

Finally, if you haven't started running the load test yet, would it be possible apply the following patches instead?

This includes that newest patch I mentioned.

Unfortunately, I didn't get your second message on time and the tests were run without the third patch.

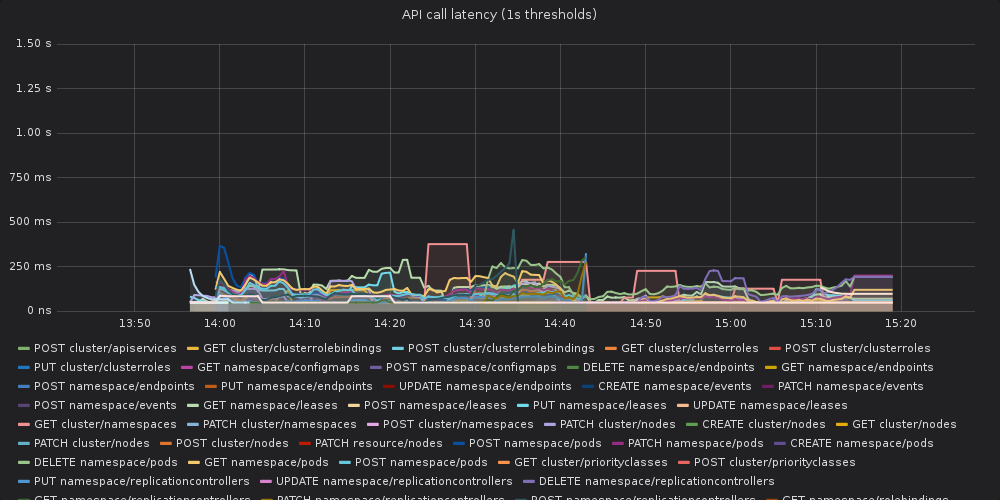

This time the API server didn't explode. Unfortunately the results are still visible worse than in the baseline

Baseline:

Yesterday's run:

To compare the same graph for Go 1.13 (no patches), imho it looks worse than the yesterday's run

I'm not sure if I'll find resources to run the tests with the third patch this week, so most likely expect the results in the beginning of the next week.

Nevertheless, I think we're still far from getting to the state where api-call latency is reasonable (i.e. similar to golang1.12 baseline). Given how long and expensive (and hard to find resources for) the tests we're currently running are, it's not the most efficient way to iterate on this issue. I'll see if we can find some faster and less expensive tests instead (that would also catch the difference between go1.12 and go1.13). Ideally, I could give you access to some smaller dev project where you could execute the tests yourself. This should allow us to iterate faster and once we had some reasonable version we could re-run the standard tests to verify.

I'll see what can be done and get back to you beginning of the next week.

I think you're right, and I think this direction of these patches unfortunately isn't going anywhere; I need to take a step back and try to better understand what specifically is causing the regression. No need to use more load test resources on this for the time being.

One of the benchmarks I'm running provides a 99th percentile latency and seems to be a good stand-in for the load test (and takes only a around half an hour to run), so I'll continue with that for now and try to pinpoint the source of the issue. If I make progress with a fix, it would be great to have a dev project to try things out on, but it's not urgent.

Thanks so much for your help and cooperation, I'll get back to this thread before the end of the week with any updates.

On Thu, Jul 11, 2019, 5:12 AM Matt Matejczyk notifications@github.com wrote:

Unfortunately, I didn't get your second message on time and the tests were run without the third patch.

This time the API server didn't explode. Unfortunately the results are still visible worse than in the baseline

Baseline: [image: OFTckmm27G7] https://user-images.githubusercontent.com/2604887/61035850-2faa7e00-a3c8-11e9-9567-dc655ca8cc70.png

Yesterday's run: [image: 3icudVa7Tfq] https://user-images.githubusercontent.com/2604887/61035864-36d18c00-a3c8-11e9-8fd7-952c2804d155.png

To compare the same graph for Go 1.13 (no patches), imho it looks worse than the yesterday's run [image: 3ttBm4gPc08] https://user-images.githubusercontent.com/2604887/61036064-9def4080-a3c8-11e9-88c9-2d60431d46c9.png

I'm not sure if I'll find resources to run the tests with the third patch this week, so most likely expect the results in the beginning of the next week.

Nevertheless, I think we're still far from getting to the state where api-call latency is reasonable (i.e. similar to golang1.12 baseline). Given how long and expensive (and hard to find resources for) the tests we're currently running are, it's not the most efficient way to iterate on this issue. I'll see if we can find some faster and less expensive tests instead (that would also catch the difference between go1.12 and go1.13). Ideally, I could give you access to some smaller dev project where you could execute the tests yourself. This should allow us to iterate faster and once we had some reasonable version we could re-run the standard tests to verify.

I'll see what can be done and get back to you beginning of the next week.

— You are receiving this because you were mentioned. Reply to this email directly, view it on GitHub https://github.com/golang/go/issues/32828?email_source=notifications&email_token=AAJQ3HHHTMIUVDMAG425E73P632O5A5CNFSM4H4ER6T2YY3PNVWWK3TUL52HS4DFVREXG43VMVBW63LNMVXHJKTDN5WW2ZLOORPWSZGODZWBYFI#issuecomment-510401557, or mute the thread https://github.com/notifications/unsubscribe-auth/AAJQ3HFZYXS6RF2ZLYS5WWDP632O5ANCNFSM4H4ER6TQ .

I think I've narrowed down the range of CLs which contain the source of the regression, and they're all mine.

Turns out it has nothing to do with the background scavenger, but rather with changes I made to the treap implementation in the runtime, which feels familiar. Reducing the number of treap operations required for allocation/free/scavenging probably isn't possible without a huge refactoring, so I think the main thing to do here is to try to make the operations themselves faster. I didn't expect what I added to have such a large effect but given that there's a lot of heap lock contention I suppose I shouldn't be surprised.

In the past I attempted to add a cache to speed up allocations, but it didn't help much. But, perhaps there's something yet-unexplored here. I'll think about it some more.

A short update from my side, busy week, but haven't forgot about this. I'm working on reducing time and cost of the tests I used to verify differences between golang1.12 and 1.13, so it will be possible to run them on my dev project. Hopefully will have an update within the next few days.

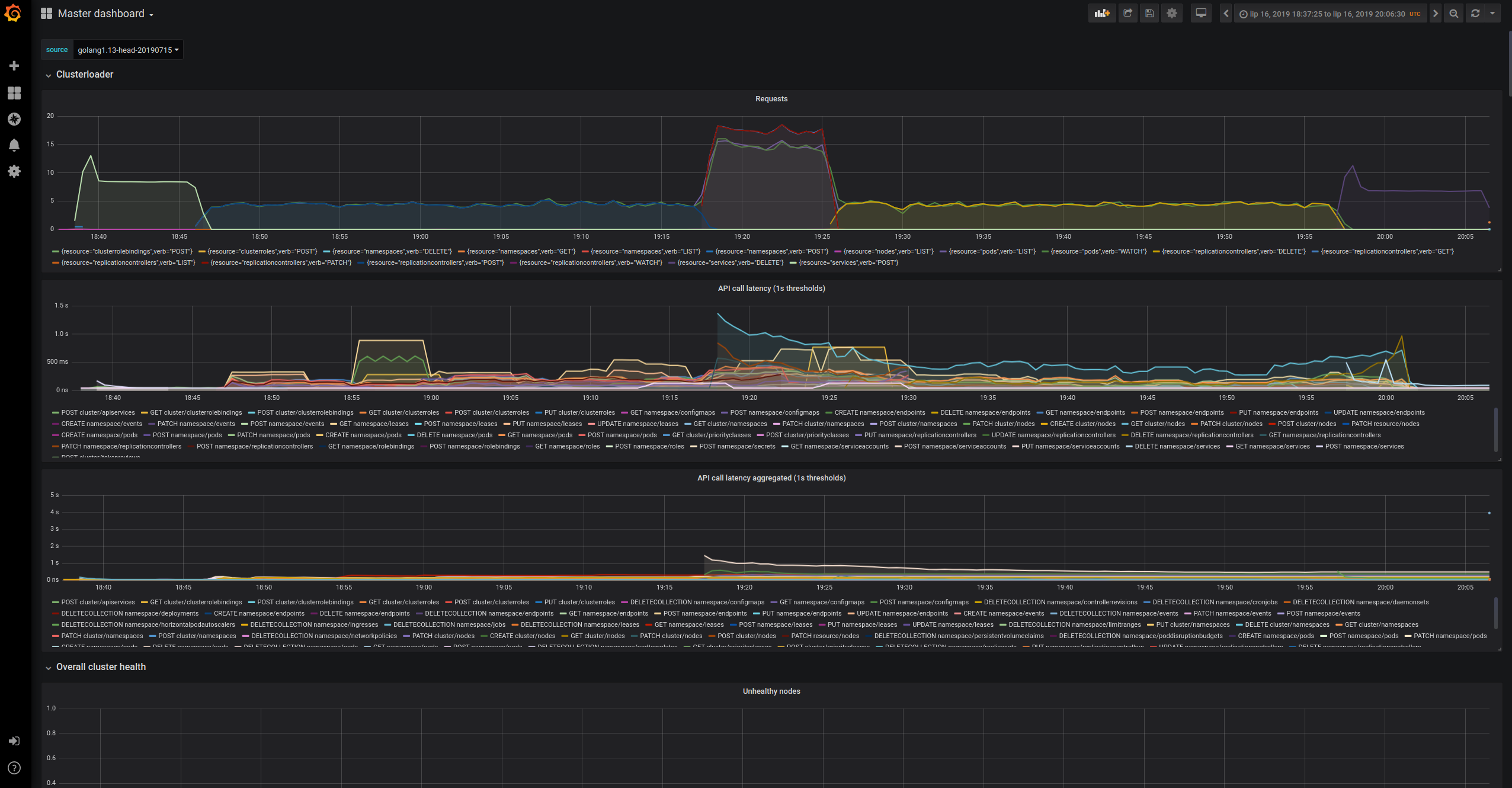

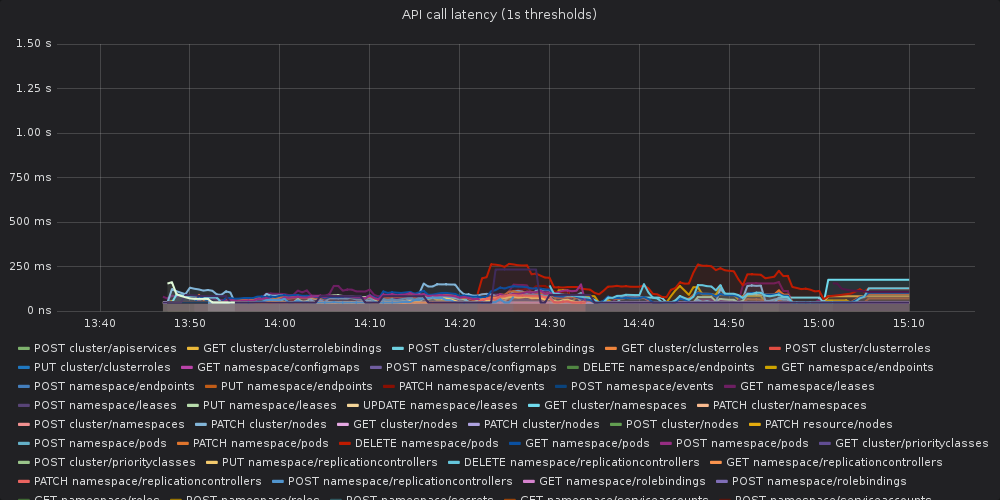

I managed to cook up a faster and cheaper test that reproduces golang1.13 performance issues. The test takes ~2h and uses about 10x less resources so can be easily run on our dev projects. Below some graphs showing the difference - there is a very visible difference in api-call latency:

golang1.12.6 (baseline):

golang1.13 (head as of 2019-07-15):

Good thing about this is that given how fast and cheap the test is we could probably run it continuously against golang head in the future. This will require some additional work, but now it's definitely doable and feasible.

@mknyszek, let me know if you have any new version you'd like to try. For now and until I clean up the test script and simplify the setup I can run it manually from my workstation.

Change https://golang.org/cl/186598 mentions this issue: runtime: add physHugePageShift

Thank you! I have one patch to try, which helped the proxy benchmark I've been running. It's the one referenced in the issue history.

It didn't totally solve the regression, but it helped. It's also just a low-risk patch that's probably a good idea to land anyway. Still working on understanding the root cause.

@mknyszek, do you want me to try running our test with https://golang.org/cl/186598 patched? In general and for future, if you want me to run something, please be explicit about it and provide me with an exact list of patches that I should apply to golang head :)

Yeah that's correct, and sorry about that. I'll be more specific in the future.

To be totally clear: could you please try out the benchmark with https://golang.org/cl/186598 applied to HEAD?

@mm4tt Here's our plan moving forward.

First we want to try and understand better whether the problem is where we think it is. To that end, can you please try running the test at the following three commits:

If you find that all three perform the same (or roughly the same, good or bad), then I think we need to take a step back and start bisecting, since we no longer have any clues as to where the regression could be coming from (and it means that the benchmark that I'm using is not a good proxy).

If you find that there's a noticeable difference between "first suspect" and "last suspect" can you please also run it for the following two:

Once we are able to pinpoint the issue, we can then start very aggressively logging to try to understand better what's going wrong in the tail.

Thank you for your help and cooperation! I hope this isn't too much at once.

I have one more patch for you to try, which really helped with the latency of one of the benchmarks I was running.

Can you please try the test with the following patch applied to HEAD?

Regarding priority, I think it would be best to run this after what I mentioned in my last message.

Change https://golang.org/cl/186926 mentions this issue: runtime: call sysHugePage less often

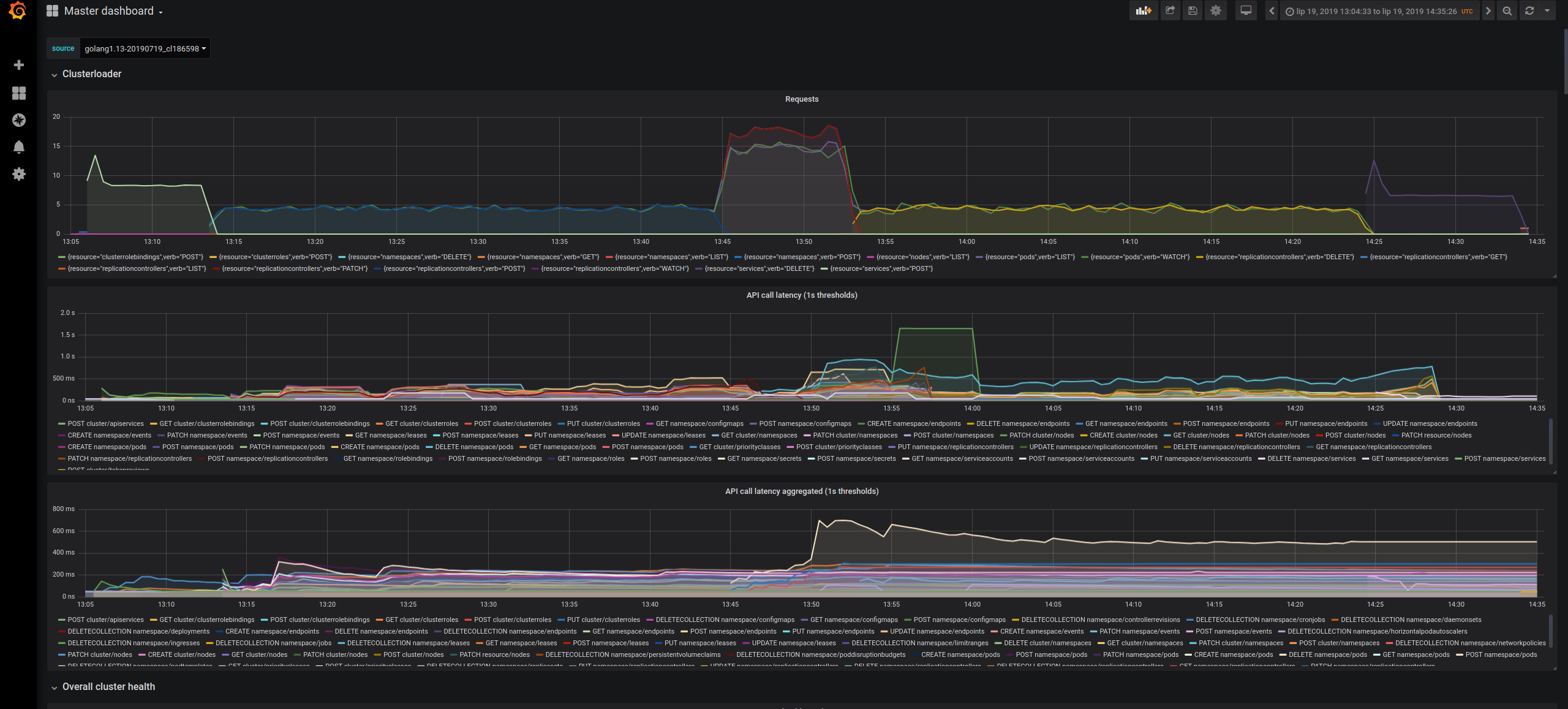

Forgot to document the results of running the tests with the https://go-review.googlesource.com/c/go/+/186598/ patch.

Below are the graphs, they look better than golang1.13 head, but much visible worse than the baseline (see https://github.com/golang/go/issues/32828#issuecomment-512238610)

I'm currently running the tests at 1ad2298 (before commit), will post the results once I have them

Bad news, the regression is already visible at 1ad2298 (before commit). I'm not sure if there is much gain in trying the other commits, we should rather try some older commits to find a place where it started. I'm currently running the tests at https://github.com/golang/go/commit/7b62e98 to see how far in the past it goes.

I adjusted the graphs to use the same y-max, here are the results:

baseline (go1.12.6)

golang at 1ad2298

Oh hm, that's interesting. I'm worried though because git history gets difficult to bisect here. The problem with going this far back was that the problem that affected y'all for the Go 1.12 release cycle (#31678, which was fixed in Go 1.12.5) was never addressed at HEAD because, as we saw in trying to apply the same fix to HEAD (the very very first thing we tried for the Go 1.13 release cycle), it didn't help.

However, it's possible there was a span a time between which the regression in #31678 and another, new regression overlapped, and things got even worse. Then somewhere between 1ad2298 and tip, #31678 got fixed (likely fe67ea32bf58fde5aae5609af12c71e547566513), yet the new regression remained.

Basically what I'm saying is that it's possible if you run a bisection you might find that the root cause to be the same one as in the Go 1.12 release cycle. This also could mean that performance gets especially bad somewhere between 1ad2298 and tip, or that perhaps one regression is masking another, and they overlap in some range of commits (my guess is between 1ad2298 and fe67ea32bf58fde5aae5609af12c71e547566513).

With that being said, this is fairly easy to check for. Can you please do a run with 1ad2298 and the following patch applied?

If this fixes it, then I think it's worthwhile trying the first suspect and last suspect as I mentioned in my previous message, that is:

And then, it would probably also be worthwhile trying:

Finally, I think it is also worthwhile to try the following patch applied to HEAD:

The reason I think this last one is worth a try is because it looks a lot like the regression in #31678 which we know affected the load test; it's an madvise syscall being called more often than needed on a slow path in the allocator. In the proxy benchmark I've been running, fixing this dramatically improved the 99th percentile free latency (like, by almost 3x). About 60% of all page-level frees were unnecessarily making a syscall in that benchmark.

Thanks for going ahead and running 7b62e98 though; it's still possible that the regression goes further back in history and has nothing to do with the range I suspected, and the results of that will give us more confidence as to whether my suspicions above are correct.

The 7b62e98 is much better than any other commit I've tried. It's slightly worse than baseline, but it might be noise (we'd have to run a full 5K CPU test to get better assessment).

Not sure though how much gain is in that given what you wrote above :/

But the good news is that I've finally found time to clean up my scripts and set up a VM from which they can be run. @mknyszek, I'll contact you with the details and explain how you can run these tests on your own. That should allow you to iterate faster.

Ah! That's very interesting. OK in that case it's probably worth bisecting between 7b62e98 and 1ad2298. I think we can call my theory from the last message was debunked (though I still think we would probably benefit from landing those two CLs before the release).

Thank you for running 7b62e98! It saved us a good bit of time.

FYI, I've started an automatic bisection, there about 7 steps left and each step takes 3-4h so we should have the results on Monday unless something goes wrong.

I ran the bisection but it didn't get me anywhere. When debugging it, I realized that I was completely wrong in saying that 7b62e984d9 was good. I added some new graphs that are much better in assessing whether a commit is good or bad (they are much more sensitive to spikes that we don't have in the baseline) and re-run 7b62e984d9 twice. Both times it turned out bad:

baseline

7b62e984d9 run 1

7b62e984d9 run 2

@mknyszek, looks like we should follow the path you described in https://github.com/golang/go/issues/32828#issuecomment-513917265 You can run these tests in the dev env I prepared for you.

I'll be OOO whole August, but I will pass the knowledge to one of my teammates - @oxddr. In case you have any questions or problems with the dev env you can reach out to him. Once you have a version that passes the simplified tests he'll also help you with running the full scale tests for it.

We've both spent a lot of time working on this, hope you'll figure it out and find a solution soon. Good luck!

@mknyszek is also OOO, so I'm going to see what I can do. Here's my summary of what we've tested and currently know (mostly for myself):

All tested commits from oldest to newest:

7b62e984d941c753e7fb20f8b59a49acf62c88a7 (bisection between 1.12 and 1.13): bad

1ad2298 (parent of merge all treaps into one implementation): bad

c290cb6338 (initial 1.13 test): bad

c290cb6338 + do not scavenge inline with allocation: bad

fbde753a58 (July 2 head) + block on background scavenger only if necessary: test passed, but still visibly bad

fbde753a58 + block on background scavenger only if necessary + unscavenge span without heap lock: bad

After the last three, @mknyszek dismissed the background scavenger as the problem and shifted focus to the treap implementation. New plan.

Current status:

@mm4tt / @oxddr, I'm going to work on getting spun up with the dev instance based on the instructions you sent via email.

Generally, though, I would love to have a more concrete metric we can track versus just seeing that it's "visibly worse" than the baseline. My particular concern is that all of these latency plots have both extreme overplotting and different palettes, which makes them very hard to compare visually. It's obvious that the worst p99 latencies are worse, since they stand out above the rest, but it seems like only a few request types may actually be affected. How many requests types are actually affected? Are they always the same request types? Is there a metric we can use to more easily compare these, like time-averaged p99 latency?

@aclements I am aware that eyeballing a graph is not an ideal approach for validation, however at the moment I don't think we have a better metric to track. From our perspective p99 latency of requests is real, user-focused metric. I'll give some thought and try to come back to you after the weekend. Something to try out is to plot only N top streams or actually aggregate them together, instead of grouping them by resource, verb. Most of types are handled the same way, with few exceptions.

I've finished running the experiments proposed by @mknyszek. The TL;DR is: at current HEAD, the answer appears to be to apply CL 183857.

Here's a full summary of the experiments:

Jumping forward to treap changes:

Jumping forward to background scavenger:

Jumping forward to HEAD, which includes CL 186926, which fixes a bug introduced in 31c4e09 that lots of unnecessary madvise calls:

I think we have our answer.

Thanks. Should we put 183857 into 1.13? It looks safe enough.

I would like to. It failed several trybots, which I haven't had a chance to debug. I'm also having trouble running the k8s load test to confirm the fix (infrastructure issue unrelated to the fix itself).

Change https://golang.org/cl/189957 mentions this issue: runtime: grow the heap incrementally

Change https://golang.org/cl/183857 mentions this issue: runtime: scavenge on growth instead of inline with allocation

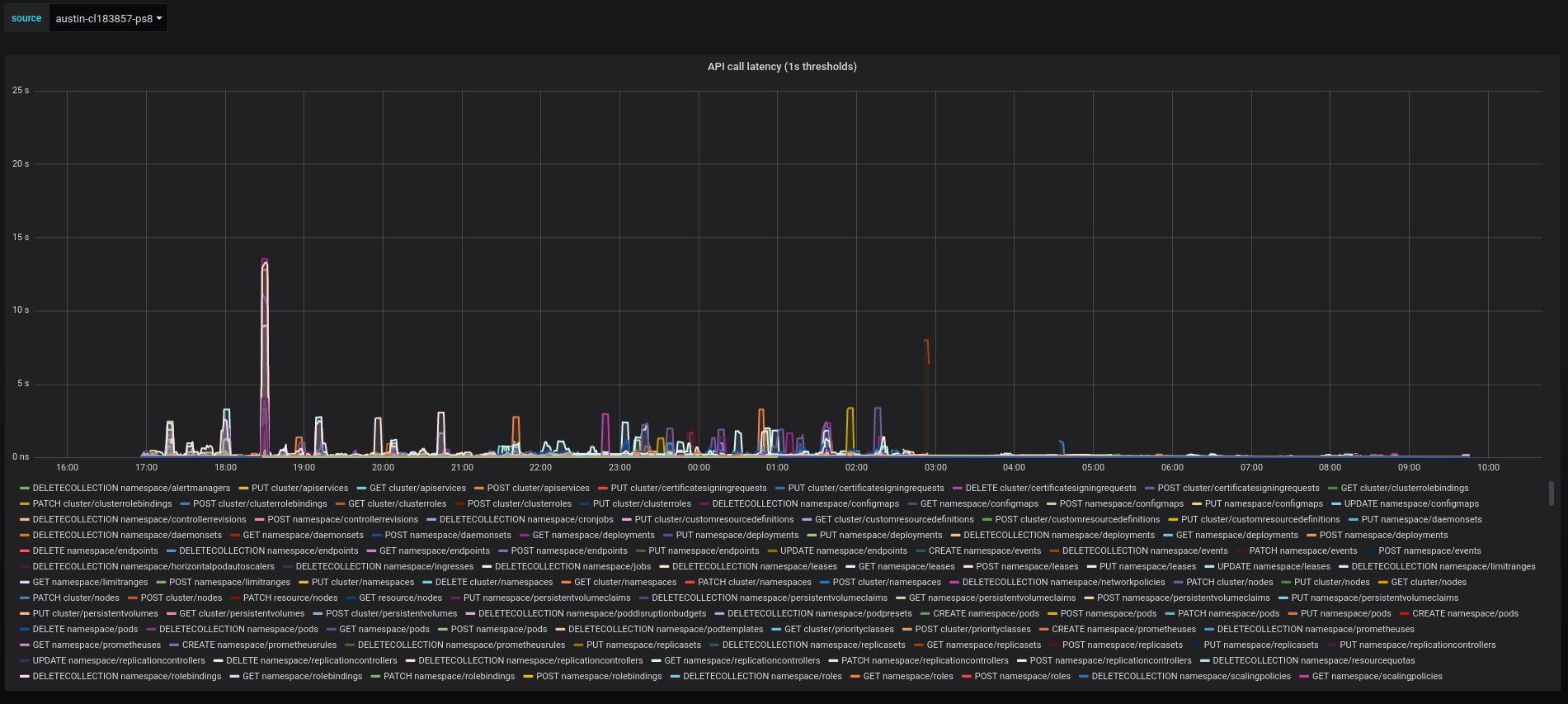

@mknyszek pointed out that the final result above (0ca4f6b + CL 183857: unified treaps + optimizations + mdavise fix, background scavenger, no inline scavenging) didn't do heap growth scavenging, so in some sense it was cheating. However, I just got a test run with CLs 189957 PS 3 and 183957 PS 8, with the fix to add back heap-growth scavenging. It doesn't look quite as good, but it still looks pretty good, and it's not cheating:

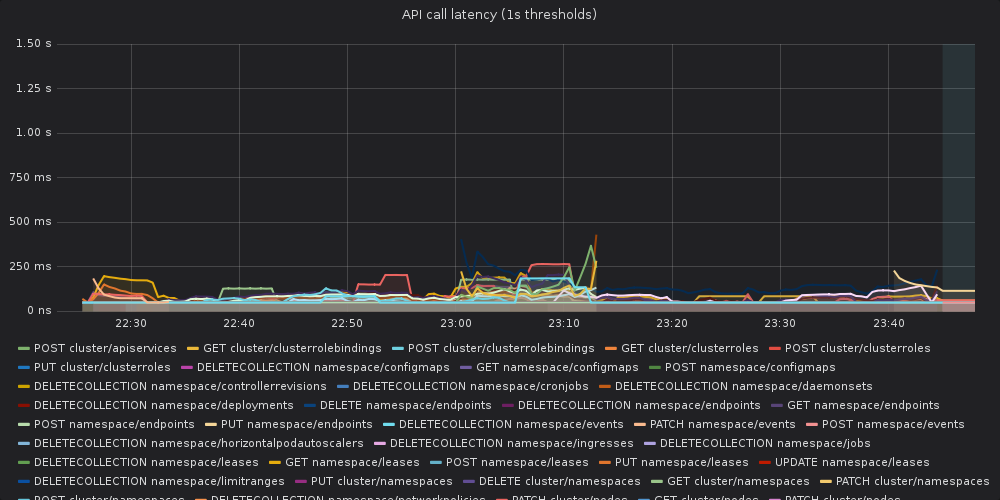

With @oxddr's help, I was able to run the full 14 hour load test with CL 183857 PS 8. The results look pretty good to me:

@oxddr says this is the same plot as the "non-WATCH request latency (99th percentile)" from the original posts. Compared to the 1.12 baseline, there are a lot of requests that actually appear much lower latency overall. There are a few brief spikes, but the baseline had that, too, and it's nothing compared to the fog of spikes from 1.13 beta.

So, based on my assessment, CL 183857 (and its parent) appears to fix this issue.

Actually, the plot I pasted isn't comparable because it omits LIST calls, so the results are currently inconclusive.

@oxddr built a tool to do a more principled comparison of the latency distributions and found that the proposed fixes "don't look good". Unfortunately, that means I don't really know how to make progress here. @mknyszek and I may have to go back to the drawing board.

We'll keep working on this issue, and once we find a fix, our plan is to issue a point release if at all possible, but in the mean time, we're going to go ahead with releasing 1.13.

Together with golang team, we, kubernetes sig-scalability, are testing not-yet-released golang1.13 against kubernetes scale tests.

What version of Go are you using (

go version)?Does this issue reproduce with the latest release?

Yes, it reproduces with golang head.

What did you do?

We've run k8s scale tests with kubernetes compiled with go1.13. There is a visible increase in 99th percentile of api call latency when compared to the same version of kubernetes compiled against go1.12.5.

What did you expect to see?

We expected similar api-call latency as in the baseline run compiled with go1.12.5

What did you see instead?

The api-call latency was visibly worse in the run compiled with golang1.13