Testing on an Intel Mac (macOS 12.6.1)

go version

go version go1.19.4 darwin/amd64

go env

GO111MODULE="" GOARCH="amd64" GOBIN="" GOCACHE="/Users/cameron.bedard/Library/Caches/go-build" GOENV="/Users/cameron.bedard/Library/Application Support/go/env" GOEXE="" GOEXPERIMENT="" GOFLAGS="" GOHOSTARCH="amd64" GOHOSTOS="darwin" GOINSECURE="" GOMODCACHE="/Users/cameron.bedard/go/pkg/mod" GONOPROXY="" GONOSUMDB="" GOOS="darwin" GOPATH="/Users/cameron.bedard/go" GOPRIVATE="" GOPROXY="https://proxy.golang.org,direct" GOROOT="/usr/local/go" GOSUMDB="sum.golang.org" GOTMPDIR="" GOTOOLDIR="/usr/local/go/pkg/tool/darwin_amd64" GOVCS="" GOVERSION="go1.19.4" GCCGO="gccgo" GOAMD64="v1" AR="ar" CC="clang" CXX="clang++" CGO_ENABLED="1" GOMOD="/dev/null" GOWORK="" CGO_CFLAGS="-g -O2" CGO_CPPFLAGS="" CGO_CXXFLAGS="-g -O2" CGO_FFLAGS="-g -O2" CGO_LDFLAGS="-g -O2" PKG_CONFIG="pkg-config" GOGCCFLAGS="-fPIC -arch x86_64 -m64 -pthread -fno-caret-diagnostics -Qunused-arguments -fmessage-length=0 -fdebug-prefix-map=/var/folders/zs/r6mt7f1n66n9mw2rz8f9w2540000gp/T/go-build1170536968=/tmp/go-build -gno-record-gcc-switches -fno-common"

I'm a bit of a Go noob (started during Advent of Code lol), so I wasn't able to set up the UI you used, but my IDE's built-in pprof output looks similar to yours. If you want the matching profiler UI, send me a how-to link and I can set it up :)

TestInterleavedIO

TestSequentialIO

What version of Go are you using (go version)?

This is on macOS 12.6.1 with an M1 chip, but the problem seems to affect Intel as well.

Does this issue reproduce with the latest release?

Yes.

What operating system and processor architecture are you using (go env)?

What did you do?

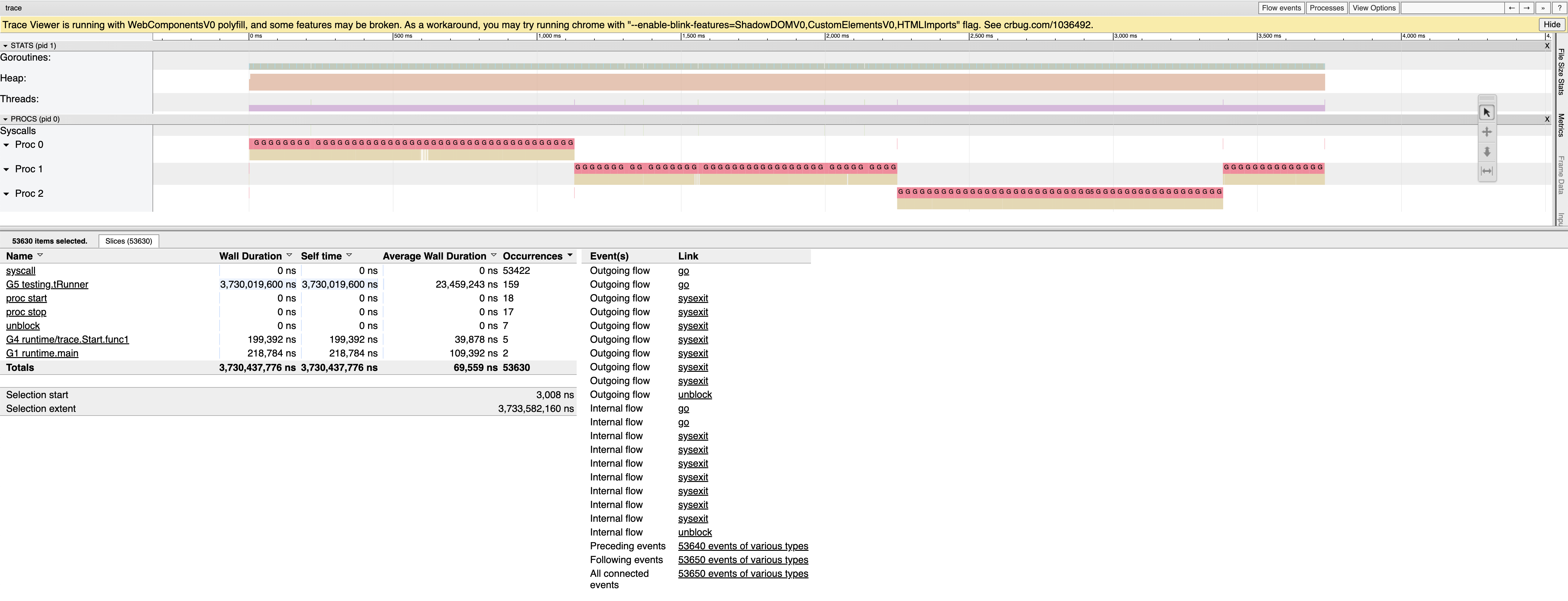

Create a CPU profile of a CPU-bound workload that is interleaved with short system calls.

For example, TestInterleavedIO is a reproducer that compresses a 250 MB file containing random data.

What did you expect to see?

A profile that is similar to the one shown below for Linux. Most of the time should be spent on gzip compression, and a little bit on syscalls.

What did you see instead?

On macOS, the majority of time is attributed to read and write syscalls. The gzip compression barely shows up at all.

Sanity Check

TestSequentialIO implements the same workload, but instead of interleaved I/O, it does one big read first, followed by gzip compression, followed by a big write.

As expected, Linux produces a profile dominated by gzip compression.

And macOS now shows the right profile too.

Conclusion

macOS setitimer(2) seems to bias towards syscalls. This issue was reported and fixed in the past, see https://github.com/golang/go/issues/17406 (2016) and https://github.com/golang/go/issues/6047 (2013). So this could be a macOS regression.

I've uploaded all code, pprofs and screenshots to this Google Drive folder.