Without the full source its very hard to validate anything I'm afraid but if LPOP was broken I suspect we would see lots of reports.

My guess would be either:

- You're using

rconnin multiplegoroutinesconcurrently. - The source data is some how corrupted and that's what is in the key

"sm:"+host+":work"

One other thing I did spot is "sm:"+host+":work" is loop invariant so you could calculate that once, but unless host is also being used by multiple goroutines that shouldn't cause the behaviour you're describing.

This will be a long read since I have to give a lot of context of what's going on, so please bare with me.

First of all, I'm running Go

v1.15.6on a Windows 10 machine, Redisv6.0.6and Redigov1.8.3.I'm writing a sort of website scraper with Redis as link state storage. I'm using a goroutine worker model without channels (explained below).

I have 3 lists:

active,disabledandnotfound. They contain links stored without the domain (aaa, aab, aac, ...) and there's about 1.6 million items in total. For scraping, say,notfoundI firstRENAMEit towork, thenLPOPfrom that, make the request, andRPUSHit into the respective lists.The website I'm scraping has a link checker "API" which I make use of, and it limits the checking to only 200 links at a time so each worker runs

LPOP200 times in a loop (I'm still on Redis 6.0 so I can't specify a pop count), then puts each link on a variable like this:links += link + "\n"(the "API" takes one item per line) then use that as the POST request data.Here's the relevant code

```go var links string for i := 0; i < 200; i++ { link, err := redis.String(rconn.Do("LPOP", "sm:"+host+":work")) if err != nil { if err == redis.ErrNil { break // list empty, no more work to do } fmt.Printf("[Worker %2d] LPOP work error: %s\nWaiting 5 seconds before continuing.\n", wn, err) time.Sleep(time.Second * 5) } links += link + "\n" } ```So, here comes the weird part.. I started noticing some anomalies in the output, where the first link (and the second, as you'll see later) of the 200 would sometimes be a huge string containing 200 links, separated by space as you can see in the screenshots below.

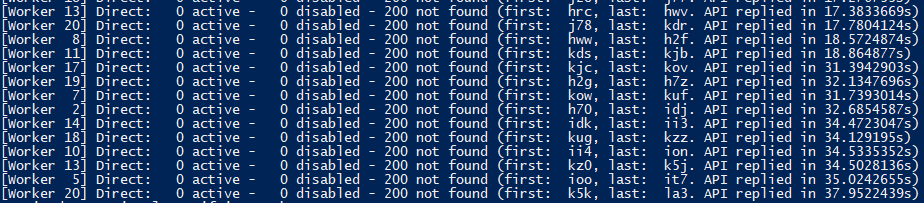

Normal operation

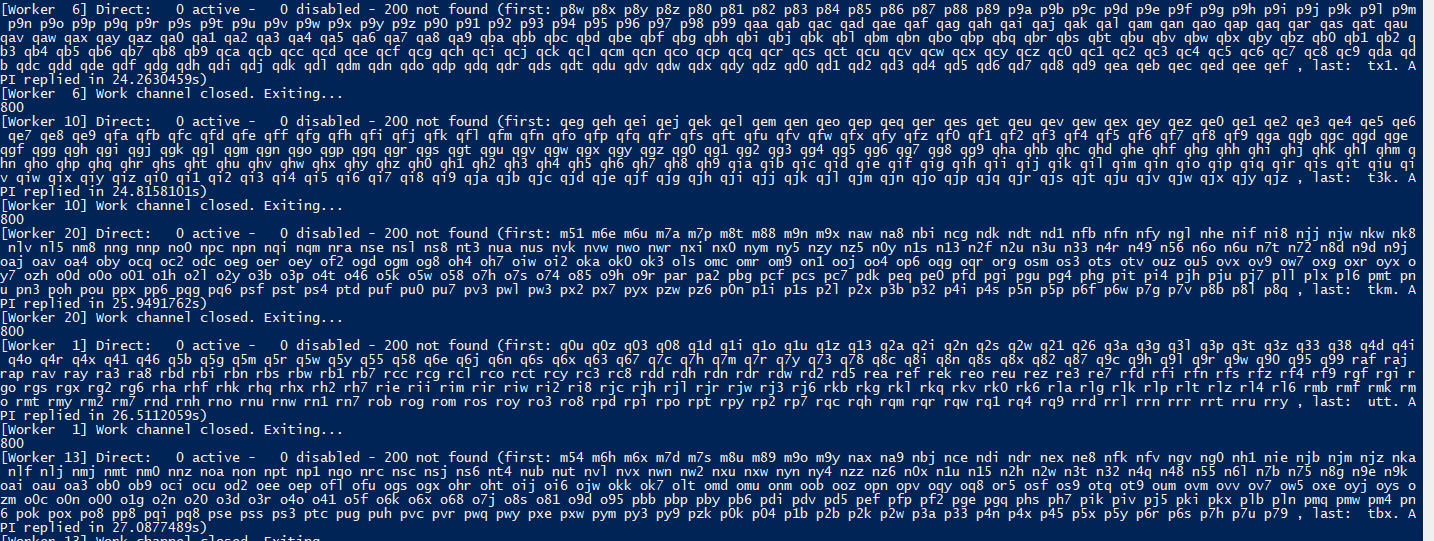

Abnormal operation

(please ignore the "800" prints on the left as it was part of the debugging process) (the "Worker channel closed" messages were also expected and have nothing to do with the bug)Note that this behavior is completely random, hence very difficult to debug. You can also notice in the second screenshot that when the bug occurs, it usually repeats itself a few times (on multiple workers) before going back to normal.

I started trying multiple things until I narrowed it down to the LPOP command in the loop. I've set up some code that dumps the whole

linksvariable right after the loop if it detects an anomaly, and this was the result:dump.txt

``` di40 di44 di46 di47 di5a di5c di5f di5k di5m di5o di5q di5s di5u di5w di5z di50 di53 di55 di57 di59 di6c di6f di6i di6j di6m di6n di6p di6r di6t di6v di6x di6z di61 di65 di66 di69 di7b di7e di7f di7h di7k di7m di7n di7p di7r di7t di7v di7x di70 di72 di73 di76 di78 di8a di8c di8e di8g di8i di8k di8m di8p di8q di8s di8u di8w di8y di80 di83 di85 di87 di89 di9a di9c di9f di9h di9i di9k di9m di9o di9q di9s di9u di9v di9y di90 di91 di94 di96 di98 djaa djab djad djaf djah djaj djak djam djap djaq djat djav djaw djay dja0 dja2 dja4 dja5 dja6 dja8 djba djbd djbe djbh djbi djbk djbn djbo djbq djbs djbv djbw djbz djb0 djb2 djb5 djb6 djb8 djb9 djca djcb djcc djcd djce djcf djcg djch djci djcj djck djcl djcm djcn djco djcp djcq djcr djcs djct djcu djcv djcw djcx djcy djcz djc0 djc1 djc2 djc3 djc4 djc5 djc6 djc7 djc8 djc9 djda djdb djdc djdd djde djdf djdg djdh djdi djdj djdk djdl djdm djdn djdo djdp djdq djdr djds djdt djdu djdv djdw djdx djdy djdz djd0 djd1 djd2 djd3 djd4 djd5 djd6 djd7 djd8 djd9 dhuq dhur dhus dhut dhuu dhuv dhuw dhux dhuy dhuz dhu0 dhu1 dhu2 dhu3 dhu4 dhu5 dhu6 dhu7 dhu8 dhu9 dhva dhvb dhvc dhvd dhve dhvf dhvg dhvh dhvi dhvj dhvk dhvl dhvm dhvn dhvo dhvp dhvq dhvr dhvs dhvt dhvu dhvv dhvw dhvx dhvy dhvz dhv0 dhv1 dhv2 dhv3 dhv4 dhv5 dhv6 dhv7 dhv8 dhv9 dhwa dhwb dhwc dhwd dhwe dhwf dhwg dhwh dhwi dhwj dhwk dhwl dhwm dhwn dhwo dhwp dhwq dhwr dhws dhwt dhwu dhwv dhww dhwx dhwy dhwz dhw0 dhw1 dhw2 dhw3 dhw4 dhw5 dhw6 dhw7 dhw8 dhw9 dhxa dhxb dhxc dhxd dhxe dhxf dhxg dhxh dhxi dhxj dhxk dhxl dhxm dhxn dhxo dhxp dhxq dhxr dhxs dhxt dhxu dhxv dhxw dhxx dhxy dhxz dhx0 dhx1 dhx2 dhx3 dhx4 dhx5 dhx6 dhx7 dhx8 dhx9 dhya dhyb dhyc dhyd dhye dhyf dhyg dhyh dhyi dhyj dhyk dhyl dhym dhyn dhyo dhyp dhyq dhyr dhys dhyt dhyu dhyv dhyw dhyx dhyy dhyz dhy0 dhy1 dhy2 dhy3 dhy4 dhy5 dhy6 dhy7 dhy8 dhy9 dhza dhzb dhzc dhzd dhze dhzf dhzg dhzh dhzi dhzj dhzk dhzl dhzm dhzn dhzo dhzp dhzq dhzr dhzs dhzt dhzu dhzv dhzw dhzx dhzy dhzz dhz0 dhz1 dhz2 dhz3 dhz4 dhz5 dhz6 dhz7 dhz8 dhz9 dj57 dj6f dj6v dj65 dj7c dj7o dj7z dj8d dj8n dj8x dj87 dj9h [...continues normally] ```As you can see, the first two lines contain 200 links, separated by space. And at least this part of the bug was very consistent.

I checked the code multiple times, looking for racy code but found nothing. It works perfectly fine 99% of the time, even with 20+ concurrent workers. I also triple-checked the source redis lists but everything is in it's place.

Another weird thing is that this bug is pretty much exclusive to this website. There's actually other 2 similar ones with the same "API", but the bug only happens with this one, which happens to be quite slower and unstable (returning 502 errors and timing out) which makes me wonder if it's somehow related...? but how could it be?

I have absolutely no clue of what could be happening here or how to dig deeper into the issue, so suggestions are appreciated.

Thank you!