Hi Trevor, `hk.cond` passes all Haiku state in and out of the cond so that module parameters and state can be created or updated inside the branches. In this case, it looks to me like your branch functions do not actually call into Haiku modules, so you can probably just use a regular `jax.lax.cond` (which will not have all the extra operands).
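A minimal sketch of that suggestion follows. It assumes the reset simply zeroes both arrays every `RESET_EVERY` calls; `RESET_EVERY` and `maybe_reset` are hypothetical names, since the original cond isn't shown. Because the branch functions only take `graph` and `edge_counts`, the cond carries just those two arrays:

```python
import jax
import jax.numpy as jnp

# Hypothetical stand-in for the static `max_graph_nodes` int; the real
# reset condition from the question is not shown, so this is an assumption.
RESET_EVERY = 4


def maybe_reset(current_index, graph, edge_counts):
    """Zero `graph` and `edge_counts` every RESET_EVERY calls.

    Both branches are pure functions of their operands (no Haiku modules),
    so plain jax.lax.cond is enough and only these two arrays are passed in.
    """

    def reset(operands):
        g, e = operands
        return jnp.zeros_like(g), jnp.zeros_like(e)

    def keep(operands):
        # Pass the arrays through unchanged.
        return operands

    return jax.lax.cond(
        current_index % RESET_EVERY == 0,
        reset,
        keep,
        (graph, edge_counts),
    )


maybe_reset_jit = jax.jit(maybe_reset)
```

Inside an `hk.transform_with_state` function, you would read the arrays with `hk.get_state`, call `maybe_reset`, and write the results back with `hk.set_state`, so the cond itself never touches the rest of the Haiku state.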

JAX made a change earlier this year known as "omnistaging", and I think it may allow us to pass state only out of the cond (rather than in). It's possible we could also optimise the branch functions to return only the updated state. I'll take a look later this week. This would mean that if you did have to use `hk.cond` (e.g. because you used Haiku modules inside the branch functions), we could make it a lot more minimal.

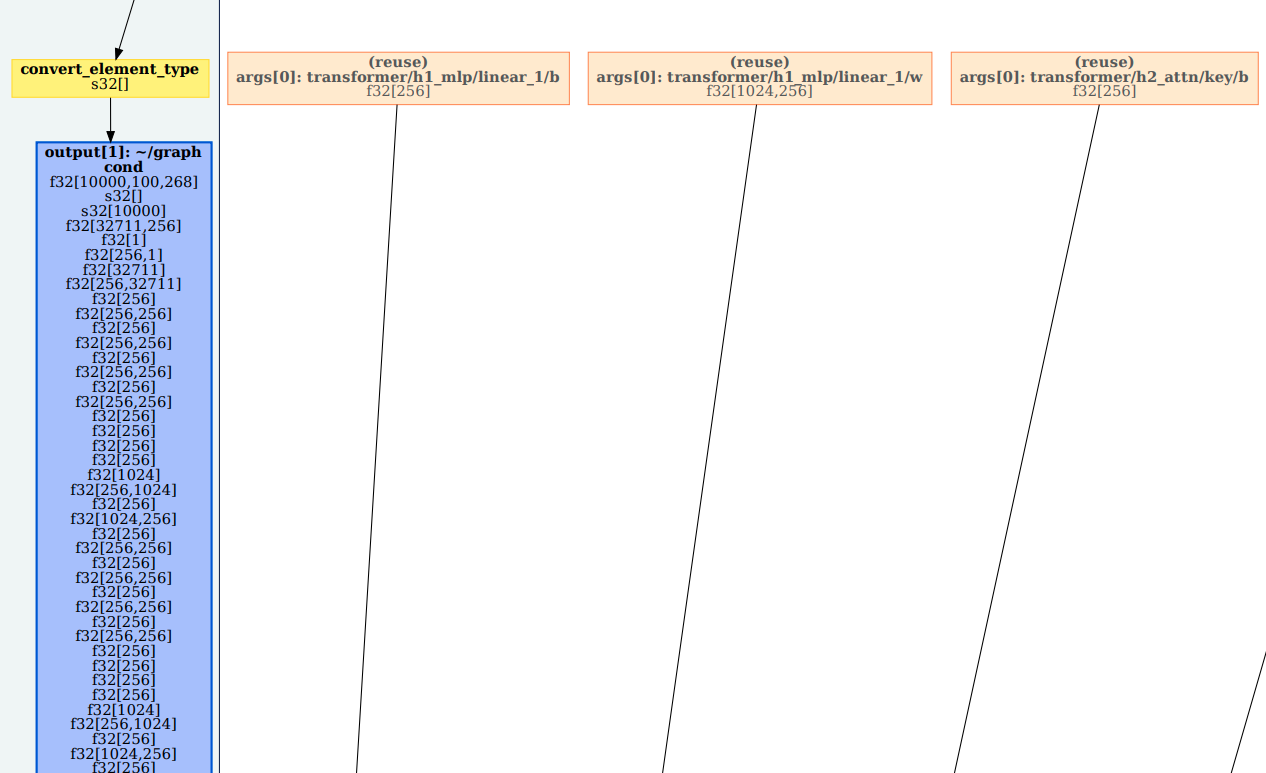

I was experiencing some slowness in my forward function, so I tried using the experimental visualization tool to debug it. One part of it in particular stuck out:

It goes on even further to the right. Up close, that blue line is a giant cond:

I checked my code, and I narrowed it down to this cond. Commenting it out removes that weird bit from the graph and speeds up the function's execution by ~20%.

`graph` and `edge_counts` are very large ndarrays. `current_index` is a counter that's incremented each time the function is called, and `max_graph_nodes` is just a static int. (Basically, I'm trying to re-initialize the graph object once every few thousand times through the function.) `graph` stores values output by some of the transformers in my model, which I'm guessing is why you can see them feeding into the cond in the graph.

I could understand it if JAX were just compiling around these objects in a weird way, but the fact that the function slows down so much when I add a cond, which I don't expect to fire very often, makes me think that I'm doing something wrong with the Haiku state. Are there any special considerations that need to be taken with these objects to avoid the compiler behaving this way?
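One way to check which arrays feed into a cond, without relying on the visualizer, is to print the jaxpr with `jax.make_jaxpr`. The variables listed after the `cond[...]` parameters are its operands, and an array computed inside the traced function that a branch captures from the enclosing scope typically shows up there too. Here is a minimal sketch; `reset_step` and the reset period of 1000 are hypothetical stand-ins for the code in the question:

```python
import jax
import jax.numpy as jnp


# Hypothetical stand-in for the reset logic in the question: zero both arrays
# every 1000 calls, passing only those two arrays into the cond.
def reset_step(current_index, graph, edge_counts):
    return jax.lax.cond(
        current_index % 1000 == 0,
        lambda ops: tuple(jnp.zeros_like(x) for x in ops),  # reset branch
        lambda ops: ops,  # keep branch: pass the arrays through unchanged
        (graph, edge_counts),
    )


jaxpr = jax.make_jaxpr(reset_step)(
    jnp.int32(0), jnp.ones((4, 4)), jnp.ones((4,), dtype=jnp.int32)
)
text = str(jaxpr)
# The `cond[...]` equation lists its operands after the bracketed params.
print(text)
```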