The data creation looks okay.

It's important to point out that only the files in the tf_examples directory are in TF Example format. The files in the interactions directory are also TFRecords, but they hold serialized interaction protos.
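
Both directories share the same TFRecord container; only the payload inside each record differs. The sketch below is a hedged, pure-Python illustration of that container, not TAPAS or TensorFlow code. It assumes the on-disk layout described in the TensorFlow TFRecord guide: a little-endian uint64 length, a masked CRC-32C of the length, the payload bytes, and a masked CRC-32C of the payload. The helpers `write_record` and `read_records` are hypothetical names. The reader only yields raw bytes, so whether you then call `tf.train.Example().ParseFromString` or parse an interaction proto depends on which directory the file came from.

```python
import io
import struct
from typing import BinaryIO, Iterator


def crc32c(data: bytes) -> int:
    """Bitwise CRC-32C (Castagnoli), reflected polynomial 0x82F63B78."""
    crc = 0xFFFFFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            # Shift right; XOR in the polynomial when the low bit was set.
            crc = (crc >> 1) ^ (0x82F63B78 & -(crc & 1))
    return crc ^ 0xFFFFFFFF


def masked_crc(data: bytes) -> int:
    """TFRecord CRC masking: rotate the CRC right by 15 bits, then add a constant."""
    crc = crc32c(data)
    return (((crc >> 15) | (crc << 17)) + 0xA282EAD8) & 0xFFFFFFFF


def _read_u32(f: BinaryIO) -> int:
    raw = f.read(4)
    if len(raw) != 4:
        raise ValueError("truncated record")
    return struct.unpack("<I", raw)[0]


def write_record(f: BinaryIO, payload: bytes) -> None:
    """Hypothetical helper: append one record (length, CRC, payload, CRC)."""
    header = struct.pack("<Q", len(payload))
    f.write(header)
    f.write(struct.pack("<I", masked_crc(header)))
    f.write(payload)
    f.write(struct.pack("<I", masked_crc(payload)))


def read_records(f: BinaryIO) -> Iterator[bytes]:
    """Hypothetical helper: yield raw payloads; the framing does not say which proto is inside."""
    while True:
        header = f.read(8)
        if not header:
            return  # clean end of file
        if len(header) != 8:
            raise ValueError("truncated record header")
        (length,) = struct.unpack("<Q", header)
        if _read_u32(f) != masked_crc(header):
            raise ValueError("corrupt length CRC")
        payload = f.read(length)
        if len(payload) != length or _read_u32(f) != masked_crc(payload):
            raise ValueError("corrupt or truncated payload")
        yield payload


# Usage: round-trip two opaque payloads through an in-memory buffer.
buf = io.BytesIO()
for payload in (b"first", b"second"):
    write_record(buf, payload)
buf.seek(0)
print(list(read_records(buf)))  # [b'first', b'second']
```

Because the reader never inspects the payload, a file from the interactions directory reads back without error here; the failure only shows up when those bytes are parsed as the wrong message type.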

Which files are you trying to open?

I am wondering whether it's a TF 1 vs. TF 2 issue.

Can you try this:

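```python
import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()


def iterate_examples(filepath):
    """Yield each record in a TFRecord file, parsed as a tf.train.Example."""
    for value in tf.python_io.tf_record_iterator(filepath):
        example = tf.train.Example()
        example.ParseFromString(value)
        yield example
```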

I ran the following command to create TFRecords from the SQA TSV files (I'm on Windows with Python 3.6.4, and I installed the protobuf compiler and the tapas package as explained in your README):

This printed the following:

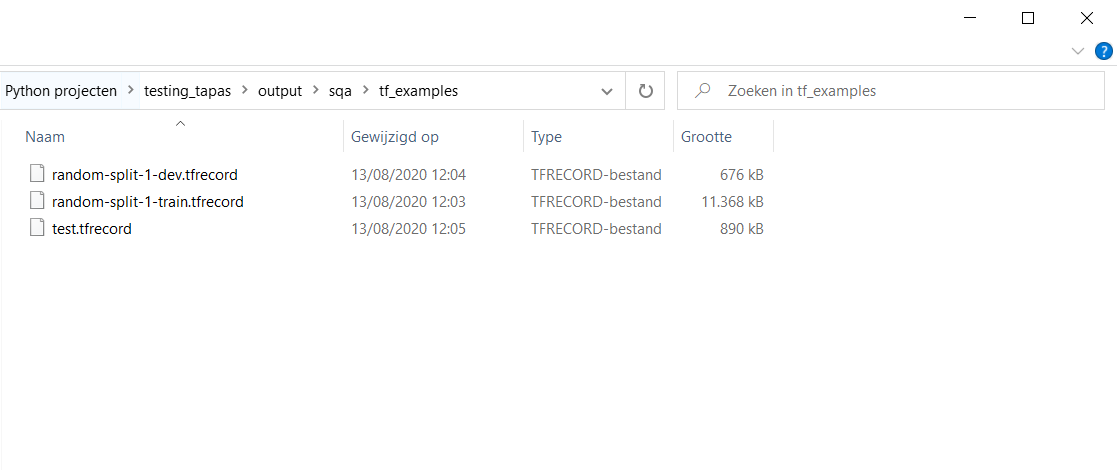

This created two directories inside the "output" directory: "interactions" and "tf_examples". In the "tf_examples" directory, only the first random split of train + dev seems to have been created:

However, parsing these TFRecord files as strings (as explained in the TensorFlow docs) results in an error:

Am I doing something wrong here?