- In my opinion, the 58x speedup mentioned in the Github Readme over archivemount is misleading because the mount point is not ready for usage at that point yet! I do not see the importance of daemonizing before or after the bulk of processing has been done.

It is important for me because I have a separate GUI controller program that fork/exec's fuse-archive and synchronously waits for the exit code (e.g. to pop up a password dialog box if the archive is encrypted). Actually listing the archive's contents in the GUI can happen asynchronously, but I still don't want the GUI to block for long when waiting for an exit code (i.e. daemonization). "Wait for the exit code" could admittedly be async but it's simpler to be sync, and it's also nice for the password dialog box to pop up sooner (< 1 second) rather than later (10s of seconds).

- I could not reproduce a general 682x speedup for copying at contents of an uncompressed file (lower left chart).

Can you reproduce the 682x speedup from the example in fuse-archive's top level README.md? It's the section starting with truncate --size=256M zeroes.

But also, that particular example demonstrates archivemount's quadratic O(N*N) versus fuse-archive's linear O(N), which is a ratio of N (ignoring constant factors), 256M in this case. Supplying a different archive file with a different N, larger or smaller, will produce a different speedup ratio, larger or smaller.

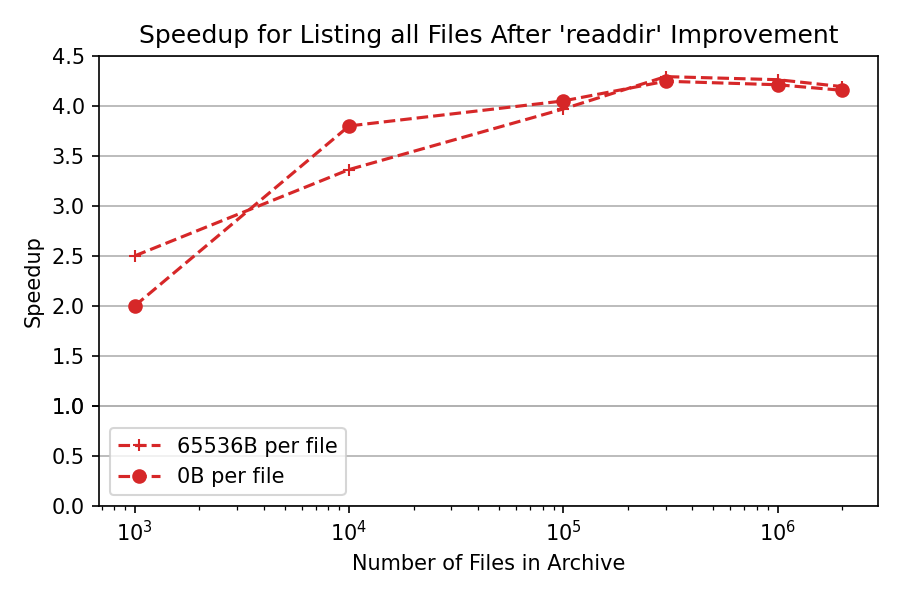

- The time for a simple find inside the mount point is similar to ratarmount, which is a magnitude slower than archivemount. This is surprising but exciting because I'm trying to track down why ratarmount is slower than archivemount in that metric since starting work on ratarmount! Do you have an idea, what you might do differently to archivemount? Could it be because archivemount uses the FUSE low-level interface?

I don't have an idea, but I wouldn't rule out the FUSE low-level API or richer readdir hypotheses. Did your further investigations find anything?

Hello again and thank you for fixing my last issue even on New Year's Day :).

I tried to include fuse-archive in my performance comparison and I noticed some things, which beget questions. First, here are my preliminary results as tested on an encrypted Intel SSD 660p 2TB:

fusearchive() { fuse-archive "$@" && stat -- "${@: -1}" &>/dev/null; }because fuse-archive daemonizes even when the mount point is not ready yet. I'm waiting for it to be ready by simply stat-ing the mount point, which hangs until then. Also, I do see an indicator, that the quadratic scaling issue has been fixed in the tests for the archives with 0B-files (plus sign markers all line styles). Starting from around 300k files, archivemount (blue lines) seem to begin to take quadratically (very reproducibly) more time whereas fuse-archive (green lines) stays O(n) and becomes considerably faster compared to archivemount.--sort=nameoption. Because then, I can infer the position in the archive by the name and can test with e.g., only the last file in the archive to trigger the worst-case scenario.The benchmark script can be found here. I did not yet push the changes necessary for also benchmarking fuse-archive.