This issue is intermittent, and because it's not catchable, it's resulting in 'out-of-whack' errors in the database. Without deploying anything differently, the code now 'just works' on the same retries.

Closed timhj closed 4 years ago


The Vision API request may have been failing behind the scenes, causing this issue, because it asked for JSON output to be written to a non-existent GCS bucket... As the issue is intermittent, I'm not sure, so I will watch and see what happens.

I'm having the same problem with various libraries inside cloud functions.

This example listens for a bucket onFinalize event and sends a single HTTP task with the payload to a Cloud Tasks queue.

It seems that my function works fine when it's first deployed. Then after some time (perhaps after it scales to zero and is re-triggered), it always fails until I redeploy it.

I'm using the nodejs10 runtime with "@google-cloud/tasks": "^1.4.0".

Code

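```javascript
const { v2beta3 } = require('@google-cloud/tasks')

// Created once per instance and reused across invocations.
const client = new v2beta3.CloudTasksClient()

// Enqueue a single HTTP task whose body is the JSON-encoded payload.
const queue = body => {
  return client.createTask({
    parent: process.env.QUEUE_URL, // queue resource name: projects/P/locations/L/queues/Q
    task: {
      httpRequest: {
        httpMethod: 'POST',
        url: process.env.TASK_URL,
        headers: { 'Content-Type': 'application/json' },
        body: Buffer.from(JSON.stringify(body)),
        // OIDC token lets the target endpoint verify the calling service account.
        oidcToken: { serviceAccountEmail: process.env.SERVICE_ACCOUNT_EMAIL }
      }
    }
  })
}

// Background function triggered by the bucket's onFinalize event.
exports.default = async file => {
  await queue(file)
  console.info(`DONE: ${file.name} queued`)
}
```

I have also been experiencing this issue for quite some time now. It happens in my project all the time; let me know if I can be of any help debugging it.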

@merlinnot what type of authentication are you using in your project, and which APIs specifically?

Firestore, BigQuery, Debugger, ...

Given the stack traces, the error observed originates here: https://github.com/googleapis/google-auth-library-nodejs/blob/944e2aa62a61c253ba153f49590d7416585c64eb/src/auth/googleauth.ts#L291-L296

As you can see, it is thrown if and only if the isGCE variable is falsy. Its value is the result of calling the _checkIsGCE function: https://github.com/googleapis/google-auth-library-nodejs/blob/944e2aa62a61c253ba153f49590d7416585c64eb/src/auth/googleauth.ts#L311-L316

This function in turn calls the isAvailable function from the gcp-metadata library:

https://github.com/googleapis/gcp-metadata/blob/25bc11657001cb6b3807543377d74bafe126ea62/src/index.ts#L121-L142

As you can see, it depends on the metadataAccessor function:

https://github.com/googleapis/gcp-metadata/blob/25bc11657001cb6b3807543377d74bafe126ea62/src/index.ts#L49

This function makes an HTTP request to http://169.254.169.254/computeMetadata/v1/ here: https://github.com/googleapis/gcp-metadata/blob/25bc11657001cb6b3807543377d74bafe126ea62/src/index.ts#L66

I see no other way for this error to occur other than requests to this service failing.
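The availability check traced above can be approximated with a small probe. This is a minimal sketch, not the gcp-metadata implementation: it sends a GET to the metadata server's /computeMetadata/v1/instance path with the Metadata-Flavor: Google header and treats a timeout or connection error as "not on GCP". The helper name probeMetadata and its defaults are assumptions; the 3000 ms default mirrors the timeout: 3000 in the error config posted later in this thread. If requests like this time out intermittently, every library relying on default credentials would fail in the way described.

```javascript
const http = require('http');

/**
 * Hypothetical helper approximating the gcp-metadata availability check:
 * resolves true only if a metadata server answers within timeoutMs with
 * HTTP 200 and the `Metadata-Flavor: Google` response header.
 * Timeouts, connection errors and non-Google responses resolve false.
 */
function probeMetadata({ host = '169.254.169.254', port = 80, timeoutMs = 3000 } = {}) {
  return new Promise(resolve => {
    const req = http.get(
      {
        host,
        port,
        path: '/computeMetadata/v1/instance',
        headers: { 'Metadata-Flavor': 'Google' },
        timeout: timeoutMs,
      },
      res => {
        res.resume(); // drain the body; only the status and headers matter
        resolve(res.statusCode === 200 && res.headers['metadata-flavor'] === 'Google');
      }
    );
    // 'timeout' only signals an idle socket; destroy the request so it errors out.
    req.on('timeout', () => req.destroy(new Error('metadata probe timed out')));
    req.on('error', () => resolve(false));
  });
}
```

For example, logging the result of probeMetadata() at cold start could help correlate the credentials errors with metadata server timeouts.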

I'm currently redeploying all of the functions with additional logging enabled (DEBUG_AUTH). Will post here as soon as I have a hit.

In the last 24 hours I had 71,092 occurrences of this error, but it was last seen 5 hours ago... I thought I'd be able to provide more information straight away, since this error used to happen all the time.

@merlinnot as you noticed, I've deployed a version of gcp-metadata with a debug option. I'd double check that your package-lock.json has gcp-metadata@3.2.0, at which point we should get a better picture of what's happening the next time you run into issues.
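Because each Google client library can carry its own nested copy of its auth dependencies (a stack trace later in this thread points into @google-cloud/logging/node_modules/google-auth-library), one way to double-check which gcp-metadata versions are actually installed is to scan the lockfile. A minimal sketch, assuming a standard npm package-lock.json in either the older nested format (lockfileVersion 1) or the flat packages map (lockfileVersion 2/3); findLockedVersions is a hypothetical helper name.

```javascript
/**
 * Hypothetical helper: collect every installed copy of `pkgName` recorded in a
 * parsed package-lock.json. Returns an array of { path, version } entries.
 */
function findLockedVersions(lock, pkgName) {
  const found = [];
  // lockfileVersion 2/3: flat map keyed by node_modules paths.
  if (lock.packages) {
    for (const [path, info] of Object.entries(lock.packages)) {
      if (path === `node_modules/${pkgName}` || path.endsWith(`/node_modules/${pkgName}`)) {
        found.push({ path, version: info.version });
      }
    }
    return found;
  }
  // lockfileVersion 1: walk the nested `dependencies` trees.
  const walk = (deps, prefix) => {
    for (const [name, info] of Object.entries(deps || {})) {
      const path = `${prefix}node_modules/${name}`;
      if (name === pkgName) found.push({ path, version: info.version });
      walk(info.dependencies, `${path}/`);
    }
  };
  walk(lock.dependencies, '');
  return found;
}
```

For example, findLockedVersions(require('./package-lock.json'), 'gcp-metadata') lists one entry per installed copy; npm ls gcp-metadata gives a similar view from the command line.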

@bcoe Experiencing the same issue on a few functions on our side.

I can confirm @merlinnot's suspicion that requests to the metadata service are failing.

It's back :)

{ FetchError: network timeout at: http://metadata.google.internal./computeMetadata/v1/instance

at Timeout.<anonymous> (/srv/functions/node_modules/node-fetch/lib/index.js:1448:13)

at ontimeout (timers.js:436:11)

at tryOnTimeout (timers.js:300:5)

at listOnTimeout (timers.js:263:5)

at Timer.processTimers (timers.js:223:10)

message:

'network timeout at: http://metadata.google.internal./computeMetadata/v1/instance',

type: 'request-timeout',

config:

{ url:

'http://metadata.google.internal./computeMetadata/v1/instance',

headers: { 'Metadata-Flavor': 'Google' },

retryConfig:

{ noResponseRetries: 0,

currentRetryAttempt: 0,

retry: 3,

retryDelay: 100,

httpMethodsToRetry: [Array],

statusCodesToRetry: [Array] },

responseType: 'text',

timeout: 3000,

params: [Object: null prototype] {},

paramsSerializer: [Function: paramsSerializer],

validateStatus: [Function: validateStatus],

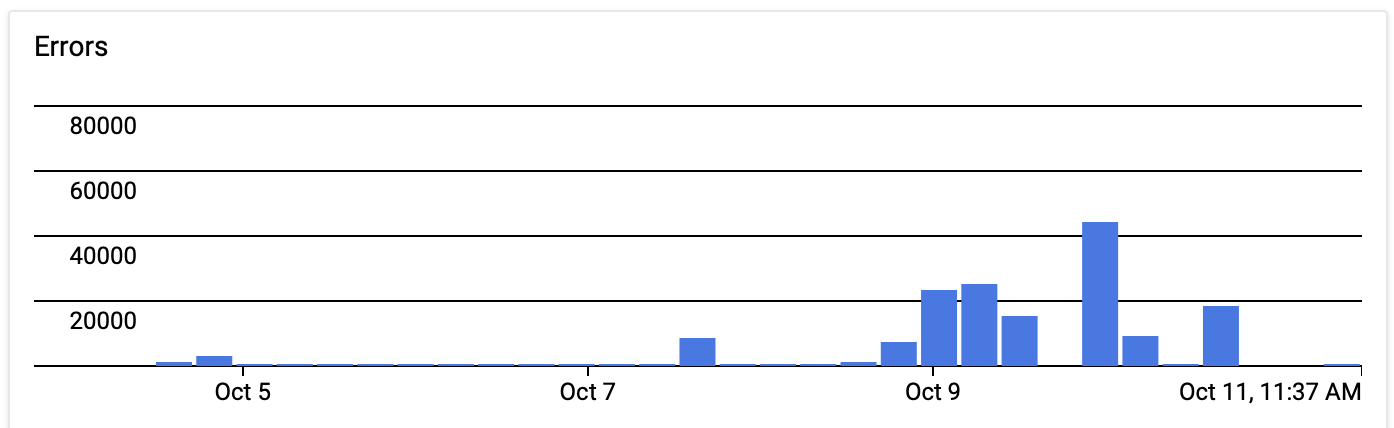

method: 'GET' } }Here's a timeline for the last 30 days:

And for the last 7 days:

Same problem...

@merlinnot @BluebambooSRL thank you, this gives us some valuable forensic information for the engineering team :+1:
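One detail in the config above: retry: 3 is set, but noResponseRetries: 0. In gaxios's retry options, noResponseRetries governs retries when no response arrives at all, so, as far as I can tell, this timeout was not retried. Until the root cause is fixed, an application-level retry around the failing call is one possible mitigation. The sketch below is a generic exponential-backoff helper; withRetry and isTransientAuthError are hypothetical names, and matching on the error type and message is an assumption based on the errors quoted in this thread.

```javascript
/**
 * Hypothetical helper: call `fn` until it resolves, retrying up to `retries`
 * extra times when `isRetryable(err)` is true. Waits baseDelayMs * 2^attempt
 * between attempts; non-retryable errors are rethrown immediately.
 */
async function withRetry(fn, { retries = 3, baseDelayMs = 100, isRetryable = () => true } = {}) {
  for (let attempt = 0; ; attempt++) {
    try {
      return await fn();
    } catch (err) {
      if (attempt >= retries || !isRetryable(err)) throw err;
      const delay = baseDelayMs * 2 ** attempt;
      await new Promise(resolve => setTimeout(resolve, delay));
    }
  }
}

// Treat the node-fetch timeout seen in the trace (type 'request-timeout') and the
// resulting credentials error as transient. The message match is an assumption.
function isTransientAuthError(err) {
  return Boolean(
    (err && err.type === 'request-timeout') ||
      /Could not load the default credentials/.test(String(err && err.message))
  );
}
```

For instance, await withRetry(() => queue(file), { isRetryable: isTransientAuthError }) in the Cloud Tasks example earlier. Retrying a call that is not idempotent can create duplicates, so only wrap calls that are safe to repeat.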

Any remedy for this? I'm experiencing it in the same circumstances (Vision API in GCF, Node v8).

Edit: Odd thing is that I didn't change any deps or code. Just ran firebase deploy to update some unrelated code and then it started happening.

I don't think it's related to dependencies. In my case, redeployments also change the behavior of these errors: sometimes I have more, sometimes fewer (see the chart above), while the number of executions per day is fairly stable.

My wild guess would be that it just depends on which node in the underlying infrastructure the code lands on. Maybe redeploying again would help in your case?

@merlinnot Yes I just redeployed it with ^@google-cloud/vision@1.5.0 (before it was 1.4.0) and it started working again.

Sadly, in europe-west2 it seems like all underlying nodes have this issue. Redeploying the function a bunch of times has not really alleviated things for us.

Unfortunately, I'm running into this issue as well. It's happening pretty consistently for me at the moment, and it started after a recent full deploy of all my cloud functions. I'm receiving "Error: Could not load the default credentials." followed by "Unhandled error Error: Can't set headers after they are sent." (most likely from "Ignoring exception from a finished function"). I'm a bit out of ideas on this one. I'll keep poking at it.

For me it's happening at:

GoogleAuth.getApplicationDefaultAsync (/srv/node_modules/@google-cloud/logging/node_modules/google-auth-library/build/src/auth/googleauth.js:161:19)

Commenting out all logger.debug() calls within the functions seems to fix the issue for me. But... no logs. I wonder why auth is failing for it now.

Same here. I have several apps, and none of them are failing with this error.

But one of them, after I recently upgraded my functions' dependencies, started producing the same error as above.

Weird thing is, given there are two functions, only one triggers this error.

I have no outside libraries or network requests, no APIs being used, simple firestore document triggers and updates.

So while I’m using the latest version of everything, only one function out of the two is randomly failing.

I've had 11 failures during the past 5 days. My client is losing revenue because of those failures, so it's a bit worrying.

@ollydixon this thread is specifically related to authentication issues with cloud functions, which I think is potentially related to something specifically happening within this environment.

Could I bother you to open a new issue with more specifics about the environment you're running in and the steps you're using to bootstrap your application?

@edi, @davedc, @smasha :wave: sorry about your frustration, I've raised an internal issue with the Cloud Functions folks (which is why this is labeled external), and am going to follow up again today.

There's an internal issue with the GCF folks that has been updated throughout the day; we're trying to find the root cause of the timeouts that occur when connecting to the metadata server (this is what in turn results in the credentials issue).

A potential workaround would be to create a service account, rather than relying on the default credentials:

https://cloud.google.com/docs/authentication/getting-started

:point_up: this requires that the credentials are available in a file on disk, so you would need to either use the API to deploy your project, or use the file upload option (rather than the inline editor).

At that point you would set GOOGLE_APPLICATION_CREDENTIALS=./my-service-account.json.
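A minimal sketch of that setup, assuming the key file is deployed next to index.js. Resolving the path against __dirname avoids depending on the process's working directory, and failing fast on a missing file makes a packaging mistake obvious instead of silently falling back to the metadata server. Because process.env is shared by the whole Node.js process, setting the variable once at the top of the entry module, before any client is created, should be enough. useServiceAccountKey is a hypothetical helper.

```javascript
const fs = require('fs');
const path = require('path');

/**
 * Hypothetical helper: point GOOGLE_APPLICATION_CREDENTIALS at a key file that
 * ships with the function. Call once at the top of the entry module, before
 * creating any Google client. Returns the resolved key path.
 */
function useServiceAccountKey(fileName, baseDir = __dirname) {
  const keyPath = path.resolve(baseDir, fileName);
  if (!fs.existsSync(keyPath)) {
    // Fail loudly rather than silently falling back to default credentials.
    throw new Error(`Service account key not found: ${keyPath}`);
  }
  process.env.GOOGLE_APPLICATION_CREDENTIALS = keyPath;
  return keyPath;
}
```

Usage would be a single call such as useServiceAccountKey('my-service-account.json') as the first statement of index.js.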

I understand this workaround is suboptimal, and we are continuing to dig into things on our end.

I have the same problem on my new MacBook. I am able to run my functions locally on my old MacBook and iMac at the office. All using the same Firebase Functions project. So the default credential works on admin.initializeApp. I don't want to change the initialization code to use the workaround for 1 device. I will wait for the permanent fix. Please let us know when it will be fixed. Thanks.

Sounds like it might be a different issue to this one. I'd make sure you've got the appropriate environment variable set with the location of your json credentials first.

There's no implicit default credentials on a new Mac.

On Sun, Oct 27, 2019, 5:02 PM Dara-To notifications@github.com wrote:


@Dara-To I believe @timhj is correct, you will want to go through the steps outlined here:

https://cloud.google.com/docs/authentication/getting-started

These steps set up authentication on your new laptop. If you run into issues, please feel free to open an issue here.

I just saw the same error with one of our webhook functions after redeploying it. What was special about this function was that it sent the response early with resp.sendStatus(202) and did the heavy lifting afterwards. This caused the function to log "Function execution took X ms, finished with status code: 202" early. My guess is that the function then tried to fetch the default credentials but couldn't get them, because the execution was already marked as finished.

I am now writing the webhook's payload into the database before returning 202 and then using a separate cloud function to process it asynchronously. This made the error go away.
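The pattern that made the error go away, finishing all work before responding, can be sketched as an Express-style handler. In Cloud Functions, work left running after an HTTP response is sent may be throttled or cut off, which fits the early "finished with status code: 202" log. makeWebhookHandler and savePayload are hypothetical; savePayload stands in for the database write that a separate, event-triggered function later processes.

```javascript
/**
 * Hypothetical factory for an HTTP handler that persists the payload first and
 * only then acknowledges, so no work is left running after the response.
 * `savePayload` is an assumed async function (e.g. a database write).
 */
function makeWebhookHandler(savePayload) {
  return async (req, res) => {
    try {
      await savePayload(req.body); // persist first...
      res.sendStatus(202);         // ...then acknowledge
    } catch (err) {
      console.error('Failed to store webhook payload', err);
      res.sendStatus(500);
    }
  };
}
```

Wired up, this would look like exports.webhook = makeWebhookHandler(savePayload), with the heavy lifting moved into the function that reacts to the stored document.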

This started happening to one of my functions now. Is there any news regarding a fix? We are using Cloud Build for function deployment and getting a json file embedded into the build without having it in git is non-trivial.

@Tebro I see a few internal threads regarding timeout issues on the metadata server, there was one rollout today, but it looks like the issue is still periodically happening.

I will continue to keep this thread updated.

Note that specifying an explicit service account with GOOGLE_APPLICATION_CREDENTIALS should do the trick as a workaround for the time being.

@aldobaie Not the case.

It's just a request timeout when it happens. It seems the SDK can't reach one of the credentials endpoints (e.g. 169.254.169.254).

The fact that it happens randomly (for the same code) means it's not code-related.

The workaround (until they sort it) is to load your service account credentials manually like this:

```javascript
const admin = require('firebase-admin')
// Service account key downloaded from the console and deployed with the code.
const credentials = require('./credentials.json')

admin.initializeApp({
  credential: admin.credential.cert(credentials),
  databaseURL: 'https://PROJECT_ID.firebaseio.com'
})
```

I think I got it. I installed the new version of the Firebase CLI (firebase-tools) on my new laptop, whereas my iMac is still on an older version. I will confirm the version on my iMac when I get back to the office. I believe that past a certain version they removed the default credential, so admin.initializeApp(functions.config().firebase) wouldn't work anymore.

My next question is: which service account should I generate a key for? I'm confused by the many options in the documentation. My backend project is only for Cloud Functions in Node.js, with no hosting, and I want to access the Auth service. Any clarification would be appreciated.

1. Firebase service account

In the Firebase console, you could generate a key for the Firebase Admin SDK.

https://console.firebase.google.com/project/

2. App Engine default service account

This doc says to create a key for the App Engine default service account.

https://firebase.google.com/docs/functions/local-emulator

https://console.cloud.google.com/iam-admin/serviceaccounts?project=

3. New service account

This doc (linked in an earlier message in this thread) says to set up a new service account and set its role to Project Owner. https://cloud.google.com/docs/authentication/getting-started#auth-cloud-implicit-nodejs

@timhj @aldobaie @Tebro there have been some stability fixes deployed internally, are you continuing to see these issues?

@Dara-To I'm glad you've made some progress :+1: Could I bother you to open a new issue with your questions? This thread relates to specific issues we were seeing with cloud functions not loading default credentials (I don't want to lose your questions in the shuffle).

Sure thing, I can email Firebase support. Thanks

@Dara-To happy to have a tracking issue here too; starting a conversation with Firebase is probably also worthwhile, given they'll have more specific expertise.

@bcoe It still happens intermittently, but not frequently. The tight grouping of errors within microseconds of each other is consistent with it being a quick connection dropout issue.

How recent does a redeploy need to be for it to include the fix?

I'm definitely experiencing this, particularly when I have more than about 1,400 instances active; it seems some of them sputter and die with this error.

@timhj I believe the deploy was in the past week. Would you mind sharing the project identifier with me? Feel free to send it by email to bencoe [at] google.com.

It sounds promising that this error has become a rare occurrence; it makes me think the upstream issue has at least been partially addressed.

Have not seen it in a while, but the project does not have high activity at this point.

Edit: Scratch that, just did some tests and got it again.

Just to add on this, we're seeing this error as well in the context of logging-winston (https://www.npmjs.com/package/@google-cloud/logging-winston).

This happens almost every time the cloud function is redeployed (e.g. after an update). The function runs, but logging does not work. I say "almost" because from time to time it actually works, just not consistently.

Just for context, I deployed my functions - it did not work. I deployed them again and it's been working ever since. Very odd.

Redeploying works, but it's trial and error: we get the error, redeploy, and check again. We have a credentials file, but it is encrypted in Storage and we decrypt it when the function runs. Really odd behavior indeed.

Note, the workaround of specify an explicit service account, using GOOGLE_APPLICATION_CREDENTIALS should do the trick for the time being as a workaround.

Hi @bcoe, would that be an env variable set at deploy time or a file in the root of the function? Thanks!


@seriousManual I used this workaround and haven't had any errors since. I uploaded the secret in the root of the function and then declared the variable at the start of my code:

```javascript
process.env.GOOGLE_APPLICATION_CREDENTIALS = './my-secret.json';
```

Good workaround!

That said, the team still needs to dig into this: the software behaves inconsistently at scale, which points to a deeper root cause to discover and patch :)

We had the same issue in our Cloud Functions in a Firebase project, but the workaround proposed by @arfnj seems to fix it. Are there any potential security issues to take note of when doing it this way?


I appreciate the kudos, @NawarA and @runelk, but that's not my workaround! The esteemed @bcoe suggested it on October 21st and I was just sharing my implementation of it to help answer @seriousManual's question. 🙂

@bcoe, I'm not sure if you can answer this, but I'm thinking about implementing this option too.

1) How would you dynamically switch the secret.json file if you had a prod and dev environment?

2) Do you need to add process.env.GOOGLE_APPLICATION_CREDENTIALS = './my-secret.json'; at the top of every single function?

3) And what role does the service account key need to have when creating it?

Thanks

Update:

1) I tried to save both secret files in a creds folder and access them in this fashion:

```javascript
process.env.GOOGLE_APPLICATION_CREDENTIALS = `./creds/${process.env.GCP_PROJECT}-secret.json`;
```

This seemed like a good idea, but I'm on the Node 10 runtime, which no longer provides the GCP_PROJECT variable, so it's not an option.
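One way around the missing GCP_PROJECT variable is to set your own variable at deploy time, for example with gcloud functions deploy ... --set-env-vars APP_ENV=prod, and map it to a key file. A sketch under those assumptions; the variable name APP_ENV, the creds/ file layout and credentialsPathFor are all hypothetical.

```javascript
const path = require('path');

// Hypothetical mapping from a deploy-time APP_ENV value to a key file name.
const KEY_FILES = {
  dev: 'dev-secret.json',
  prod: 'prod-secret.json',
};

/**
 * Return the path of the service account key for `env`. Throws for unknown
 * environments so a typo in APP_ENV fails fast instead of silently falling
 * back to the default credentials.
 */
function credentialsPathFor(env, baseDir = path.join(__dirname, 'creds')) {
  if (!Object.prototype.hasOwnProperty.call(KEY_FILES, env)) {
    const known = Object.keys(KEY_FILES).join(', ');
    throw new Error(`Unknown APP_ENV "${env}"; expected one of: ${known}`);
  }
  return path.join(baseDir, KEY_FILES[env]);
}
```

At the top of the entry module this would be used as process.env.GOOGLE_APPLICATION_CREDENTIALS = credentialsPathFor(process.env.APP_ENV).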

I tried to use the workaround on my new MBP laptop by running export GOOGLE_APPLICATION_CREDENTIALS="path/to/key.json" in my shell session, as suggested by @bcoe, but it didn't work. I even updated all the Firebase dependencies. Why does this have to be so complicated?

Is it because of this message on my terminal?

The default interactive shell is now zsh. To update your account to use zsh, please run chsh -s /bin/zsh. For more details, please visit https://support.apple.com/kb/HT208050.

@Dara-To another option would be to install the gcloud command-line tool, run gcloud auth application-default login (to log in and store Application Default Credentials for your account), and also make sure you set a default project ID with gcloud config set project.

You can also run your application like so:

```shell
GOOGLE_APPLICATION_CREDENTIALS="path/to/key.json" node my-app.js
```

If you continue to have issues, please feel free to open a separate issue in this library or reach out to support for the specific product you're using 👍

However, I believe your issue is unrelated to this thread, which concerns folks running applications in a GCP environment (not on their local machine).

This has only recently started happening for me. Some cloud functions that use the Cloud Vision API have started failing due to an auth error. The failures seem random: requests work sometimes and fail at other times. As there is no explicit auth happening (it's the Node.js GCF runtime for an existing project), it's not clear what the issue could be.

Errors look like this:

Triggering code is:

The unhandled rejection is happening inside the Vision request's try/catch block, so there's nowhere further for me to debug. I hope someone can help or is seeing the same issue. This used to work without issue.

Environment details

Steps to reproduce