Hey! This is a common confusion between Kubernetes labels and Loki labels. Kubernetes labels on your pods do not automatically become labels in Loki, mostly because Loki indexes log streams by their label sets and works best when those sets are small and consistent.

If you want to add pod labels to your logs, you can add something like this:

    logs:
      pod_logs:
        extraRelabelingRules: |
          rule {
            source_labels = ["__meta_kubernetes_pod_label_app_kubernetes_io_instance"]
            action = "replace"
            target_label = "instance"
          }

This should copy the value of the app.kubernetes.io/instance pod label into the instance label on the logs.

This rule gets inserted into the discovery.relabel component that is used to discover the pods to gather logs from. You can read the full syntax for the rules here: https://grafana.com/docs/alloy/latest/reference/components/discovery.relabel/

To find the meta labels that are available (like __meta_kubernetes_pod_label_app_kubernetes_io_instance), you can look at this doc: https://grafana.com/docs/alloy/latest/reference/components/discovery.kubernetes/#pod-role
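As a rough sketch of how the meta label names line up with pod labels: characters that are not valid in a label name (like `.`, `/`, and `-`) are replaced with underscores, so `app.kubernetes.io/name` becomes `__meta_kubernetes_pod_label_app_kubernetes_io_name`. The pod label `team` and the target label names `app_name` and `team` below are only assumptions for illustration, so swap in whatever your pods actually have:

```yaml
logs:
  pod_logs:
    extraRelabelingRules: |
      // Pod label app.kubernetes.io/name -> Loki label "app_name" (hypothetical target name)
      rule {
        source_labels = ["__meta_kubernetes_pod_label_app_kubernetes_io_name"]
        action = "replace"
        target_label = "app_name"
      }
      // Hypothetical pod label "team" -> Loki label "team"
      rule {
        source_labels = ["__meta_kubernetes_pod_label_team"]
        action = "replace"
        target_label = "team"
      }
```

Each pod label you want in Loki gets its own rule block. It is probably worth keeping the list short and sticking to labels with a limited number of values, since every distinct label combination becomes a separate stream in Loki.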

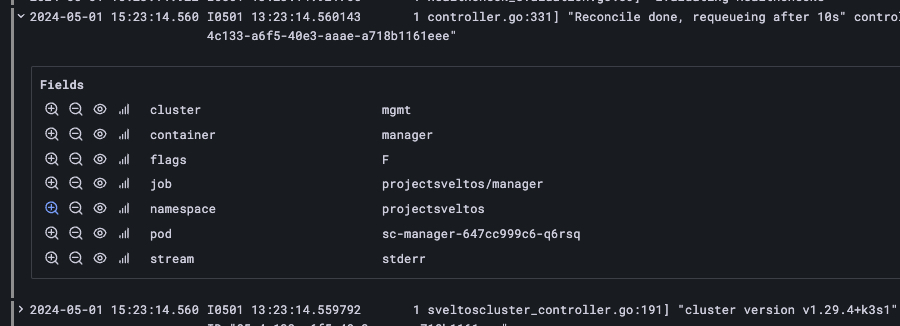

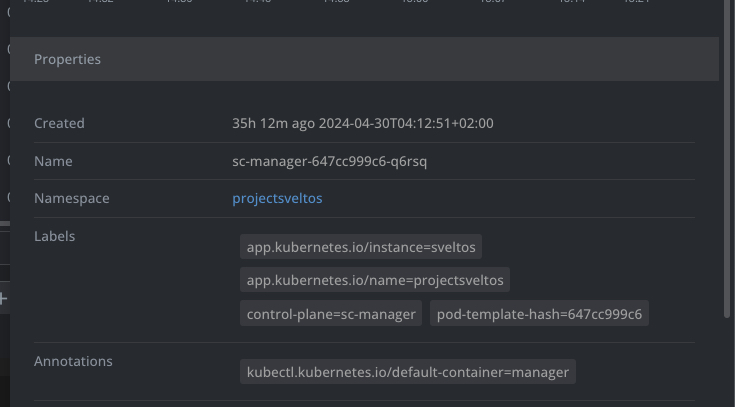

Labels are not being sent to Loki:

These labels are missing:

Config used: