@gulvarol Hi Gül, I am still confused about the 3D joint data:

1) There seems to be a left/right inconsistency: for example, the joint with index 4, which is supposed to be the left knee, appears to be the right knee instead. Therefore, a left/right swap is needed when plotting the 3D joints.

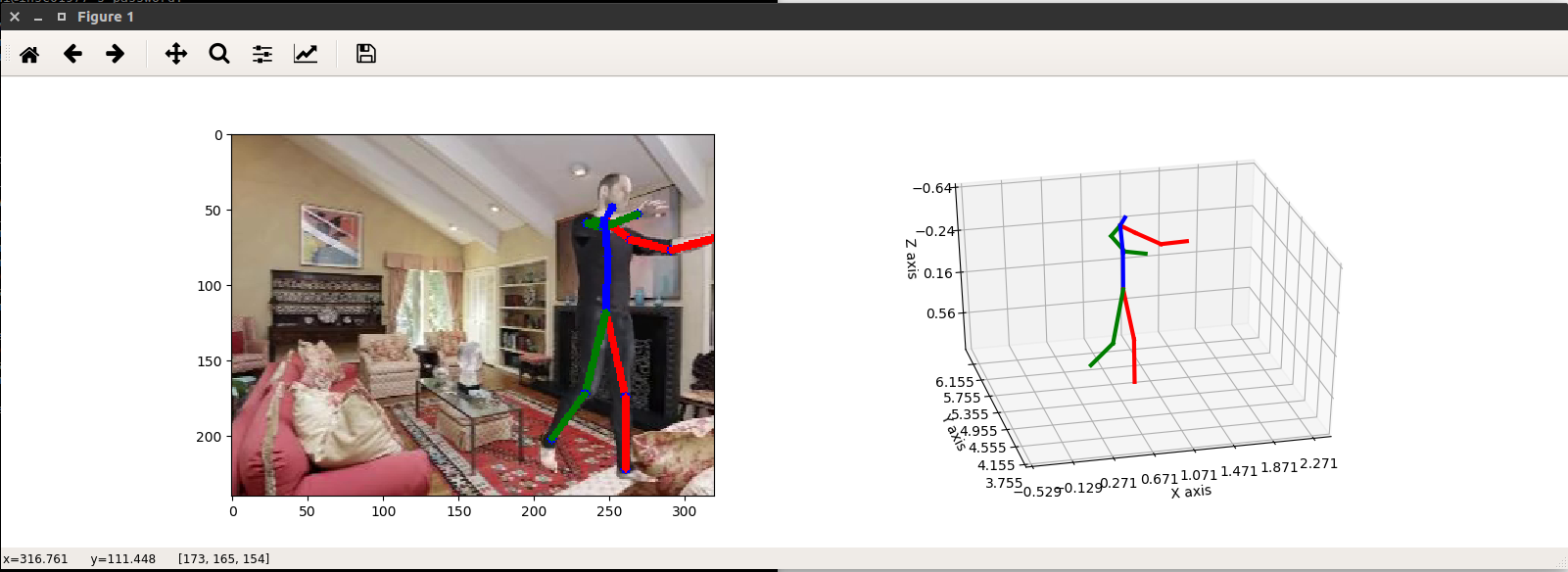
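A minimal Python/NumPy sketch of such a swap follows. It assumes the joints follow the standard SMPL 24-joint order (0-based, so index 4 is the left knee and 5 the right knee) and are stored along a single array axis. The pair list, `NUM_JOINTS` and the helper `swap_left_right` are hypothetical and illustrative, not part of the dataset's tooling.

```python
import numpy as np

# Assumed SMPL 24-joint order (0-based): 0 pelvis, 1 L_hip, 2 R_hip, 3 spine1,
# 4 L_knee, 5 R_knee, 6 spine2, 7 L_ankle, 8 R_ankle, 9 spine3, 10 L_foot,
# 11 R_foot, 12 neck, 13 L_collar, 14 R_collar, 15 head, 16 L_shoulder,
# 17 R_shoulder, 18 L_elbow, 19 R_elbow, 20 L_wrist, 21 R_wrist,
# 22 L_hand, 23 R_hand. Each tuple below is a (left, right) index pair.
LR_PAIRS = [(1, 2), (4, 5), (7, 8), (10, 11), (13, 14),
            (16, 17), (18, 19), (20, 21), (22, 23)]
NUM_JOINTS = 24


def swap_left_right(joints, axis=0):
    """Return a copy of `joints` with every left/right joint pair exchanged.

    `joints` must hold NUM_JOINTS entries along `axis`, e.g. shape (24, 3)
    for one frame, or (3, 24, T) for a sequence with axis=1 (assumed layouts).
    """
    joints = np.asarray(joints)
    if joints.shape[axis] != NUM_JOINTS:
        raise ValueError(f"expected {NUM_JOINTS} joints along axis {axis}")
    # Build an index permutation that maps each left joint to its right twin.
    perm = np.arange(NUM_JOINTS)
    for left, right in LR_PAIRS:
        perm[left], perm[right] = right, left
    # np.take with an index array returns a new array, so the input is untouched.
    return np.take(joints, perm, axis=axis)


# Usage: one frame of 24 joints, each with (x, y, z).
frame = np.arange(NUM_JOINTS * 3, dtype=float).reshape(NUM_JOINTS, 3)
swapped = swap_left_right(frame)
print(np.array_equal(swapped[4], frame[5]))  # True: slot 4 now holds the old right knee
```

Applying the swap twice restores the original order, so it can be used purely as a plotting fix without modifying the stored data.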

2) In this answer you say that the reference point for the 3D joint data is the camera location. This means that there is no need to multiply joint positions by the camera extrinsic matrix (as I did in the code above). However, when I plot the 3D joint data as stored in the `_info.mat` files, they don't seem to be expressed in camera coordinates. See these sample images:
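One way to test whether the stored joints are already in camera coordinates, independently of how they are plotted, is to project them with the camera intrinsics and compare the result against the 2D joints of the same frame: points that really are in the camera frame should land on the 2D keypoints. Below is a minimal pinhole-projection sketch. It assumes the computer-vision convention (x right, y down, z pointing forward from the camera), an intrinsic matrix `K` supplied by the caller, and joints given as an (N, 3) array; the function names and the example `K` are hypothetical, not taken from the dataset.

```python
import numpy as np


def project_points(points_cam, K):
    """Pinhole-project (N, 3) camera-frame points to (N, 2) pixel coordinates.

    Assumes z is the depth along the optical axis and is positive.
    """
    points_cam = np.asarray(points_cam, dtype=float)
    # Each row becomes (fx*X + cx*Z, fy*Y + cy*Z, Z) for a skew-free K.
    uvw = points_cam @ np.asarray(K, dtype=float).T
    # Perspective division by depth.
    return uvw[:, :2] / uvw[:, 2:3]


def mean_reprojection_error(points_cam, joints2d, K):
    """Mean pixel distance between projected 3D joints and reference 2D joints."""
    diff = project_points(points_cam, K) - np.asarray(joints2d, dtype=float)
    return float(np.linalg.norm(diff, axis=1).mean())


# Usage with an illustrative K (not taken from the dataset).
K = np.array([[600.0, 0.0, 160.0],
              [0.0, 600.0, 120.0],
              [0.0, 0.0, 1.0]])
pts = np.array([[0.0, 0.0, 5.0], [1.0, 0.5, 2.0]])
print(project_points(pts, K))  # rows: (160, 120) and (460, 270)
```

A reprojection error of a few pixels would suggest the 3D joints are in the camera frame belonging to that `K`; a large error, or joints with negative z, would suggest a different frame or axis convention.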

Hi, thanks for the great dataset! I used some of the code you provided (e.g. for retrieving the camera extrinsic matrix) to convert 3D joint positions from world to camera coordinates. However, the resulting joint positions in camera coordinates look a bit strange to me. Here is the code:

When plotting the joints in 3D, I get strange depth values (see the Y axis in the figure below). For example, in the image below the subject appears very close to the camera, yet its position on the Y axis (computed with the code above) is about 6 meters, which seems quite unrealistic to me:

Do you have any idea why this is happening? Thanks
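For reference, the world-to-camera change of coordinates with an extrinsic matrix [R | t] is X_cam = R X_world + t, where t = -R C and C is the camera centre in world coordinates. Because this is a rigid transform, it preserves each joint's distance to the camera, so comparing ||X_cam|| with ||X_world - C|| gives a convention-independent sanity check: if the world-frame distance is already about 6 m, the transform is not the source of the large value. The sketch below assumes joints as an (N, 3) array and `R`, `C` as NumPy arrays; the function names and the example camera are hypothetical.

```python
import numpy as np


def world_to_camera(points_world, R, t):
    """Apply X_cam = R @ X_world + t to each row of an (N, 3) array."""
    return np.asarray(points_world, dtype=float) @ np.asarray(R, dtype=float).T + t


def translation_from_center(R, cam_center):
    """Translation part of the extrinsics for a camera located at `cam_center`."""
    return -np.asarray(R, dtype=float) @ np.asarray(cam_center, dtype=float)


def distance_to_camera(points_world, cam_center):
    """Euclidean distance from each world-frame point to the camera centre."""
    offsets = np.asarray(points_world, dtype=float) - np.asarray(cam_center, dtype=float)
    return np.linalg.norm(offsets, axis=1)


# Usage with a made-up camera: rotated 90 degrees about the z axis, placed at (2, 0, 1).
R = np.array([[0.0, -1.0, 0.0],
              [1.0, 0.0, 0.0],
              [0.0, 0.0, 1.0]])
C = np.array([2.0, 0.0, 1.0])
t = translation_from_center(R, C)
joints_world = np.array([[2.0, 0.0, 1.0], [5.0, 4.0, 1.0]])
joints_cam = world_to_camera(joints_world, R, t)
# The camera centre maps to the origin, and distances are preserved (0 and 5 here).
print(np.linalg.norm(joints_cam, axis=1), distance_to_camera(joints_world, C))
```

Which camera axis counts as depth also depends on the convention: z points forward in the usual computer-vision frame, while a Blender camera looks along its local -z axis. In a 3D plot, the depth may therefore not lie on the Y axis at all.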