Hi Sebastian,

Thanks for trying our project for pruning LLaMA.

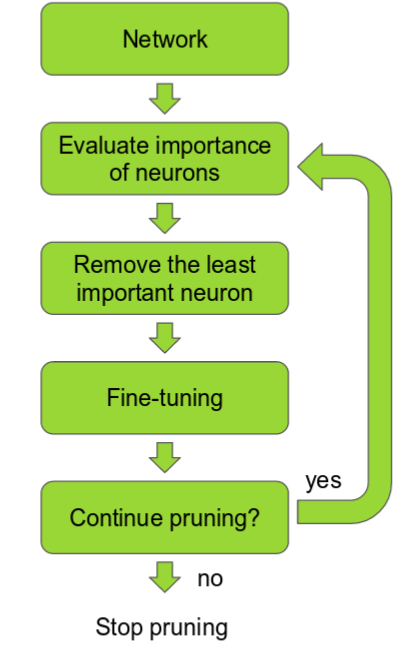

A pruned model must be post-trained before it is used for anything else. Pruning damages the model's structure, and the remaining weights need further training to compensate. Skipping this step severely degrades the model's output quality, as both your results and our own experiments show.

This is a well-known issue in model pruning. At present, our LLaMA library supports only structural pruning, not post-training of the pruned model. We are working on the post-training code, but it will take some time before we can release it in the repo.
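As a toy illustration of the effect described above, here is a minimal sketch in plain Python. It is not the project's pruning or post-training code: the tiny linear model, the magnitude-based `prune_mask`, and the `fine_tune` loop are all hypothetical stand-ins, chosen only to show that removing channels raises the loss and that briefly retraining the surviving weights can bring it back down.

```python
# Toy example (assumption: a 4-input linear model stands in for a real network).
# Inputs 0/1 carry the same signal t, inputs 2/3 carry the same signal s,
# so the surviving weights can absorb the work of the pruned ones.

def predict(weights, mask, x):
    # Masked channels contribute nothing, which mimics removing them.
    return sum(w * m * xi for w, m, xi in zip(weights, mask, x))

def mse(weights, mask, data):
    return sum((predict(weights, mask, x) - y) ** 2 for x, y in data) / len(data)

def prune_mask(weights, ratio):
    # Drop the `ratio` fraction of channels with the smallest magnitude.
    n_prune = int(len(weights) * ratio)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else 1.0 for i in range(len(weights))]

def fine_tune(weights, mask, data, lr=0.1, steps=200):
    # Plain gradient descent on the mean squared error; pruned channels
    # receive zero gradient and therefore stay removed.
    w = list(weights)
    for _ in range(steps):
        grads = [0.0] * len(w)
        for x, y in data:
            err = predict(w, mask, x) - y
            for i, xi in enumerate(x):
                grads[i] += 2.0 * err * xi * mask[i] / len(data)
        w = [wi - lr * g for wi, g in zip(w, grads)]
    return w

dense_weights = [1.0, 0.4, 0.9, 0.3]   # the "pretrained" model
dense_mask = [1.0] * len(dense_weights)

inputs = [[t, t, s, s]
          for t in (-1.0, -0.5, 0.0, 0.5, 1.0)
          for s in (-1.0, 0.0, 1.0)]
# Targets are the dense model's own outputs, so the dense loss is zero.
data = [(x, predict(dense_weights, dense_mask, x)) for x in inputs]

mask = prune_mask(dense_weights, ratio=0.5)
tuned_weights = fine_tune(dense_weights, mask, data)

dense_loss = mse(dense_weights, dense_mask, data)
pruned_loss = mse(dense_weights, mask, data)
tuned_loss = mse(tuned_weights, mask, data)

print("dense :", dense_loss)
print("pruned:", pruned_loss)
print("tuned :", tuned_loss)
```

In this sketch recovery is possible only because the kept inputs are redundant with the pruned ones. A real LLM is far less forgiving, which is why the post-training step needs proper data and training code rather than a few gradient steps.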


Hi,

I've tried the code "out of the box" and the output is very bad / unusable.

I've tried all pruner_types with a ratio of 0.5, and I also tried to reduce the pruning_ratio to 0.1.

The result stays the same.

Am I doing something wrong? (How) have you been able to obtain different results?

Kind regards, Sebastian