Summary

This paper proposes an auxiliary bidirectional language modeling objective for neural sequence labeling and evaluates it on error detection in learner texts, named entity recognition (NER), chunking, and part-of-speech (POS) tagging.

Notes on the name of this paper:

- "Semi-supervised": The model uses a training signal that requires no labels, namely the context words around each token.

- "Multitask Learning": The model is trained jointly on sequence labeling and on predicting context words.

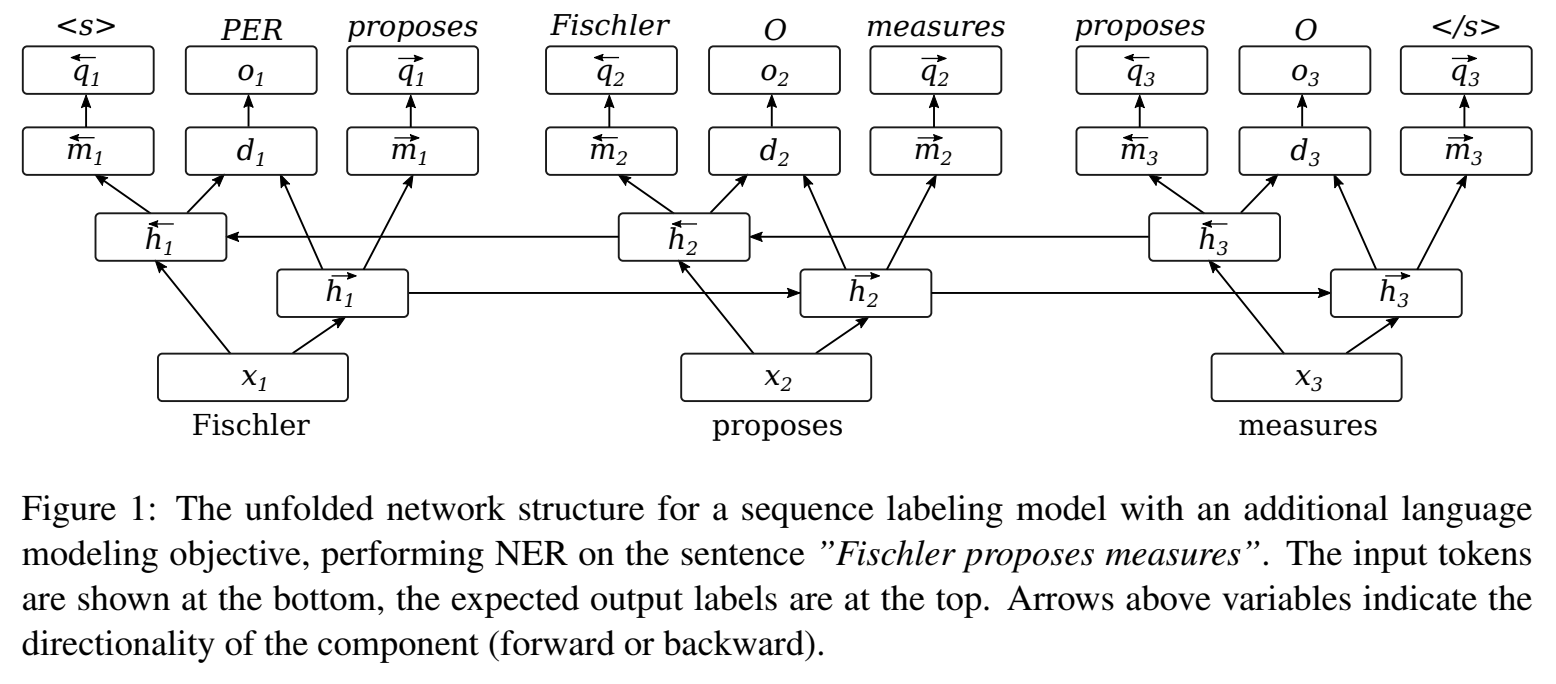

Neural Sequence Labeling Model

- Bidirectional LSTM over token representations that combine character-level and word-level embeddings through dynamic weighting.

- The forward and backward hidden states are concatenated to form the output hidden state at each time step.

- Each output hidden state is passed through a one-layer feedforward network with tanh activation, followed by a softmax (or CRF) output layer.

Code: https://github.com/marekrei/sequence-labeler
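
The per-token output layer described in the list above can be sketched in a few lines. This is only an illustration and is not taken from the linked repository: the LSTM states are replaced by random stand-in vectors, biases are omitted, the CRF variant is not shown, and all names and sizes (`label_distribution`, `W_d`, `W_o`, `hidden`, `proj`, `n_labels`) are hypothetical.

```python
import math
import random

def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]

def softmax(z):
    """Numerically stable softmax over a list of scores."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def label_distribution(h_fwd, h_bwd, W_d, W_o):
    """Concatenate both directions, apply a tanh layer, then softmax."""
    h = h_fwd + h_bwd                             # list concatenation
    d = [math.tanh(v) for v in matvec(W_d, h)]    # one-layer tanh projection
    return softmax(matvec(W_o, d))                # distribution over labels

def random_matrix(rows, cols, rng):
    """Small random weights, standing in for trained parameters."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
hidden, proj, n_labels = 4, 3, 5                  # assumed toy sizes
W_d = random_matrix(proj, 2 * hidden, rng)
W_o = random_matrix(n_labels, proj, rng)

# Stand-ins for the forward and backward LSTM states at one time step.
h_fwd = [rng.uniform(-1, 1) for _ in range(hidden)]
h_bwd = [rng.uniform(-1, 1) for _ in range(hidden)]

probs = label_distribution(h_fwd, h_bwd, W_d, W_o)
```

`probs` holds one probability per label for the token at that time step. With a CRF output layer, the scores before the softmax would instead feed a sequence-level decoder.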

Language Modeling Objective

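Because a bidirectional LSTM has access to the full context on both sides of the target token, predicting a context word from the concatenated hidden state would be trivial: the word to be predicted is already part of the input to one of the two directions. The authors therefore predict the next word only from the forward-moving hidden state and the previous word only from the backward-moving hidden state. (Each hidden state first passes through a one-layer tanh projection and is then mapped to the context-word vocabulary with a softmax.)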

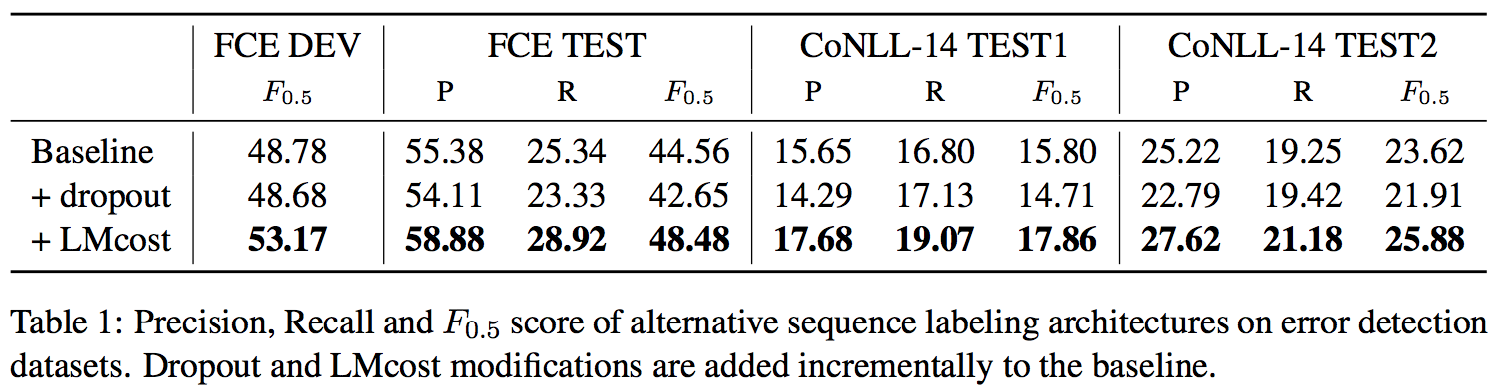

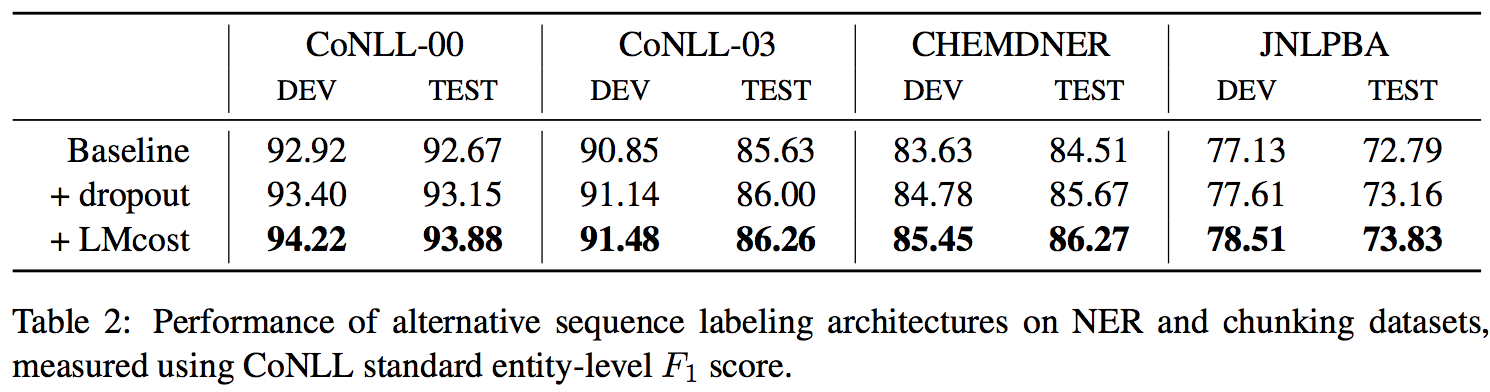

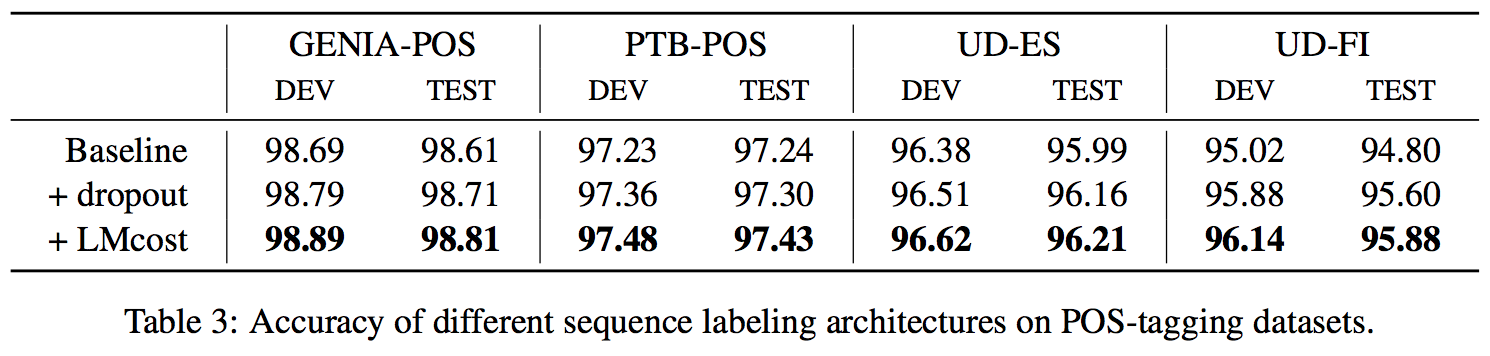
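
To make the direction-specific targets concrete, here is a minimal sketch of how the language modeling targets line up with each position and how the auxiliary losses could be added to the labeling loss. The boundary tokens `<s>` and `</s>`, the helper names, the toy probabilities, and the weight `gamma` with its value are assumptions made for illustration, not details confirmed by these notes.

```python
import math

def lm_targets(tokens, bos="<s>", eos="</s>"):
    """Return (next_words, prev_words), one target per position."""
    next_words = tokens[1:] + [eos]     # predicted from the forward state
    prev_words = [bos] + tokens[:-1]    # predicted from the backward state
    return next_words, prev_words

def nll(prob_rows, targets):
    """Sum of negative log-probabilities of each position's target."""
    return -sum(math.log(row[t]) for row, t in zip(prob_rows, targets))

def joint_loss(label_nll, fwd_nll, bwd_nll, gamma=0.1):
    """Labeling loss plus down-weighted language modeling losses."""
    return label_nll + gamma * (fwd_nll + bwd_nll)

tokens = ["the", "cat", "sat"]
next_words, prev_words = lm_targets(tokens)

# Toy softmax outputs: each row maps a word to its predicted probability.
fwd_probs = [{"cat": 0.5}, {"sat": 0.5}, {"</s>": 0.5}]
bwd_probs = [{"<s>": 0.25}, {"the": 0.25}, {"cat": 0.25}]

fwd_nll = nll(fwd_probs, next_words)
bwd_nll = nll(bwd_probs, prev_words)
total = joint_loss(label_nll=1.0, fwd_nll=fwd_nll, bwd_nll=bwd_nll)
```

At position 1 ("cat"), the forward state is asked for "sat" and the backward state for "the", so neither direction is asked to predict a word it has already read. Keeping `gamma` small lets the labeling task dominate training while the language modeling terms act as a regularizing signal.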

Experimental Results

Metadata