Highlights

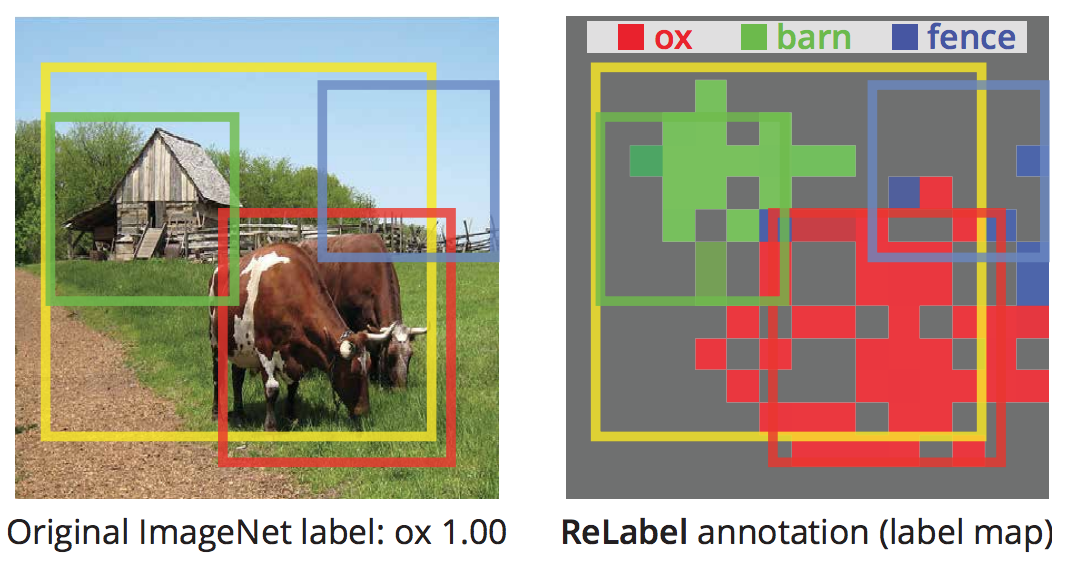

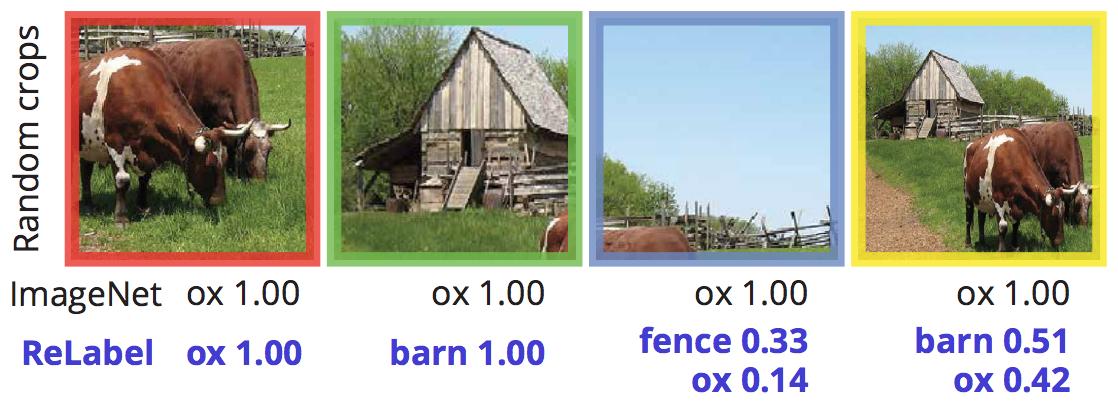

Motivation. ImageNet labels are noisy: an image may contain multiple objects but is annotated with a single image-level class label.

Intuition. A model trained with the single-label cross-entropy loss tends to predict multi-label outputs when the training labels are noisy.
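One way to make this intuition concrete is the following sketch. It assumes that the same visual content $x$ shows up in training with different single labels, described by a label distribution $q(c \mid x)$; the symbols $q$ and $p$ are notation introduced here, not taken from the paper. The expected cross-entropy of a prediction $p$ then decomposes as

$$
\mathbb{E}_{c \sim q(\cdot \mid x)}\big[-\log p(c \mid x)\big]
= H\big(q(\cdot \mid x)\big) + \mathrm{KL}\big(q(\cdot \mid x)\,\|\,p(\cdot \mid x)\big)
\;\ge\; H\big(q(\cdot \mid x)\big),
$$

with equality only when $p(\cdot \mid x) = q(\cdot \mid x)$. For example, if an image with two objects is labeled as class A half of the time and class B the other half, the loss-minimizing prediction puts probability 0.5 on each class, which is effectively a multi-label output.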

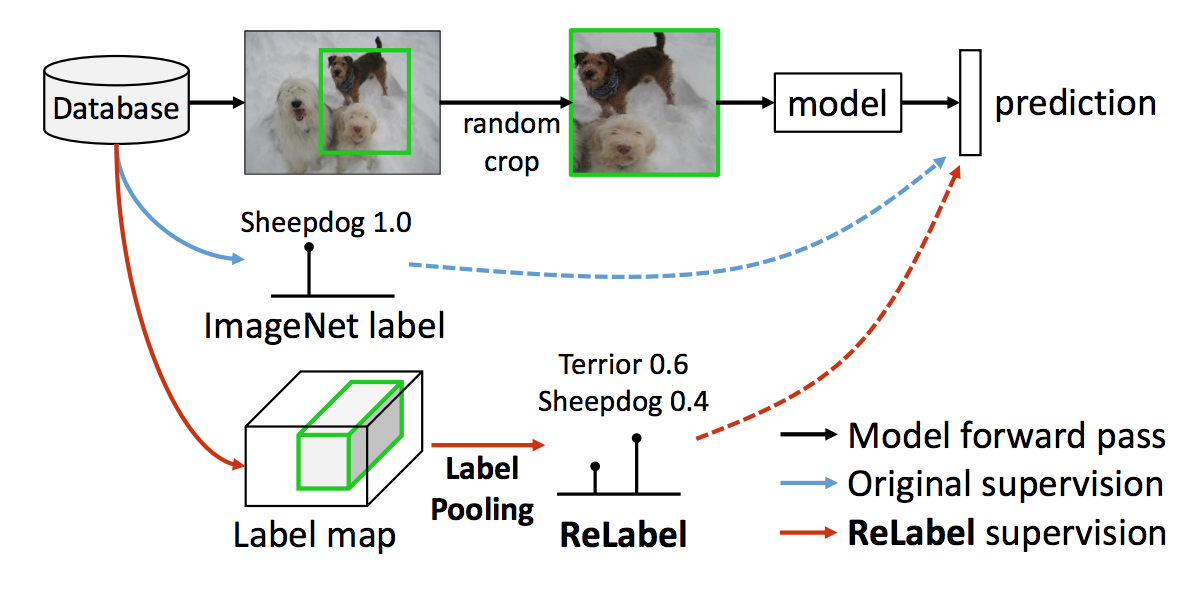

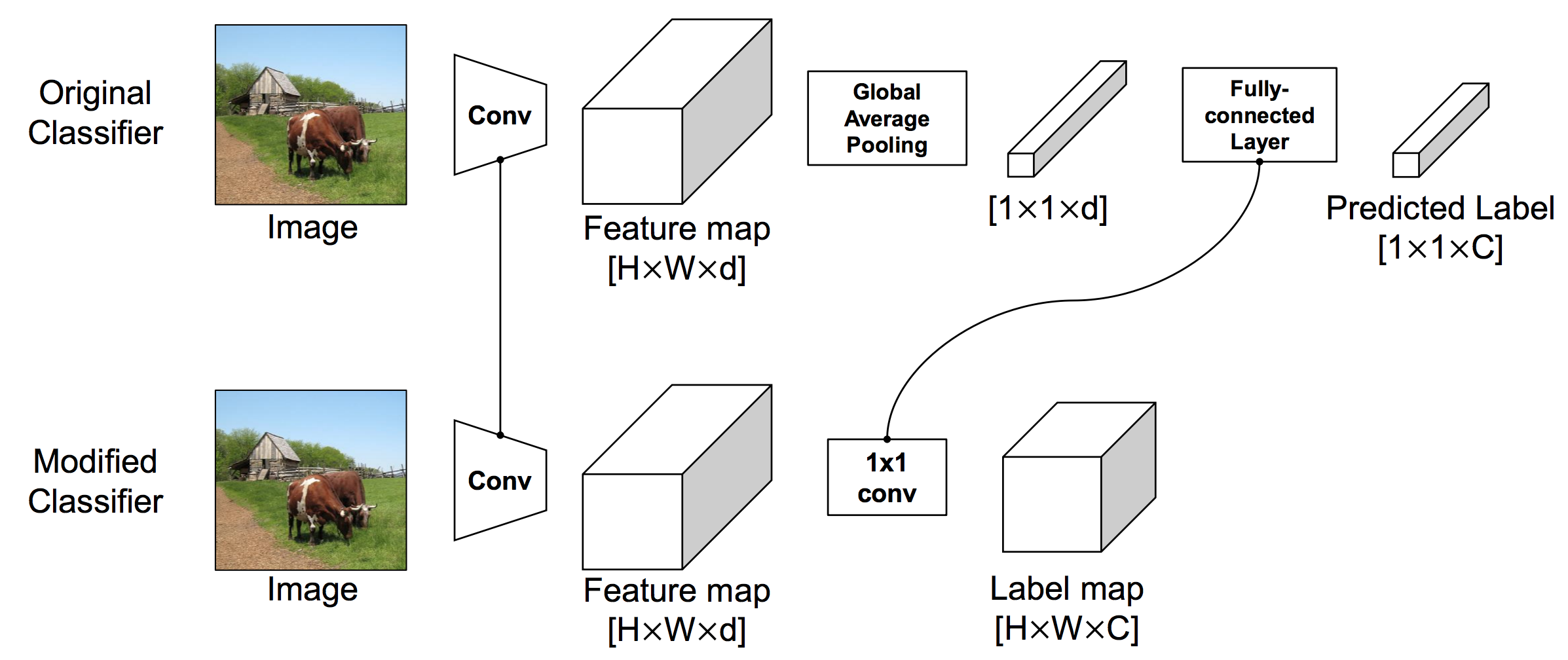

Relabel. The authors propose using a strong image classifier, trained on extra data at super-ImageNet scale (JFT-300M, InstagramNet-1B) and then fine-tuned on ImageNet, to generate multi-labels for ImageNet images. Pixel-wise multi-label predictions are taken before the final global pooling layer; this is an offline preprocessing step that runs once.
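The idea of reading predictions before the global pooling layer can be sketched in plain Python as follows. This is a minimal illustration, not the authors' code: the function names, the 2 x 2 feature map, and the classifier weights are all made up, and a real pipeline would do the same thing with tensors on a pretrained backbone. The sketch applies the linear classifier at every spatial location instead of once after pooling.

```python
# Toy sketch: per-location classification instead of pool-then-classify.
# All names and numbers are hypothetical, chosen only for illustration.

def linear(feature, weights, bias):
    """Apply a linear classifier to one D-dim feature vector -> C logits."""
    return [sum(w * f for w, f in zip(row, feature)) + b
            for row, b in zip(weights, bias)]

def global_average_pool(feature_map):
    """Average an H x W grid of D-dim features into one D-dim vector."""
    cells = [cell for row in feature_map for cell in row]
    return [sum(vals) / len(cells) for vals in zip(*cells)]

def dense_label_map(feature_map, weights, bias):
    """Skip global pooling and classify every spatial location separately.

    Returns an H x W grid of C-dim logit vectors (a dense label map).
    """
    return [[linear(cell, weights, bias) for cell in row]
            for row in feature_map]

# 2 x 2 feature map with D = 2 channels and C = 2 classes.
feature_map = [[[1.0, 0.0], [1.0, 0.0]],   # top row: "object 0" evidence
               [[0.0, 1.0], [0.0, 1.0]]]   # bottom row: "object 1" evidence
weights = [[2.0, -1.0],    # class 0 responds to channel 0
           [-1.0, 2.0]]    # class 1 responds to channel 1
bias = [0.0, 0.0]

# Standard classifier: pool first, then classify (one vector per image).
image_level_logits = linear(global_average_pool(feature_map), weights, bias)

# Dense variant: classify first, keep the spatial layout.
label_map = dense_label_map(feature_map, weights, bias)
```

In this toy case the image-level logits are tied between the two classes, while the dense map keeps the information that class 0 is in the top half and class 1 in the bottom half. Because the classifier is linear, averaging the dense map recovers the image-level logits.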

Novel training scheme: LabelPooling. Given a random crop during training, pool the multi-labels and their corresponding probability scores from the crop region of the relabeled image.
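A rough sketch of this pooling step is given below. It makes several assumptions that the summary does not confirm: the stored label map holds raw per-location scores, the crop is given in normalized coordinates, pooling is a plain average over every cell the crop overlaps (no interpolation), and a softmax turns the pooled scores into the training target. The names `label_pooling` and `soft_cross_entropy` are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def label_pooling(label_map, crop):
    """Pool a soft multi-label target from the crop region of a label map.

    label_map: H x W grid of C-dim score vectors, precomputed offline.
    crop: (x0, y0, x1, y1) in normalized [0, 1] image coordinates.
    Simplification: averages every cell the crop overlaps.
    """
    height, width = len(label_map), len(label_map[0])
    x0, y0, x1, y1 = crop
    col_start = min(int(x0 * width), width - 1)
    row_start = min(int(y0 * height), height - 1)
    # Always keep at least one cell, and never run past the map border.
    col_end = max(col_start + 1, min(math.ceil(x1 * width), width))
    row_end = max(row_start + 1, min(math.ceil(y1 * height), height))
    cells = [label_map[r][c]
             for r in range(row_start, row_end)
             for c in range(col_start, col_end)]
    pooled = [sum(vals) / len(cells) for vals in zip(*cells)]
    return softmax(pooled)

def soft_cross_entropy(student_probs, target):
    """Cross-entropy between a soft target and the student's probabilities."""
    return -sum(t * math.log(p)
                for t, p in zip(target, student_probs) if t > 0)

# Toy 2 x 2 label map: class 0 in the top half, class 1 in the bottom half.
label_map = [[[4.0, 0.0], [4.0, 0.0]],
             [[0.0, 4.0], [0.0, 4.0]]]

top_crop_target = label_pooling(label_map, (0.0, 0.0, 1.0, 0.5))
full_crop_target = label_pooling(label_map, (0.0, 0.0, 1.0, 1.0))
```

With this toy map, a crop of the top half yields a target dominated by class 0, while a crop of the whole image yields an even split between both classes. The target therefore follows what the crop actually contains, which a fixed image-level label cannot do.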

Results. Training ResNet-50 on the relabeled images with localized multi-labels reaches 78.9% accuracy (+1.4% over the baseline trained with the original labels). Adding CutMix boosts this to 80.2%, a new SoTA for ResNet-50 on ImageNet.

Related work: Better evaluation protocol for ImageNet

- ImageNetV2: Do ImageNet Classifiers Generalize to ImageNet?

- Are we done with imagenet?

- Evaluating machine accuracy on imagenet. ICML 2020.

- (Not on ImageNet but related) Evaluating Weakly Supervised Object Localization Methods Right. CVPR 2020

The above works identify three categories of erroneous single labels:

- An image contains multiple objects

- Multiple labels exist that are synonymous, or one label hierarchically includes another

- Inherent ambiguity in an image makes multiple labels plausible.

Difference from this work

- This work also refines the training set, whereas previous work refines only the validation set.

- This work corrects labels, whereas previous work removes erroneous labels.

Related work: Distillation (a few I hand-picked for their practical usefulness, in my opinion)

- [Ensemble distillation] Knowledge distillation by on-the-fly native ensemble. NIPS 2018.

- [Self-distillation] Snapshot distillation: Teacher-student optimization in one generation. CVPR 2019.

- [Self-distillation] Self-training with noisy student improves imagenet classification. CVPR 2020.

Difference from this work

- Previous work did not consider a strong, SoTA network as a teacher.

- Distillation requires a forward pass through the teacher on the fly during training, which leads to heavy computation.

Metadata