Cc'ing @dwofk (the author of fast-depth).

Thanks, @awsaf49, for reporting this. I believe this is because the version of NYU Depth V2 shipped with fast-depth is already preprocessed.

If you think it would be better to host the NYU Depth V2 dataset from BTS here, feel free to open a PR. I am happy to provide guidance :)

Describe the bug

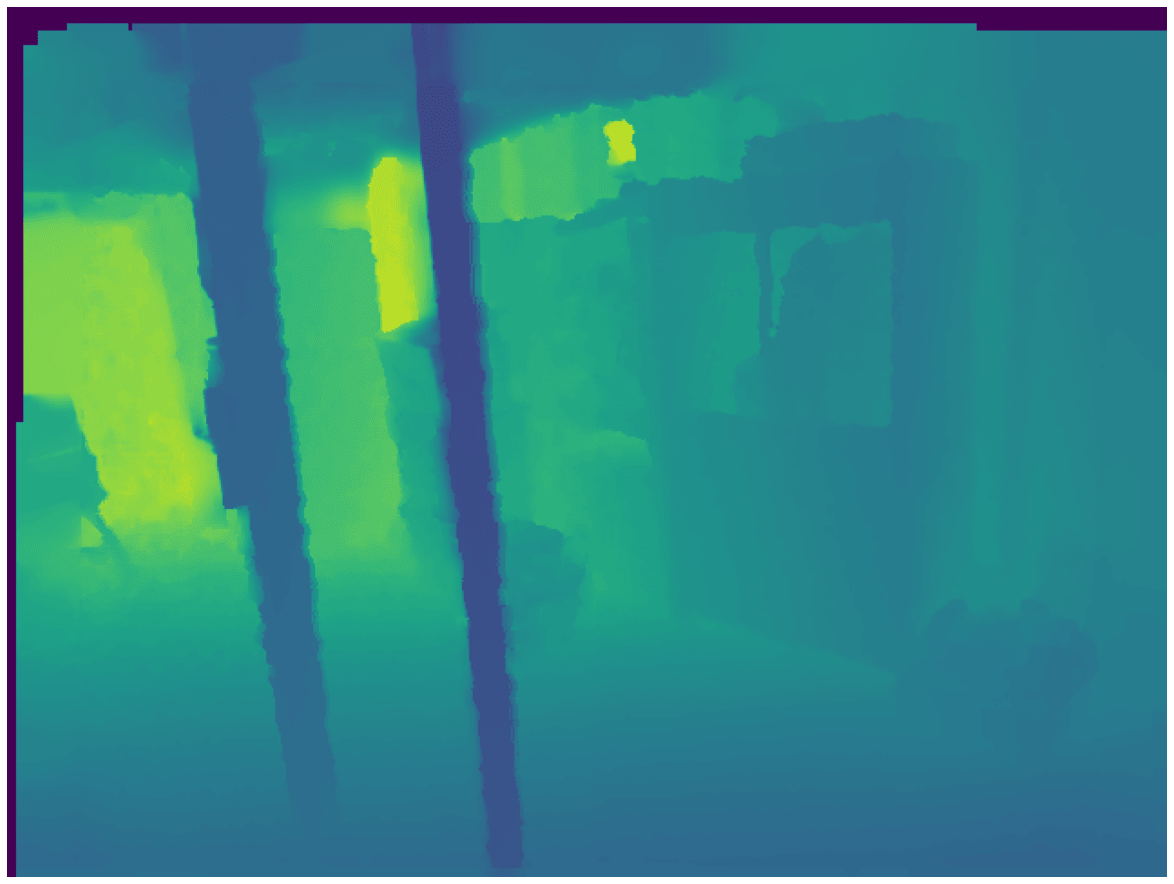

I think there is a discrepancy between the depth maps in the `nyu_depth_v2` dataset here and the actual depth maps. The depth values somehow got discretized/clipped, resulting in depth maps that differ from the actual ones. A side-by-side comparison is shown above. I tried to find the origin of this issue, but sadly, as I mentioned in tensorflow/datasets/issues/4674, the download link from `fast-depth` doesn't work anymore, so I couldn't verify whether the error originated there or while porting the data from there to HF.

Hi @sayakpaul, as you worked on huggingface/datasets/issues/5255, if you still have access to that data, could you please share it or perhaps check out this issue?

Steps to reproduce the bug

This notebook from @sayakpaul can be used to generate the depth maps, and the actual ground truths can be checked against this dataset from the BTS repo.
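To put a number on the difference instead of comparing images by eye, a check like the following could be run on a depth map from each source. This is a minimal sketch, assuming each depth map has already been loaded as a NumPy array (for example, in meters); `depth_quantization_report` is a hypothetical helper written for illustration, not part of `datasets` or fast-depth. A continuous ground-truth map should have many distinct values and only a tiny fraction of pixels at its extremes, whereas a discretized map shows few distinct levels and a clipped map shows a pile-up at its min or max.

```python
import numpy as np


def depth_quantization_report(depth: np.ndarray) -> dict:
    """Summarize how discretized or clipped a depth map looks.

    Hypothetical helper for illustration only; not an API of `datasets`
    or fast-depth.
    """
    depth = np.asarray(depth, dtype=np.float64)
    # Ignore NaN/inf pixels (e.g. invalid measurements).
    valid = depth[np.isfinite(depth)]
    # np.unique returns the distinct values in sorted order.
    unique_vals = np.unique(valid)
    # Smallest gap between consecutive distinct values: a large, regular
    # gap suggests quantization (e.g. 8-bit storage gives at most 256 levels).
    gaps = np.diff(unique_vals)
    min_step = float(gaps.min()) if gaps.size else 0.0
    d_min, d_max = float(valid.min()), float(valid.max())
    return {
        "num_unique": int(unique_vals.size),
        "min_step": min_step,
        # Fractions of pixels sitting exactly at the extremes: a large value
        # hints at clipping.
        "frac_at_min": float(np.mean(valid == d_min)),
        "frac_at_max": float(np.mean(valid == d_max)),
    }


if __name__ == "__main__":
    # Synthetic example: a smooth map vs. an 8-bit-quantized and a clipped copy.
    rng = np.random.default_rng(0)
    smooth = rng.uniform(0.5, 10.0, size=(64, 64))
    quantized = np.round(smooth / 10.0 * 255) / 255 * 10.0
    clipped = np.clip(smooth, 0.5, 5.0)
    for name, d in [("smooth", smooth), ("quantized", quantized), ("clipped", clipped)]:
        print(name, depth_quantization_report(d))
```

Running the same report on a map from this dataset and the corresponding map from the BTS copy would show whether the number of distinct levels or the fraction of pixels at the extremes differs.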

Expected behavior

The depth maps should be smooth rather than discretized/clipped.

Environment info

`datasets` version: 2.8.1.dev0