Can you give us more information on your os and pip environments (pip list)?

Closed BramVanroy closed 3 years ago


@thomwolf Sure. I'll try downgrading to 3.7 now even though Arrow say they support >=3.5.

certifi 2020.6.20 chardet 3.0.4 click 7.1.2 datasets 1.0.1 dill 0.3.2 fasttext 0.9.2 filelock 3.0.12 future 0.18.2 idna 2.10 joblib 0.16.0 nltk 3.5 numpy 1.19.1 packaging 20.4 pandas 1.1.2 pip 20.0.2 protobuf 3.13.0 pyarrow 1.0.1 pybind11 2.5.0 pyparsing 2.4.7 python-dateutil 2.8.1 pytz 2020.1 regex 2020.7.14 requests 2.24.0 sacremoses 0.0.43 scikit-learn 0.23.2 scipy 1.5.2 sentence-transformers 0.3.6 sentencepiece 0.1.91 setuptools 46.1.3 six 1.15.0 stanza 1.1.1 threadpoolctl 2.1.0 tokenizers 0.8.1rc2 torch 1.6.0+cu101 tqdm 4.48.2 transformers 3.1.0 urllib3 1.25.10 wheel 0.34.2 xxhash 2.0.0

certifi 2020.6.20 chardet 3.0.4 click 7.1.2 datasets 1.0.1 dill 0.3.2 fasttext 0.9.2 filelock 3.0.12 future 0.18.2 idna 2.10 joblib 0.16.0 nlp 0.4.0 nltk 3.5 numpy 1.19.1 packaging 20.4 pandas 1.1.1 pip 20.0.2 protobuf 3.13.0 pyarrow 1.0.1 pybind11 2.5.0 pyparsing 2.4.7 python-dateutil 2.8.1 pytz 2020.1 regex 2020.7.14 requests 2.24.0 sacremoses 0.0.43 scikit-learn 0.23.2 scipy 1.5.2 sentence-transformers 0.3.5.1 sentencepiece 0.1.91 setuptools 46.1.3 six 1.15.0 stanza 1.1.1 threadpoolctl 2.1.0 tokenizers 0.8.1rc1 torch 1.6.0+cu101 tqdm 4.48.2 transformers 3.0.2 urllib3 1.25.10 wheel 0.34.2 xxhash 2.0.0

Downgrading to 3.7 does not help. Here is a dummy text file:

Verzekering weigert vaker te betalen

Bedrijven van verzekeringen erkennen steeds minder arbeidsongevallen .

In 2012 weigerden de bedrijven te betalen voor 21.055 ongevallen op het werk .

Dat is 11,8 % van alle ongevallen op het werk .

Nog nooit weigerden verzekeraars zoveel zaken .

In 2012 hadden 135.118 mensen een ongeval op het werk .

Dat zijn elke werkdag 530 mensen .

Bij die ongevallen stierven 67 mensen .

Bijna 12.000 hebben een handicap na het ongeval .

Geen echt arbeidsongeval Bedrijven moeten een verzekering hebben voor hun werknemers .

A temporary workaround for the "text" type is:

dataset = Dataset.from_dict({"text": Path(dataset_f).read_text().splitlines()})
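A slightly expanded sketch of this workaround, assuming the whole file fits in memory. `lines_as_columns` is a hypothetical helper name, not part of `datasets`. It splits on "\n" only, because `str.splitlines()` also breaks on characters such as "\v", "\f" and "\x1c", which may not be wanted for free text.

```python
import tempfile
from pathlib import Path


def lines_as_columns(path):
    """Read a whole text file and return it in the {"text": [...]} layout."""
    # Text mode already translates "\r\n" to "\n" while reading
    text = Path(path).read_text(encoding="utf-8")
    # Split on "\n" only; str.splitlines() would also split on "\v", "\f", "\x1c", ...
    lines = text.split("\n")
    if lines and lines[-1] == "":
        lines.pop()  # drop the empty string left by a trailing newline
    return {"text": lines}


with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / "dummy.txt"
    sample.write_text("first line\nsecond\x1cline\n", encoding="utf-8")
    columns = lines_as_columns(sample)

# With the library installed, the dict can be passed on unchanged:
# from datasets import Dataset
# dataset = Dataset.from_dict(columns)
```

Because everything is held in a Python list, this only suits files much smaller than the available RAM.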

I am facing the same issue too.

@banunitte Please do not post screenshots in the future but copy-paste your code and the errors. That allows others to copy-and-paste your code and test it. You may also want to provide the Python version that you are using.

I have the exact same problem in Windows 10, Python 3.8.

I have the same problem on Linux: the script crashes with a CSV error. This may be caused by CRLF line endings; when I changed CRLF to LF, the problem was solved.
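For anyone who wants to try the same conversion, here is a minimal sketch using only the standard library. `crlf_to_lf` is a hypothetical helper, and it reads the whole file into memory, so it assumes a file of moderate size.

```python
import tempfile
from pathlib import Path


def crlf_to_lf(src, dst):
    """Copy src to dst, replacing every CRLF pair with a single LF."""
    data = Path(src).read_bytes()  # bytes mode: Python does no newline translation
    Path(dst).write_bytes(data.replace(b"\r\n", b"\n"))


with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "windows.txt"
    dst = Path(tmp) / "unix.txt"
    src.write_bytes(b"line one\r\nline two\r\n")
    crlf_to_lf(src, dst)
    converted = dst.read_bytes()
```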

I pushed a fix for pyarrow.lib.ArrowInvalid: CSV parse error. Let me know if you still have this issue.

Not sure about the windows one yet

To complete what @lhoestq is saying, I think that to use the new version of the text processing script (which is on master right now) you need to either specify the version of the script to be the master one or to install the lib from source (in which case it uses the master version of the script by default):

dataset = load_dataset('text', script_version='master', data_files=XXX)

We do versioning by default, i.e. your version of the dataset lib will use the script with the same version by default (i.e. only the 1.0.1 version of the script if you have the PyPI version 1.0.1 of the lib).

win10, py3.6


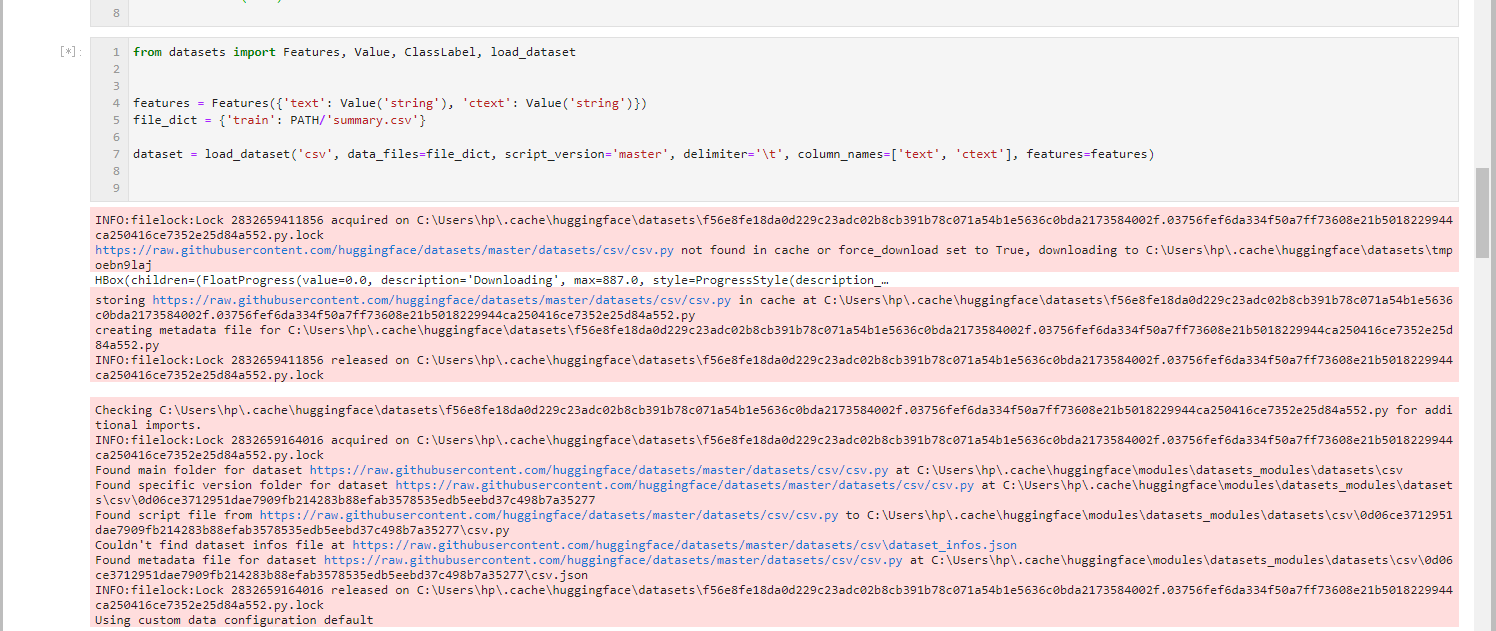

from datasets import Features, Value, ClassLabel, load_dataset

features = Features({'text': Value('string'), 'ctext': Value('string')})

file_dict = {'train': PATH/'summary.csv'}

dataset = load_dataset('csv', data_files=file_dict, script_version='master', delimiter='\t', column_names=['text', 'ctext'], features=features)

Traceback (most recent call last):

File "main.py", line 281, in <module>

main()

File "main.py", line 190, in main

train_data, test_data = data_factory(

File "main.py", line 129, in data_factory

train_data = load_dataset('text',

File "/home/me/Downloads/datasets/src/datasets/load.py", line 608, in load_dataset

builder_instance.download_and_prepare(

File "/home/me/Downloads/datasets/src/datasets/builder.py", line 468, in download_and_prepare

self._download_and_prepare(

File "/home/me/Downloads/datasets/src/datasets/builder.py", line 546, in _download_and_prepare

self._prepare_split(split_generator, **prepare_split_kwargs)

File "/home/me/Downloads/datasets/src/datasets/builder.py", line 888, in _prepare_split

for key, table in utils.tqdm(generator, unit=" tables", leave=False, disable=not_verbose):

File "/home/me/.local/lib/python3.8/site-packages/tqdm/std.py", line 1130, in __iter__

for obj in iterable:

File "/home/me/.cache/huggingface/modules/datasets_modules/datasets/text/512f465342e4f4cd07a8791428a629c043bb89d55ad7817cbf7fcc649178b014/text.py", line 103, in _generate_tables

pa_table = pac.read_csv(

File "pyarrow/_csv.pyx", line 617, in pyarrow._csv.read_csv

File "pyarrow/error.pxi", line 123, in pyarrow.lib.pyarrow_internal_check_status

File "pyarrow/error.pxi", line 85, in pyarrow.lib.check_status

pyarrow.lib.ArrowInvalid: CSV parse error: Expected 1 columns, got 2

Unfortunately I am still getting this issue on Linux. I installed datasets from source and set script_version to master.

win10, py3.6

from datasets import Features, Value, ClassLabel, load_dataset

features = Features({'text': Value('string'), 'ctext': Value('string')})

file_dict = {'train': PATH/'summary.csv'}

dataset = load_dataset('csv', data_files=file_dict, script_version='master', delimiter='\t', column_names=['text', 'ctext'], features=features)

Since #644 it should now work on windows @ScottishFold007

Trying the following snippet, I get different problems on Linux and Windows.

dataset = load_dataset("text", data_files="data.txt")

# or

dataset = load_dataset("text", data_files=["data.txt"])

Windows just seems to get stuck. Even with a tiny dataset of 10 lines, it has been stuck for 15 minutes already at this message:

Checking C:\Users\bramv\.cache\huggingface\datasets\b1d50a0e74da9a7b9822cea8ff4e4f217dd892e09eb14f6274a2169e5436e2ea.30c25842cda32b0540d88b7195147decf9671ee442f4bc2fb6ad74016852978e.py for additional imports.

Found main folder for dataset https://raw.githubusercontent.com/huggingface/datasets/1.0.1/datasets/text/text.py at C:\Users\bramv\.cache\huggingface\modules\datasets_modules\datasets\text

Found specific version folder for dataset https://raw.githubusercontent.com/huggingface/datasets/1.0.1/datasets/text/text.py at C:\Users\bramv\.cache\huggingface\modules\datasets_modules\datasets\text\7e13bc0fa76783d4ef197f079dc8acfe54c3efda980f2c9adfab046ede2f0ff7

Found script file from https://raw.githubusercontent.com/huggingface/datasets/1.0.1/datasets/text/text.py to C:\Users\bramv\.cache\huggingface\modules\datasets_modules\datasets\text\7e13bc0fa76783d4ef197f079dc8acfe54c3efda980f2c9adfab046ede2f0ff7\text.py

Couldn't find dataset infos file at https://raw.githubusercontent.com/huggingface/datasets/1.0.1/datasets/text\dataset_infos.json

Found metadata file for dataset https://raw.githubusercontent.com/huggingface/datasets/1.0.1/datasets/text/text.py at C:\Users\bramv\.cache\huggingface\modules\datasets_modules\datasets\text\7e13bc0fa76783d4ef197f079dc8acfe54c3efda980f2c9adfab046ede2f0ff7\text.json

Using custom data configuration default

Same for you @BramVanroy .

Not sure about the one on linux though

To complete what @lhoestq is saying, I think that to use the new version of the text processing script (which is on master right now) you need to either specify the version of the script to be the master one or to install the lib from source (in which case it uses the master version of the script by default):

dataset = load_dataset('text', script_version='master', data_files=XXX)

We do versioning by default, i.e. your version of the dataset lib will use the script with the same version by default (i.e. only the 1.0.1 version of the script if you have the PyPI version 1.0.1 of the lib).

Linux here:

I was using the 0.4.0 nlp library load_dataset to load a text dataset of 9-10 GB without exhausting the RAM. However, today I got the csv error message mentioned in this issue. After installing the new (datasets) library from source and specifying script_version='master' I'm still getting the same error message. Furthermore, I cannot use the dictionary "trick" to load the dataset since the system kills the process for running out of RAM. Is there any other solution to this error? Thank you in advance.

Hi @raruidol To fix the RAM issue you'll need to shard your text files into smaller files (see https://github.com/huggingface/datasets/issues/610#issuecomment-691672919 for example)

I'm not sure why you're having the csv error on linux. Do you think you could try to reproduce it on google colab for example ? Or send me a dummy .txt file that reproduces the issue ?
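The sharding suggested above can be sketched with the standard library alone. This is only an illustration: `shard_text_file`, the `shard_0000` naming and the `lines_per_shard` value are assumptions, not something the library provides. The file is streamed line by line, so memory use stays small regardless of the corpus size.

```python
import tempfile
from pathlib import Path


def shard_text_file(path, out_dir, lines_per_shard):
    """Split a text file into numbered shards of at most lines_per_shard lines."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    shard_paths = []
    out = None
    # newline="" keeps the original line endings untouched on read and write
    with open(path, "r", encoding="utf-8", newline="") as src:
        for i, line in enumerate(src):
            if i % lines_per_shard == 0:
                if out is not None:
                    out.close()
                shard_path = out_dir / f"shard_{len(shard_paths):04d}"
                shard_paths.append(shard_path)
                out = open(shard_path, "w", encoding="utf-8", newline="")
            out.write(line)
    if out is not None:
        out.close()
    return shard_paths


with tempfile.TemporaryDirectory() as tmp:
    corpus = Path(tmp) / "corpus.txt"
    corpus.write_text("".join(f"sentence {n}\n" for n in range(5)), encoding="utf-8")
    shards = shard_text_file(corpus, Path(tmp) / "shards", lines_per_shard=2)
    shard_names = [p.name for p in shards]
    last_shard = shards[-1].read_text(encoding="utf-8")
```

The resulting paths can then be passed as a list to `data_files`, as in the `glob.glob('corpora/shards/*')` snippet further down the thread.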

@lhoestq

The crash message shows up when loading the dataset:

print('Loading corpus...')

files = glob.glob('corpora/shards/*')

-> dataset = load_dataset('text', script_version='master', data_files=files)

print('Corpus loaded.')

And this is the exact message:

Traceback (most recent call last):

File "run_language_modeling.py", line 27, in <module>

dataset = load_dataset('text', script_version='master', data_files=files)

File "/home/jupyter-raruidol/DebatAnalyser/env/lib/python3.7/site-packages/datasets/load.py", line 611, in load_dataset

ignore_verifications=ignore_verifications,

File "/home/jupyter-raruidol/DebatAnalyser/env/lib/python3.7/site-packages/datasets/builder.py", line 471, in download_and_prepare

dl_manager=dl_manager, verify_infos=verify_infos, **download_and_prepare_kwargs

File "/home/jupyter-raruidol/DebatAnalyser/env/lib/python3.7/site-packages/datasets/builder.py", line 548, in _download_and_prepare

self._prepare_split(split_generator, **prepare_split_kwargs)

File "/home/jupyter-raruidol/DebatAnalyser/env/lib/python3.7/site-packages/datasets/builder.py", line 892, in _prepare_split

for key, table in utils.tqdm(generator, unit=" tables", leave=False, disable=not_verbose):

File "/home/jupyter-raruidol/DebatAnalyser/env/lib/python3.7/site-packages/tqdm/std.py", line 1130, in __iter__

for obj in iterable:

File "/home/jupyter-raruidol/.cache/huggingface/modules/datasets_modules/datasets/text/512f465342e4f4cd07a8791428a629c043bb89d55ad7817cbf7fcc649178b014/text.py", line 107, in _generate_tables

convert_options=self.config.convert_options,

File "pyarrow/_csv.pyx", line 714, in pyarrow._csv.read_csv

File "pyarrow/error.pxi", line 122, in pyarrow.lib.pyarrow_internal_check_status

File "pyarrow/error.pxi", line 84, in pyarrow.lib.check_status

pyarrow.lib.ArrowInvalid: CSV parse error: Expected 1 columns, got 2

And these are the pip packages I have atm and their versions:

Package Version Location

--------------- --------- -------------------------------------------------------------

certifi 2020.6.20

chardet 3.0.4

click 7.1.2

datasets 1.0.2

dill 0.3.2

filelock 3.0.12

future 0.18.2

idna 2.10

joblib 0.16.0

numpy 1.19.1

packaging 20.4

pandas 1.1.1

pip 19.0.3

pyarrow 1.0.1

pyparsing 2.4.7

python-dateutil 2.8.1

pytz 2020.1

regex 2020.7.14

requests 2.24.0

sacremoses 0.0.43

sentencepiece 0.1.91

setuptools 40.8.0

six 1.15.0

tokenizers 0.8.1rc2

torch 1.6.0

tqdm 4.48.2

transformers 3.0.2 /home/jupyter-raruidol/DebatAnalyser/env/src/transformers/src

I tested on google colab which is also linux using this code:

wget https://raw.githubusercontent.com/abisee/cnn-dailymail/master/url_lists/all_train.txt

from datasets import load_dataset

d = load_dataset("text", data_files="all_train.txt", script_version='master')

And I don't get this issue.

Could you test on your side if these lines work @raruidol ?

also cc @Skyy93 as it seems you have the same issue

If it works:

It could mean that the issue could come from unexpected patterns in the files you want to use.

In that case we should find a way to handle them.

And if it doesn't work:

It could mean that it comes from the way pyarrow reads text files on linux.

In that case we should report it to pyarrow and find a workaround in the meantime

Either way it should help to find where this bug comes from and fix it :)

Thank you in advance !

Update: also tested the above code in a docker container from jupyter/minimal-notebook (based on ubuntu) and still not able to reproduce

It looks like your text input file works without any problem. I have been doing some experiments this morning with my input files and I'm almost certain that the crash is caused by some unexpected pattern in the files. However, I've not been able to spot the main cause of it. What I find strange is that this same corpus was being loaded by the nlp 0.4.0 library without any problem... Where can I find the code where you structure the input text data in order to use it with pyarrow?

Under the hood it does

import pyarrow as pa

import pyarrow.csv

# Use csv reader from Pyarrow with one column for text files

# To force the one-column setting, we set an arbitrary character

# that is not in text files as delimiter, such as \b or \v.

# The bell character, \b, was used to make beeps back in the days

parse_options = pa.csv.ParseOptions(

delimiter="\b",

quote_char=False,

double_quote=False,

escape_char=False,

newlines_in_values=False,

ignore_empty_lines=False,

)

read_options= pa.csv.ReadOptions(use_threads=True, column_names=["text"])

pa_table = pa.csv.read_csv("all_train.txt", read_options=read_options, parse_options=parse_options)

Note that we changed the parse options with datasets 1.0

In particular the delimiter used to be \r but this delimiter doesn't work on windows.

Could you try with \a instead of \b ? It looks like the bell character is \a in python and not \b
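A quick way to confirm which escape sequence is which in Python string literals (the variable names here are just for illustration):

```python
# Escape sequences in Python string literals and their code points
bell = "\a"          # BEL, U+0007
backspace = "\b"     # BS,  U+0008
vertical_tab = "\v"  # VT,  U+000B

codes = {
    name: hex(ord(ch))
    for name, ch in [("bell", bell), ("backspace", backspace), ("vertical_tab", vertical_tab)]
}
print(codes)
```

So "\b" in the parse options above is the backspace character, while the bell character is "\a".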

I was just exploring if the crash was happening in every shard or not, and which shards were generating the error message. With \b I got the following list of shards crashing:

Errors on files: ['corpora/shards/shard_0069', 'corpora/shards/shard_0043', 'corpora/shards/shard_0014', 'corpora/shards/shard_0032', 'corpora/shards/shard_0088', 'corpora/shards/shard_0018', 'corpora/shards/shard_0073', 'corpora/shards/shard_0079', 'corpora/shards/shard_0038', 'corpora/shards/shard_0041', 'corpora/shards/shard_0007', 'corpora/shards/shard_0004', 'corpora/shards/shard_0102', 'corpora/shards/shard_0096', 'corpora/shards/shard_0030', 'corpora/shards/shard_0076', 'corpora/shards/shard_0067', 'corpora/shards/shard_0052', 'corpora/shards/shard_0026', 'corpora/shards/shard_0024', 'corpora/shards/shard_0064', 'corpora/shards/shard_0044', 'corpora/shards/shard_0013', 'corpora/shards/shard_0062', 'corpora/shards/shard_0057', 'corpora/shards/shard_0097', 'corpora/shards/shard_0094', 'corpora/shards/shard_0078', 'corpora/shards/shard_0075', 'corpora/shards/shard_0039', 'corpora/shards/shard_0077', 'corpora/shards/shard_0021', 'corpora/shards/shard_0040', 'corpora/shards/shard_0009', 'corpora/shards/shard_0023', 'corpora/shards/shard_0095', 'corpora/shards/shard_0107', 'corpora/shards/shard_0063', 'corpora/shards/shard_0086', 'corpora/shards/shard_0047', 'corpora/shards/shard_0089', 'corpora/shards/shard_0037', 'corpora/shards/shard_0101', 'corpora/shards/shard_0093', 'corpora/shards/shard_0082', 'corpora/shards/shard_0091', 'corpora/shards/shard_0065', 'corpora/shards/shard_0020', 'corpora/shards/shard_0070', 'corpora/shards/shard_0008', 'corpora/shards/shard_0058', 'corpora/shards/shard_0060', 'corpora/shards/shard_0022', 'corpora/shards/shard_0059', 'corpora/shards/shard_0100', 'corpora/shards/shard_0027', 'corpora/shards/shard_0072', 'corpora/shards/shard_0098', 'corpora/shards/shard_0019', 'corpora/shards/shard_0066', 'corpora/shards/shard_0042', 'corpora/shards/shard_0053']

I also tried with \a and the list decreased but there were still several crashes:

Errors on files: ['corpora/shards/shard_0069', 'corpora/shards/shard_0055', 'corpora/shards/shard_0043', 'corpora/shards/shard_0014', 'corpora/shards/shard_0073', 'corpora/shards/shard_0025', 'corpora/shards/shard_0068', 'corpora/shards/shard_0102', 'corpora/shards/shard_0096', 'corpora/shards/shard_0076', 'corpora/shards/shard_0067', 'corpora/shards/shard_0026', 'corpora/shards/shard_0024', 'corpora/shards/shard_0044', 'corpora/shards/shard_0087', 'corpora/shards/shard_0092', 'corpora/shards/shard_0074', 'corpora/shards/shard_0094', 'corpora/shards/shard_0078', 'corpora/shards/shard_0039', 'corpora/shards/shard_0077', 'corpora/shards/shard_0040', 'corpora/shards/shard_0009', 'corpora/shards/shard_0107', 'corpora/shards/shard_0063', 'corpora/shards/shard_0103', 'corpora/shards/shard_0047', 'corpora/shards/shard_0033', 'corpora/shards/shard_0089', 'corpora/shards/shard_0037', 'corpora/shards/shard_0082', 'corpora/shards/shard_0071', 'corpora/shards/shard_0091', 'corpora/shards/shard_0065', 'corpora/shards/shard_0070', 'corpora/shards/shard_0058', 'corpora/shards/shard_0081', 'corpora/shards/shard_0060', 'corpora/shards/shard_0002', 'corpora/shards/shard_0059', 'corpora/shards/shard_0027', 'corpora/shards/shard_0072', 'corpora/shards/shard_0098', 'corpora/shards/shard_0019', 'corpora/shards/shard_0045', 'corpora/shards/shard_0036', 'corpora/shards/shard_0066', 'corpora/shards/shard_0053']

This suggests that the assumption that some unexpected pattern in the files is causing the crashes is quite possibly true. If I reach any conclusion I will post it here asap.

Hmmm I was expecting it to work with \a, not sure why they appear in your text files though

Hi @lhoestq, is there any input length restriction that did not exist before the update of the nlp library?

No we never set any input length restriction on our side (maybe arrow but I don't think so)

@lhoestq Can you ever be certain that a delimiter character is not present in a plain text file? In other formats (e.g. CSV) , rules are set of what is allowed and what isn't so that it actually constitutes a CSV file. In a text file you basically have "anything goes", so I don't think you can ever be entirely sure that the chosen delimiter does not exist in the text file, or am I wrong?

If I understand correctly you choose a delimiter that we hope does not exist in the file, so that when the CSV parser starts splitting into columns, it will only ever create one column? Why can't we use a newline character though?

Okay, I have split the crashing shards into individual sentences, and some examples of the inputs that are causing the crashes are the following ones:

4. DE L’ORGANITZACIÓ ESTAMENTAL A L’ORGANITZACIÓ EN CLASSES A mesura que es desenvolupava un sistema econòmic capitalista i naixia una classe burgesa cada vegada més preparada per a substituir els dirigents de les velles monarquies absolutistes, es qüestionava l’abundància de béns amortitzats, que com s’ha dit estaven fora del mercat i no pagaven tributs, pels perjudicis que ocasionaven a les finances públiques i a l’economia en general. Aquest estat d’opinió revolucionari va desembocar en un conjunt de mesures pràctiques de caràcter liberal. D’una banda, les que intentaven desposseir les mans mortes del domini de béns acumulats, procés que acostumem a denominar desamortització, i que no és més que la nacionalització i venda d’aquests béns eclesiàstics o civils en subhasta pública al millor postor. D’altra banda, les que redimien o reduïen els censos i delmes o aixecaven les prohibicions de venda, és a dir, les vinculacions. La desamortització, que va afectar béns dels ordes religiosos, dels pobles i d’algunes corporacions civils, no va ser un camí fàcil, perquè costava i costa trobar algú que sigui indiferent a la pèrdua de béns, drets i privilegis. I té una gran transcendència, va privar els antics estaments de les Espanyes, clero i pobles —la noblesa en queda al marge—, de la força econòmica que els donaven bona part de les seves terres i, en última instància, va preparar el terreny per a la substitució de la vella societat estamental per la nova societat classista. En aquesta societat, en teoria, les agrupacions socials són obertes, no tenen cap estatut jurídic privilegiat i estan definides per la possessió o no d’uns béns econòmics que són lliurement alienables. A les Espanyes la transformació va afectar poc l’aristocràcia latifundista, allà on n’hi havia. 
Aquesta situació va afavorir, en part, la persistència de la vella cultura de la societat estamental en determinats ambients, i això ha influït decisivament en la manca de democràcia que caracteritza la majoria de règims polítics que s’han anat succeint. Una manera de pensar que sempre sura en un moment o altre, i que de fet no acaba de desaparèixer del tot. 5. INICI DE LA DESAMORTITZACIÓ A LES ESPANYES Durant el segle xviii, dins d’aquesta visió lliberal, va agafar força en alguns cercles de les Espanyes el corrent d’opinió contrari a les mans mortes. Durant el regnat de Carles III, s’arbitraren les primeres mesures desamortitzadores proposades per alguns ministres il·lustrats. Aquestes disposicions foren modestes i poc eficaces, no van aturar l’acumulació de terres per part dels estaments que constituïen les mans mortes i varen afectar principalment béns dels pobles. L’Església no va ser tocada, excepte en el cas de 110

la revolució liberal, perquè, encara que havia perdut els seus drets jurisdiccionals, havia conservat la majoria de terres i fins i tot les havia incrementat amb d’altres que procedien de la desamortització. En la nova situació, les mans mortes del bosc públic eren l’Estat, que no cerca mai l’autofinançament de les despeses de gestió; els diners que manquin ja els posarà l’Estat. 9. DEFENSA I INTENTS DE RECUPERACIÓ DELS BÉNS COMUNALS DESAMORTITZATS El procés de centralització no era senzill, perquè, d’una banda, la nova organització apartava de la gestió moltes corporacions locals i molts veïns que l’havien portada des de l’edat mitjana, i, de l’altra, era difícil de coordinar la nova silvicultura amb moltes pràctiques forestals i drets tradicionals, com la pastura, fer llenya o tallar un arbre aquí i un altre allà quan tenia el gruix suficient, les pràctiques que s’havien fet sempre. Les primeres passes de la nova organització centralitzada varen tenir moltes dificultats en aquells indrets en què els terrenys municipals i comunals tenien un paper important en l’economia local. La desobediència a determinades normes imposades varen prendre formes diferents. Algunes institucions, com, per exemple, la Diputació de Lleida, varen retardar la tramitació d’alguns expedients i varen evitar la venda de béns municipals. Molts pobles permeteren deixar que els veïns continuessin amb les seves pràctiques tradicionals, d’altres varen boicotejar les subhastes d’aprofitaments. L’Estat va reaccionar encomanant a la Guàrdia Civil el compliment de les noves directrius. Imposar el nou règim va costar a l’Administració un grapat d’anys, però de mica en mica, amb molta, molta guarderia i gens de negociació, ho va aconseguir. La nova gestió estatal dels béns municipals va deixar, com hem comentat, molta gent sense uns recursos necessaris per a la supervivència, sobre tot en àrees on predominaven les grans propietats, i on els pagesos sense terra treballaven de jornalers temporers. 
Això va afavorir que, a bona part de les Espanyes, les primeres lluites camperoles de la segona meitat del segle xix defensessin la recuperació dels comunals desamortitzats; per a molts aquella expropiació i venda dirigida pels governs monàrquics era la causa de molta misèria. D’altres, més radicalitzats, varen entendre que l’eliminació de la propietat col·lectiva i la gestió estatal dels boscos no desamortitzats suposava una usurpació pura i dura. En les zones més afectades per la desamortització això va donar lloc a un imaginari centrat en la defensa del comunal. La Segona República va arribar en una conjuntura econòmica de crisi, generada pel crac del 1929. Al camp, aquesta situació va produir una forta caiguda dels preus dels productes agraris i un increment important de l’atur. QUADERNS AGRARIS 42 (juny 2017), p. 105-126

I think that the main difference between the crashing samples and the rest is their length. Therefore, couldn't the length be causing the message errors? I hope with these samples you can identify what is causing the crashes considering that the 0.4.0 nlp library was loading them properly.

So we're using the csv reader to read text files because arrow doesn't have a text reader. To work around this, we treat text files as csv with one column, which means we want to set a delimiter that doesn't appear in text files. Until now I thought that it would do the job but unfortunately it looks like even characters like \a appear in text files.

So we have two options:

\x1b esc or \x18 cancel)

@lhoestq Can you ever be certain that a delimiter character is not present in a plain text file? In other formats (e.g. CSV) , rules are set of what is allowed and what isn't so that it actually constitutes a CSV file. In a text file you basically have "anything goes", so I don't think you can ever be entirely sure that the chosen delimiter does not exist in the text file, or am I wrong?

As long as the text file follows some encoding it wouldn't make sense to have characters such as the bell character. However I agree it can happen.

If I understand correctly you choose a delimiter that we hope does not exist in the file, so that when the CSV parser starts splitting into columns, it will only ever create one column? Why can't we use a newline character though?

Exactly. Arrow doesn't allow the newline character unfortunately.

Okay, I have split the crashing shards into individual sentences, and some examples of the inputs that are causing the crashes are the following ones

Thanks for digging into it !

Characters like \a or \b are not shown when printing the text, so as it is I can't tell if it contains unexpected characters.

Maybe you could open the file in python and check if "\b" in open("path/to/file", "r").read() ?
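For large shards, the same check can be done without loading the whole file at once. The sketch below is an assumption-laden illustration: `find_control_bytes` and the `CANDIDATES` table are hypothetical names, and it assumes UTF-8 (or another ASCII-compatible encoding), where these control characters are always single bytes.

```python
import tempfile
from pathlib import Path

# Printable names for the single-byte characters discussed in this thread
CANDIDATES = {"\\a": b"\a", "\\b": b"\b", "\\v": b"\v", "\\r": b"\r"}


def find_control_bytes(path, chunk_size=1 << 20):
    """Return the names of candidate bytes that occur in the file, reading in chunks."""
    found = set()
    with open(path, "rb") as f:  # binary mode so nothing is translated or hidden
        for chunk in iter(lambda: f.read(chunk_size), b""):
            for name, byte in CANDIDATES.items():
                if byte in chunk:
                    found.add(name)
    return sorted(found)


with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / "shard_0000"
    sample.write_bytes("una frase normal\nuna frase amb \b dins\n".encode("utf-8"))
    hits = find_control_bytes(sample)
```

Running it over every crashing shard would show whether the chosen delimiter really occurs in the data.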

I think that the main difference between the crashing samples and the rest is their length. Therefore, couldn't the length be causing the message errors? I hope with these samples you can identify what is causing the crashes considering that the 0.4.0 nlp library was loading them properly.

To check that you could try to run

import pyarrow as pa

import pyarrow.csv

open("dummy.txt", "w").write((("a" * 10_000) + "\n") * 4) # 4 lines of 10 000 'a'

parse_options = pa.csv.ParseOptions(

delimiter="\b",

quote_char=False,

double_quote=False,

escape_char=False,

newlines_in_values=False,

ignore_empty_lines=False,

)

read_options= pa.csv.ReadOptions(use_threads=True, column_names=["text"])

pa_table = pa.csv.read_csv("dummy.txt", read_options=read_options, parse_options=parse_options)

On my side it runs without error though.

That's true, It was my error printing the text that way. Maybe as a workaround, I can force all my input samples to have "\b" at the end?


I don't think it would work since we only want one column, and "\b" is set to be the delimiter between two columns, so it will raise the same issue again. Pyarrow would think that there is more than one column if the delimiter is found somewhere.
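To illustrate why a stray delimiter produces a second column, here is a small sketch that uses Python's built-in `csv` module as a stand-in for the Arrow CSV reader. That substitution is an assumption made purely for illustration; the two parsers differ in many details, but the column-splitting behaviour is the same idea.

```python
import csv

lines = ["a normal sentence", "a sentence with a stray \b inside"]

# Python's csv module stands in for the Arrow CSV reader here (an assumption
# made for illustration); quoting is disabled, as in the parse options above.
reader = csv.reader(lines, delimiter="\b", quoting=csv.QUOTE_NONE)
column_counts = [len(row) for row in reader]
print(column_counts)
```

The second line is split into two fields, which is the situation a strict one-column reader reports as "Expected 1 columns, got 2".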

Anyway, I'll work on a new text reader if we don't find the right workaround for this delimiter issue.

I just merged a new text reader based on pandas. Could you try again on your data @raruidol ?

Until we do a new release you can experiment with it using

from datasets import load_dataset

d = load_dataset("text", data_files=..., script_version="master")

(script_version is master by default if you installed from source)

Thank you @lhoestq. I have tried again with the new text reader and there is still an error. Depending on how I load the data, I have spotted two different crashes. When I try to load the full-size corpus text file I get the following output:

Traceback (most recent call last):

File "run_language_modeling.py", line 27, in <module>

dataset = load_dataset('text', script_version='master', data_files=['corpora/cc_ca.txt', 'corpora/viquipedia_clean.txt'])['train']

File "/home/jupyter-raruidol/DebatAnalyser/env/lib/python3.7/site-packages/datasets/load.py", line 611, in load_dataset

ignore_verifications=ignore_verifications,

File "/home/jupyter-raruidol/DebatAnalyser/env/lib/python3.7/site-packages/datasets/builder.py", line 471, in download_and_prepare

dl_manager=dl_manager, verify_infos=verify_infos, **download_and_prepare_kwargs

File "/home/jupyter-raruidol/DebatAnalyser/env/lib/python3.7/site-packages/datasets/builder.py", line 548, in _download_and_prepare

self._prepare_split(split_generator, **prepare_split_kwargs)

File "/home/jupyter-raruidol/DebatAnalyser/env/lib/python3.7/site-packages/datasets/builder.py", line 892, in _prepare_split

for key, table in utils.tqdm(generator, unit=" tables", leave=False, disable=not_verbose):

File "/home/jupyter-raruidol/DebatAnalyser/env/lib/python3.7/site-packages/tqdm/std.py", line 1130, in __iter__

for obj in iterable:

File "/home/jupyter-raruidol/.cache/huggingface/modules/datasets_modules/datasets/text/7c0f8af3178a6c694d530e71edd8f1ec1d7311867f8c29955898c29a0624adf5/text.py", line 68, in _generate_tables

for j, df in enumerate(text_file_reader):

File "/home/jupyter-raruidol/DebatAnalyser/env/lib/python3.7/site-packages/pandas/io/parsers.py", line 1161, in __next__

return self.get_chunk()

File "/home/jupyter-raruidol/DebatAnalyser/env/lib/python3.7/site-packages/pandas/io/parsers.py", line 1220, in get_chunk

return self.read(nrows=size)

File "/home/jupyter-raruidol/DebatAnalyser/env/lib/python3.7/site-packages/pandas/io/parsers.py", line 1186, in read

ret = self._engine.read(nrows)

File "/home/jupyter-raruidol/DebatAnalyser/env/lib/python3.7/site-packages/pandas/io/parsers.py", line 2145, in read

data = self._reader.read(nrows)

File "pandas/_libs/parsers.pyx", line 847, in pandas._libs.parsers.TextReader.read

File "pandas/_libs/parsers.pyx", line 874, in pandas._libs.parsers.TextReader._read_low_memory

File "pandas/_libs/parsers.pyx", line 918, in pandas._libs.parsers.TextReader._read_rows

File "pandas/_libs/parsers.pyx", line 905, in pandas._libs.parsers.TextReader._tokenize_rows

File "pandas/_libs/parsers.pyx", line 2042, in pandas._libs.parsers.raise_parser_error

pandas.errors.ParserError: Error tokenizing data. C error: Buffer overflow caught - possible malformed input file.

However, when loading the sharded version, the error is different:

Traceback (most recent call last):

File "run_language_modeling.py", line 27, in <module>

dataset = load_dataset('text', script_version='master', data_files=files)['train']

File "/home/jupyter-raruidol/DebatAnalyser/env/lib/python3.7/site-packages/datasets/load.py", line 611, in load_dataset

ignore_verifications=ignore_verifications,

File "/home/jupyter-raruidol/DebatAnalyser/env/lib/python3.7/site-packages/datasets/builder.py", line 471, in download_and_prepare

dl_manager=dl_manager, verify_infos=verify_infos, **download_and_prepare_kwargs

File "/home/jupyter-raruidol/DebatAnalyser/env/lib/python3.7/site-packages/datasets/builder.py", line 548, in _download_and_prepare

self._prepare_split(split_generator, **prepare_split_kwargs)

File "/home/jupyter-raruidol/DebatAnalyser/env/lib/python3.7/site-packages/datasets/builder.py", line 892, in _prepare_split

for key, table in utils.tqdm(generator, unit=" tables", leave=False, disable=not_verbose):

File "/home/jupyter-raruidol/DebatAnalyser/env/lib/python3.7/site-packages/tqdm/std.py", line 1130, in __iter__

for obj in iterable:

File "/home/jupyter-raruidol/.cache/huggingface/modules/datasets_modules/datasets/text/7c0f8af3178a6c694d530e71edd8f1ec1d7311867f8c29955898c29a0624adf5/text.py", line 68, in _generate_tables

for j, df in enumerate(text_file_reader):

File "/home/jupyter-raruidol/DebatAnalyser/env/lib/python3.7/site-packages/pandas/io/parsers.py", line 1161, in __next__

return self.get_chunk()

File "/home/jupyter-raruidol/DebatAnalyser/env/lib/python3.7/site-packages/pandas/io/parsers.py", line 1220, in get_chunk

return self.read(nrows=size)

File "/home/jupyter-raruidol/DebatAnalyser/env/lib/python3.7/site-packages/pandas/io/parsers.py", line 1186, in read

ret = self._engine.read(nrows)

File "/home/jupyter-raruidol/DebatAnalyser/env/lib/python3.7/site-packages/pandas/io/parsers.py", line 2145, in read

data = self._reader.read(nrows)

File "pandas/_libs/parsers.pyx", line 847, in pandas._libs.parsers.TextReader.read

File "pandas/_libs/parsers.pyx", line 874, in pandas._libs.parsers.TextReader._read_low_memory

File "pandas/_libs/parsers.pyx", line 918, in pandas._libs.parsers.TextReader._read_rows

File "pandas/_libs/parsers.pyx", line 905, in pandas._libs.parsers.TextReader._tokenize_rows

File "pandas/_libs/parsers.pyx", line 2042, in pandas._libs.parsers.raise_parser_error

pandas.errors.ParserError: Error tokenizing data. C error: EOF inside string starting at row 254729

I had the same error but managed to fix it by adding lineterminator='\n' to the pd.read_csv() call in text.py.

Have a look at Stackoverflow

EDIT: I tested it with my 1.5 TB dataset. With the lineterminator added, it works fine!

Indeed, good catch! I'm going to add the lineterminator and add tests that include the \r character. According to the Stack Overflow thread, this issue occurs when there is a \r in the text file.

You can expect a patch release by tomorrow.

Setting lineterminator='\n' works on my side but it keeps the \r at the end of each line (they're not stripped).

I guess it's the same for you @Skyy93 ?

Also, I'm not able to reproduce your issue on macOS with files containing \r\n as line endings.
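To illustrate the behaviour described above (splitting only on \n leaves a trailing \r on lines that end in \r\n), here is a minimal sketch using only the standard library. It is not the code from text.py or from the PR; the helper name read_lines and its strip_cr flag are made up for this example.

```python
def read_lines(path, strip_cr=False, encoding="utf-8"):
    """Read a text file, treating only '\n' as the line terminator.

    Hypothetical helper for illustration; not part of the datasets library.
    """
    lines = []
    # newline="\n" tells open() to end lines on '\n' only, so a lone '\r'
    # inside a line is kept and never starts a new row.
    with open(path, encoding=encoding, newline="\n") as f:
        for line in f:
            if line.endswith("\n"):
                line = line[:-1]  # drop the terminator itself
            if strip_cr and line.endswith("\r"):
                line = line[:-1]  # drop the '\r' left over from '\r\n'
            lines.append(line)
    return lines
```

With strip_cr=False the trailing \r of a \r\n line stays in the returned string, which matches what was observed with lineterminator='\n'. With strip_cr=True only a trailing \r is removed; a \r in the middle of a line is left alone.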

I found a way to implement it without third party lib and without separator/delimiter logic. Creating a PR now.

I'd love to have your feedback on the PR @Skyy93 , hopefully this is the final iteration of the text dataset :)

Let me know if it works on your side !

Until there's a new release you can test it with

from datasets import load_dataset

d = load_dataset("text", data_files=..., script_version="master")

Looks good! Thank you for your support.

The same problem happens with "csv".

from datasets import load_dataset

dataset = load_dataset("csv", data_files="custom_data.csv", delimiter="\t", column_names=["title", "text"], script_version="master")

ArrowInvalid: CSV parse error: Expected 1 columns, got 2
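One way to narrow down a column-count error like this is to check how many tab-separated fields each line of the file actually has. The following is a rough sketch using only the standard library; the helper name column_count_histogram is hypothetical, and Python's csv module is not how datasets or Arrow parses the file, so the counts are only a hint.

```python
import csv
from collections import Counter


def column_count_histogram(path, delimiter="\t", encoding="utf-8"):
    """Count how many rows have 1, 2, 3, ... fields.

    Hypothetical diagnostic helper; not part of the datasets library.
    """
    counts = Counter()
    # newline="" is what the csv module documentation recommends for files
    # passed to csv.reader.
    with open(path, encoding=encoding, newline="") as f:
        for row in csv.reader(f, delimiter=delimiter):
            counts[len(row)] += 1
    return counts
```

If the result has more than one key, some rows have a different number of fields than others, which is worth checking before opening a bug report.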


Could you open a new issue for CSV, please? The text and CSV datasets used to share some similarities in the way files are loaded and parsed, but that is no longer the case since 1.1.2. Hopefully we can find fixes more quickly now for such errors :) Also, if you can provide a Google Colab to reproduce the issue, that would be awesome!

@lhoestq I've opened a new issue #743 and added a Colab. When you have some free time, could you please look into it? I would appreciate it :D. Thank you.

I think this issue (loading text files) is now solved.

Closing it. Please open a new issue with full details to continue or start another discussion on this topic.

Trying the following snippet, I get different problems on Linux and Windows.

(PS: this example shows that you can use a string as input for data_files, but the signature is Union[Dict, List].)

The problem on Linux is that the script crashes with a CSV error (even though it isn't a CSV file). On Windows the script just seems to freeze or get stuck after loading the config file.

Linux stack trace:

Windows just seems to get stuck. Even with a tiny dataset of 10 lines, it has been stuck for 15 minutes already at this message: