these authors reckon it's better to train on an unmasked text embeddings (even though that risks learning from PAD token embeddings):

https://github.com/huggingface/diffusers/issues/1890#issuecomment-1597564866

as for inference: the user needs to be able to match whatever approach was used during training.

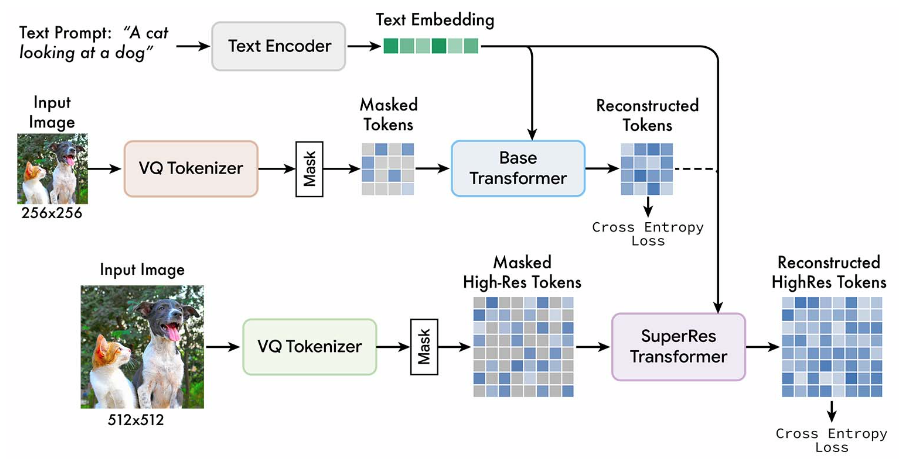

I thought Muse was a bit wackier though. it actually masks vision tokens:

Like in Stable Diffusion, no attention mask appears to be used for input tokens: https://github.com/huggingface/open-muse/blob/2a0365707c0c3d91b0b91bc456161db693652ff1/muse/pipeline_muse.py#L93-L101

But according to third party analysis this appears to have been a mistake all along. Do we have insight on whether attention masks would help for better prompt-image alignment?