Hello!

Thanks for reporting this issue! Did you try converting your H5 file with the following command?

    tensorflowjs_converter --input_format keras path/to/my_model.h5 path/to/tfjs_target_dir

You can also get a SavedModel version with:

    from transformers import TFAutoModel

    model = TFAutoModel.from_pretrained("distilbert-base-uncased")
    model.save_pretrained(path, saved_model=True)

The H5 and SavedModel conversion processes are nicely explained in https://www.tensorflow.org/js/tutorials/conversion/import_keras and https://www.tensorflow.org/js/tutorials/conversion/import_saved_model, respectively.
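As a rough illustration of the two conversion routes mentioned above, here is a minimal Python sketch that assembles the tensorflowjs_converter command line for either input format. The helper names `build_converter_command` and `convert` are hypothetical and are not part of transformers or tensorflowjs; only the `--input_format` values `keras` and `tf_saved_model` come from the converter itself.

```python
import shutil
import subprocess


def build_converter_command(source, target_dir, input_format="keras"):
    """Return the tensorflowjs_converter argument list (hypothetical helper)."""
    # Only the two input formats discussed in this thread are accepted here.
    if input_format not in ("keras", "tf_saved_model"):
        raise ValueError(f"unsupported input format: {input_format}")
    return [
        "tensorflowjs_converter",
        "--input_format", input_format,
        str(source),
        str(target_dir),
    ]


def convert(source, target_dir, input_format="keras"):
    """Run the converter, assuming the tensorflowjs pip package is installed."""
    command = build_converter_command(source, target_dir, input_format)
    if shutil.which(command[0]) is None:
        raise RuntimeError("tensorflowjs_converter was not found on PATH")
    # check=True raises CalledProcessError if the conversion fails.
    subprocess.run(command, check=True)
```

Calling `convert("path/to/my_model.h5", "path/to/tfjs_target_dir")` would run the H5 route; passing `input_format="tf_saved_model"` together with a SavedModel directory would run the other one.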

Environment info

transformers version: 4.3.3

Who can help

Information

Model I am using (Bert, XLNet ...): Any of the TensorFlow ones

The problem arises when using:

The tasks I am working on is:

To reproduce

Steps to reproduce the behavior:

    from transformers import TFAutoModel

    model = TFAutoModel.from_pretrained("distilbert-base-uncased")
    model.save_pretrained(path)

    UnhandledPromiseRejectionWarning: TypeError: Cannot read property 'model_config' of null

Expected behavior

Be able to load a model in the LayersModel format, as it is the only one that allows fine-tuning.

More information

I know the question was already posted in https://github.com/huggingface/transformers/issues/4073

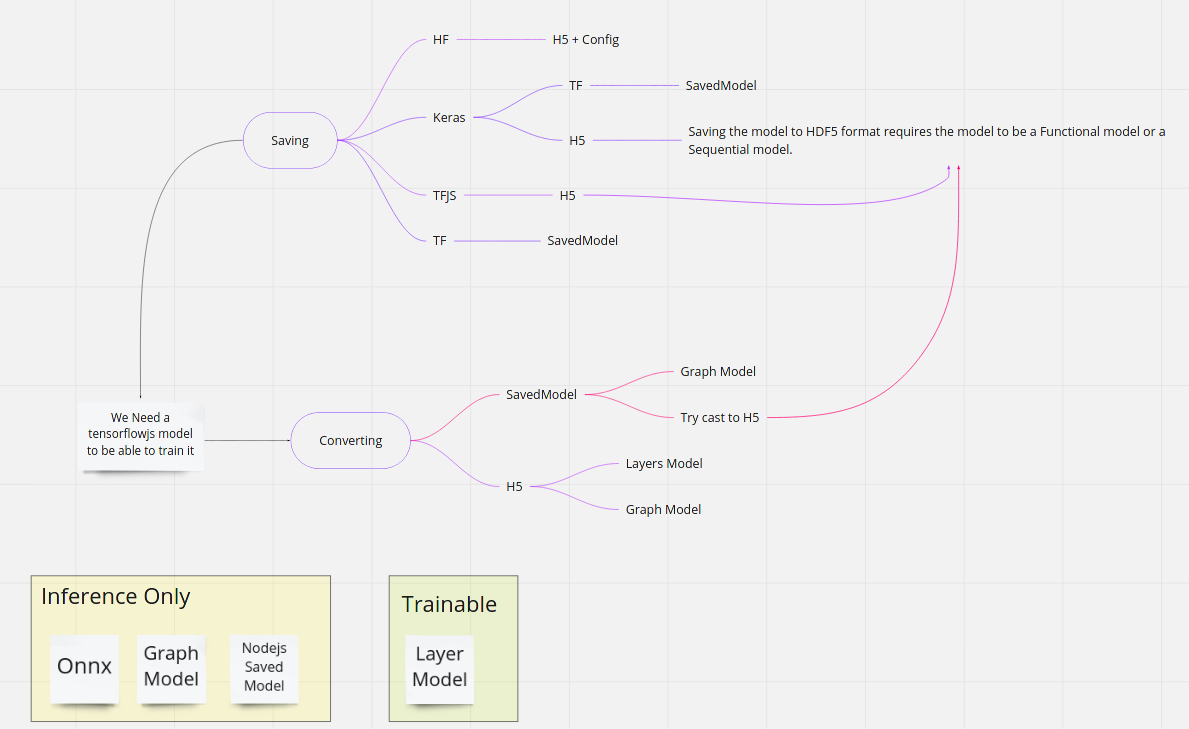

I need to do fine-tuning, so ONNX, GraphModels, and the like should be avoided.

It seems that the H5 model just needs the config file, which appears to be saved alongside it by the custom HF script. I read some issues on tensorflowjs (for example, this or that), and the problem is that the HF model contains only the weights and not the architecture. The goal would be to adapt the save_pretrained function to save the architecture as well. I guess this is complex because of the "Saving the model to HDF5 format requires the model to be a Functional model or a Sequential model." error described below. It also seems that only an H5 model can be converted to a trainable LayersModel.
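To check the weights-only diagnosis on a given file, a small Python sketch like the one below can list the root attributes of an H5 file. It assumes h5py is installed and relies on the Keras convention that a full-model H5 file stores its architecture in a root attribute named model_config, while a weights-only file does not. The helper names `has_architecture` and `read_root_attrs` are hypothetical, and whether a missing attribute is the exact cause of the error above is an assumption.

```python
def has_architecture(root_attrs):
    """Return True if the root attributes include a Keras model_config entry."""
    return "model_config" in root_attrs


def read_root_attrs(h5_path):
    """Return the names of the root-level attributes of an HDF5 file."""
    # Imported lazily so has_architecture stays usable without h5py.
    import h5py

    with h5py.File(h5_path, "r") as h5_file:
        return set(h5_file.attrs.keys())
```

For example, `has_architecture(read_root_attrs("tf_model.h5"))` would be expected to return False for a weights-only checkpoint; the file name here is only a placeholder.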

I'm willing to work on a PR or to help as i'm working on a web stack (nodejs) and I need this.

I made a drawing of all the model formats (that I'm aware of) to summarize how they are loaded and converted:

I also tried:

Using the Node.js tf.node.loadSavedModel, which returns only a SavedModel that I cannot use as the base structure of a new model.

Looking for other libraries to train models (libtorch: incomplete; ONNX training: Python only; etc.).

Should I also open an issue on tensorflowjs?

Thanks in advance for your time, and have a great day.