This means you're encoding a sequence that is longer than the maximum sequence length the model can handle (512 tokens). It is a warning, not an error, but if you pass that sequence to the model it will crash, because the model cannot handle such a long sequence.

You can either truncate the sequence yourself, `seq = seq[:512]`, or use the tokenizer's `max_length` parameter so that it handles the truncation on its own.
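As a rough sketch of the manual route: a plain `seq[:512]` slice also cuts off the closing `[SEP]` token when the sequence was encoded with special tokens. The helper below is hypothetical (`truncate_ids` is not part of any library) and assumes the ids 101 and 102 for `[CLS]` and `[SEP]`, as in the `bert-base-uncased` vocabulary; check your own tokenizer before relying on them. The input list is a stand-in for real tokenizer output.

```python
from typing import List

MAX_LEN = 512  # maximum sequence length BERT accepts, per the warning message
SEP_ID = 102   # assumed [SEP] id (bert-base-uncased); verify for your tokenizer


def truncate_ids(ids: List[int], max_len: int = MAX_LEN, sep_id: int = SEP_ID) -> List[int]:
    """Cut ids down to max_len, keeping a trailing separator if one was present."""
    if len(ids) <= max_len:
        return list(ids)
    truncated = list(ids[:max_len])
    if ids[-1] == sep_id:
        # Overwrite the last kept position so the sequence still ends with [SEP].
        truncated[-1] = sep_id
    return truncated


# Stand-in for an encoded text: [CLS] + 1015 word-piece ids + [SEP] = 1017 ids,
# the same length as in the warning from the question.
ids = [101] + [2000] * 1015 + [102]
short = truncate_ids(ids)
print(len(ids), "->", len(short))
```

If you let the tokenizer truncate through its `max_length` parameter instead, it takes care of the special tokens for you, so this helper is only relevant when slicing by hand.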

❓ Questions & Help

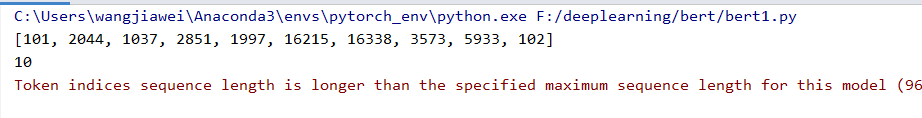

When I use BERT, I get the message "Token indices sequence length is longer than the specified maximum sequence length for this model (1017 > 512)". How can I solve this error?