I had this problem today, too. I created a new container as my new GPU environment, but I cannot load any pretrained model because of this error. The same loading code runs normally in my old environment and downloads the pretrained model.

Closed manueltonneau closed 4 years ago

Hi @mananeau, when I look on the website and click on "show all files" for your model, it only lists the configuration and vocabulary. Have you uploaded the model file?

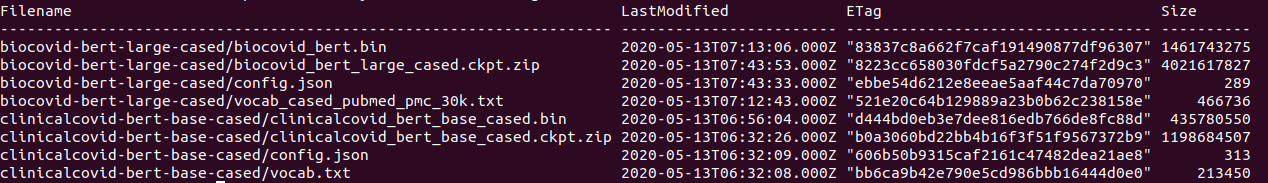

I believe I did. It can be found under this link. Also, when running `transformers-cli s3 ls`, I get this output:

I have this problem too, and I do have all my files

To work around this problem, I switched to a pip install from the transformers 2.8 repository that had already been downloaded in my old environment.

With that, downloading and loading any pretrained weights works normally.

I don't know why, but it works.

> I switched to a pip install from the transformers 2.8 repository that had already been downloaded in my old environment.

I cannot confirm this on my end. Tried with transformers==2.8.0 and still getting the same error.

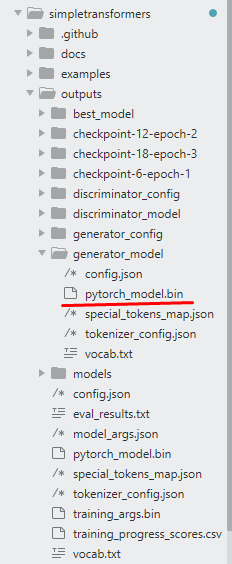

@mananeau We could make it clearer/more validated, but the upload CLI is meant to be used only for models/tokenizers saved using the `.save_pretrained()` method.

In particular here, your model file should be named `pytorch_model.bin`.
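A quick way to check a folder before uploading is to confirm that it contains the file names mentioned in this thread. This is only a minimal sketch: it assumes the folder should hold `config.json` and `pytorch_model.bin`, and the helper `missing_model_files` is hypothetical, not part of transformers.

```python
from pathlib import Path

# File names taken from this thread; adjust them if your model uses others.
REQUIRED_FILES = ("config.json", "pytorch_model.bin")


def missing_model_files(model_dir, required=REQUIRED_FILES):
    """Return the required file names that are absent from model_dir."""
    folder = Path(model_dir)
    return [name for name in required if not (folder / name).is_file()]
```

An empty list means every expected file name is present; a weights file saved under another name would show up as a missing `pytorch_model.bin`.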

> To work around this problem, I switched to a pip install from the transformers 2.8 repository that had already been downloaded in my old environment.
>
> With that, downloading and loading any pretrained weights works normally.
>
> I don't know why, but it works.

This worked for me too

hi !!

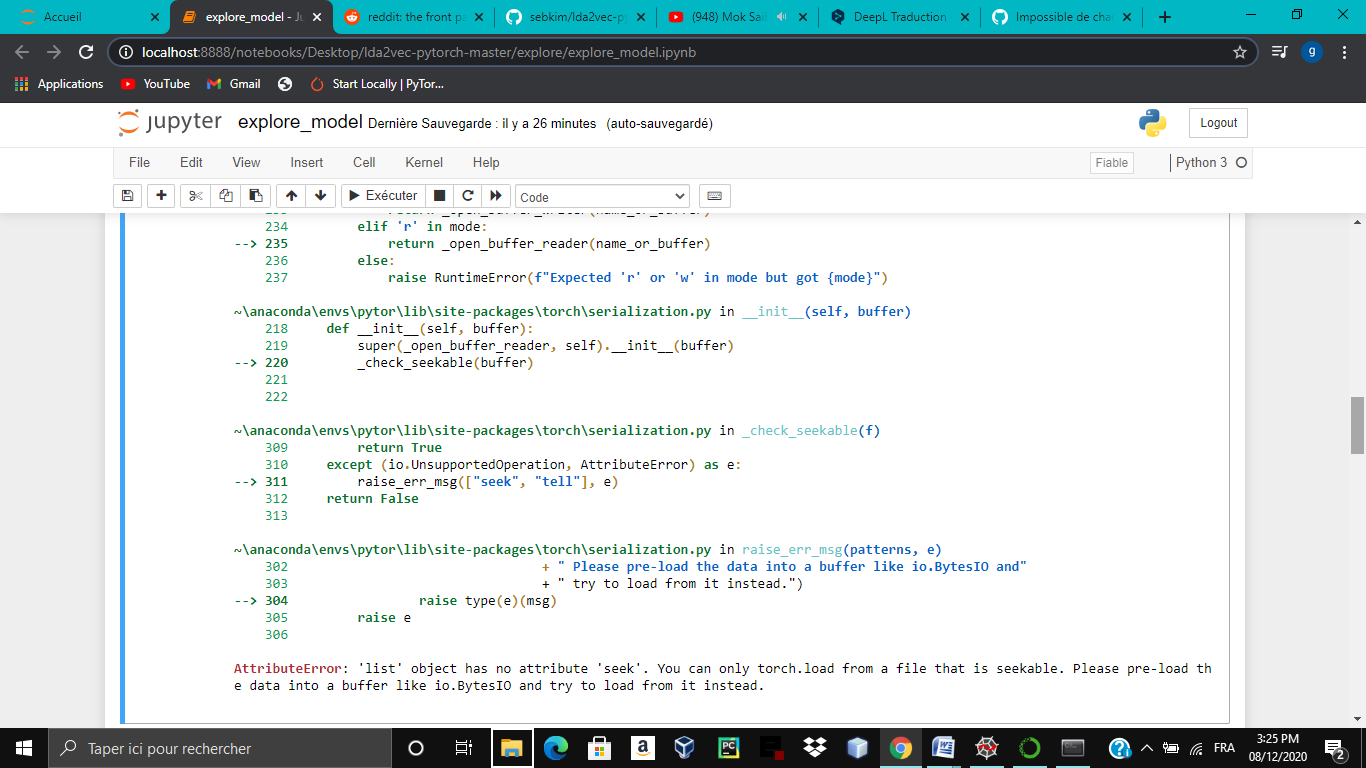

When I try to run explore_model.ipynb from https://github.com/sebkim/lda2vec-pytorch, the following error occurs.

How can I resolve it? Could someone help me, please?

@fathia-ghribi this is unrelated to the issue here. Please open a new issue and fill out the issue template so that we may help you. On a second note, your error does not seem to be related to this library.

I had this problem when I trained the model with torch==1.6.0 and tried to load the model with 1.3.1. The issue was fixed by upgrading to 1.6.0 in my environment where I'm loading the model.
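A likely explanation for this version mismatch is that `torch.save` switched to a zip-based file format by default in torch 1.6, which older releases cannot read. The sketch below checks which format a checkpoint uses, relying only on the standard library; the helper name `uses_zip_format` is hypothetical.

```python
import zipfile


def uses_zip_format(checkpoint_path):
    """Return True if the file is a zip archive, as torch.save writes by default since torch 1.6."""
    # is_zipfile also returns False for empty or unreadable files.
    return zipfile.is_zipfile(checkpoint_path)
```

If this returns True and the loading environment runs a torch release older than 1.6, upgrading torch there (as described above) is the straightforward fix.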

I had the same error with torch==1.8.1 and simpletransformers==0.61.4.

Downgrading torch or simpletransformers didn't work for me, because the issue was caused by the model file not being downloaded properly.

I solved it by cloning my model locally with git, or by uploading the model files to Google Drive and pointing the model to that directory:

```python
from simpletransformers.t5 import T5Model

# model_args is defined elsewhere (not shown in the original comment)
model = T5Model("mt5", "/content/drive/MyDrive/dataset/outputs",
                args=model_args, use_cuda=False, from_tf=False, force_download=True)
```

> To work around this problem, I switched to a pip install from the transformers 2.8 repository that had already been downloaded in my old environment.
>
> With that, downloading and loading any pretrained weights works normally.
>
> I don't know why, but it works.

thank youuuuuu

I had the same problem with:

sentence-transformers 2.2.0

transformers 4.17.0

torch 1.8.1

torchvision 0.4.2

Python 3.7.6

I solved it by upgrading torch with `pip install --upgrade torch torchvision`. It now works with:

sentence-transformers 2.2.0

transformers 4.17.0

torch 1.10.2

torchvision 0.11.3

Python 3.7.6

In my case, something went wrong while moving the files, so the `pytorch_model.bin` file existed but its size was 0 bytes. After replacing it with the correct file, the error went away.
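A truncated copy like this can be spotted by looking for zero-byte files in the model folder. The following is a small sketch using only the standard library; the helper name `empty_files` is hypothetical.

```python
from pathlib import Path


def empty_files(model_dir):
    """Return the sorted names of zero-byte regular files directly inside model_dir."""
    return sorted(
        path.name
        for path in Path(model_dir).iterdir()
        if path.is_file() and path.stat().st_size == 0
    )
```

If `pytorch_model.bin` appears in the result, the file needs to be copied or downloaded again before loading.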

Just delete the corrupted cached files and rerun your code; it will work.

Yes, it works for me

I had to downgrade from torch 2.0.1 to 1.13.1.

does it work? I had the same problem

Yes, it works. I deleted all the .cache files, then downloaded the model again, and the error was gone.
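Clearing the cache can be scripted. This is a cautious sketch, not an official tool: the cache location depends on the installed version (for example `~/.cache/torch/transformers` in older releases and `~/.cache/huggingface` in newer ones, so check your own setup), and the helper `clear_cache` is hypothetical. It defaults to a dry run that only lists what would be deleted.

```python
import shutil
from pathlib import Path


def clear_cache(cache_dir, dry_run=True):
    """List the entries in cache_dir and, unless dry_run is True, delete them."""
    root = Path(cache_dir).expanduser()
    if not root.is_dir():
        return []
    entries = sorted(root.iterdir())
    if not dry_run:
        for entry in entries:
            if entry.is_dir() and not entry.is_symlink():
                shutil.rmtree(entry)  # remove a cached sub-directory
            else:
                entry.unlink()  # remove a cached file or symlink
    return [entry.name for entry in entries]
```

Run it once with the default `dry_run=True` to review the list, then again with `dry_run=False`. Passing `force_download=True` when loading, as in the snippet earlier in this thread, is another way to bypass a stale cached copy.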

🐛 Bug

Information

I uploaded two models this morning using the `transformers-cli`. The models can be found on my huggingface page. The folder I uploaded for both models contained a PyTorch model in bin format, a zip file containing the three TF model files, the `config.json` and the `vocab.txt`. The PT model was created from TF checkpoints using this code. I'm able to download the tokenizer using:

```python
tokenizer = AutoTokenizer.from_pretrained("mananeau/clinicalcovid-bert-base-cased")
```

Yet, when trying to download the model using:

```python
model = AutoModel.from_pretrained("mananeau/clinicalcovid-bert-base-cased")
```

I am getting the following error:

```
AttributeError                            Traceback (most recent call last)
~/anaconda3/lib/python3.7/site-packages/torch/serialization.py in _check_seekable(f)
    226     try:
--> 227         f.seek(f.tell())
    228         return True

AttributeError: 'NoneType' object has no attribute 'seek'

During handling of the above exception, another exception occurred:

AttributeError                            Traceback (most recent call last)
~/anaconda3/lib/python3.7/site-packages/transformers/modeling_utils.py in from_pretrained(cls, pretrained_model_name_or_path, *model_args, **kwargs)
    625             try:
--> 626                 state_dict = torch.load(resolved_archive_file, map_location="cpu")
    627             except Exception:

~/anaconda3/lib/python3.7/site-packages/torch/serialization.py in load(f, map_location, pickle_module, pickle_load_args)
    425         pickle_load_args['encoding'] = 'utf-8'
--> 426     return _load(f, map_location, pickle_module, pickle_load_args)
    427     finally:

~/anaconda3/lib/python3.7/site-packages/torch/serialization.py in _load(f, map_location, pickle_module, **pickle_load_args)
    587
--> 588     _check_seekable(f)
    589     f_should_read_directly = _should_read_directly(f)

~/anaconda3/lib/python3.7/site-packages/torch/serialization.py in _check_seekable(f)
    229     except (io.UnsupportedOperation, AttributeError) as e:
--> 230         raise_err_msg(["seek", "tell"], e)
    231

~/anaconda3/lib/python3.7/site-packages/torch/serialization.py in raise_err_msg(patterns, e)
    222                     " try to load from it instead.")
--> 223                 raise type(e)(msg)
    224         raise e

AttributeError: 'NoneType' object has no attribute 'seek'. You can only torch.load from a file that is seekable. Please pre-load the data into a buffer like io.BytesIO and try to load from it instead.

During handling of the above exception, another exception occurred:

OSError                                   Traceback (most recent call last)
```