Hey, issues here have been re-enabled and will be used for bug reports and feature requests going forward.

We are currently clearing out all existing issues from 2017 and earlier. If your bug report or feature request is still relevant, please create a new issue (or use one of the other channels; see https://hydrusnetwork.github.io/hydrus/).

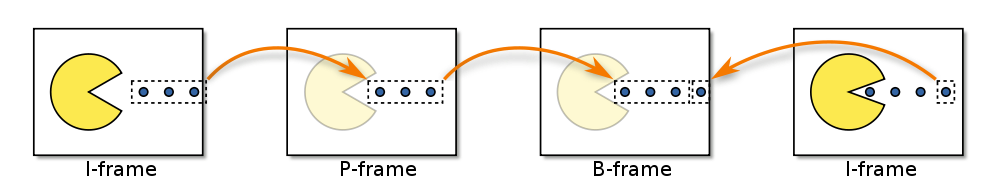

8s (1 i-frame)

I think that's what he does for GIFs already, so I'd say yes.