Also, with the elasticity suite, I sometimes have HYPRE_BoomerAMGSetup return error code 12 = 0b1100. I'm not sure how to translate this.

#define HYPRE_ERROR_GENERIC 1 /* generic error */

#define HYPRE_ERROR_MEMORY 2 /* unable to allocate memory */

#define HYPRE_ERROR_ARG 4 /* argument error */

/* bits 4-8 are reserved for the index of the argument error */

#define HYPRE_ERROR_CONV 256 /* method did not converge as expected */

Most recently, this was for a problem with less than 100k dofs after two successful Newton solves.

0 SNES Function norm 3.435982086055e-03

Linear solve converged due to CONVERGED_RTOL iterations 62

Iteratively computed extreme singular values: max 0.99973 min 0.00244952 max/min 408.133

1 SNES Function norm 1.619585499176e-02

Linear solve converged due to CONVERGED_RTOL iterations 30

Iteratively computed extreme singular values: max 0.999418 min 0.0104634 max/min 95.5159

2 SNES Function norm 7.427067557071e-04

[0]PETSC ERROR: --------------------- Error Message --------------------------------------------------------------

[0]PETSC ERROR: Error in external library

[0]PETSC ERROR: Error in jac->setup(): error code 12
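
For reference, here is a tentative decoding of error code 12 against the defines quoted earlier. It is a sketch, not hypre's own code: the helpers `has_flag`, `arg_index`, and `describe_error` are hypothetical names, and the layout of the argument index (5 bits starting at 0-based bit 3, reading the "bits 4-8" comment as 1-based) is an assumption.

```c
#include <stdio.h>

/* Error bits copied from the hypre defines quoted in this post. */
#define HYPRE_ERROR_GENERIC 1
#define HYPRE_ERROR_MEMORY  2
#define HYPRE_ERROR_ARG     4
#define HYPRE_ERROR_CONV    256

/* ASSUMPTION: the argument index occupies 5 bits starting at 0-based bit 3,
   which is how "bits 4-8" reads when bits are counted from 1. */
#define ARG_INDEX_SHIFT 3
#define ARG_INDEX_MASK  31

/* Hypothetical helper: nonzero if the given error bit is set in code. */
static int has_flag(int code, int flag)
{
    return (code & flag) != 0;
}

/* Hypothetical helper: extract the argument index field from code. */
static int arg_index(int code)
{
    return (code >> ARG_INDEX_SHIFT) & ARG_INDEX_MASK;
}

/* Hypothetical helper: print which bits are set in an error code. */
static void describe_error(int code)
{
    printf("code %d:", code);
    if (has_flag(code, HYPRE_ERROR_GENERIC)) printf(" GENERIC");
    if (has_flag(code, HYPRE_ERROR_MEMORY))  printf(" MEMORY");
    if (has_flag(code, HYPRE_ERROR_ARG))     printf(" ARG(index %d)", arg_index(code));
    if (has_flag(code, HYPRE_ERROR_CONV))    printf(" CONV");
    printf("\n");
}
```

Under that assumption, 12 = 4 | (1 << 3), so only HYPRE_ERROR_ARG is set and the argument index field is 1. That still does not tell me which internal call flagged the argument.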

I'm trying to tune a BoomerAMG configuration (via PETSc) to run on HIP. I'm using hypre-2.24 and rocm-5.0.2. I believe I'm matching the MFEM elasticity configuration, as explained in this paper. I have two issues: the iteration counts and condition numbers are higher than expected, and the setup time is huge compared to the default configuration.

Default options

Just HYPRE_BoomerAMGSetNumFunctions to 3 and HYPRE_BoomerAMGSetInterpVectors to the rigid body modes.

Iteration counts above are a bit higher than GAMG. Condition numbers for GAMG stay in the range 17 to 28 throughout this solve, but GAMG setup time is enough higher that Hypre has a slight edge depending on node and job launch configuration (but less robustness as seen in the CG condition number estimates).
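
To be concrete about what "rigid body modes" means here, the following is a minimal sketch of the six modes for a 3D nodal coordinate array with interleaved dofs. The function name `rigid_body_modes` and the storage layout are illustrative assumptions, not the PETSc or hypre code path, and the sketch says nothing about which subset of the modes should be handed to HYPRE_BoomerAMGSetInterpVectors.

```c
#include <stddef.h>

/* Hypothetical helper: fill the six rigid body modes of a 3D point cloud.
   coords: n nodes, interleaved as (x0, y0, z0, x1, y1, z1, ...).
   modes:  six vectors stored back to back, each of length 3*n with
           interleaved (ux, uy, uz) per node.
   Modes 0-2 are unit translations; modes 3-5 are rotations about the
   x, y, and z axes, i.e. u = e_k x r for each axis e_k. */
static void rigid_body_modes(size_t n, const double *coords, double *modes)
{
    for (size_t i = 0; i < n; i++) {
        const double x = coords[3 * i];
        const double y = coords[3 * i + 1];
        const double z = coords[3 * i + 2];
        const double u[6][3] = {
            {1.0, 0.0, 0.0}, /* translation in x */
            {0.0, 1.0, 0.0}, /* translation in y */
            {0.0, 0.0, 1.0}, /* translation in z */
            {0.0, -z, y},    /* rotation about x */
            {z, 0.0, -x},    /* rotation about y */
            {-y, x, 0.0},    /* rotation about z */
        };
        for (size_t m = 0; m < 6; m++) {
            for (size_t d = 0; d < 3; d++) {
                modes[m * 3 * n + 3 * i + d] = u[m][d];
            }
        }
    }
}
```

The translations are constant per component, so they are already represented once the solver knows there are 3 functions per node; the rotations are the part that depends on the coordinates.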

Elasticity suite

(This is the coarse problem of a matrix-free p-MG. The p-MG is very reliable. I can show the reduced, but this is what I care about and it's qualitatively the same for the linear discretization.)

Setup time is over 100x longer than seen above. I ran a much smaller problem because I'm impatient, and even so the iteration counts and condition numbers are much higher.

Question

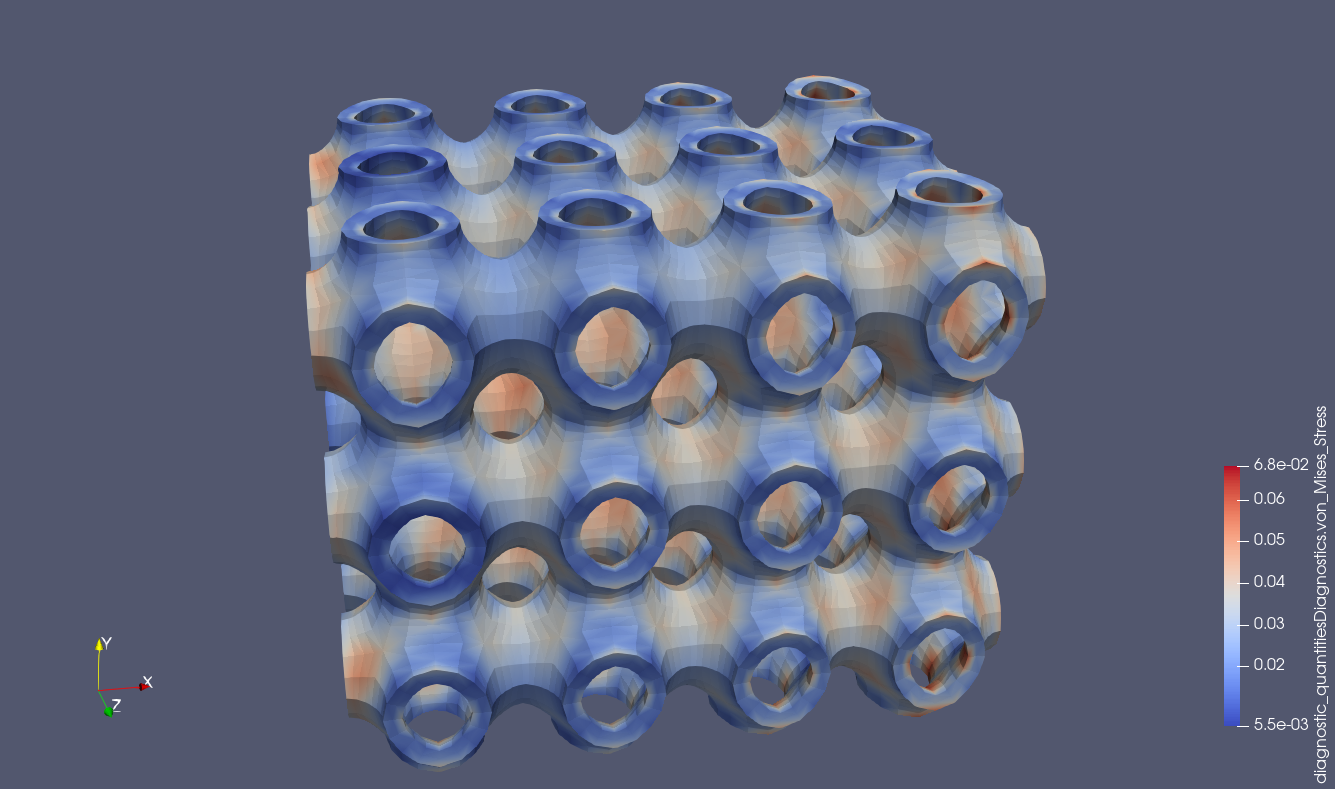

If it helps, my test models are scaled up variants of this sort of structure.