I feel a little confused. I found that gram matrix loss mainly affects the performance of anime scenes. I don't know if most people will like it.

some hard examples:

Also, I'm concerned that the demo of comparing [D, R] RIFE to this project may be misleading: In order to improve performance, Practical RIFE invests in more training data, computing power, data augmentation and lots of tricks. InterpAny-Clearer doesn't seem to be published currently and I'm worried about putting pressure on the authors. I'm interested in trying to incorporate InterpAny-Clearer's technology, but it will take many experiments.

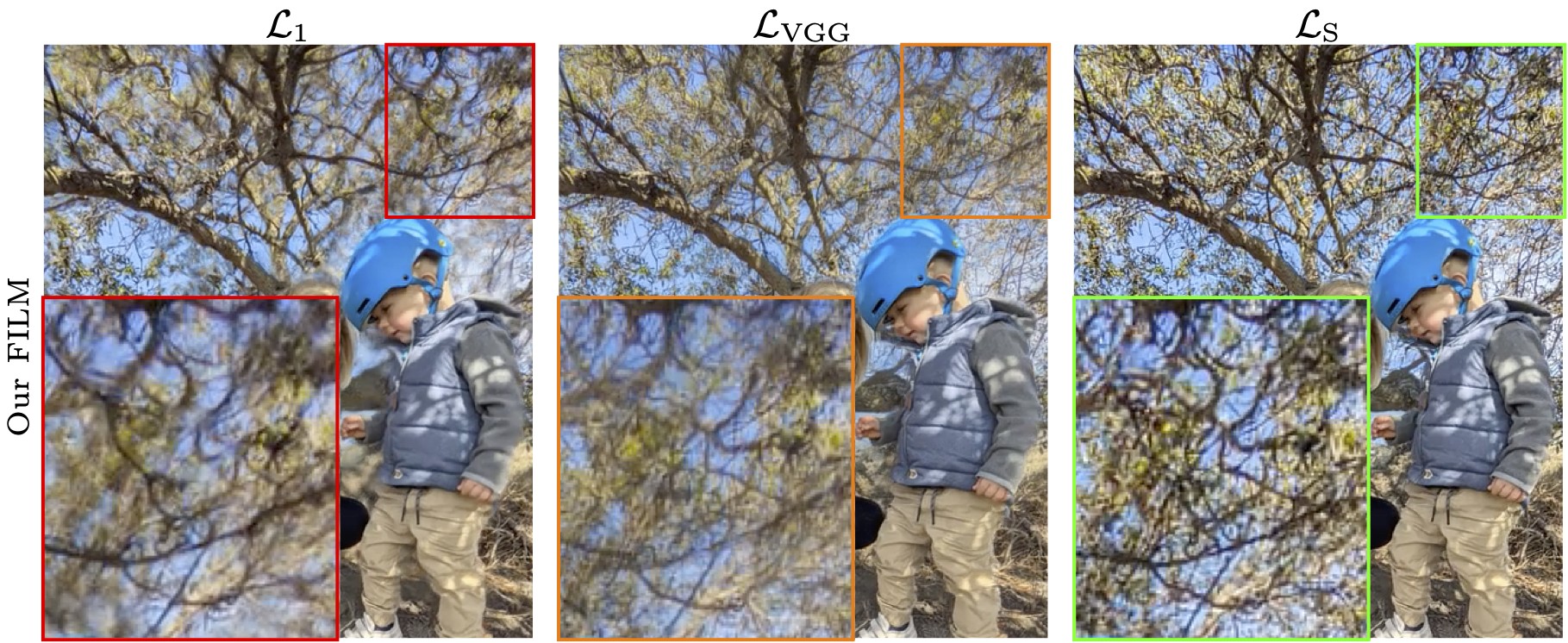

Source: FILM - Loss Functions Ablation

Source: FILM - Loss Functions Ablation

I would like to thank you very much for continually adding new, practical RIFE models. For this last one I am particularly grateful to you. Firstly because you are open to the work of others that can improve the already awesome RIFE. Secondly, because I was very interested in adding just this loss function to RIFE, which I requested from the developer InterpAny-Clearer here: Request for [D,R] RIFE trained on Style loss, also called Gram matrix loss (the best perceptual loss function)

As you have applied gram matrix loss to RIFE v4.17 training, I would like to ask you to conduct x128 frame interpolation tests of RIFE v4.15 and v4.17 for the files I0_0.png and I0_1.png and also I1_0.png and I1_1.png available here: https://github.com/zzh-tech/InterpAny-Clearer/tree/main/demo and posting their results as GIF files in the Practical-RIFE repository or here. There will be a total of 4 GIF files.

I think this test will be of interest to any Practical-RIFE enthusiast and will answer an important question: can gram matrix loss really improve the performance of practical RIFE models? And, in particular, whether it eliminates or at least mitigates to some extent the undesirable distortions occurring with VGG loss, which can be seen particularly clearly in the lower example for the column: [D,R] RIFE-vgg (Ours) in the table available at this link: https://github.com/zzh-tech/InterpAny-Clearer?tab=readme-ov-file#time-indexing-vs-distance-indexing

This test will not only compare the differences in the loss function for version 4.15 and version 4.17 of RIFE, but will also give an answer to the question of whether the application of the InterpAny-Clearer enhancement will further improve the clarity of frame interpolation for practical RIFE models, just as it improves it for the base RIFE model, as shown in the table to which I have provided a link above.

We will have a total comparison of 5 different versions of RIFE - 3 already available:

[T] RIFE - RIFE base model [D,R] RIFE - RIFE base model with full InterpAny-Clearer upgrade [D,R] RIFE-vgg - RIFE base model with VGG loss and full InterpAny-Clearer upgrade

and 2 versions of the practical RIFE models, which I ask you to compare here:

RIFE v4.15 with standard perceptual loss RIFE v4.17 with gram matrix loss

This comparison will hopefully give evidence to justify the use of gram matrix loss in all future RIFE practical models. Also, I hope it will demonstrate the need for the use of InterpAny-Clearer in the next RIFE v4.18 model.

I would also like to use these GIF files of 5 different versions of RIFE (of course with links to the sources of the comparisons) in the introduction to my rankings Video Frame Interpolation Rankings and Video Deblurring Rankings, where I would like to encourage other researchers to use the best and above all proven solutions for practical applications when developing new models.

I hope that the next GIF file will already be for RIFE v4.18 with gram matrix loss and the InterpAny-Clearer enhancement. The clarity of the frame interpolation is more important than the super resolution, especially when new monitors already reach 500Hz and the original frames can be seen on the screen very briefly and most of the time the interpolated frames are visible.