Open iammiori opened 5 years ago

Only the layer-stacking part is shown here. code#1

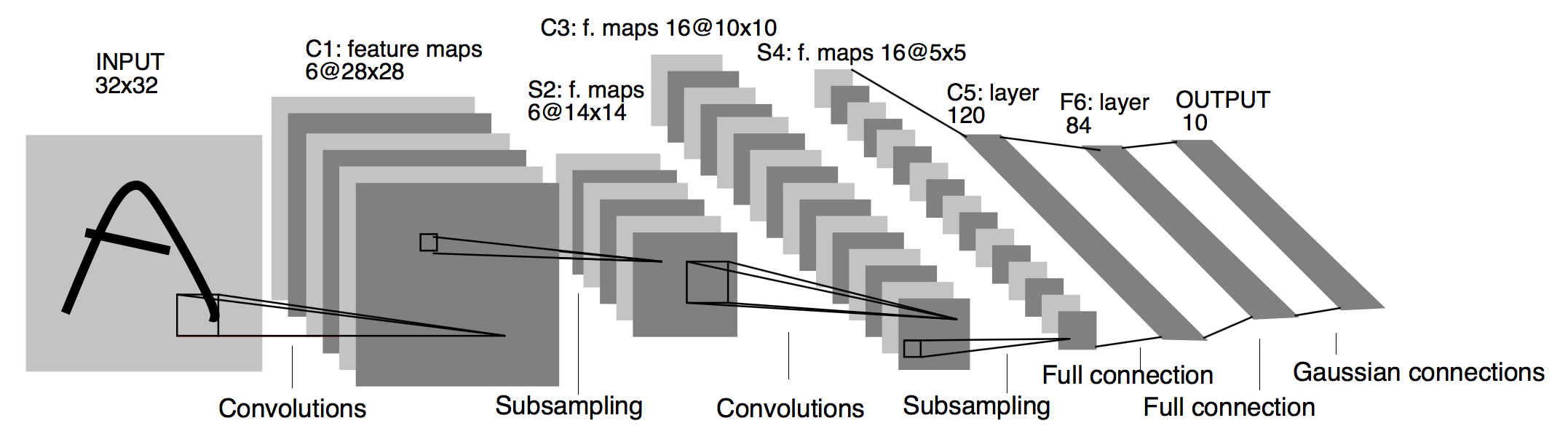

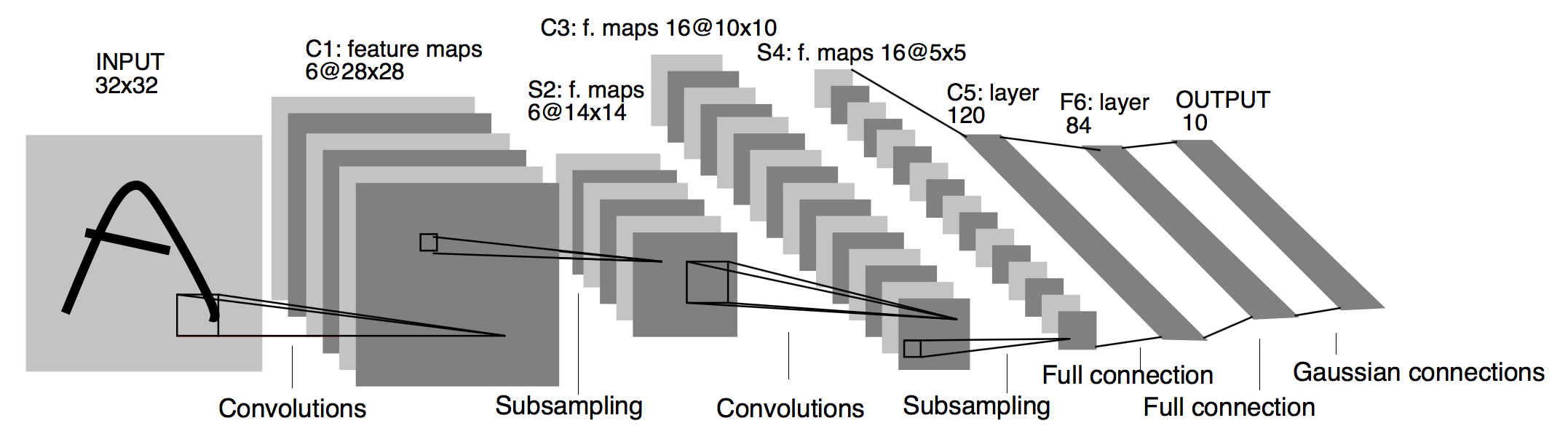

# Layer 1 : Convolution

# Input = 32x32x1.

# Output = 28x28x6

# Let the filter width be x. With stride 1 and 'VALID' padding:

# 32 - x + 1 = 28

# so x = 5

conv1_w = tf.Variable(tf.random_normal(shape = [5,5,1,6], stddev = 0.01))

conv1 = tf.nn.conv2d(X,conv1_w, strides = [1,1,1,1], padding = 'VALID')

conv1 = tf.nn.relu(conv1)

# Subsampling (pooling): 2x2 max pool with stride 2.

# Input = 28x28x6.

# Output = 14x14x6.

pool_1 = tf.nn.max_pool(conv1,ksize = [1,2,2,1], strides = [1,2,2,1], padding = 'VALID')
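The size arithmetic in the comments above (32 - x + 1 = 28, then 28 down to 14 after pooling) follows the usual rule for 'VALID' padding. A minimal plain-Python sketch of that rule; `valid_output_size` is a hypothetical helper name used only for illustration, not a TensorFlow function:

```python
def valid_output_size(input_size, window_size, stride=1):
    """Spatial output size of a conv/pool layer with 'VALID' (no) padding."""
    if window_size > input_size:
        raise ValueError("window is larger than the input")
    return (input_size - window_size) // stride + 1


# Layer 1 convolution: 5x5 filter, stride 1, on a 32x32 input.
conv1_out = valid_output_size(32, 5, stride=1)
# Layer 1 pooling: 2x2 window, stride 2.
pool1_out = valid_output_size(conv1_out, 2, stride=2)

print(conv1_out, pool1_out)
```

The same helper applied to the second stage (14 with a 5x5 filter, then a 2x2 pool) gives the 10 and 5 used in the Layer 2 comments.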

#---------------------------------------------------

# Layer 2 : Convolution

# Input = 14x14x6.

# Output = 10x10x16

conv2_w = tf.Variable(tf.random_normal(shape = [5,5,6,16], stddev = 0.01))

conv2 = tf.nn.conv2d(pool_1, conv2_w, strides = [1,1,1,1], padding = 'VALID')

conv2 = tf.nn.relu(conv2)

# Subsampling (pooling): 2x2 max pool with stride 2.

# Input = 10x10x16.

# Output = 5x5x16 (= 400 values once flattened).

pool_2 = tf.nn.max_pool(conv2, ksize = [1,2,2,1], strides = [1,2,2,1], padding = 'VALID')

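# flatten is not defined in this excerpt; in TF 1.x it is presumably tensorflow.contrib.layers.flatten.

fc1 = flatten(pool_2)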
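The flatten step collapses every axis except the batch axis, so each 5x5x16 feature map becomes a vector of 400 values. A plain-Python sketch of that reshaping on nested lists; `flatten_batch` is a hypothetical stand-in for illustration and not the TensorFlow function itself:

```python
def flatten_batch(batch):
    """Flatten each [height][width][channels] example into one flat list."""
    return [
        [value for row in example for pixel in row for value in pixel]
        for example in batch
    ]


# Dummy batch of 2 examples shaped 5x5x16, the same shape as pool_2 above.
batch = [[[[0.0] * 16 for _ in range(5)] for _ in range(5)] for _ in range(2)]
flat = flatten_batch(batch)

print(len(flat), len(flat[0]))
```

The second printed number is the per-example length, which has to match the first dimension of `fc1_w`.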

#-------------------------------------------------------------

# Layer 3: Fully Connected.

# Input = 400.

# Output = 120.

fc1_w = tf.Variable(tf.random_normal(shape = (400,120), stddev = 0.01))

fc1 = tf.matmul(fc1,fc1_w)

fc1 = tf.nn.relu(fc1)

# Layer 4: Fully Connected.

# Input = 120.

# Output = 84.

fc2_w = tf.Variable(tf.random_normal(shape = (120,84), stddev = 0.01))

fc2 = tf.matmul(fc1,fc2_w)

fc2 = tf.nn.relu(fc2)

# Layer 5: Fully Connected.

# Input = 84.

# Output = 10.

fc3_w = tf.Variable(tf.random_normal(shape = (84,10), stddev = 0.01))

logits = tf.matmul(fc2, fc3_w)
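As a sanity check on the shapes used above, the number of trainable weights can be counted directly from the five weight shapes. A small sketch; it assumes, as in the code above, that the layers have no bias terms:

```python
from math import prod

# Weight shapes copied from the tf.Variable definitions above.
weight_shapes = {
    "conv1_w": (5, 5, 1, 6),
    "conv2_w": (5, 5, 6, 16),
    "fc1_w": (400, 120),
    "fc2_w": (120, 84),
    "fc3_w": (84, 10),
}

param_counts = {name: prod(shape) for name, shape in weight_shapes.items()}
total_params = sum(param_counts.values())

print(param_counts)
print(total_params)
```

Most of the weights sit in `fc1_w`, the first fully connected layer after flattening.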

# define cost/loss & optimizer

cost = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=logits, labels=Y))

optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate).minimize(cost)
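To make the cost concrete: it is the mean over the batch of the cross-entropy between the labels and the softmax of the logits. A plain-Python sketch of that computation, assuming one-hot labels in `Y`; the function names here are hypothetical and this is not TensorFlow's implementation:

```python
import math


def softmax_cross_entropy(logits, labels):
    """Cross-entropy between the labels and softmax(logits) for one example."""
    # Subtract the max logit before exponentiating for numerical stability.
    max_logit = max(logits)
    log_sum_exp = max_logit + math.log(sum(math.exp(z - max_logit) for z in logits))
    log_probs = [z - log_sum_exp for z in logits]
    return -sum(y * lp for y, lp in zip(labels, log_probs))


def mean_cost(batch_logits, batch_labels):
    """Average the per-example losses, like tf.reduce_mean over the batch."""
    losses = [softmax_cross_entropy(l, y) for l, y in zip(batch_logits, batch_labels)]
    return sum(losses) / len(losses)


# With all-zero logits over 10 classes the softmax is uniform,
# so the loss is ln(10) regardless of which class is correct.
uniform_logits = [0.0] * 10
one_hot = [1.0] + [0.0] * 9
cost = mean_cost([uniform_logits], [one_hot])
print(cost)
```

Because the weights above are initialized with stddev = 0.01, the initial logits are close to zero, so the first reported cost should be near ln(10), roughly 2.30.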