Thank you for the feedback. The dataset was generated automatically from the HTML provided by the journals and the table images that we recovered from the articles. In some cases the HTML may not fully represent the table image. The number of such cases should be small.

On Tue, Sep 8, 2020 at 4:02 AM JaMe76 notifications@github.com wrote:

Hi,

Thank you for providing that amazing dataset, which is undoubtedly one of the best, if not the best, for this subject.

When converting the HTML into a kind of tile structure, I sometimes ran into issues that might be problematic:

I assume that, for each row, the sum of all column spans is the same, where a cell without a colspan attribute counts as having a column span of one. The same holds for each column, so no cells can overlap. However, when tiling the table with its cells, taking their positions and spans into account, I found that some HTML strings either allow cells to overlap or give some rows more columns than others.
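A minimal sketch of such a tiling check, assuming plain HTML with `<tr>`, `<td>`/`<th>` and standard `rowspan`/`colspan` attributes and no nested tables. The names `SpanParser` and `check_tiling` are hypothetical and not part of PubTabNet, and this is not necessarily the procedure used for the counts reported in this thread.

```python
from html.parser import HTMLParser


class SpanParser(HTMLParser):
    """Collect (rowspan, colspan) for every <td>/<th>, grouped by <tr>."""

    def __init__(self):
        super().__init__()
        self.rows = []

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self.rows.append([])
        elif tag in ("td", "th") and self.rows:
            a = dict(attrs)
            # A missing span attribute counts as a span of one.
            rs = int(a.get("rowspan") or 1)
            cs = int(a.get("colspan") or 1)
            self.rows[-1].append((rs, cs))


def check_tiling(html):
    """Tile the table grid and return a list of (problem, location) tuples."""
    parser = SpanParser()
    parser.feed(html)
    occupied = {}  # (row, col) -> top-left slot of the cell covering it
    problems = []
    for r, cells in enumerate(parser.rows):
        c = 0
        for rs, cs in cells:
            # Skip slots already covered by a rowspan from an earlier row.
            while (r, c) in occupied:
                c += 1
            for dr in range(rs):
                for dc in range(cs):
                    slot = (r + dr, c + dc)
                    if slot in occupied:
                        problems.append(("overlap", slot))
                    occupied[slot] = (r, c)
            c += cs
    # Every row should cover the same number of grid columns.
    n_cols = max((col for _, col in occupied), default=-1) + 1
    for r in range(len(parser.rows)):
        filled = sum(1 for col in range(n_cols) if (r, col) in occupied)
        if filled != n_cols:
            problems.append(("ragged_row", r))
    return problems


if __name__ == "__main__":
    sample = (
        "<table><tr><td>a</td><td rowspan='2'>b</td></tr>"
        "<tr><td colspan='2'>c</td></tr></table>"
    )
    print(check_tiling(sample))
```

An empty result means the cells tile a rectangular grid exactly; an `overlap` entry names a grid slot claimed by two cells, and a `ragged_row` entry names a row that covers fewer columns than the widest row.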

I checked the tiling for the first 2000 tables of the training set and found 14 instances with that peculiarity. Below are four examples in which I highlighted what is, in my view, the problematic cell annotation within the HTML string.

Thanks

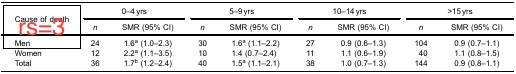

NB. rs and cs correspond to rowspan and colspan, respectively.

PMC3900820_003_00: https://user-images.githubusercontent.com/50324223/92409705-cea04680-f141-11ea-959d-a72ff839a2bd.jpg

PMC3900788_002_00: https://user-images.githubusercontent.com/50324223/92409712-d3fd9100-f141-11ea-844c-f325aee67cc1.jpg

PMC4723163_004_00: https://user-images.githubusercontent.com/50324223/92409715-d829ae80-f141-11ea-86b7-692d123a3e92.jpg

PMC3230392_003_00: https://user-images.githubusercontent.com/50324223/92409717-dbbd3580-f141-11ea-91fa-a793169daa92.jpg

