Thanks for the feature request. We will look into the activation function and consider adding it to our framework.

digantamisra98 opened this issue 5 years ago (status: open)


@Menooker are you still open to including Mish in the framework? It was recently added to PyTorch - https://github.com/pytorch/pytorch/pull/58940

@digantamisra98 Are you working on specific use cases for this on BigDL? FYI, we already support running distributed PyTorch on Spark in Analytics Zoo (e.g., see https://analytics-zoo.readthedocs.io/en/latest/doc/Orca/QuickStart/orca-pytorch-quickstart.html).

Mish is a novel activation function proposed in this paper. It has shown promising results so far and has been adopted in several packages, including:

All benchmarks, analyses, and links to official package implementations can be found in this repository.

It would be nice to have Mish as an option within the activation function group.
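For reference, Mish is defined as `mish(x) = x * tanh(softplus(x))`, where `softplus(x) = ln(1 + e^x)`. Below is a minimal pure-Python sketch of the function, not BigDL's API. The names `softplus` and `mish` are hypothetical helpers chosen for illustration. Softplus is computed in a numerically stable form so that large inputs do not overflow `math.exp`.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable softplus: ln(1 + e^x)."""
    # For x > 0, rewrite ln(1 + e^x) as x + ln(1 + e^-x) so exp never overflows.
    if x > 0:
        return x + math.log1p(math.exp(-x))
    # For x <= 0, e^x is at most 1, so the direct form is safe.
    return math.log1p(math.exp(x))


def mish(x: float) -> float:
    """Mish activation (hypothetical helper): x * tanh(softplus(x))."""
    return x * math.tanh(softplus(x))


if __name__ == "__main__":
    for v in (-5.0, -1.0, 0.0, 1.0, 5.0):
        print(f"mish({v}) = {mish(v):.6f}")
```

Like ReLU, Mish is close to the identity for large positive inputs and tends to 0 for large negative inputs. Unlike ReLU, it is smooth and non-monotonic, dipping slightly below zero for moderately negative inputs.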

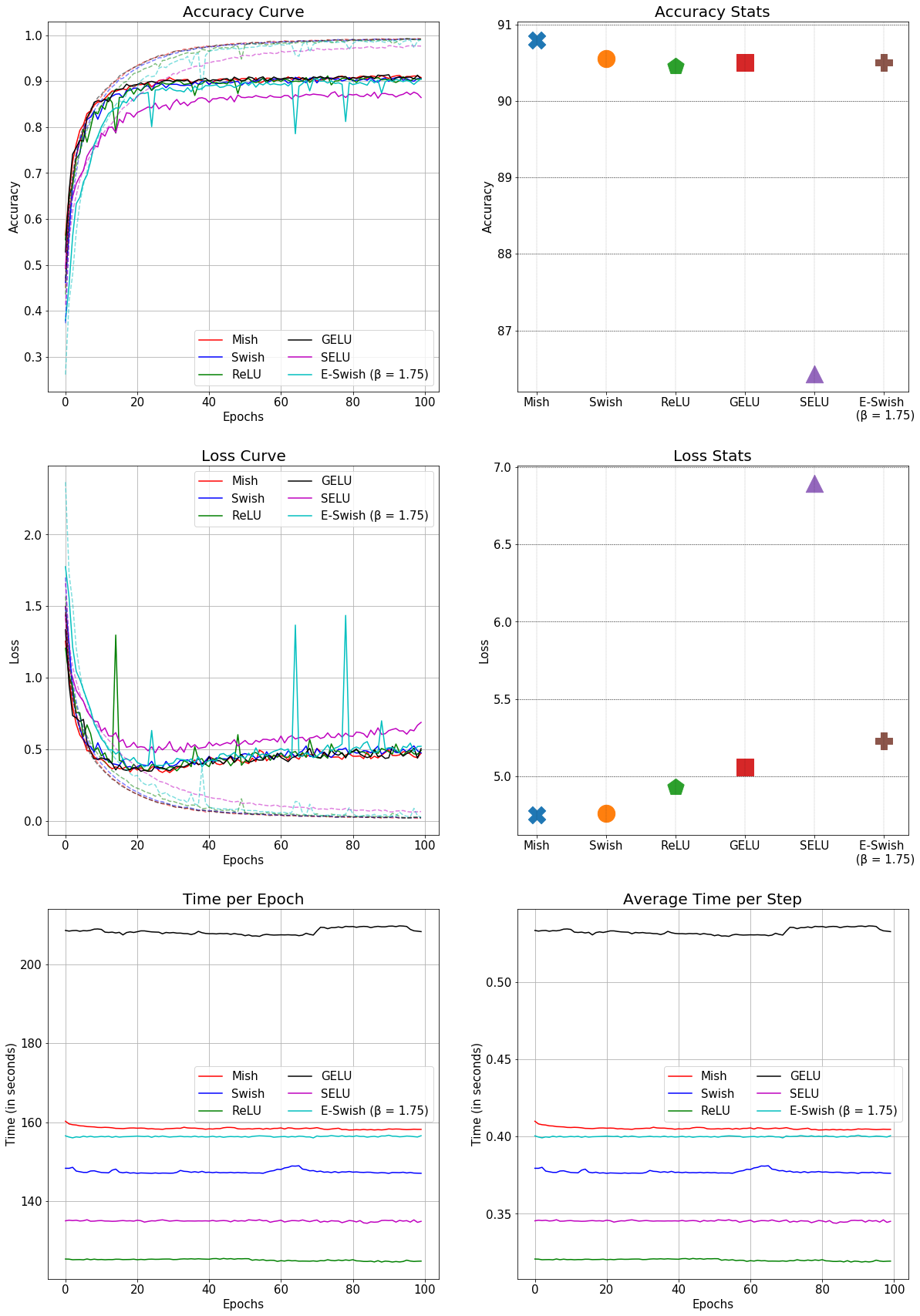

This is a comparison of Mish with other conventional activation functions in an SEResNet-50 on CIFAR-10. Mish achieves better accuracy than GELU and is also faster.