Hi @Serafadam ,

- Yes, this is something we want to add to rtabmap_ros; it could be useful for offloading feature extraction to the cameras.

- In general, VIO can work with only one camera and an IMU (like on phones). Adding a second camera (stereo) helps to better estimate the scale of the tracked features. The cameras can be either monochrome or RGB; that doesn't really matter. What matters is to get the largest FOV, a global shutter, hardware sync between the shutters of the cameras (in the stereo case), and time synchronization between image stamps and IMU stamps (in the visual-inertial odometry case). If a TOF camera is used to get the depth (RGB-D case), the problem is that it is often not synchronized with the RGB camera. However, if VIO is computed from RGB+IMU without the TOF, we can interpolate the pose of the TOF camera to correctly reproject the point cloud into the RGB frame (similar to what we did with Google Tango). A TOF camera can generate more accurate point clouds (e.g., Azure Kinect, Google Tango, or iPhone/iPad LiDAR), though its range is limited, particularly outdoors in sunlight. If depth is computed from stereo cameras and another RGB camera is used (using stereo just to get depth, and estimating VO with RGB-D), ideally the three cameras would need to be hardware synchronized to estimate the trajectory as well as with a stereo camera. I guess the advantage of this setup is that we could use an IR projector to improve depth estimation with the IR stereo camera, while still tracking features with the RGB camera (which won't see the static IR pattern).
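
To make the pose interpolation idea a bit more concrete, here is a minimal sketch of how a pose could be estimated at the stamp of an unsynchronized depth (TOF) frame from the two VIO poses that bracket it. This is not the RTAB-Map implementation: the function names `slerp` and `interpolate_pose`, the `(x, y, z, w)` quaternion order, and the example stamps are assumptions made for illustration only.

```python
import numpy as np


def slerp(q0, q1, t):
    """Spherical linear interpolation between two unit quaternions (x, y, z, w)."""
    q0 = np.asarray(q0, dtype=float)
    q1 = np.asarray(q1, dtype=float)
    q0 = q0 / np.linalg.norm(q0)
    q1 = q1 / np.linalg.norm(q1)
    dot = float(np.dot(q0, q1))
    if dot < 0.0:
        # q and -q encode the same rotation; flip to follow the shorter arc.
        q1, dot = -q1, -dot
    if dot > 0.9995:
        # Nearly identical orientations: normalized linear interpolation is stable.
        q = q0 + t * (q1 - q0)
        return q / np.linalg.norm(q)
    theta = np.arccos(dot)
    return (np.sin((1.0 - t) * theta) * q0 + np.sin(t * theta) * q1) / np.sin(theta)


def interpolate_pose(stamp, stamp0, pose0, stamp1, pose1):
    """Interpolate a (position, quaternion) pose at `stamp`, with stamp0 <= stamp <= stamp1."""
    if stamp0 == stamp1 or not (stamp0 <= stamp <= stamp1):
        raise ValueError("stamp must lie between two distinct bracketing stamps")
    t = (stamp - stamp0) / (stamp1 - stamp0)
    p0, q0 = pose0
    p1, q1 = pose1
    # Position: linear interpolation. Orientation: spherical interpolation.
    position = (1.0 - t) * np.asarray(p0, dtype=float) + t * np.asarray(p1, dtype=float)
    return position, slerp(q0, q1, t)


# Hypothetical example: VIO poses at 0.00 s and 0.10 s, depth frame stamped at 0.05 s.
half = np.sqrt(0.5)
pose_a = ([0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 1.0])    # identity orientation
pose_b = ([0.1, 0.0, 0.0], [0.0, 0.0, half, half])  # 90 degrees about z
position, orientation = interpolate_pose(0.05, 0.0, pose_a, 0.1, pose_b)
print(position, orientation)
```

The interpolated pose would then be used to transform the depth points before projecting them into the RGB frame. Linear interpolation assumes roughly constant velocity between the two poses, which is more plausible the higher the VIO rate is.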

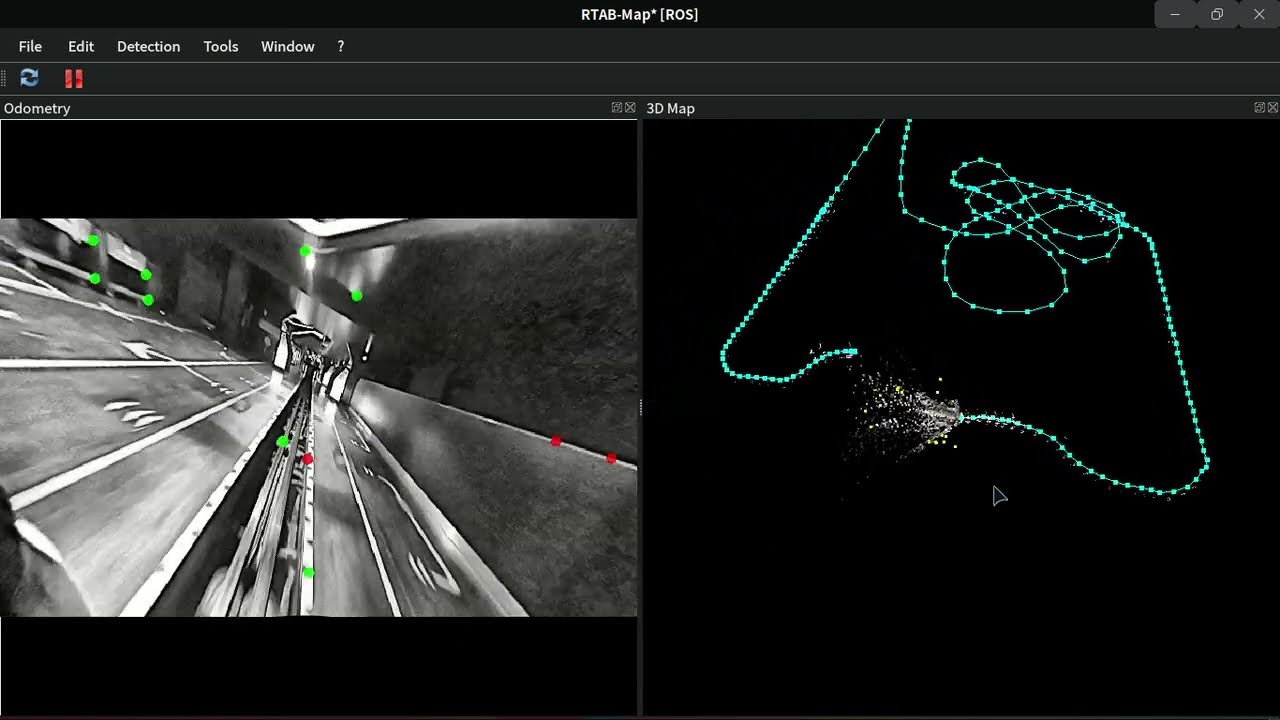

Current state of depthai/rtabmap integration

Standalone library

With rtabmap standalone, the left and right IR/monochrome cameras are used by default. We chose not to expose an option for the RGB camera because its FOV is smaller, it has a rolling shutter, and its frames are not hardware synchronized with the monochrome cameras. This is based on the original OAK-D camera (the only one I own). If you have newer cameras with a global-shutter RGB sensor, a larger FOV, and synchronization with the left/right monochrome cameras, we could look into adding more options to use the RGB stream.

Nevertheless, the currently exposed options can be seen in this panel:

- We can enable depth computation on the edge

- Thanks to @borongyuan, we recently added feature extraction (GFTT) on the edge, and even support for an experimental version of SuperPoint running on the edge

ROS

- For ROS1, we provide a stereo example and an RGB-D example from the command line.

- For ROS2, we could add depthai examples here, similar to the different variations of the D435i examples: https://github.com/introlab/rtabmap_ros/tree/ros2/rtabmap_examples/launch

It could be interesting to make a ROS example using features extracted on the camera, but rtabmap_ros currently lacks the interface to feed them to the rtabmap library.

Hi, one of the developers from Luxonis here :slightly_smiling_face: We'd be happy to discuss what improvements could be made to running RTABMap together with DepthAI-based devices to provide a better out-of-the-box experience, since we've noticed that many users are choosing this approach.

Currently, there are two options for running RTABMap with OAK: one is available in the core RTABMap library and uses OAKs in Stereo mode, and the other is an example launch file inside depthai-ros that's currently set up to use RGBD mode with some basic parameters. Some initial thoughts to start off:

- depthai_ros_driver has the option to be reconfigured at runtime to provide different types of streams, or to change resolution or other sensor parameters. I was wondering if that's something that could be useful in the mapping scenario.

- I would also appreciate comments on the current state of the integration and what could be further improved. Also, do you have access to OAK devices for testing?