Your code works fine for me. What exactly is the problem you're having?

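On an unrelated note, putting your `perf_buffer_poll()` call at the end of your program will cause it to drop events if you run it for long enough: nothing consumes the perf buffer while your loop is running, so it eventually fills up. You want to poll the perf buffer inside your `while` loop so that events are consumed as they arrive.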
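To illustrate why the placement matters, here is a toy Python model of a fixed-size buffer that drops new events once it is full. It is not the BCC API: `ToyPerfBuffer`, `submit`, `poll`, and `run` are hypothetical names, and the real perf ring buffer is per-CPU and sized in pages, not events. In the actual tool, the change is simply to call `b.perf_buffer_poll()` inside the `while` loop instead of once after it.

```python
"""Toy model of a fixed-size perf buffer (hypothetical, NOT the BCC API).

It only models the behaviour that matters here: once the buffer is full,
new events are dropped until user space consumes what is already there.
"""


class ToyPerfBuffer:
    def __init__(self, capacity, callback):
        self.capacity = capacity  # max events held before drops start
        self.callback = callback  # called once per consumed event
        self.pending = []         # events written but not yet consumed
        self.lost = 0             # events dropped because the buffer was full

    def submit(self, event):
        """Producer side (the BPF program): drop the event if the buffer is full."""
        if len(self.pending) >= self.capacity:
            self.lost += 1
            return False
        self.pending.append(event)
        return True

    def poll(self):
        """Consumer side (user space): drain everything currently buffered."""
        drained, self.pending = self.pending, []
        for event in drained:
            self.callback(event)


def run(total_events, capacity, poll_in_loop):
    """Return (events consumed, events lost) for one simulated run."""
    seen = []
    buf = ToyPerfBuffer(capacity, seen.append)
    for i in range(total_events):  # stands in for the tool's `while` loop
        buf.submit(i)
        if poll_in_loop:
            buf.poll()             # consume events as they arrive
    buf.poll()                     # the single poll "at the end of the program"
    return len(seen), buf.lost


if __name__ == "__main__":
    print(run(1000, 64, poll_in_loop=False))  # (64, 936)
    print(run(1000, 64, poll_in_loop=True))   # (1000, 0)
```

In this model the consumer keeps up perfectly; in a real system a burst of events can still overflow the buffer between polls, but polling in the loop at least keeps it draining continuously.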

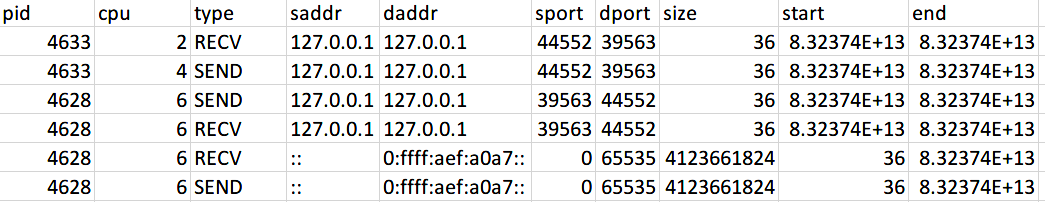

I'm writing a tool to record every TCP event. However, only IPv4 events are resolved properly; IPv6 events are not. Here is a sample result:

Here is my tool's implementation. Is anything wrong with it? IPv6 events are recorded correctly if I record them at the `tcp_sendmsg`/`tcp_recvmsg` entry probes without using a `BPF_TABLE`. However, to record the end time of each event I also need the return kprobes, which is why the `BPF_TABLE` is used.
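The entry/return design described above usually works like this: the entry probe saves what the return probe will need (argument registers are not reliable inside a return probe), and the return probe looks it up, completes the record, and deletes the entry. Below is a toy Python model of that pairing, an analogy rather than BCC code. The names `in_flight`, `on_entry`, and `on_return` are hypothetical, `sock` stands in for the `struct sock *` argument, and keying by thread id (as `bpf_get_current_pid_tgid()` would in a BPF hash map) is an assumption, since the question's actual table key isn't shown.

```python
"""Toy model of pairing an entry kprobe with its return kprobe (NOT BCC code).

In a BPF program a hash map (e.g. BCC's BPF_HASH / BPF_TABLE("hash", ...))
plays the role of the `in_flight` dict below.
"""

in_flight = {}  # thread id -> (sock, start_ns) saved at function entry
records = []    # completed (thread id, sock, start_ns, end_ns) records


def on_entry(tid, sock, now_ns):
    # Stash the socket argument and start time for the matching return probe.
    in_flight[tid] = (sock, now_ns)


def on_return(tid, now_ns):
    # Fetch and remove the saved entry; ignore returns whose entry we never saw.
    saved = in_flight.pop(tid, None)
    if saved is None:
        return
    sock, start_ns = saved
    records.append((tid, sock, start_ns, now_ns))


if __name__ == "__main__":
    on_entry(1, "sockA", 100)
    on_entry(2, "sockB", 150)
    on_return(2, 400)  # calls on different threads may finish out of order
    on_return(1, 500)
    for tid, sock, start, end in records:
        print(tid, sock, "duration:", end - start)
```

Keying by thread id works here because one thread cannot be inside two `tcp_sendmsg` calls at the same time; anything the return side needs (address family, IPv4 or IPv6 addresses) must either be copied at entry or read from the saved socket pointer.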

I'm running this tool on Chromium OS, kernel V5.4.

tcptrans.c:

tcptrans.py: