/cc @mikeal @lidel

Open Gozala opened 4 years ago

/cc @mikeal @lidel

Love it 😁

Agreed. This is clearly a well thought out "upgrade" path for whatever this library becomes. I'm 100% on board, and in particular, am excited about the first class batching proposal and the freedom that this API rethink affords us!

In my conversation with @mikeal he stated additional constraints that API would have to meet to enable https://github.com/mikeal/dagdb/ efficient replication. I would like those constraints to be captured here, even if we ultimately choose to not address them in the first iteration. So @mikeal if you'll get a chance to do so I would highly encourage you to.

It’s probably best to look at one of the storage implementations to understand the requirements. https://github.com/mikeal/dagdb/blob/master/src/stores/kv.js

It’s pretty simple. A store needs to maintain certain information about what it’s storing so that it call be queried without parsing every block in a graph that is being replicated.

If a store is keeping track of this then it can present a graph() method that can respond with the missing blocks in that graph for a given depth. Once a query runs once any complete graphs can cache that they already have that full graph, making another walk of even the full index unnecessary.

For many use cases, like DagDB, you simply cannot send the relevant cache state over the protocol in a form of a query or a list of blocks. The potential for overlap is too high and the information about each graph that each peer has is, by definition, incomplete.

Graphsync works well for blockchains because it’s an append only log structure and it’s highly unlikely (sometimes impossible) that there will be large amount of duplicate data in the chain between an old HEAD and a new HEAD.

For structures with more de-duplication and a less straight forward append-only log structure that entire approach just wont’ work. The minimal indexing I mentioned above is the bare minimum a store needs to keep in order to be able to tell a peer “this is what i’m missing in that graph” and request each block it does not have in its store.

I should also mention that the above indexing can be leveraged for opportunistic garbage collection. Knowing the to/from links of a block means that when you mutate a graph you can walk the diff and find the orphaned blocks and remove their references in the storage engine and you know if there are any other outstanding links to each block.

This actually conflicts a little with requirements around storing by multihash, because it’s possible to have block data referenced by different codecs. There’s ways around it, but it would require a pretty specific indexing structure, and the layers don’t separate cleanly. The “block store” will need to think in terms of CID’s but store by multihash and index by [multihash + codec].

It’s pretty simple. A store needs to maintain certain information about what it’s storing so that it call be queried without parsing every block in a graph that is being replicated.

- The links from/to every block CID.

- Whether or not the entire graph for a given CID is already stored.

Would it be fare to say that this is (important) implementation detail ? In other words you could there be naive compatible implementation that walk and parse blocks per each query, even if the performance is impractical ?

The reason I am asking this is because if it is yes, then that does not necessarily affect an end user API.

If a store is keeping track of this then it can present a

graph()method that can respond with the missing blocks in that graph for a given depth.

graph() supposed to identify missing blocks outwards of the given CID or inwards of it ?

you simply cannot send the relevant cache state over the protocol in a form of a query or a list of blocks. The potential for overlap is too high and the information about each graph that each peer has is, by definition, incomplete

So is idea here is that local replica can query others to identify new blocks that link to the know graph ?

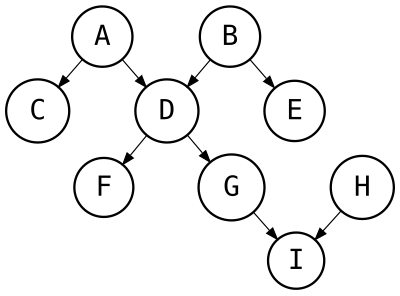

I'm not sure grasp how that would apply to polytrees like show below. E.g. When I query by B I may also be aware of A and H.

Which actor in the system is calling this method ?

Any actor, it’s actually best not to think about it that way.

Consider this. The store has a state, it has complete or incomplete graphs of various CID’s, and that state is being queried.

If I want to move data from one store to another I need to ask about this state. In a push situation that’s the client, but you could also structure replication as a pull from the remote end. Either way, the store receiving data needs to provide this for efficient replication and even the client store may want to use it for some local optimizations if it doesn’t have all the blocks already either.

Would it be fare to say that this is (important) implementation detail

The whole point of the graph() method is to enable efficient replication. If you don’t have an efficient way to provide the API it’s probably better not to have it at all and fall back to a replication strategy that doesn’t use it. Sure, it’s possible to implement it “the slow way” but any replication strategy using it is going to assume it’s fast and would prefer to not use it at all if it’s going to be slow.

If it's outwards I think I'm starting to understand the motivation.

outwards, the inbound links are primary there for GC and aren’t strictly necessary for this. it’s just that, if you’re doing the indexing you might as well do both.

I'm inclined to think that replication is a system layer API not the application layer.

It was actually tough for me to come around on this as well. Conceptually, you should be able to do replication at a low level without a lot of information because of the hash linked primitives we have. And to some extent you can, at least you can build a more efficient replication than if you didn’t have those, but to do something very fast you just need more information.

With a little more information about the state of the store we can get pretty fast, that’s what this is. This is most useful for cases like DagDB, where we expect a fair amount of de-duplication and the predictability of the paths effected by a mutation is minimal without just diffing the tree. Blockchains don’t have either of those problems which is why we didn’t need any of this for Graphsync.

Off topic: Not relevant to API discussion, but it is related to the discussion we're having so I'd want to bring it up as I suspect @mikeal may have considered this and I would love to learn conclusions.

Blockchains don’t have either of those problems which is why we didn’t need any of this for Graphsync.

The way I have being thinking about this graph replication with IPDF first and then with Textile Threads is: You could have arbitrary shaped graph along the side of git reflog style chain per replica. Assuming each replica maintains reflogs of every participant, syncing effectively becomes fairly straight forward - replicas just need to exchange added entries in their reflogs and then pack up blocks for each other they don't have. This is conceptually similar to how OP based CRDTs work.

Replicas will likely want to maintain indexes so "block packs" could be computed on queries, but those become internal optimization detail. I should also point out that even though there are multiple "reflogs" at play they could be projected as a single log with partial order.

If you do allow editing then you wind up with full blown CRDT.

Assuming each replica maintains reflogs of every participant, syncing effectively becomes fairly straight forward - replicas just need to exchange added entries in their reflogs and then pack up blocks for each other they don't have. This is conceptually similar to how OP based CRDTs work.

Not quite!

You’re forgetting about de-duplication. When data is inserted it updates the log, yes, but if it references other data from prior threads you can’t just say “give me the whole graph for the changes” because you may already have a lot of that graph in cache if it references threads you had already replicated.

That’s why this doesn’t end up looking like straight forward log replication. Once you have the list of logs you need to merge you still need to work your way down the graph only requesting new data, otherwise you could end up requesting very large amounts of data you already have in your local store.

You’re forgetting about de-duplication. When data is inserted it updates the log, yes, but if it references other data from prior threads you can’t just say “give me the whole graph for the changes” because you may already have a lot of that graph in cache if it references threads you had already replicated.

No I was not forgetting about it. What I was trying to say is that once two replicas exchange reflog updates, each has enough information to pack all the blocks other does not have because they have all the info locally. And yes to do that efficiently replicas would need to maintain an index, but point is that becomes implementation detail that doesn't need to have a public API.

As of indexing, each replica on local write can with very little overhead create a flat list of new blocks and have new head link to it . This way replica asked for updates just needs to walk from the head to a last know head and concat all new block lists together. In practice it's bit more complicated than that, because there >2 participants, but if you can project merged reflog (which is more or less what textile thread do) same idea would apply.

It needs to be a public API for the “push” case but sure, for “pull” it doesn’t need to be public.

It needs to be a public API for the “push” case but sure, for “pull” it doesn’t need to be public.

In fact in described model there is no distinction between push and pull, replicas wishing to sync exchange heads. From there each side can derive locally what it has that other doesn’t and pack it all up end send. Once both do it they’re in sync

As per https://github.com/ipfs/notes/issues/436 I would like to use ipfs-lite as a foundation for providing IPLD storage & replication system. At the same time I would like to use this as an opportunity to explore / design API fit best for that system instead of trying to provide backwards compatibility with

ipfs.dagandipfs.blockAPIs that were designed for different set of constraints and use cases. Here is a my short (constraint / wish) list:[ ] Interops with (new) IPLD stack instead of mimicing or wrapping it.

[ ] Interop layer should be extremely minimal to reduce coordination to a bare minimum.

Blockimplementations[ ] Designed for multi-threaded use.

[ ] First class batching