From what I can see, they also provide the extrinsics in the following format:

```
#timestamp [ns], position.x [m], position.y [m], position.z [m], quaternion.w [], quaternion.x [], quaternion.y [], quaternion.z []
0000000039991664640,0.0625990927,0.580219448,1.79874659,0.848812044,0.524193227,-0.0361736789,-0.0585750677
```

I used this row to apply the transformation, but it still did not work:

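```python
import numpy as np
import open3d as o3d

# Row from the trajectory file: timestamp, position (x, y, z), quaternion (w, x, y, z)
pose = np.array([39991664640, 0.0625990927, 0.580219448, 1.79874659,
                 0.848812044, 0.524193227, -0.0361736789, -0.0585750677])

# Open3D expects the quaternion in (w, x, y, z) order, matching the file's column order
R = o3d.geometry.get_rotation_matrix_from_quaternion(pose[4:])

# Assemble the 4x4 homogeneous transform [R | t; 0 0 0 1]
Rt = np.vstack((np.hstack((R, pose[1:4].reshape(3, -1))), [0, 0, 0, 1]))

# pcd is the point cloud created from the RGB-D pair
pcd.transform(Rt)
```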
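A minimal NumPy-only sketch for cross-checking the matrix above without Open3D. It assumes the trajectory file is comma-separated with the `#`-prefixed header shown earlier; all helper names (`quat_wxyz_to_rotation`, `pose_to_matrix`, `invert_rigid`, `load_poses`) are hypothetical. Looking poses up by timestamp makes it easy to confirm that the pose belongs to the RGB-D frame being back-projected. The header does not say whether the pose is camera-to-world or world-to-camera, so both directions are computed; that ambiguity is an assumption to test, not something the dataset states here.

```python
import io
import numpy as np

def quat_wxyz_to_rotation(q):
    """3x3 rotation matrix from a quaternion given as (w, x, y, z)."""
    w, x, y, z = np.asarray(q, dtype=float) / np.linalg.norm(q)
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])

def pose_to_matrix(translation, quat_wxyz):
    """4x4 homogeneous transform [R | t; 0 0 0 1]."""
    T = np.eye(4)
    T[:3, :3] = quat_wxyz_to_rotation(quat_wxyz)
    T[:3, 3] = translation
    return T

def invert_rigid(T):
    """Inverse of a rigid transform: [R^T | -R^T t; 0 0 0 1]."""
    R, t = T[:3, :3], T[:3, 3]
    T_inv = np.eye(4)
    T_inv[:3, :3] = R.T
    T_inv[:3, 3] = -R.T @ t
    return T_inv

def load_poses(source):
    """Map each integer timestamp [ns] in a '#'-commented CSV trajectory to a 4x4 pose."""
    data = np.loadtxt(source, delimiter=",", comments="#", ndmin=2)
    # Timestamps of this size are exact in float64 (well below 2**53).
    return {int(row[0]): pose_to_matrix(row[1:4], row[4:8]) for row in data}

# Stand-in for the real file; with the dataset you would pass open(<trajectory file>)
trajectory = io.StringIO(
    "#timestamp [ns], position.x [m], position.y [m], position.z [m], "
    "quaternion.w [], quaternion.x [], quaternion.y [], quaternion.z []\n"
    "0000000039991664640,0.0625990927,0.580219448,1.79874659,"
    "0.848812044,0.524193227,-0.0361736789,-0.0585750677\n"
)
poses = load_poses(trajectory)
T = poses[39991664640]   # try this if the pose is camera-to-world
T_inv = invert_rigid(T)  # try this if the pose is world-to-camera
```

With Open3D, `pcd.transform(T)` or `pcd.transform(T_inv)` applies whichever direction lines the frames up. If neither does, the dataset's camera axis convention may differ from the x-right, y-down, z-forward frame Open3D uses for back-projection, which would call for an extra fixed rotation; that is a hypothesis to check, not an established fact about InteriorNet.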

Hi, I am trying to create a point cloud from the InteriorNet RGB-D images, following the code snippet below:

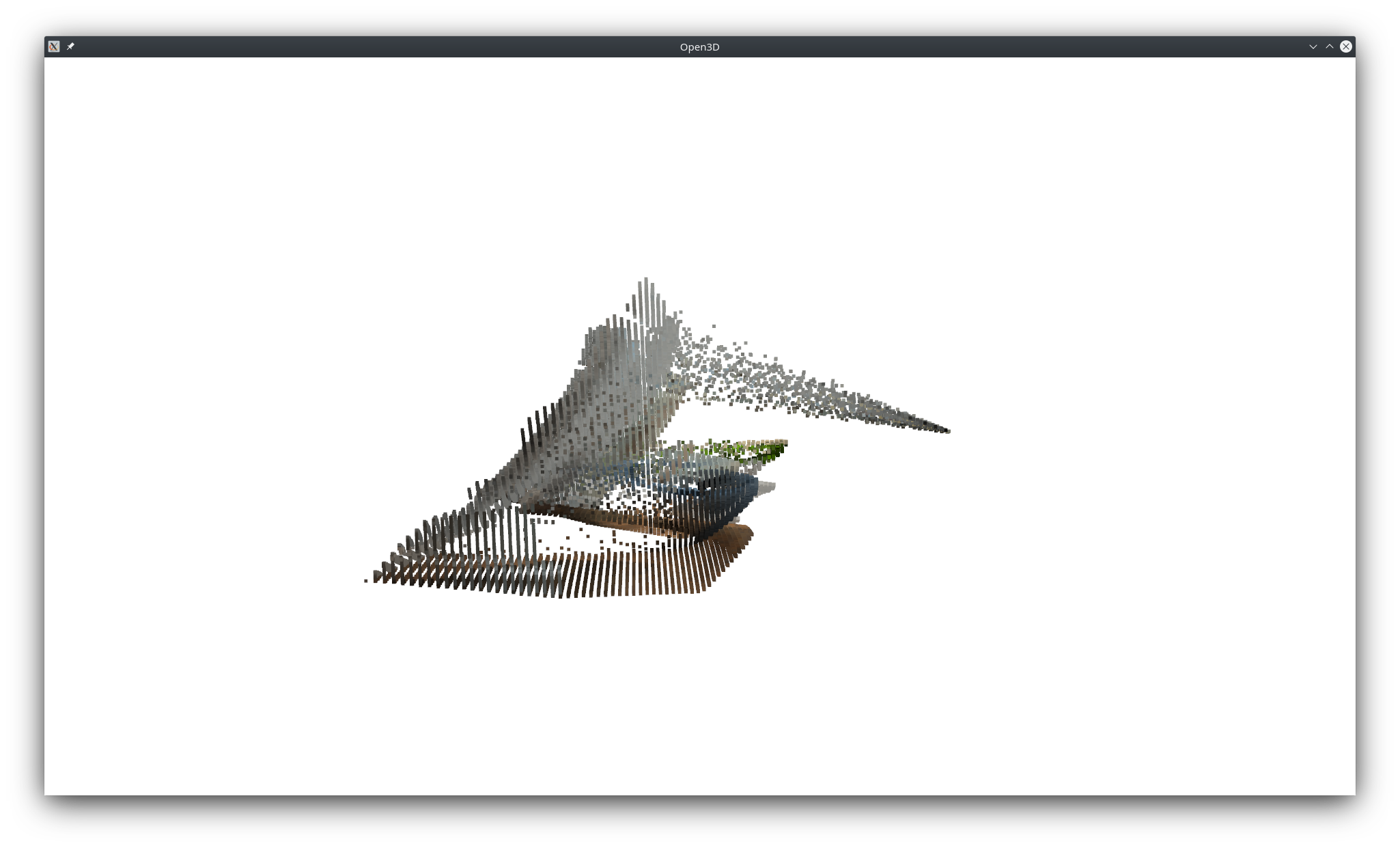

However, for some reason my point cloud is skewed:

Any idea what could be wrong?
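Since the snippet is only available as an image, here is a minimal NumPy sketch of pinhole back-projection that can be used to cross-check the Open3D result. It makes explicit two things that commonly distort a cloud: the intrinsics (`fx`, `fy`, `cx`, `cy`) must match the resolution of the depth image, and the depth must be z-depth along the optical axis. If the dataset instead stores the Euclidean distance along each pixel's ray (an assumption to verify against the InteriorNet documentation, not something stated in this thread), it has to be converted first; `ray_is_euclidean` is a hypothetical flag for that, and `depth_scale=1000.0` assumes millimetres.

```python
import numpy as np

def backproject(depth, fx, fy, cx, cy, depth_scale=1000.0, ray_is_euclidean=False):
    """Back-project an H x W depth image to an N x 3 array of camera-frame points.

    depth_scale converts the stored units to metres (1000.0 assumes millimetres).
    If ray_is_euclidean is True, depth is treated as the distance from the camera
    centre along each pixel's ray and converted to z-depth first.
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # u: column index, v: row index
    x_n = (u - cx) / fx  # normalised image coordinates
    y_n = (v - cy) / fy
    d = depth.astype(float) / depth_scale
    if ray_is_euclidean:
        # ray length r = z * sqrt(1 + x_n^2 + y_n^2), so z = r / sqrt(1 + x_n^2 + y_n^2)
        z = d / np.sqrt(1.0 + x_n ** 2 + y_n ** 2)
    else:
        z = d
    points = np.stack((x_n * z, y_n * z, z), axis=-1).reshape(-1, 3)
    return points[points[:, 2] > 0]  # drop pixels without valid depth
```

Wrapping the result with `o3d.geometry.PointCloud(o3d.utility.Vector3dVector(points))` lets you view it next to the cloud from the original snippet; if the two disagree, the intrinsics or depth interpretation are the first things to compare.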

Environment:

I am attaching a pair of RGB-D images and the intrinsics file: open3d_reconstruction_system.zip