pjit or jax.jit does exactly what you want :)

You write your program as if you were writing it for a single device, and the XLA GSPMD partitioner takes care of distributing it and inserting the necessary collectives for you! See https://jax.readthedocs.io/en/latest/notebooks/Distributed_arrays_and_automatic_parallelization.html
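Here is a minimal sketch of that workflow. It assumes a recent JAX release that ships the `jax.sharding` module. The function body is ordinary single-device code, and the only distribution-specific part is how the inputs are placed with `NamedSharding`. The mesh axis name `"data"` and the toy `forward` function are illustrative choices, not taken from the linked docs.

```python
import numpy as np
import jax
import jax.numpy as jnp
from jax.sharding import Mesh, NamedSharding, PartitionSpec as P

# Build a 1-D device mesh over all available devices (a single CPU device also works).
devices = np.array(jax.devices())
mesh = Mesh(devices, axis_names=("data",))
n = len(devices)

# Shard the leading (batch) dimension of x across the "data" mesh axis.
x = jnp.arange(8 * n * 4, dtype=jnp.float32).reshape(8 * n, 4)
x = jax.device_put(x, NamedSharding(mesh, P("data", None)))

# Replicate the weights on every device (empty PartitionSpec = fully replicated).
w = jax.device_put(jnp.ones((4, 2), dtype=jnp.float32), NamedSharding(mesh, P()))

@jax.jit
def forward(x, w):
    # Plain single-device code: no explicit communication is written here.
    # GSPMD propagates the input shardings and adds any needed collectives,
    # e.g. a cross-device reduction for the final .sum().
    return jnp.tanh(x @ w).sum()

loss = forward(x, w)
print(loss)
```

With one device the mesh has a single shard and nothing is communicated. With several devices, the `.sum()` over the sharded batch dimension is where the partitioner has to insert a cross-device reduction, even though the code never mentions it.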

JAX has also been integrated with the Alpa compiler: you can pass pjit.AUTO as in_shardings and out_shardings, which invokes the auto-SPMD (Alpa) compiler pass. See these tests for how to use it: https://github.com/google/jax/blob/782d90dc8501e7148e1fd1dbd4757b4ca0b3ca4d/tests/pjit_test.py#L1261

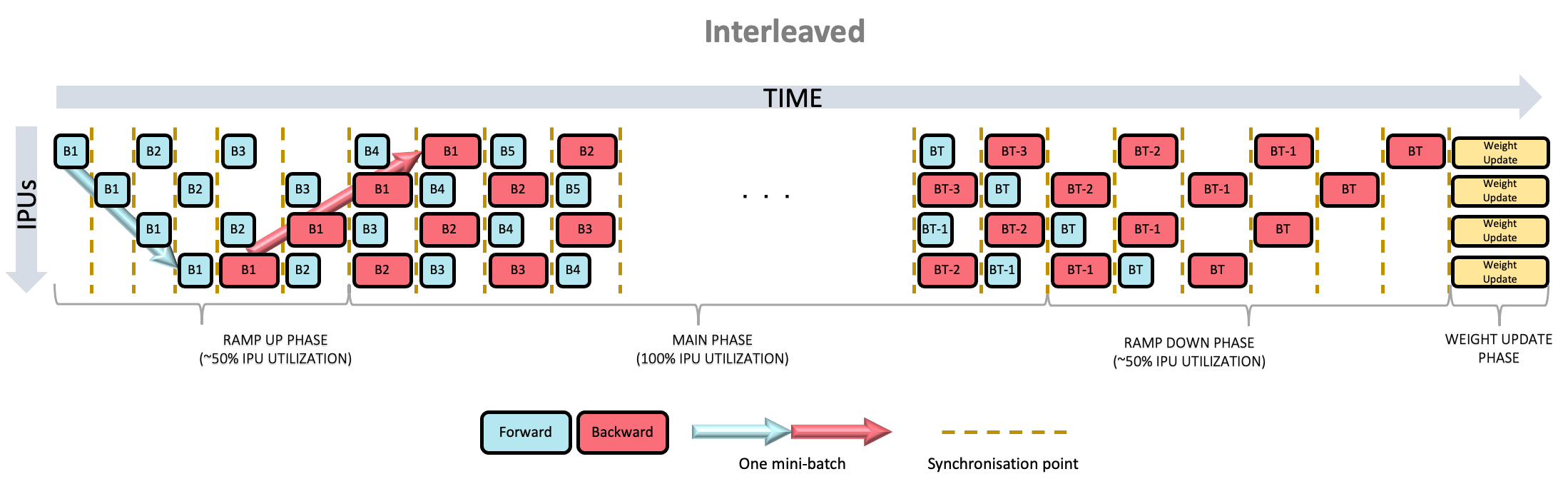

Or interleaved pipeline?

In another issue, I wrote the following:

Later, I came across a project that seeks to do just that:

Alpa: Automating Inter- and Intra-Operator Parallelism for Distributed Deep Learning (repo):

I was wondering if there's any interest in adopting/integrating a similar system into JAX itself. This could free the vast majority of JAX users from having to manually tune and restructure their programs to parallelize them efficiently for a particular situation and set of computational resources. It could greatly increase the ease and user-friendliness of distributed computing with JAX, resulting in more widespread adoption and popularity.

I'd love to hear your thoughts and comments.