Found this implementation with a permissive license (uses C and Python): https://github.com/spacetelescope/drizzle

Closed: noushdr closed this issue 5 years ago


It seems they're describing Pixel Shift, which requires that four images are taken using identical settings, with the sensor shifted one sensel at a time in an (anti-)clockwise direction. RawTherapee already supports Pixel Shift demosaicing. How would this work with the bracketed raw images used in HDRMerge, taken on a tripod (no shift) or hand-held (a massive shift, not a pixel shift)?
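To make the Pixel Shift geometry concrete, here is a minimal sketch of why four one-sensel shifts give every scene position a red, a blue and two green samples. The RGGB layout and the order of the four offsets are assumptions for illustration; actual cameras may use a different arrangement or sequence.

```python
# Assumption: an RGGB Bayer layout; real sensors may use other arrangements.
BAYER = (("R", "G"), ("G", "B"))

# Assumption: four one-sensel sensor offsets (dy, dx) forming a closed loop.
SHIFTS = [(0, 0), (0, 1), (1, 1), (1, 0)]


def cfa_color(row, col):
    """Color of the filter over sensel (row, col) in the assumed layout."""
    return BAYER[row % 2][col % 2]


def colors_seen(row, col, shifts=SHIFTS):
    """Colors sampled at scene position (row, col) across all shifted frames."""
    return sorted(cfa_color(row + dy, col + dx) for dy, dx in shifts)


# Every scene position ends up with one R, one B and two G samples.
for r in range(2):
    for c in range(2):
        print((r, c), colors_seen(r, c))
```

With a tripod and no sensor shift, every frame would report the same single color per position, which is the gap the question above points at.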

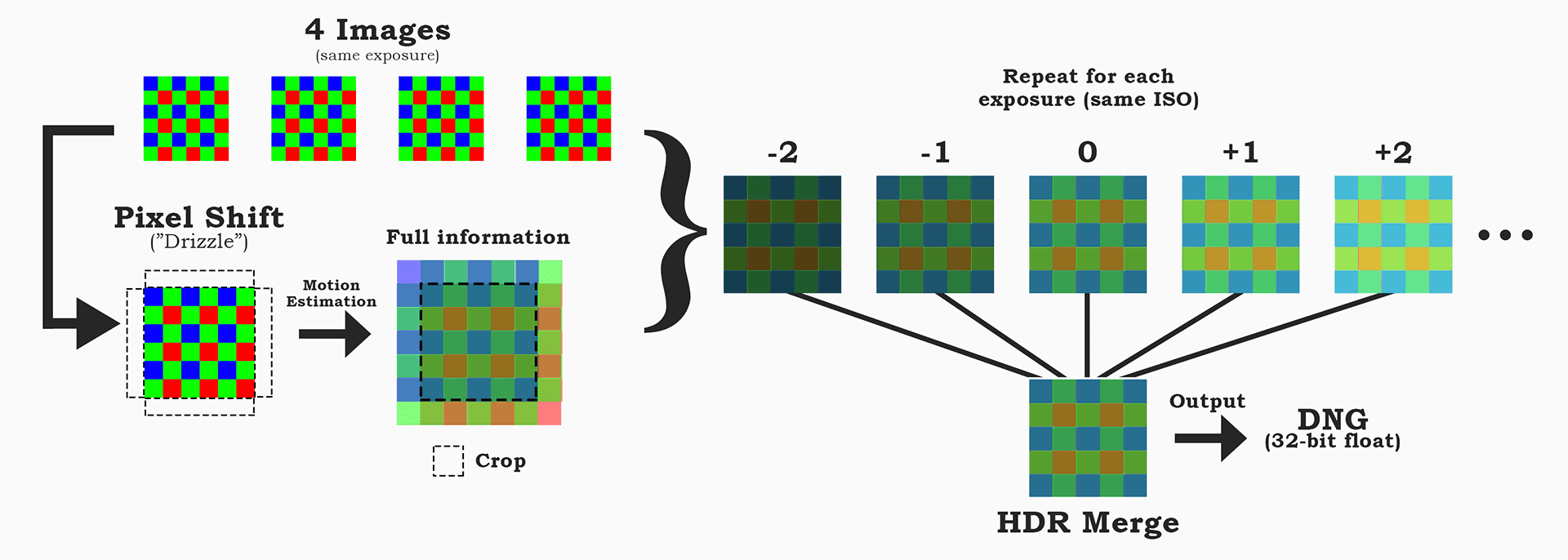

Well, the idea was: HDRMerge asks for 4 images of each exposure (I usually take 4 exposures for landscapes, so 16 images), first does the Pixel Shift on each set of 4, and then does the HDR/ZeroNoise merge. Hand-held would indeed not be possible, but on a tripod you could set a 2 s delay between shots, so you can change the position slightly between them.

> RawTherapee already supports Pixel Shift demosaicing.

It does? I never heard of this feature in RT... It only works for Sony and Pentax, right? The idea was to have the benefits of Drizzle, together with HDR/ZeroNoise, on any camera that has raw output with a normal Bayer filter (I don't know how X-Trans would behave).

@Beep6581 Do you know if this is even possible to implement? If it is, where would one start? I don't have good coding skills, but there are probably other ways I could help (research, debugging, etc.).

HDRMerge is raw in, raw out. I ask again, how would this work in HDRMerge, and what would the massive amount of work required to implement this achieve?


Well, I would use HDRMerge first to get HDR raws of each shifted image, then use RawTherapee for demosaicing. No further coding needed.

Anyway, how do you get the shifted shots in the first place? Do you have special equipment for that?

Javi

On Wed, 25 Jul 2018 at 23:55, noushdr (notifications@github.com) wrote:

Sorry if I wasn't clear. Here's the idea: https://pictshare.net/944n60m72u.png

I do understand this will require a massive amount of work, and I don't know if it's possible to write this kind of data to DNG files, but there are many advantages (assuming the motion estimation is accurate):

- No interpolation artifacts. Less moiré and aliasing. Many kinds of photography require this, such as astrophotography, archival photography (e.g. zoological specimens), etc.

- Real color data. This is necessary for architecture photographers who need to match exactly what the interior designer did in the room, for example, or for anything that must have exact colors for some reason.

- Finer details. Some scientific images need to have accurate results. One use case is deconvolution in microscopy. It seems luminance regularization (https://pdfs.semanticscholar.org/a746/571076e7dbd73c34816374e8c449bcd9c113.pdf) can only do so much, so this can result in errors when using deconvolution. This is no big deal for normal photography, but scientific images need to be precise.

I know you have experience with image processing; that's why I'm asking you if this is even possible, or if you think there's some fault in my reasoning.


> Well, I would use HDRMerge first to get HDR raws of each shifted image, then use RawTherapee for demosaicing. No further coding needed.

Does RT already support merging different images using drizzle? Also, even if it does, I suppose the tone mapping will not be as accurate as if it were using full chroma information (?). I'm not against this idea, though.

> Anyway, how do you get the shifted shots in the first place? Do you have special equipment for that?

Only a tripod, with slight movements between the shots. Even the movement of the shutter and mirror would shift the position a little. After that, "sub-pixel motion estimation" could get the photos aligned as in the diagram above. This motion estimation is the difficult part. This publication has a method, but I don't know if it would work without precise movements. Video codecs seem to have advanced implementations of motion estimation, such as this and this.
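As a rough illustration of what "sub-pixel motion estimation" could look like in its simplest form, here is a sketch that searches a small window of whole-pixel shifts by squared difference and then refines the best one with a parabola fit. This is a generic textbook approach, not the method from the publication or from any video codec, and the function names are made up for this example. It only handles pure translation on single-channel images stored as lists of rows.

```python
def ssd(ref, img, dy, dx):
    """Mean squared difference between ref[y][x] and img[y+dy][x+dx] on the overlap."""
    h, w = len(ref), len(ref[0])
    total, n = 0.0, 0
    for y in range(max(0, -dy), min(h, h - dy)):
        for x in range(max(0, -dx), min(w, w - dx)):
            d = ref[y][x] - img[y + dy][x + dx]
            total += d * d
            n += 1
    return total / n


def parabola_offset(left, centre, right):
    """Position of the minimum of a parabola through samples at -1, 0 and +1."""
    denom = left - 2.0 * centre + right
    if denom <= 0:  # not a proper minimum, so skip the refinement
        return 0.0
    return 0.5 * (left - right) / denom


def estimate_shift(ref, img, radius=2):
    """Estimate (dy, dx) such that ref[y][x] matches img[y+dy][x+dx]."""
    candidates = [(dy, dx)
                  for dy in range(-radius, radius + 1)
                  for dx in range(-radius, radius + 1)]
    best_dy, best_dx = min(candidates, key=lambda s: ssd(ref, img, s[0], s[1]))
    centre = ssd(ref, img, best_dy, best_dx)
    sub_dy = sub_dx = 0.0
    # Refine each axis only when both neighbours lie inside the search window.
    if abs(best_dy) < radius:
        sub_dy = parabola_offset(ssd(ref, img, best_dy - 1, best_dx), centre,
                                 ssd(ref, img, best_dy + 1, best_dx))
    if abs(best_dx) < radius:
        sub_dx = parabola_offset(ssd(ref, img, best_dy, best_dx - 1), centre,
                                 ssd(ref, img, best_dy, best_dx + 1))
    return best_dy + sub_dy, best_dx + sub_dx
```

On real raw data the comparison would have to be done per color plane or on a luminance proxy, and rotation or perspective changes from nudging the tripod are not modelled here at all.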

I'm not asking/expecting you guys to simply implement this. This is a conversation and I'm curious if this could some day be implemented as the advantages are very clear.

https://spacetelescope.github.io/drizzle/drizzle/user.html http://www.stsci.edu/~fruchter/dither/drizzle.html

Ok, I get it. "Bayer matrix in - Bayer matrix out". It's not that it can't be done, the idea is just not right for this project.

Thanks for all the replies.

Hi,

I thought this idea would be of interest to the HDRMerge community. It seems to be called "Bayer drizzle", and was implemented by Dave Coffin, Andrew Fruchter and Richard Hook (it seems to be working in DeepSkyStacker). The idea is that, since you have different images of the same scene and they probably have some motion between them, you can (theoretically) get full color information without interpolation, just using motion estimation.

Note that this is not the same as "Variable-Pixel Linear Combination". This is not meant to produce higher-resolution images, but to get full color information in the image. (Correction: it is, sorry.)

I had some issues debayering some, but not all, HDRMerge images with RawTherapee. AMaZE generates artifacts, as do IGV and others. So I thought this idea would be really nice for this project.
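A minimal sketch of the accumulation step behind this idea, under strong simplifying assumptions: an RGGB layout, offsets already known and rounded to whole sensels, and no weighting kernel. It is not the algorithm used by the drizzle library or by DeepSkyStacker, and `bayer_accumulate` is a made-up name. Each raw sample is dropped into the color plane it actually measured, and positions that never received a given color stay empty instead of being interpolated.

```python
# Assumption: RGGB layout, fixed to the sensor (not to the scene).
BAYER = (("R", "G"), ("G", "B"))
CHANNEL = {"R": 0, "G": 1, "B": 2}


def bayer_accumulate(frames, offsets, height, width):
    """Average raw samples per color at each scene position.

    frames  : list of mosaics, each a list of rows of raw values
    offsets : one (dy, dx) per frame; sensel (y, x) saw scene point (y+dy, x+dx)
    Returns a height x width grid of [R, G, B]; None marks a missing color.
    """
    sums = [[[0.0, 0.0, 0.0] for _ in range(width)] for _ in range(height)]
    counts = [[[0, 0, 0] for _ in range(width)] for _ in range(height)]
    for mosaic, (dy, dx) in zip(frames, offsets):
        for y, row in enumerate(mosaic):
            for x, value in enumerate(row):
                sy, sx = y + dy, x + dx
                if 0 <= sy < height and 0 <= sx < width:
                    ch = CHANNEL[BAYER[y % 2][x % 2]]
                    sums[sy][sx][ch] += value
                    counts[sy][sx][ch] += 1
    return [[[s / n if n else None
              for s, n in zip(sums[y][x], counts[y][x])]
             for x in range(width)]
            for y in range(height)]
```

With random tripod nudges there is no guarantee that every position receives all three colors, so a real implementation would still need a fallback for the `None` entries, and the output would be full RGB per pixel rather than a Bayer mosaic.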