Hi @Sloeschcke, I suspect the issue is just that when you go between the TN representation and dense form, you need to explicitly give the ordering of the indices. Once something is a tensor network, there is no definitive ordering of the indices or tensors - you need to specify it.

By default, tn.contract() orders the output indices by the order in which they appear on the tensors (which apparently is different for tn and mps). This doesn't matter so much if you keep the result as a tensor/network, since everything is labelled. But when you call .data you are forgetting all the labels. The answer is hopefully to either:

- specify contract(output_inds=[...]) to ensure a particular ordering of the indices.
- use to_dense(['k0', 'k1', 'k2', 'k3'], ['k4', 'k5', 'k6', 'k7']) (if that is your particular encoding), which handles both the contraction and reshaping, and is for exactly this kind of purpose.
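To illustrate why the ordering matters once the labels are dropped, here is a minimal sketch in plain NumPy rather than quimb. The einsum letters a, b, c are hypothetical stand-ins for k0, a bond index and k1; choosing the letters after `->` plays roughly the role that output_inds plays in contract().

```python
import numpy as np

rng = np.random.default_rng(0)

# Two small "cores" sharing a bond index b: A[a, b] and B[b, c].
# (a, c) stand in for the physical indices k0, k1; all names are illustrative.
A = rng.normal(size=(2, 3))
B = rng.normal(size=(3, 4))

# The same contraction, written with two different output index orders.
out_ac = np.einsum("ab,bc->ac", A, B)  # output ordered (a, c)
out_ca = np.einsum("ab,bc->ca", A, B)  # output ordered (c, a)

# As labelled tensors these are the same object: they differ only by a transpose.
same_after_transpose = np.allclose(out_ac, out_ca.T)

# As raw data the label information is gone, so flattening gives
# differently ordered entries, i.e. a "permuted" looking result.
flat_match = np.array_equal(out_ac.reshape(-1), out_ca.reshape(-1))

print(same_after_transpose, flat_match)
```

Fixing the output order explicitly before reshaping removes the ambiguity, which is what both suggestions above are meant to do.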

Let me know if either of those works for you.

[As a side-note, the ordering of the indices and tensors is still deterministic (will be the same each run), to allow things like compiling computational graphs, but is not guaranteed from version to version.]

What is your issue?

Hi, I am experiencing that the Matrix Product State (MPS) method from_TN() changes the underlying structure of the Tensor Network (TN), and I cannot seem to find out how the structure is changed. Here is an example of my problem:

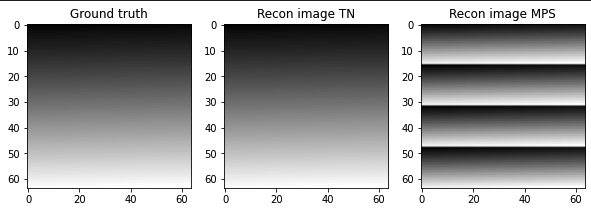

If I now contract both the TN and MPS and reshape them to the original image shape, I get two different results. The TN reconstructs the image correctly, while the MPS gives a result that looks like a permuted version of the original. However, the cores of the TN and the MPS are identical. I tried looking at get_equation() to see if the contraction schemes differ, but this is not the case.

Can anyone help me explain what is being changed when calling from_TN()?

I have the code to reproduce the example I just explained in a jupyter notebook.

Output:

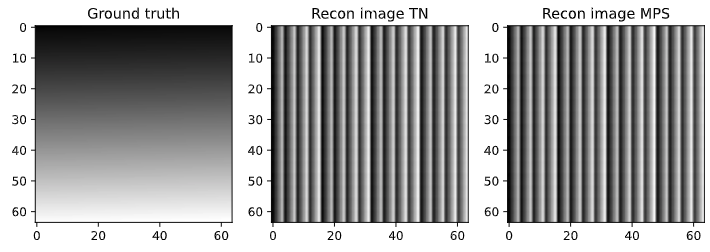

Upon further inspection, if I change the installation of quimb from the developer version to a stable version with "pip install quimb", I get the following result instead:

If I use get_equation() on the MPS I can see that the developer version gives the following result:

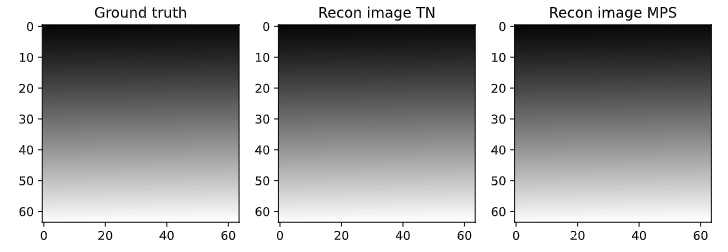

If I reverse permute the output after contracting:

Then I get this

Can anyone help me explain what the difference is between the developer and the stable version of quimb?