Hi, thanks for trying out vuda.

Unfortunately, I don't have a working macOS environment at the moment, so I can't reproduce this myself right now. Would you mind trying a couple of things for me?

- Run `vulkaninfoSDK.exe` and post the output here.

- Try running the same code as above, but replace the function `logical_device::malloc(void** devPtr, size_t size)` with `inline void logical_device::malloc(void** devPtr, size_t size) { device_buffer_node* node = new device_buffer_node(size, m_allocator); (*devPtr) = node->key(); push_mem_node(node); }`
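To make the suggested replacement body easier to reason about, here is a minimal self-contained sketch of the same pattern: allocate a node, hand its key back to the caller through `*devPtr`, then register the node so it can be found again later. The `device_buffer_node`, `logical_device` and `push_mem_node` below are hypothetical stand-ins, not vuda's real classes: they back each allocation with plain host memory instead of a Vulkan buffer and drop the `m_allocator` argument.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <memory>

// Hypothetical stand-in for vuda's buffer node. Here it owns plain host
// memory; the real node would own a Vulkan buffer and its memory.
struct device_buffer_node {
    explicit device_buffer_node(std::size_t size)
        : m_size(size), m_storage(new unsigned char[size]) {}
    // The key is the address returned to the caller as the "device pointer".
    void* key() const { return m_storage.get(); }
    std::size_t size() const { return m_size; }
private:
    std::size_t m_size;
    std::unique_ptr<unsigned char[]> m_storage;
};

// Hypothetical stand-in for vuda's logical_device: tracks live allocations by key.
class logical_device {
public:
    void malloc(void** devPtr, std::size_t size) {
        assert(devPtr != nullptr && "out-parameter must point somewhere");
        auto* node = new device_buffer_node(size);
        *devPtr = node->key();  // write the key through the out-parameter
        push_mem_node(node);    // take ownership so free() can find it later
    }
    void free(void* devPtr) {
        auto it = m_nodes.find(devPtr);
        if (it != m_nodes.end()) m_nodes.erase(it);  // unique_ptr releases the node
    }
    std::size_t live_allocations() const { return m_nodes.size(); }
private:
    void push_mem_node(device_buffer_node* node) {
        m_nodes.emplace(node->key(), std::unique_ptr<device_buffer_node>(node));
    }
    std::map<void*, std::unique_ptr<device_buffer_node>> m_nodes;
};
```

Note that the out-parameter has to be `void**` and dereferenced when written; assigning to a by-value `void*` parameter would leave the caller's pointer untouched.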

I currently suspect that the Intel driver does not satisfy the Vulkan spec with respect to memory property flags.
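One way to check this against the `vulkaninfo` output is to run the usual memory-type search by hand: walk the memory types, skip the ones not allowed by the buffer's `memoryTypeBits`, and pick the first whose `propertyFlags` contain every required bit. The sketch below is a self-contained version of that loop; it uses a simplified `memory_type` struct and constants whose values mirror Vulkan's `VkMemoryPropertyFlagBits`, so it compiles without the SDK. Which flag combination vuda actually requests is an assumption not confirmed in this thread; the point is that if no type matches, the search returns `-1`, and code that does not handle that case could end up using an invalid index.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Bit values mirror Vulkan's VkMemoryPropertyFlagBits.
enum : std::uint32_t {
    MEMORY_PROPERTY_DEVICE_LOCAL  = 0x1,
    MEMORY_PROPERTY_HOST_VISIBLE  = 0x2,
    MEMORY_PROPERTY_HOST_COHERENT = 0x4,
    MEMORY_PROPERTY_HOST_CACHED   = 0x8,
};

// Simplified view of VkPhysicalDeviceMemoryProperties::memoryTypes[i].
struct memory_type { std::uint32_t propertyFlags; };

// Returns the first memory type index permitted by typeBits (as reported in
// VkMemoryRequirements::memoryTypeBits) whose flags include all required bits,
// or -1 if the driver advertises no such type.
int find_memory_type(const std::vector<memory_type>& types,
                     std::uint32_t typeBits, std::uint32_t required) {
    // Vulkan exposes at most VK_MAX_MEMORY_TYPES (32) memory types.
    assert(types.size() <= 32);
    for (std::uint32_t i = 0; i < types.size() && i < 32; ++i) {
        bool allowed = ((typeBits >> i) & 1u) != 0;
        bool matches = (types[i].propertyFlags & required) == required;
        if (allowed && matches) return static_cast<int>(i);
    }
    return -1;
}
```

Feeding it the memory types and flags from the `vulkaninfo` report would show whether the requested combination is available on this GPU.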

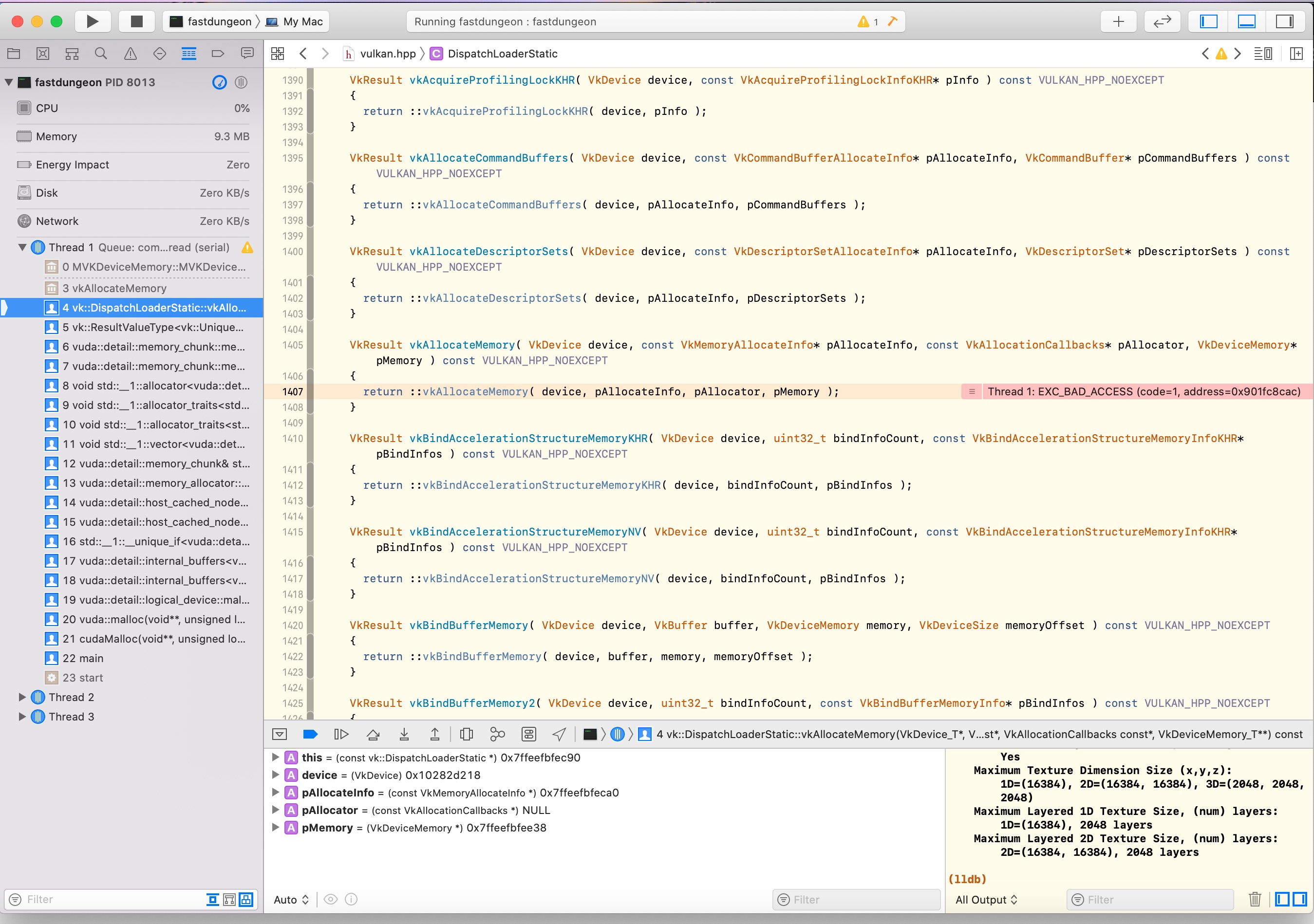

Hi, I'm using vuda on a MacBook Air, but when I call `cudaMalloc()` I get an `EXC_BAD_ACCESS` error. See the image of the error below.

Code:

Error image:

The `query_device` function from the examples prints the following to the console: