Hello @Murugurugan,

Thanks for reporting the issue. Could you clarify what is working and what is not, with clear steps to reproduce both cases?

It seems that you are starting a Flow locally and trying to access it, but also trying to deploy a Flow in Kubernetes, and it is not clear which error or behavior corresponds to which setup.

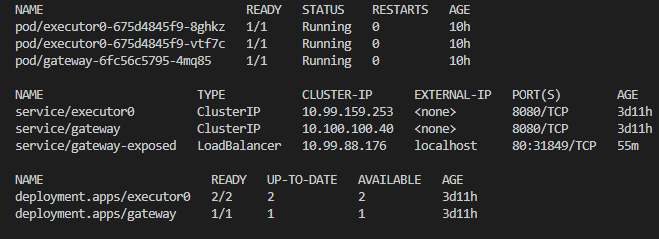

Describe the bug

My Kubernetes cluster basically ignores replicas. When I send 2 requests concurrently (via async, or from 2 terminals), the second request is always queued and the requests are processed one at a time, even though the executor has 2 or more replicas. The same happens with 2 gateway replicas.

I also have problems sending HTTP POST requests with the Python httpx module, while the Jina client works fine, which is weird (`/post` seems to work, but `/test` does not with httpx). I am testing everything locally with Docker's Kubernetes on Windows (WSL2).

I followed Jina's docs as closely as I could and created an executor with `__init__.py`, `Dockerfile` and `config.yml`, then built a container image from it. I added a simple `time.sleep(10)` for testing. I experimented with `async def`, asyncio, and the prefetch settings in the Flow and the Client; none of that changed the behavior. The Kubernetes pods don't log any errors. I also tried exposing the service so I don't have to use port forwarding, which created a LoadBalancer, but nothing changed. I tried both gRPC and HTTP; neither works. I don't know what to do, please help.
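To make "processed one by one" measurable, one option is to time two requests fired at once: with a 10 s sleep per request, roughly 10 s total suggests parallel handling and roughly 20 s suggests serialization. The sketch below assumes a hypothetical `send_request` coroutine that wraps whatever client is used (Jina Client, httpx, ...); it is not part of any library.

```python
import asyncio
import time
from typing import Awaitable, Callable


async def time_concurrent_requests(
    send_request: Callable[[int], Awaitable[object]], n: int = 2
) -> float:
    """Fire n requests at once and return the wall-clock time for all of them.

    `send_request` is a hypothetical coroutine sending one request; plug in
    your own client call here.
    """
    start = time.perf_counter()
    # gather() schedules all n coroutines concurrently on the event loop.
    await asyncio.gather(*(send_request(i) for i in range(n)))
    return time.perf_counter() - start


def classify(elapsed: float, per_request: float, n: int = 2) -> str:
    """Label the run by comparing it with the parallel and serial expectations."""
    serial = per_request * n
    # Midpoint between fully parallel (~per_request) and fully serial (~n * per_request).
    threshold = (per_request + serial) / 2
    return "parallel" if elapsed < threshold else "serialized"


if __name__ == "__main__":
    async def fake_request(i: int) -> int:
        # Stand-in for a request whose handler sleeps; shortened to 0.2 s.
        await asyncio.sleep(0.2)
        return i

    elapsed = asyncio.run(time_concurrent_requests(fake_request))
    print(f"{elapsed:.2f}s -> {classify(elapsed, per_request=0.2)}")
```

One general asyncio caveat worth checking: a blocking `time.sleep` inside an `async def` blocks the event loop, so concurrent calls handled by that same loop run one after another, while `await asyncio.sleep(...)` does not. Whether this applies inside the executor depends on how Jina schedules it.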

Executor:

Flow:

Client:

This simple HTTP POST also doesn't work; it returns `{'detail': 'Not Found'}`:
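For comparison, a minimal request against the gateway's generic `/post` route might look like the sketch below. It assumes the executor endpoint (`/test`) is passed in the JSON body as `execEndpoint` rather than used as the URL path, following the curl examples in the Jina HTTP docs; the field name and document format should be checked against the installed Jina version. The helper names are hypothetical, and the standard library is used so the sketch has no dependencies.

```python
import json
import urllib.request


def build_post_payload(texts, exec_endpoint="/test"):
    """Build a JSON body for the generic /post route.

    Assumption: the target executor endpoint goes in the body as
    "execEndpoint", and each document is a dict with a "text" field.
    """
    return {"data": [{"text": t} for t in texts], "execEndpoint": exec_endpoint}


def post_to_gateway(base_url, payload, timeout=30.0):
    """POST the payload to <base_url>/post and return the decoded JSON reply."""
    req = urllib.request.Request(
        base_url.rstrip("/") + "/post",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # The timeout is kept above the executor's 10 s sleep.
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Usage would be something like `post_to_gateway("http://localhost:8080", build_post_payload(["hello"]))`, where the port is a placeholder. With httpx the equivalent is `httpx.post(url, json=payload, timeout=30.0)`; note that httpx's default timeout is 5 seconds, which is shorter than the 10 s sleep in the executor.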

Environment

Screenshots