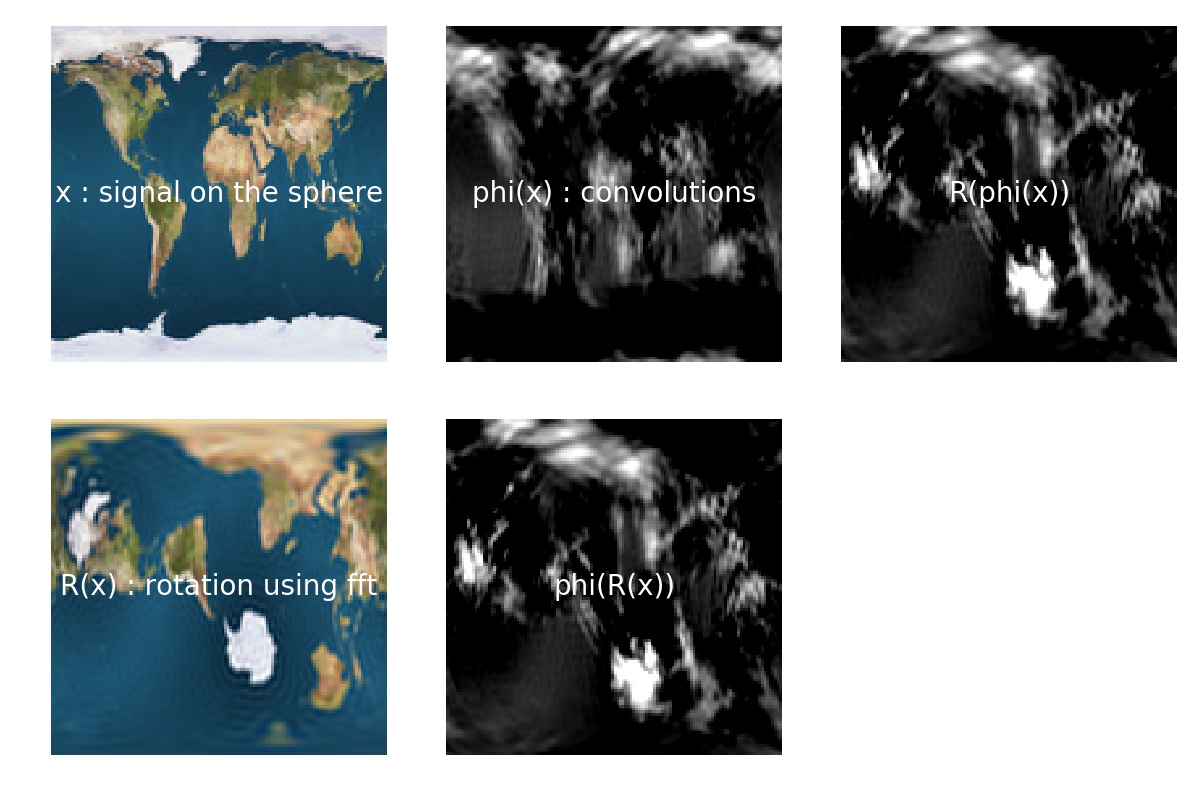

I's due to the large size of our choice of representation, signals on SO(3) : (2b)^3 You could use a smaller representation like signals on S2, of size (2b)^2 It's what they did in https://arxiv.org/abs/1711.06721

You could even use both: S2 -> S2 -> S2 -> SO3 -> SO3 -> ...

The computational cost of spherical convolution is too great. Is there any measure that makes spherical convolution can handle large images?