I modified the two TensorFlow MNIST sample networks to train them with the 35k pics and test them with the TFWM set.

Open joyhuang9473 opened 8 years ago


Psychologist Paul Ekman: https://en.wikipedia.org/wiki/Paul_Ekman => most emotion-detection systems are based, in some form, on his six proposed basic emotions.

Facial Action Coding System: https://en.wikipedia.org/wiki/Facial_Action_Coding_System

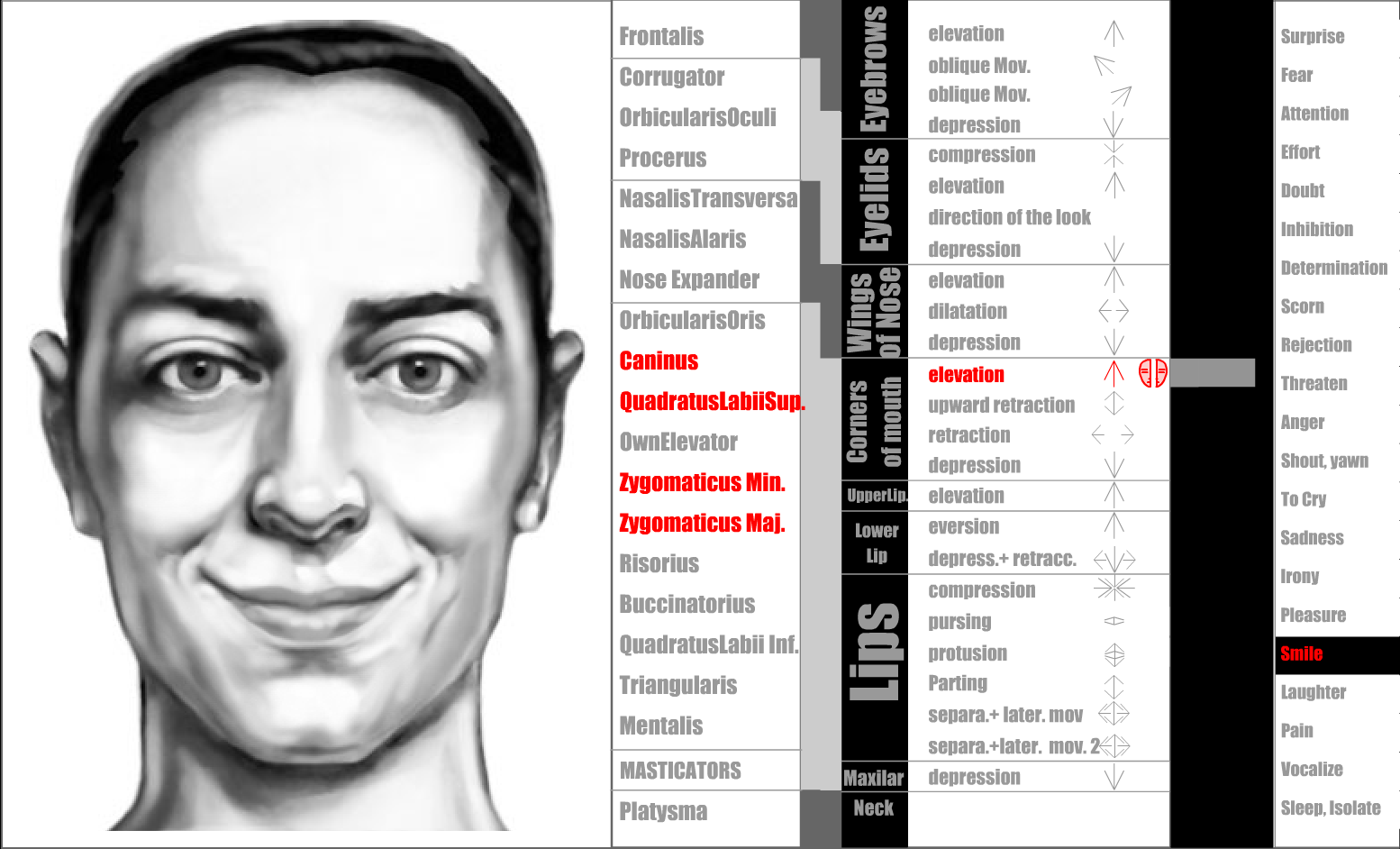

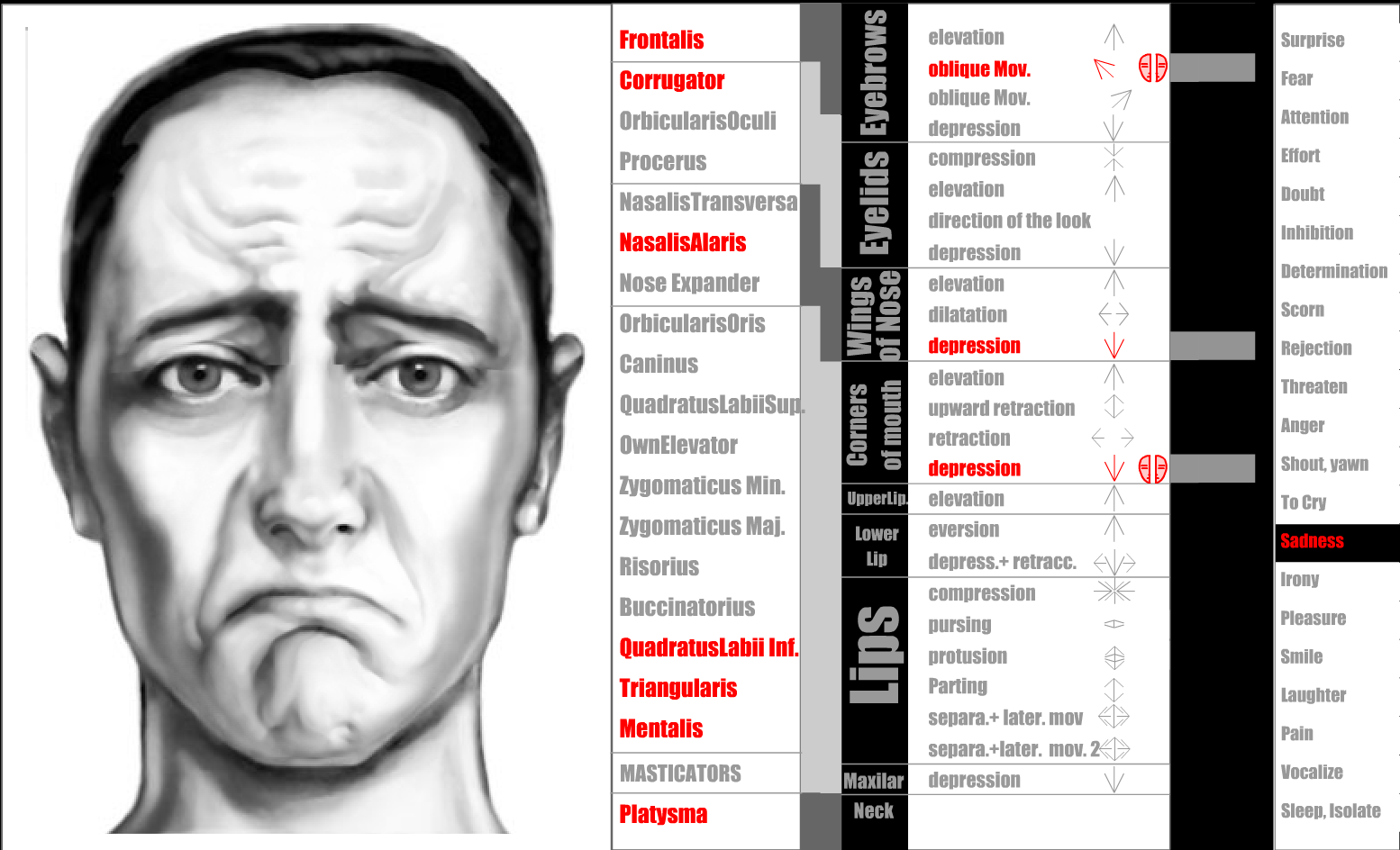

FACS can describe any emotional expression by deconstructing it into the specific Action Units (the fundamental actions of individual muscles or groups of muscles) that produced the expression.

In my simple experiment, I identified two Action Units that are relatively easy to detect in still images:

- Lip Corner Puller: which draws the angle of the mouth superiorly and posteriorly (a smile)

- Lip Corner Depressor: which is associated with frowning (and a sad face).

Fig. 1: A smile or joy, represented by the elevation of the corners of the mouth.

Fig. 2: Sadness, represented by a depression of the corners of the mouth.

dlib: Dlib is a modern C++ toolkit containing machine learning algorithms and tools

Using dlib, a powerful toolkit containing machine learning algorithms, I detected the faces in each image with the included face detector.

facial landmark

For any detected face, I used the included shape detector to identify 68 facial landmarks. From all 68 landmarks, I identified 12 corresponding to the outer lips.
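The detection and landmark steps above might look roughly like this in Python. This is a minimal sketch, not the original author's code. It assumes dlib's Python bindings, a pretrained 68-point model file (commonly distributed as `shape_predictor_68_face_landmarks.dat`), and that model's usual 0-based numbering, in which points 48 to 59 trace the outer lips. The function names `detect_landmarks` and `outer_lip_points` are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[int, int]

# In the common 68-point annotation scheme (0-based), indices 48..59
# trace the outer lip contour; 48 and 54 are the two mouth corners.
OUTER_LIP = range(48, 60)


def detect_landmarks(image_path: str, predictor_path: str) -> List[List[Point]]:
    """Return one list of 68 (x, y) landmarks per detected face."""
    import dlib  # imported lazily so the helper below works without dlib

    detector = dlib.get_frontal_face_detector()
    predictor = dlib.shape_predictor(predictor_path)
    image = dlib.load_rgb_image(image_path)

    faces = []
    for rect in detector(image, 1):  # 1 = upsample the image once
        shape = predictor(image, rect)
        faces.append([(shape.part(i).x, shape.part(i).y)
                      for i in range(shape.num_parts)])
    return faces


def outer_lip_points(landmarks: List[Point]) -> List[Point]:
    """Pick the 12 outer-lip landmarks out of the full set of 68."""
    if len(landmarks) != 68:
        raise ValueError("expected 68 landmarks")
    return [landmarks[i] for i in OUTER_LIP]
```

With this ordering, `outer_lip_points(face)[0]` and `outer_lip_points(face)[6]` would be the left and right mouth corners.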

threshold

difference between the y coordinates of the topmost landmark and both mouth corner landmarks

I then computed the two “lip corner heights” as the difference between the y coordinates of the topmost landmark and each of the two mouth corner landmarks. I took the maximum (max) of the “lip corner heights” and compared it to a threshold (th). If max is smaller than the threshold, the corners of the lips are in the top region of the bounding box, which represents a smile (by the Lip Corner Puller). If not, we are in the presence of a Lip Corner Depressor action, which represents a sad face.
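The rule described above can be sketched in a few lines of Python. The notes do not give the threshold value or say how it is scaled, so this sketch makes two assumptions: the threshold is expressed as a fraction of the outer-lip bounding-box height, and the 12 outer-lip points are ordered so that positions 0 and 6 are the mouth corners. The name `classify_mouth` is hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def classify_mouth(outer_lips: List[Point], threshold: float) -> str:
    """Label a mouth as 'joy' or 'sad' from its 12 outer-lip landmarks.

    Assumes outer_lips[0] and outer_lips[6] are the mouth corners and that
    threshold is a fraction (0..1) of the lip bounding-box height.
    """
    ys = [y for _, y in outer_lips]
    top, bottom = min(ys), max(ys)  # image y grows downward
    box_height = bottom - top
    if box_height == 0:
        raise ValueError("degenerate mouth bounding box")

    left_corner_y = outer_lips[0][1]
    right_corner_y = outer_lips[6][1]
    # "Lip corner heights": vertical distance from the topmost landmark
    # down to each corner, normalised by the box height (an assumption).
    heights = [(left_corner_y - top) / box_height,
               (right_corner_y - top) / box_height]

    # Corners near the top of the box -> smile; otherwise -> sad face.
    return "joy" if max(heights) < threshold else "sad"
```

Taking the maximum of the two heights means both corners must sit in the top region for the mouth to count as a smile; a single drooping corner is enough to label the face as sad.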

MNIST networks?

I extracted the related faces from the Kaggle set and ended up with 8989 joy and 6077 sad training faces. For testing, I had 224 joy and 212 sad faces from the TFWM set.

After training and testing, the simple MNIST network obtained 51.4% accuracy and the deep MNIST network 55%.

single-rule?

I then ran the single-rule algorithm on the test set. Surprisingly, this single rule obtained an accuracy of 76%.
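To put the three accuracies in context, it helps to compare them with the score of a classifier that always predicts the larger test class. The short calculation below uses only the figures quoted above; the variable names are illustrative. The baseline comes out at about 51.4%, the same figure as the simple MNIST network. That is what a model answering "joy" for every face would score, although the notes do not say whether that is what happened.

```python
# Test-set sizes reported above (TFWM set).
joy_faces, sad_faces = 224, 212
total = joy_faces + sad_faces

# Accuracy of a classifier that always predicts the larger class.
majority_baseline = max(joy_faces, sad_faces) / total

# Accuracies as quoted in the notes.
reported = {
    "simple MNIST network": 0.514,
    "deep MNIST network": 0.55,
    "single rule": 0.76,
}

print(f"majority-class baseline: {majority_baseline:.1%}")
for name, accuracy in reported.items():
    # Approximate count of correctly labelled faces out of `total`.
    print(f"{name}: {accuracy:.1%} (~{round(accuracy * total)} of {total})")
```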

ARTNATOMY: It is intended to facilitate the teaching and learning of the anatomical and biomechanical foundation of facial expression morphology.

Building an Emotional Artificial Brain — Apple Inc.

Apple buys artificial intelligence startup Emotient: http://www.reuters.com/article/us-emotient-m-a-apple-idUSKBN0UL27420160107

demo of Emotion Detection from Text: http://demo.soulhackerslabs.com/emotion/

Deep Feelings about Deep Learning

It took me nearly 3 years to build a system that can guess your emotions from what you write, and the accuracy is far from perfect. The reason is that such systems are traditionally built using manually defined rules, features, and algorithms tailored for specific tasks.

great online book: http://neuralnetworksanddeeplearning.com/

video tutorials: http://techtalks.tv/talks/deep-learning-for-nlp-without-magic-part-1/58414/

TensorFlow: https://www.tensorflow.org/

TensorFlow - classify handwritten characters: https://www.tensorflow.org/versions/master/tutorials/mnist/pros/index.html

David and Goliath, or I Trying to Beat Microsoft at Facial Emotion Detection

Microsoft Project Oxford: https://www.projectoxford.ai/

Oxford facial emotion detection demo: https://www.projectoxford.ai/demo/Emotion

[Facial Recognition] Facial Emotion Recognition: Single-Rule 1–0 DeepLearning