It seems that after disconnecting and reconnecting it works in the browser, but in cmd I still get:

UnKnown cant find instance-mysite-cloud: Non-existent domain

Closed masterwishx closed 1 month ago

@kradalby should I close the issue?

I also found that ping works for instance-mysite-cloud, instance-mysite-cloud2, etc.

Maybe the "UnKnown" is related to AdGuard installed on my Windows PC. When it is disabled, DNS goes through AdGuard Home in Docker on the server, and nslookup shows the following:

nslookup instance-mysite-cloud :

Server: magicdns.localhost-tailscale-daemon

Address: 100.100.100.100

Non-authoritative answer:

Name: instance-darknas-cloud

Address: 127.0.1.1

nslookup instance-mysite-cloud2 :

Server: magicdns.localhost-tailscale-daemon

Address: 100.100.100.100

*** magicdns.localhost-tailscale-daemon can't find instance-mysite-cloud2: Non-existent domain

nslookup instance-mysite-cloud3 :

Server: magicdns.localhost-tailscale-daemon

Address: 100.100.100.100

*** magicdns.localhost-tailscale-daemon can't find instance-mysite-cloud3: Non-existent domain

etc ...

I have to find a Windows machine to test with, I'll try later in the week.

Can you test whether it behaves weirdly without AdGuard? I would be surprised if it works on other platforms and not Windows, as the Tailscale client is what does this, not headscale.

This is when Adguard in windows is disabled: https://github.com/juanfont/headscale/issues/2061#issuecomment-2297299391

Yes, on Linux it's OK, as it was before.

Apart from this strange nslookup output on Windows, ping and MagicDNS are working fine; I just needed to reconnect.

Hmm, not sure, sounds like a blip. If anyone else has Windows and can chime in, that would be helpful. Otherwise I'll try to test later.

I upgraded to beta2 (coming from beta1). I'm running headscale in Docker on a VPS, with 1 Windows client, 2 Android clients, and 1 Linux client acting as a subnet router. My DNS resolver is AdGuard Home on my LAN, accessible through my subnet router.

Everything working fine (except timeout on magic dns resolving - already present in beta1)

I am not aware of this issue? Is this a Windows issue? Adguard issue? can you open a ticket with information and steps to reproduce?

I just started to use headscale. I need to do some tests before opening an issue. It could be an edge case or a misconfiguration.

Is this the right DNS config when using AdGuard Home on one of the machines? I mean, should I disable the rest, like 1.1.1.1?

Hmm, so using the AdGuard IP on the headscale network might be a bit unstable.

You get kind of a bootstrap issue:

- if the connection is down to that node you might not have DNS

- if the connection is down, you might not reach headscale

- if headscale is down, you might not have DNS

That's just off the top of my head. Don't get me wrong, you can do this, but you need to be aware that you might run into some weird behaviour, and this might be the cause of this issue.
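To make the bootstrap concern above concrete, here is a minimal Python sketch (not part of headscale or Tailscale) that flags a configured nameserver whose address lies inside a network that is only reachable through the tunnel. The function name `resolver_depends_on_tunnel` is hypothetical, and the example networks are assumptions: 100.64.0.0/10 as the tailnet prefix and 192.168.100.0/24 as a LAN advertised by a subnet router.

```python
import ipaddress


def resolver_depends_on_tunnel(nameserver: str, tunneled_networks: list[str]) -> bool:
    """Return True if the nameserver address falls inside any tunneled network.

    A resolver that is only reachable through the tunnel cannot answer
    queries until the tunnel itself is up (the bootstrap problem).
    """
    addr = ipaddress.ip_address(nameserver)
    return any(addr in ipaddress.ip_network(net) for net in tunneled_networks)


if __name__ == "__main__":
    # Assumed example values: tailnet prefix plus one advertised LAN subnet.
    tunneled = ["100.64.0.0/10", "192.168.100.0/24"]
    for ns in ("192.168.100.20", "1.1.1.1"):
        print(ns, "depends on tunnel:", resolver_depends_on_tunnel(ns, tunneled))
```

A resolver flagged this way is not wrong to use, but it may explain DNS failures right after the client starts or while the route to that node is down.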

I think it was working fine on beta1, and it works now too; only the nslookup output on Windows is strange. I have AdGuard Home in Docker on the host network, on the same Ubuntu VPS as the headscale container, and the Tailscale client is also installed on that machine.

But if I uncomment the Cloudflare DNS, clients are not guaranteed to reach AdGuard Home DNS 100% of the time?

Yes, if you add more, I don't think it is guaranteed that they go there.

I think you should probably ask for support for this in Discord. We can keep the issue open until we are sure; it would be great if you could test some more.

Thanks, I will test it more and will also ask in Discord what other people get from nslookup.

On my Windows machine, DNS doesn't work until I re-enable it, in both beta1 and beta2. I'm using Pi-hole as my DNS server, if that makes a difference. I'm on Windows version 10.0.22631 Build 22631.

I've noticed that restarting tailscaled results in the same issue. I'll test this out with Tailscale's control server to see if it's a client or server issue.

Definitely a Headscale/MagicDNS issue. Doing the same service restart with Tailscale as the control server causes no DNS issues. Interestingly enough, after restarting and connecting first to Tailscale and then switching the 'account' to Headscale, DNS stops working again.

Also on android I get this warning even though DNS works:

@Zeashh but is this a new issue? Or an issue we already had?

The warning in Android is new, the Windows issue isn't. I was waiting for beta2 before mentioning it due to the DNS changes

I've the same issue on Android with Tailscale version 1.72.0. Not an issue on my other phone with Tailscale 1.70.0

I am trying to determine if this is a regression between alpha-x, beta1 or beta2. Or if it is an issue that already was present in Headscale prior to 0.22.3.

Based on @ygbillet it sounds like it might be because more errors are now surfaced in the new android version, not necessarily because things have changed in headscale. I know Tailscale has been working on which errors they surface to the users.

Regardless, I don't think this is related to the Windows issue described by @masterwishx.

Also, I saw they have some DNS fixes for Android in the latest version.

@masterwishx I will close this as an end to the Windows issues? Any Android issues should be opened as a separate issue and it should be tested with multiple headscale and tailscale versions before opening.

I do not have any Android devices, so I do not have any opportunity to do this, but I don't think it is related to beta2.

Have same issue on android

So it seems this is tailscale android 1.72.0 issue

The Windows issue is still present, even in beta3. Another thing I've noticed, the issue only occurs if the Tailscale service on Windows starts with "Use Tailscale DNS settings" on. If I start it with that option off and then turn it on, DNS works right away.

I do not have any Windows system. Can you help me track whether this occurs in:

I got different results. Same behavior on beta2 and beta3.

Configuration

server_url: <redacted> head.example.com
listen_addr: 0.0.0.0:8080
metrics_listen_addr: 127.0.0.1:9090
grpc_listen_addr: 127.0.0.1:50443
grpc_allow_insecure: false
noise:
  private_key_path: /var/lib/headscale/noise_private.key
prefixes:
  v6: fd7a:115c:a1e0::/48
  v4: 100.46.0.0/10
  allocation: sequential
dns:
  magic_dns: true
  base_domain: <redacted> ts.example.com
  # List of DNS servers to expose to clients.
  nameservers:
    global:
      - 192.168.5.1
    split:
      {}
  search_domains: []
  extra_records: []
  use_username_in_magic_dns: false

Results

I changed the DNS resolver from 192.168.5.1 (on my LAN, accessible through HeadScale) to 1.1.1.1 (on the Internet).

The DNS resolver on my LAN is AdGuard Home. The first two timeouts seem normal (in my case). I get the same result with plain old WireGuard on my router or on another peer on my network. I need to check directly on my LAN.

In your case, it may be an issue with connectivity to the DNS resolver.

Could you ping your DNS server through HeadScale? And run a traceroute (tracert)?

This sounds like a bootstrap problem if 192.168.5.1 is served over a subnet router via tailscale/headscale. First the WireGuard tunnel needs to be established, and only then can it serve DNS responses.

- 0.22.3: Tailscale doesn't use the configured DNS server, re-enabling the DNS option makes no difference

I am not sure if this makes any sense to me, how do you mean that Tailscale does not use any configured DNS?

- latest alpha (0.23.0-alpha12-debug on Dockerhub, 0.23.0-alpha12 on Github): same as the issue on beta2 and beta3

IIRC, the DNS configuration in this beta should be essentially the same as 0.22.3, so I find this strange.

- beta: I'm not sure which release you mean exactly, but IIRC beta1 and 0.22.3 had the same issue (DNS was reworked in beta2 no?)

The change to DNS happened between beta1 and beta2, so I would expect everything before that to be the same. Since it is not, I am inclined to think there might be something with your configuration?

I retested 0.22.3 and it seems I had missed the "Use Tailscale DNS settings" option earlier because I've now observed completely different behavior.

Aside from 0.22.3, the behavior I describe below is the same on beta2, beta3 and that alpha release I tested.

Did a bit of testing with external/internal DNS servers. If I set an external DNS server (like 1.1.1.1), I can ping and reach my local devices using subnet routers (as I should) and am able to resolve public DNS queries. On 0.22.3 this behavior is slightly different: I'm able to ping my devices, but connecting to, say, RDP over an IP address, doesn't work.

If I set it to my local DNS server, which I should only be able to access through the configured subnet routers, I'm unable to access either it or any other local device (i.e. the subnet route doesn't work at all).

I adapted my newest config (from beta3) to the syntax specified for those prior releases (config-example.yaml). Otherwise I've used essentially the same configuration throughout all my testing:

server_url: https://vpn.domain.com:443
listen_addr: 0.0.0.0:8080
metrics_listen_addr: 0.0.0.0:9090
grpc_listen_addr: 127.0.0.1:50443
grpc_allow_insecure: false
private_key_path: "/var/lib/headscale/private.key"
noise:
  private_key_path: "/var/lib/headscale/noise_private.key"
prefixes:
  v4: 100.64.0.0/10
  v6: fd7a:115c:a1e0::/48
  allocation: sequential
derp:
  server:
    enabled: true
    region_id: 999
    region_code: "headscale"
    region_name: "Headscale Embedded DERP"
    stun_listen_addr: "0.0.0.0:3478"
    private_key_path: "/var/lib/headscale/derp_server_private.key"
    automatically_add_embedded_derp_region: true
  urls: []
  paths:
    - /etc/headscale/derp.yaml
  auto_update_enabled: true
  update_frequency: 24h
disable_check_updates: true
ephemeral_node_inactivity_timeout: 1h
database:
  type: postgres
  postgres:
    host: postgres-0.local.domain.com
    port: 5432
    name: headscale
    user: headscale
    pass: "pass"
    max_open_conns: 10
    max_idle_conns: 10
    conn_max_idle_time_secs: 3600
    ssl: true
log:
  format: text
  level: debug
dns:
  magic_dns: true
  base_domain: clients.vpn.domain.com
  nameservers:
    global:
      - 192.168.100.20
    split: {}
  search_domains:
    - clients.vpn.domain.com
    - local.domain.com
  extra_records: []
unix_socket: /var/run/headscale/headscale.sock
unix_socket_permission: "0770"
logtail:
  enabled: true
randomize_client_port: false

# Set custom DNS search domains. With MagicDNS enabled,
# your tailnet base_domain is always the first search domain.
search_domains: []

Do we need to set the base domain here, or is it added automatically by default?

Added automatically

First of all, I have no bug with MagicDNS on Windows with a DNS server on my LAN. I noticed that I had a timeout before the response but the behavior was identical with Wireguard.

I did a few more tests and it turns out that the nslookup command adds the domain suffix.

In other words, the request from nslookup to google.fr is translated to google.fr.my.domain.suffix

To avoid this problem, end the FQDN with a trailing dot, i.e. run nslookup google.fr. (the final dot is part of the command).

Obviously, this problem should only exist on a PC attached to a domain.
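The suffix behaviour described above can be illustrated with a small Python sketch. This is a simplified model of a client that appends search suffixes, not the exact algorithm Windows nslookup uses; the function name `candidate_names` is hypothetical and the suffix `my.domain.suffix` is the placeholder from the comment above.

```python
def candidate_names(name: str, search_suffixes: list[str]) -> list[str]:
    """Return the fully qualified names a suffix-appending client would try, in order."""
    if name.endswith("."):
        # A trailing dot marks the name as already fully qualified,
        # so no search suffix is appended.
        return [name]
    # Simplified assumption: every suffix is tried first, then the bare name.
    candidates = [f"{name}.{suffix.strip('.')}." for suffix in search_suffixes]
    candidates.append(f"{name}.")
    return candidates


if __name__ == "__main__":
    suffixes = ["my.domain.suffix"]
    print(candidate_names("google.fr", suffixes))   # suffix is appended first
    print(candidate_names("google.fr.", suffixes))  # trailing dot: queried as-is
```

In this model the first query for `google.fr` goes out as `google.fr.my.domain.suffix.`, which would be consistent with seeing failures or timeouts before the real answer arrives.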

Ah, interesting, thanks for the clarification. But is this a new behaviour for you, or did you have it with previous versions too?

I am a bit lost. The way you describe this, it sounds more like a routing/subnet router issue than DNS, where the DNS part fails because you can't reach the server through the subnet router rather than because of an actual DNS issue.

I am also confused as to whether this is a regression or an improvement between 0.22.3 and 0.23.0+, as you mention that the current stable does not work either?

I have only used HeadScale since 0.22.3 (and immediately upgraded to beta1). The two timeouts were always present. My previous comment points out that it's not a TailScale issue but odd behavior from nslookup on a Windows AD member.

My comment 2 days ago was to point out that, having a configuration similar to op, I don't have any problems under Windows regarding MagicDNS or DNS resolver on LAN. Maybe it could be a routing issue.

@Zeashh

A wild guess but could this be because the subnet routes depend on other peers which can't be reached through DNS as DNS depends on those routes to begin with? Again wild guess on my part.

I'll do some more constructive testing in an isolated environment later and get back to you. This has been quite chaotic so far, but I'll have some more time tomorrow/this weekend to test it out thoroughly.

Thank you, I appreciate your testing, as it currently stands, I think this issue is what stands between beta and RC and eventually a release, so I'm quite keen to figure it out. It is a bit hard for me to lab up the whole thing with the resources I have available so it is great that you can do it.

A wild guess but could this be because the subnet routes depend on other peers which can't be reached through DNS as DNS depends on those routes to begin with? Again wild guess on my part.

This shouldn't be a problem if you're using the Tailnet domain or an IP.

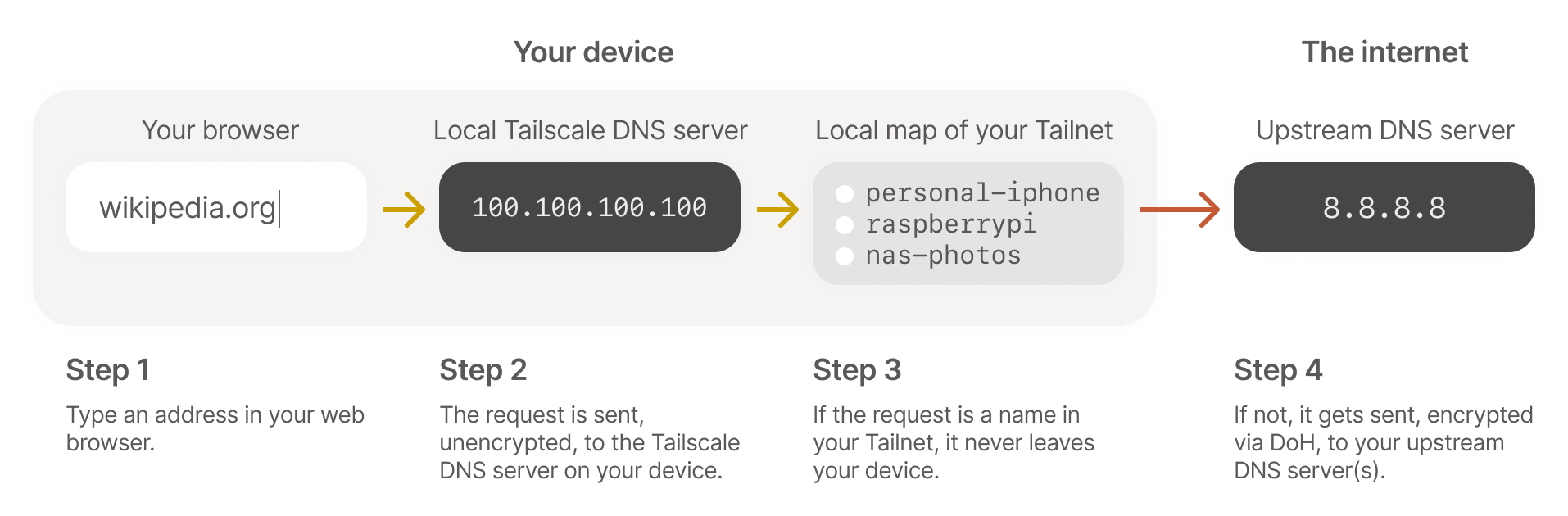

To fully understand how MagicDNS works, I recommend the following article: https://tailscale.com/blog/2021-09-private-dns-with-magicdns#how-magicdns-works

In this article, we learn that each TailScale client sets up a DNS server that answers on the Tailnet domain. If it can't answer, it forwards the request to the DNS server specified in the configuration.

Obviously, if connectivity to this server is not operational (routing, firewall), the request will not succeed.
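As a rough illustration of the behaviour summarised above, the following Python sketch models the decision a per-client resolver makes. It is a toy model written for this thread, not Tailscale's implementation: the function name `magicdns_decision`, the host `laptop`, its address `100.64.0.2`, and the NXDOMAIN result for unknown tailnet hosts are all assumptions.

```python
from typing import Optional


def magicdns_decision(query: str, base_domain: str, tailnet_hosts: dict[str, str],
                      upstream: Optional[str], upstream_reachable: bool) -> str:
    """Describe what a toy MagicDNS-like local resolver does with a query."""
    fqdn = query.rstrip(".").lower()
    suffix = "." + base_domain.rstrip(".").lower()
    if fqdn.endswith(suffix):
        # Names under the tailnet domain are answered locally (assumed NXDOMAIN if unknown).
        host = fqdn[: -len(suffix)]
        ip = tailnet_hosts.get(host)
        return f"local answer: {ip}" if ip else "NXDOMAIN (unknown tailnet host)"
    if upstream is None:
        return "no upstream configured"
    if not upstream_reachable:
        # Routing or firewall problems towards the upstream show up as timeouts.
        return f"timeout: upstream {upstream} unreachable"
    return f"forwarded to {upstream}"


if __name__ == "__main__":
    hosts = {"laptop": "100.64.0.2"}  # hypothetical tailnet node
    print(magicdns_decision("laptop.ts.example.com", "ts.example.com", hosts, "192.168.5.1", False))
    print(magicdns_decision("google.fr.", "ts.example.com", hosts, "192.168.5.1", False))
```

The point of the model is that tailnet names can keep resolving while public names time out, which is the pattern to look for when the upstream resolver sits behind a subnet route.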

Based on the article, some ideas for testing.

As posted before, plus some other tests: I have now also disabled Norton Firewall, while Windows AdGuard is enabled.

I also have AdGuard on a node in MagicDNS:

https://github.com/juanfont/headscale/issues/2061#issue-2474012349

On Windows, pinging all nodes works fine; only the nslookup DNS result is strange. On Linux I do not have this issue.

Also, when running tailscale status:

nslookup hs.mysite.com :

Server: UnKnown

Address: 100.100.100.100

Name: hs.mysite.com

route print:

When Windows AdGuard is disabled, there is no error in the health check.

When running tailscale status:

nslookup hs.mysite.com :

Server: magicdns.localhost-tailscale-daemon

Address: 100.100.100.100

*** magicdns.localhost-tailscale-daemon can't find hs.mysite.com : Non-existent domain

nslookup google.com :

Server: magicdns.localhost-tailscale-daemon

Address: 100.100.100.100

Non-authoritative answer:

Name: google.com

Addresses: 2a00:1450:4028:804::200e

142.250.75.78

nslookup instance-mysite.com :

Server: magicdns.localhost-tailscale-daemon

Address: 100.100.100.100

*** magicdns.localhost-tailscale-daemon can't find instance-mysite.com: Non-existent domain

But ping is also working for nodes and others ...

I was in the process of writing a HUGE reply with a ton of constructive testing, and then something bizarre™ happened. The original issue was... gone?

Now a bit of context. Actually I'll just paste what I've already written for that reply I've now dropped:

The setup involves 2 Linux machines (let's call them router peers 1 and 2), which have configured routes on the tailscale client using this command:

tailscale up --advertise-exit-node --advertise-routes=192.168.100.0/24 --advertise-tags=tag:connector --login-server=https://vpn.grghomelab.me --accept-dns=false

192.168.100.0/24 is my local subnet. DNS is served through Pi-hole on 192.168.100.20.

My Windows laptop is supposed to serve as a client and is where I've first noticed the issue regarding connectivity on startup.

So notice how I've got 2 router peers? Yea well one of them is an RPI with firewalld as its firewall. In short, I noticed that everything worked when the subnet route was set through the other router peer, which doesn't have an OS-level firewall (because it's a VM in Proxmox so I use its firewall, way simpler that way).

And lo and behold, if I run tailscale down on the RPI, the issue is gone. In other words, Tailscale now always uses the other router peer, which works.

The only thing I'm not sure about is why disabling Tailscale DNS and re-enabling it fixes this? And why it only affects Windows as far as we can tell?

I haven't done testing with versions other than beta3 since it was then that I noticed this. Tailscale clients are on versions 1.72.0/1.72.1 for Windows and Linux respectively.

TLDR; firewalld doesn't like Tailscale's subnet routes and there should be testing/documenting done on how this can be achieved, though I personally don't need it. The issue can be closed as far as I'm concerned.

I'm glad you figured it out. I'm going to close this, as it seems to be unrelated to the betas, and that means we can progress with the releases.

As for the firewalld, Happy for someone to contribute some docs/caveats here, I would argue that they are probably more related to Tailscale than Headscale as I would expect you to see this behaviour with the official SaaS.

Is this a support request?

Is there an existing issue for this?

Current Behavior

After updating to beta2, MagicDNS is not working on Windows when running:

nslookup instance-mysite-cloud:

Also tried both:

use_username_in_magic_dns: false

and

use_username_in_magic_dns: true

I also have this in the config:

Expected Behavior

It should work as before. Also, in the browser I can't get to

instance-mysite-cloud:port

Steps To Reproduce

...

Environment

Runtime environment

Anything else?

....