Processing, pipelines, and classifiers

Preprocessing

- Similar to R, Python's pandas package provides a fluent DataFrame API for data manipulation.

- Python has also long been known for good data manipulation, and it beats Java or C in terms of developer efficiency.

- If more efficiency is required, C bindings exist for many routine operations, often written in Cython, since pandas and NumPy are backed by C and Fortran.
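As a rough illustration of the fluent DataFrame style mentioned above, here is a minimal sketch. The column names (`user`, `text`, `created_at`) and the sample rows are invented for the example and do not come from the project data.

```python
import pandas as pd

# Toy records standing in for raw posts (hypothetical data).
raw = pd.DataFrame({
    "user": ["ann", "bob", "ann", "cy", "bob"],
    "text": ["  Hello World ", "BUY now!!", None, "hello again", "Buy NOW"],
    "created_at": ["2015-01-01 09:00", "2015-01-01 23:30",
                   "2015-01-02 10:15", "2015-01-02 11:00",
                   "2015-01-03 01:45"],
})

# Each step returns a new DataFrame, so the cleaning reads top to bottom.
clean = (
    raw
    .dropna(subset=["text"])                     # drop rows without text
    .assign(
        text=lambda d: d["text"].str.strip().str.lower(),
        created_at=lambda d: pd.to_datetime(d["created_at"]),
    )
    .assign(hour=lambda d: d["created_at"].dt.hour)  # simple time feature
)

# Vectorized aggregation, executed in compiled code under the hood.
posts_per_user = clean.groupby("user").size()
```

The chained form keeps every intermediate step visible without naming temporary variables, which is most of what "fluent" means here.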

Pipelining

- It is also important to remember that real-life data does not come in a perfect format that is easily ingested by a machine learning algorithm.

- While algorithms usually ingest data that comes from some R^n, in real life that is not the case.

- Consider our task of text classification.

- We need to turn the raw text into normalized text, combine features derived from user data and time information with TF-IDF/LSI features generated from the text, and then convert everything into a sparse matrix for the classifier.

- By using the simple Pipeline API, we are able to compose very complex analytics structures in a modular and reusable way.

- This is done by implementing a class that inherits from BaseEstimator and TransformerMixin and implements fit and transform:

from sklearn.base import BaseEstimator, TransformerMixin


class ItemGetter(BaseEstimator, TransformerMixin):
    """
    ItemGetter
    ~~~~~~~~~~
    ItemGetter is a Transformer for Pipeline objects.

    Usage:
        Initialize the ItemGetter with a `key` and its
        transform call will select a column out of the
        specified DataFrame.
    """

    def __init__(self, key):
        self.key = key

    def fit(self, X, y=None):
        # Nothing to learn; return self so the step can be chained.
        return self

    def transform(self, X, y=None):
        return X[self.key]

    def fit_transform(self, X, y=None, **fit_params):
        return self.transform(X)

- By using this API, we can build complex structures in very few lines of code:

Pipeline([

("features", FeatureUnion([

("text", Pipeline([

("get", ItemGetter("text")),

("tfidf", TfidfTransformer()),

("lsi", TruncatedSVC())

])),

("user", Pipeline([

("get", ItemGetter("user")),

("network", NetworkFeatures())

]))

])

])- The above line of code converts a JSON object with a

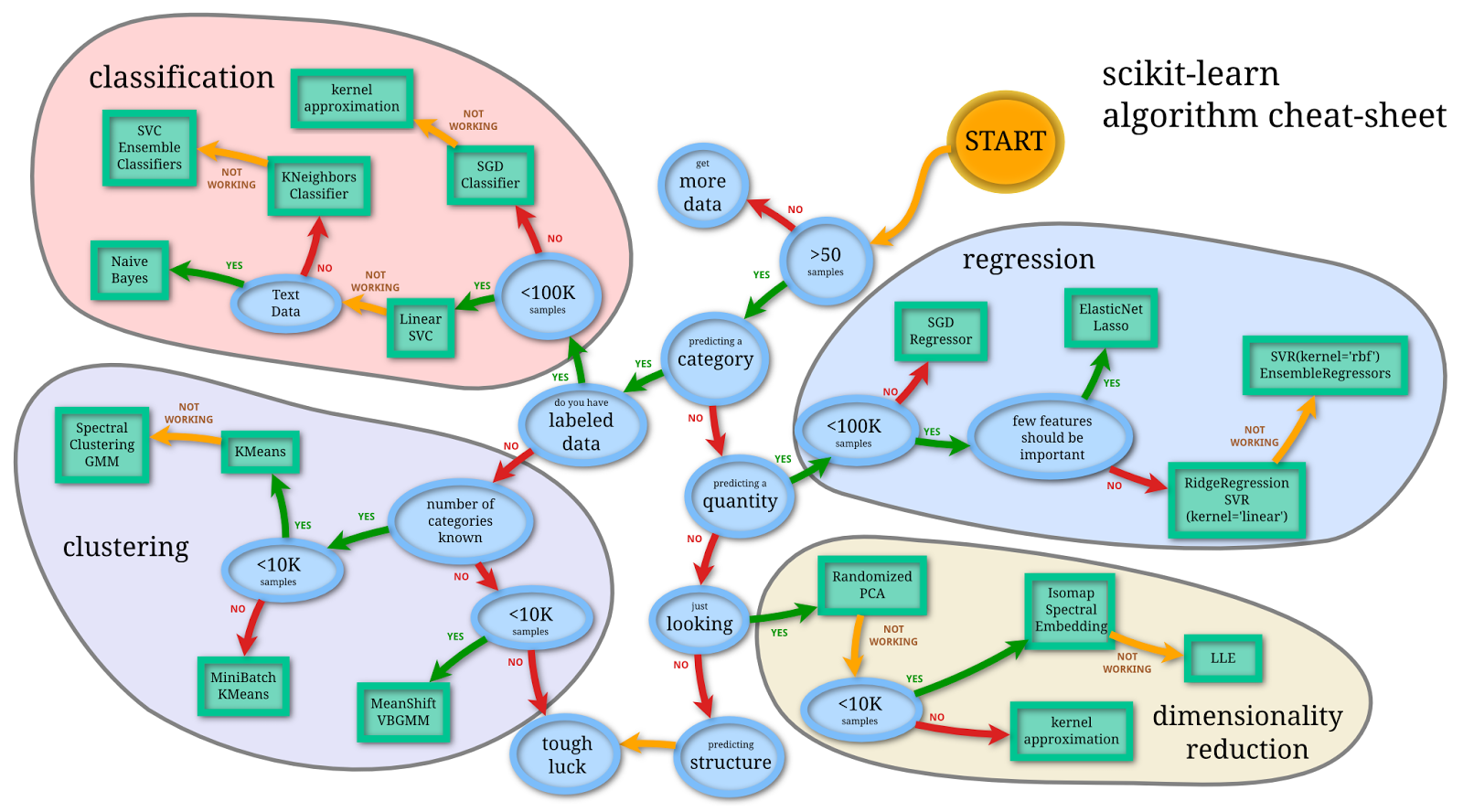

"text", "user"field and converts it into a sparse matrix that has been factorized. - The benefit of scikit learn as well is that unlike other language packages, scikit has a huge collection of pre implemented state of the art classifiers that rely on well implemented open source tools like LibSVM. and LibLinear.

- Moreover, adding a classifier is as easy as adding the class to the end of the pipeline.

from sklearn.linear_model import LogisticRegression

Pipeline([
    ("features", FeatureUnion([
        ("text", Pipeline([
            ("get", ItemGetter("text")),
            ("tfidf", TfidfVectorizer()),
            ("lsi", TruncatedSVD()),
        ])),
        ("user", Pipeline([
            ("get", ItemGetter("user")),
            ("network", NetworkFeatures()),
        ])),
    ])),
    # The classifier is just one more (name, estimator) step.
    ("clf", LogisticRegression()),
])

- Lastly, and most importantly, there is grid search.

In the context of machine learning, hyperparameter optimization or model selection is the problem of choosing a set of hyperparameters for a learning algorithm, usually with the goal of optimizing a measure of the algorithm's performance on an independent data set. Often cross-validation is used to estimate this generalization performance. Hyperparameter optimization contrasts with actual learning problems, which are also often cast as optimization problems, but optimize a loss function on the training set alone. In effect, learning algorithms learn parameters that model/reconstruct their inputs well, while hyperparameter optimization is to ensure the model does not overfit its data by tuning, e.g., regularization.
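To make the definition concrete, the following sketch estimates generalization performance by cross-validation for a few candidate regularization strengths and keeps the best one. The synthetic data from `make_classification` and the three candidate values of `C` are assumptions chosen only for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

# Synthetic binary classification data (stand-in for real features).
X, y = make_classification(n_samples=200, n_features=20, random_state=0)

scores_by_C = {}
for C in (0.01, 1.0, 100.0):  # hypothetical candidate hyperparameters
    model = LogisticRegression(C=C, max_iter=1000)
    # Each fold trains on 4/5 of the data and scores on the held-out 1/5.
    fold_scores = cross_val_score(model, X, y, cv=5, scoring="f1")
    scores_by_C[C] = fold_scores.mean()

# The hyperparameter with the best held-out score wins.
best_C = max(scores_by_C, key=scores_by_C.get)
```

The inner `fit` calls learn model parameters on the training folds, while the outer loop picks the hyperparameter from held-out scores, which is the contrast the paragraph above draws.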

- While other frameworks require custom grid search code and potentially complex methods of injecting parameters into models, by taking advantage of Pythonic metaprogramming (nested parameters are addressed as step__parameter) we simply need to specify a parameter grid and the pipeline.

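from scipy.stats import uniform
from sklearn.model_selection import RandomizedSearchCV

# Keys use scikit-learn's step__parameter naming for nested pipeline steps.
params = {
    'clf__C': uniform(0.01, 1000),  # samples C uniformly from [0.01, 1000.01]
    'features__text__tfidf__analyzer': ['word', 'char'],
    'features__text__tfidf__lowercase': [False, True],
    'features__text__tfidf__max_features': list(range(10000, 100000, 1000)),
    # n_grams is a helper defined elsewhere, presumably yielding (min_n, max_n) tuples.
    'features__text__tfidf__ngram_range': list(n_grams(3, 14)),
    'features__text__tfidf__norm': ['l2']
}

clf = RandomizedSearchCV(pipeline, params, n_iter=60, n_jobs=4, verbose=1, scoring="f1")
clf.fit(X_train, y_train)

- With this in mind, building a basic text classifier can be extremely simple from start to finish.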

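from sklearn.decomposition import TruncatedSVD
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline

X, y = get_data()  # project helper, assumed to return raw texts and labels

pipeline = Pipeline([
    ("tfidf", TfidfVectorizer()),  # raw text in, TF-IDF matrix out
    ("lsi", TruncatedSVD()),
    ("clf", LogisticRegression()),
])

pipeline.fit(X, y)

Technical Debt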

- By using pandas DataFrames and implementing scikit-learn pipeline elements, we produce more maintainable, modular code that can be reused in other projects.

- Moreover, by using this common API, the code we produce is more self-documenting.

- Useful abstractions such as FeatureUnion and Pipeline reduce technical debt, because we do not have to implement custom scripts for this kind of work.

- This allows us to focus on modeling rather than coding.

- We spend more time building rather than just writing scaffolding code.

- It also lets less experienced Python developers try things out.
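The reuse argument can be sketched with a small end-to-end example in which one transformer class serves two different feature branches. Everything here is illustrative: `ColumnSelector`, its `as_2d` flag, the `n_followers` column, and the six toy rows are hypothetical and not part of the original project.

```python
import pandas as pd
from sklearn.base import BaseEstimator, TransformerMixin
from sklearn.decomposition import TruncatedSVD
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import FeatureUnion, Pipeline


# Mixin listed first, as current scikit-learn documentation recommends.
class ColumnSelector(TransformerMixin, BaseEstimator):
    """Hypothetical reusable step: select one DataFrame column."""

    def __init__(self, key, as_2d=False):
        self.key = key
        self.as_2d = as_2d

    def fit(self, X, y=None):
        return self  # stateless, nothing to learn

    def transform(self, X):
        # Text steps want a 1-D column; numeric steps want a 2-D array.
        if self.as_2d:
            return X[[self.key]].to_numpy()
        return X[self.key]


# Toy data invented for the example.
df = pd.DataFrame({
    "text": ["cheap pills buy now", "meeting moved to noon",
             "buy cheap watches now", "lunch at noon tomorrow",
             "cheap offer buy today", "project meeting tomorrow"],
    "n_followers": [3, 250, 1, 310, 2, 180],
})
y = [1, 0, 1, 0, 1, 0]

pipeline = Pipeline([
    ("features", FeatureUnion([
        ("text", Pipeline([
            ("get", ColumnSelector("text")),
            ("tfidf", TfidfVectorizer()),
            ("lsi", TruncatedSVD(n_components=2, random_state=0)),
        ])),
        # The same class is reused for a numeric column.
        ("user", ColumnSelector("n_followers", as_2d=True)),
    ])),
    ("clf", LogisticRegression(max_iter=1000)),
])

pipeline.fit(df, y)
predictions = pipeline.predict(df)

# Nested parameters are discoverable by name, which is what grid search uses.
param_names = sorted(pipeline.get_params().keys())
```

Because every step follows the same fit/transform contract, swapping the classifier or adding a third feature branch is a one-line change rather than a new script.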

Exploration

- Python is a popular language with great tools.

- IPython notebooks support interactive exploration.

- Data visualization is super easy.

Issues

It is important to note that, with the split between Python 2.7 and 3.4, various parts of the Python ecosystem still sit on 2.7. It is recommended that future work be done in 3.4 and developed in virtual environments in order to maintain compatibility. Luckily, the majority of the packages we use for pipelining have already been ported to 3.4.
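One possible way to follow this recommendation from Python itself is sketched below, using only the standard library. The directory name `pipeline-env` is a placeholder, and the environment is created in a temporary location so the example has no side effects on a real project.

```python
import os
import sys
import tempfile
import venv

# Fail fast if the interpreter is older than the recommended version.
if sys.version_info < (3, 4):
    raise RuntimeError("This project assumes Python 3.4 or newer")

# Create an isolated environment in a throwaway directory (placeholder name).
env_dir = os.path.join(tempfile.mkdtemp(), "pipeline-env")
venv.create(env_dir, with_pip=False)  # with_pip=True would also bootstrap pip

# Every environment created by venv carries this marker file.
config_path = os.path.join(env_dir, "pyvenv.cfg")
```

In day-to-day use the same result usually comes from running `python3 -m venv <dir>` in a shell and installing pinned package versions inside the activated environment.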