What's the file size of your HLG.pt?

Closed by shcxlee 1 year ago.


It's 4.0K.
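An HLG.pt of a few kilobytes is a red flag. HLG embeds the compiled 3-gram G (G_3_gram.fst.txt alone is 185M in the data/lm listing later in this thread), so a healthy file should be far larger.

Below is a minimal sketch for spotting such files. It assumes a hypothetical 1 MiB cut-off (not an icefall setting) and the data/lang_bpe_500 layout shown in this thread. The helper name find_suspicious_files is also hypothetical.

```python
import os

# Hypothetical cut-off (not an icefall setting): a compiled HLG embeds the whole
# 3-gram G, so a .pt file this small is almost certainly an empty or failed save.
SUSPICIOUS_BYTES = 1024 * 1024  # 1 MiB


def find_suspicious_files(directory, suffix=".pt", min_bytes=SUSPICIOUS_BYTES):
    """Return sorted (name, size_in_bytes) pairs for `suffix` files smaller than `min_bytes`."""
    suspicious = []
    for name in os.listdir(directory):
        path = os.path.join(directory, name)
        if name.endswith(suffix) and os.path.isfile(path):
            size = os.path.getsize(path)
            if size < min_bytes:
                suspicious.append((name, size))
    return sorted(suspicious)


if __name__ == "__main__":
    lang_dir = "data/lang_bpe_500"  # directory layout taken from this thread
    if os.path.isdir(lang_dir):
        for name, size in find_suspicious_files(lang_dir):
            print(f"{name}: only {size} bytes, consider deleting and regenerating it")
```

With the listing in this thread, the sketch would flag both HLG.pt and HLG_modified.pt (134 bytes each). The larger L.pt, Linv.pt and L_disambig.pt would not be flagged.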

Did you download it or generate it by yourself?

I didn't download it. All I did was run prepare.sh.

What is the output of the following commands?

ls -lh data/lm

ls -lh data/lang_bpe_500

ls -lh data/lm:

total 7.5G

-rw-r--r-- 1 185M Aug 19 15:18 G_3_gram.fst.txt

-rw-r--r-- 1 7.3G Aug 19 15:31 G_4_gram.fst.txt

ls -lh data/lang_bpe_500:

total 205M

-rw-r--r-- 1 240K Aug 19 15:14 bpe.model

-rw-r--r-- 1 134 Aug 25 22:19 HLG_modified.pt

-rw-r--r-- 1 134 Aug 25 22:19 HLG.pt

-rw-r--r-- 1 20M Aug 19 15:14 L_disambig.pt

-rw-r--r-- 1 4.8M Aug 19 15:14 lexicon_disambig.txt

-rw-r--r-- 1 4.7M Aug 19 15:14 lexicon.txt

-rw-r--r-- 1 19M Aug 23 12:00 Linv.pt

-rw-r--r-- 1 19M Aug 19 15:14 L.pt

-rw-r--r-- 1 3.0M Aug 19 15:18 P.arpa

-rw-r--r-- 1 2.9M Aug 19 15:18 P.fst.txt

-rw-r--r-- 1 5.0K Aug 19 15:14 tokens.txt

-rw-r--r-- 1 83M Aug 19 15:17 transcript_tokens.txt

-rw-r--r-- 1 48M Aug 19 15:13 transcript_words.txt

-rw-r--r-- 1 240K Aug 19 15:14 unigram_500.model

-rw-r--r-- 1 7.4K Aug 19 15:14 unigram_500.vocab

-rw-r--r-- 1 2.9M Aug 19 15:13 words.txt

Could you do

rm data/lang_bpe_500/HLG.pt

./prepare.sh --stage 9 --stop-stage 9

ls -lh data/lang_bpe_500/

And also post the output of ./prepare.sh --stage 9 --stop-stage 9.

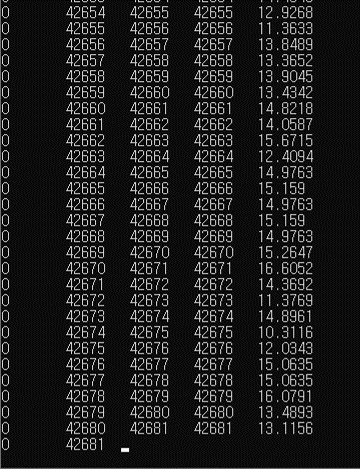

Now I am running stage 9. I saw an error message, but it disappeared too quickly to read or copy. I am currently getting a bunch of numbers.


I will run ls -lh data/lang_bpe_500/ after stage 9 is done.

> Now I am running stage 9. I saw an error message but that disappeared too quickly to read or copy

Please use

./prepare.sh --stage 9 --stop-stage 9 2>&1 | tee error.log

and post the first 20 lines or so of error.log.
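The shell pipeline above handles an error that scrolls away too fast. It merges stderr into stdout (2>&1) and copies everything to error.log with tee.

If you would rather drive the stage from Python, a rough equivalent is sketched below. run_and_tee is a hypothetical helper, not part of icefall.

```python
import subprocess
import sys


def run_and_tee(cmd, log_path):
    """Run `cmd`, echoing its combined stdout/stderr to the console and to `log_path`.

    Roughly what `cmd 2>&1 | tee log_path` does in a shell. Returns the exit code.
    """
    with open(log_path, "w") as log, subprocess.Popen(
        cmd,
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,  # merge stderr into stdout, like 2>&1
        text=True,
    ) as proc:
        # Stream line by line so nothing scrolls past without also being saved.
        for line in proc.stdout:
            sys.stdout.write(line)
            log.write(line)
    # Leaving the Popen context waits for the process, so returncode is set.
    return proc.returncode
```

Usage would be something like run_and_tee(["./prepare.sh", "--stage", "9", "--stop-stage", "9"], "error.log").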

These are the first 60 or so lines of error.log:

2022-09-22 22:01:13,616 INFO [compile_hlg.py:73] Loading G_3_gram.fst.txt

[F] /usr/share/miniconda/envs/k2/conda-bld/k2_1656951676196/work/k2/csrc/fsa_utils.cu:295:void k2::OpenFstStreamReader:$

[ Stack-Trace: ]

/anaconda3/envs/icefall/lib/python3.9/site-packages/k2/lib/libk2_log.so(k2::internal::GetStackTrace()+0x47) [$

/anaconda3/envs/icefall/lib/python3.9/site-packages/k2/lib/libk2context.so(k2::internal::Logger::~Logger()+0x$

/anaconda3/envs/icefall/lib/python3.9/site-packages/k2/lib/libk2context.so(k2::OpenFstStreamReader::ProcessLi$

/anaconda3/envs/icefall/lib/python3.9/site-packages/k2/lib/libk2context.so(k2::FsaFromString(std::string cons$

/anaconda3/envs/icefall/lib/python3.9/site-packages/_k2.cpython-39-x86_64-linux-gnu.so(+0x5374b) [0x7f1522c34$

/anaconda3/envs/icefall/lib/python3.9/site-packages/_k2.cpython-39-x86_64-linux-gnu.so(+0x211c1) [0x7f1522c02$

python3(+0x1828f4) [0x55d9b6ce48f4]

python3(_PyObject_MakeTpCall+0x2df) [0x55d9b6c9e47f]

python3(_PyEval_EvalFrameDefault+0x1350) [0x55d9b6d38c90]

python3(+0x196fe3) [0x55d9b6cf8fe3]

python3(+0x198709) [0x55d9b6cfa709]

python3(+0xfe73d) [0x55d9b6c6073d]

python3(+0x196fe3) [0x55d9b6cf8fe3]

python3(+0x198709) [0x55d9b6cfa709]

python3(+0xfe73d) [0x55d9b6c6073d]

python3(_PyFunction_Vectorcall+0x104) [0x55d9b6cf9be4]

python3(+0xfe088) [0x55d9b6c60088]

python3(_PyFunction_Vectorcall+0x104) [0x55d9b6cf9be4]

python3(+0xfe088) [0x55d9b6c60088]

python3(+0x196fe3) [0x55d9b6cf8fe3]

python3(PyEval_EvalCodeEx+0x4c) [0x55d9b6da5a7c]

python3(PyEval_EvalCode+0x1b) [0x55d9b6cf9dbb]

python3(+0x243b2b) [0x55d9b6da5b2b]

python3(+0x274155) [0x55d9b6dd6155]

python3(+0x1151f7) [0x55d9b6c771f7]

python3(PyRun_SimpleFileExFlags+0x1bf) [0x55d9b6ddb72f]

python3(Py_RunMain+0x378) [0x55d9b6ddbdf8]

python3(Py_BytesMain+0x39) [0x55d9b6ddbff9]

/lib/x86_64-linux-gnu/libc.so.6(__libc_start_main+0xe7) [0x7f15b7040c87]

python3(+0x2016a0) [0x55d9b6d636a0]

Traceback (most recent call last):

File "/anaconda3/envs/icefall/lib/python3.9/site-packages/k2/fsa.py", line 1280, in from_str

ragged_labels) = _k2.fsa_from_str(s, num_aux_labels,

RuntimeError:

Some bad things happened. Please read the above error messages and stack

trace. If you are using Python, the following command may be helpful:

gdb --args python /path/to/your/code.py

(You can use `gdb` to debug the code. Please consider compiling

a debug version of k2.).

If you are unable to fix it, please open an issue at:

https://github.com/k2-fsa/k2/issues/new

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/icefall/egs/librispeech/ASR/./local/compile_hlg.py", line 159, in <module>

main()

File "/icefall/egs/librispeech/ASR/./local/compile_hlg.py", line 147, in main

HLG = compile_HLG(lang_dir)

File "/icefall/egs/librispeech/ASR/./local/compile_hlg.py", line 75, in compile_HLG

G = k2.Fsa.from_openfst(f.read(), acceptor=False)

File "/anaconda3/envs/icefall/lib/python3.9/site-packages/k2/fsa.py", line 1352, in from_openfst

return Fsa.from_str(s, acceptor, num_aux_labels,

File "/anaconda3/envs/icefall/lib/python3.9/site-packages/k2/fsa.py", line 1293, in from_str

raise ValueError(f'The following is not a valid Fsa {o}(with '

ValueError: The following is not a valid Fsa in the OpenFst format (with num_aux_labels=1):

And now I don't have HLG.pt when I run ls -lh data/lang_bpe_500/

ls -lh data/lang_bpe_500/

total 205M

-rw-r--r-- 1 240K Aug 19 15:14 bpe.model

-rw-r--r-- 1 134 Aug 25 22:19 HLG_modified.pt

-rw-r--r-- 1 20M Aug 19 15:14 L_disambig.pt

-rw-r--r-- 1 4.8M Aug 19 15:14 lexicon_disambig.txt

-rw-r--r-- 1 4.7M Aug 19 15:14 lexicon.txt

-rw-r--r-- 1 19M Aug 23 12:00 Linv.pt

-rw-r--r-- 1 19M Aug 19 15:14 L.pt

-rw-r--r-- 1 3.0M Aug 19 15:18 P.arpa

-rw-r--r-- 1 2.9M Aug 19 15:18 P.fst.txt

-rw-r--r-- 1 5.0K Aug 19 15:14 tokens.txt

-rw-r--r-- 1 83M Aug 19 15:17 transcript_tokens.txt

-rw-r--r-- 1 48M Aug 19 15:13 transcript_words.txt

-rw-r--r-- 1 240K Aug 19 15:14 unigram_500.model

-rw-r--r-- 1 7.4K Aug 19 15:14 unigram_500.vocab

-rw-r--r-- 1 2.9M Aug 19 15:13 words.txt

> [F] /usr/share/miniconda/envs/k2/conda-bld/k2_1656951676196/work/k2/csrc/fsa_utils.cu:295:void k2::OpenFstStreamReader:$

Could you post the whole line? It is not complete.

Sorry about that. Here's the complete line.

[F] /usr/share/miniconda/envs/k2/conda-bld/k2_1656951676196/work/k2/csrc/fsa_utils.cu:295:void k2::OpenFstStreamReader::ProcessLine(std::string&) Invalid line: 4 0 200004 0, eof=true, fail=true, src_state=4, dest_state=0
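The rejected line 4 0 200004 0 is an ordinary arc in the OpenFst text format: source state, destination state, input label, output label. It simply omits the optional trailing weight, which defaults to the semiring's One (0.0 for the tropical and log semirings).

The eof=true, fail=true flags suggest that the old reader stumbled over that missing weight, but the thread does not confirm the exact cause. The tolerant line parser below is sketched only to illustrate the format; it is not k2's reader, and parse_openfst_line is a hypothetical name.

```python
def parse_openfst_line(line, acceptor=False):
    """Parse one line of an OpenFst text FST.

    Returns ("arc", src, dest, ilabel, olabel, weight) or ("final", state, weight).
    A missing weight defaults to 0.0, i.e. One in the tropical/log semirings.
    """
    fields = line.split()
    arc_fields = 3 if acceptor else 4  # acceptors carry a single label per arc
    if len(fields) in (arc_fields, arc_fields + 1):
        src, dest, ilabel = int(fields[0]), int(fields[1]), int(fields[2])
        olabel = ilabel if acceptor else int(fields[3])
        weight = float(fields[arc_fields]) if len(fields) == arc_fields + 1 else 0.0
        return ("arc", src, dest, ilabel, olabel, weight)
    if len(fields) in (1, 2):  # final-state line: state [weight]
        weight = float(fields[1]) if len(fields) == 2 else 0.0
        return ("final", int(fields[0]), weight)
    raise ValueError(f"Invalid line: {line!r}")
```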

What version of k2 are you using?

python3 -m k2.version

I am using version 1.17:

k2 version: 1.17

Build type: Release

Git SHA1: 3dc222f981b9fdbc8061b3782c3b385514a2d444

Git date: Mon Jul 4 02:13:04 2022

Cuda used to build k2: 10.2

cuDNN used to build k2: 8.0.2

Python version used to build k2: 3.9

OS used to build k2: Ubuntu 18.04.6 LTS

CMake version: 3.18.4

GCC version: 7.5.0

CMAKE_CUDA_FLAGS: -lineinfo --expt-extended-lambda -use_fast_math -Xptxas=-w --expt-extended-lambda -gencode arch=compute_35,code=sm_35 -lineinfo --expt-extended-lambda -use_fast_math -Xptxas=-w --expt-extended-lambda -gencode arch=compute_50,code=sm_50 -lineinfo --expt-extended-lambda -use_fast_math -Xptxas=-w --expt-extended-lambda -gencode arch=compute_60,code=sm_60 -lineinfo --expt-extended-lambda -use_fast_math -Xptxas=-w --expt-extended-lambda -gencode arch=compute_61,code=sm_61 -lineinfo --expt-extended-lambda -use_fast_math -Xptxas=-w --expt-extended-lambda -gencode arch=compute_70,code=sm_70 -lineinfo --expt-extended-lambda -use_fast_math -Xptxas=-w --expt-extended-lambda -gencode arch=compute_75,code=sm_75 -DONNX_NAMESPACE=onnx_c2 -gencode arch=compute_35,code=sm_35 -gencode arch=compute_50,code=sm_50 -gencode arch=compute_52,code=sm_52 -gencode arch=compute_60,code=sm_60 -gencode arch=compute_61,code=sm_61 -gencode arch=compute_70,code=sm_70 -gencode arch=compute_75,code=sm_75 -gencode arch=compute_75,code=compute_75 -Xcudafe --diag_suppress=cc_clobber_ignored,--diag_suppress=integer_sign_change,--diag_suppress=useless_using_declaration,--diag_suppress=set_but_not_used,--diag_suppress=field_without_dll_interface,--diag_suppress=base_class_has_different_dll_interface,--diag_suppress=dll_interface_conflict_none_assumed,--diag_suppress=dll_interface_conflict_dllexport_assumed,--diag_suppress=implicit_return_from_non_void_function,--diag_suppress=unsigned_compare_with_zero,--diag_suppress=declared_but_not_referenced,--diag_suppress=bad_friend_decl --expt-relaxed-constexpr --expt-extended-lambda -D_GLIBCXX_USE_CXX11_ABI=0 --compiler-options -Wall --compiler-options -Wno-strict-overflow --compiler-options -Wno-unknown-pragmas

CMAKE_CXX_FLAGS: -D_GLIBCXX_USE_CXX11_ABI=0 -Wno-unused-variable -Wno-strict-overflow

PyTorch version used to build k2: 1.12.0

PyTorch is using Cuda: 10.2

NVTX enabled: True

With CUDA: True

Disable debug: True

Sync kernels : False

Disable checks: False

Max cpu memory allocate: 214748364800

k2 abort: False

> I am using 1.17 version

Please update your k2 to the latest version. This issue has been fixed in the latest k2.
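If you script environment checks, the version string printed by python3 -m k2.version can be compared numerically. The thread only tells us that 1.17 failed and that the later decoding run used 1.19. The first release containing the fix is not stated, so treating 1.19 as the minimum is an assumption based on what worked here.

A small sketch; the helper names are hypothetical.

```python
def version_tuple(version):
    """Turn "1.17" or "1.19.dev20220918" into a comparable tuple of ints, e.g. (1, 19)."""
    parts = []
    for piece in version.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:  # stop at the first non-numeric component such as "dev..."
            break
        parts.append(int(digits))
    return tuple(parts)


def needs_upgrade(installed, minimum):
    """True if the installed version is older than the assumed minimum."""
    return version_tuple(installed) < version_tuple(minimum)
```

For example, needs_upgrade("1.17", "1.19") is True, while needs_upgrade("1.19", "1.19") is False.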

I've updated k2 and tried running the attention-decoder method, but I got FileNotFoundError: [Errno 2] No such file or directory: 'data/lang_bpe_500/HLG.pt'. Should I run ./prepare.sh --stage 9 --stop-stage 9 to generate HLG.pt?

> Should I run ./prepare.sh --stage 9 --stop-stage 9

Yes, please update k2 first and then rerun stage 9.

Sorry for the late reply. It took me a while to run decode.py multiple times. I keep getting "RuntimeError: CUDA out of memory." even though I decreased --max-duration from 150 to 1.

./conformer_ctc/decode.py --max-duration 1 --method attention-decoder
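--max-duration caps the total seconds of audio in each batch that the data loader builds (icefall's LibriSpeech recipes pass it to a lhotse sampler). The greedy grouping below is a simplified stand-in that shows what the cap controls; it is not lhotse's bucketing sampler, and batch_by_duration is a hypothetical name.

```python
def batch_by_duration(durations, max_duration):
    """Greedily group utterance durations (seconds) so each batch totals <= max_duration.

    An utterance longer than max_duration gets a batch of its own; that is a
    choice made for this sketch, and real samplers may handle it differently.
    """
    batches, current, total = [], [], 0.0
    for d in durations:
        if current and total + d > max_duration:
            batches.append(current)
            current, total = [], 0.0
        current.append(d)
        total += d
    if current:
        batches.append(current)
    return batches
```

The cap only limits how much audio is decoded at once. In the traceback below, the OOM is raised in k2.arc_sort(G) while the 4-gram G is being prepared, before any batch is decoded. Shrinking --max-duration to 1 therefore cannot help at that point.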

2022-09-26 20:49:09,605 INFO [decode.py:637] Decoding started

2022-09-26 20:49:09,606 INFO [decode.py:638] {'subsampling_factor': 4, 'vgg_frontend': False, 'use_feat_batchnorm': True, 'feature_dim': 80, 'nhead': 8, 'attention_dim': 512, 'num_decoder_layers': 6, 'search_beam': 20, 'output_beam': 8, 'min_active_states': 30, 'max_active_states': 10000, 'use_double_scores': True, 'env_info': {'k2-version': '1.19', 'k2-build-type': 'Release', 'k2-with-cuda': True, 'k2-git-sha1': '1628f670fd1830e95d6f107465f8a519856dac69', 'k2-git-date': 'Sun Sep 18 02:21:46 2022', 'lhotse-version': '1.5.0.dev+git.08a613a.clean', 'torch-version': '1.12.0', 'torch-cuda-available': True, 'torch-cuda-version': '10.2', 'python-version': '3.9', 'icefall-git-branch': 'master', 'icefall-git-sha1': '9e24642-dirty', 'icefall-git-date': 'Fri Sep 9 21:32:49 2022', 'icefall-path': '/icefall', 'k2-path': '/anaconda3/envs/icefall/lib/python3.9/site-packages/k2/__init__.py', 'lhotse-path': '/anaconda3/envs/icefall/lib/python3.9/site-packages/lhotse/__init__.py', 'hostname': 'ifp-10', 'IP address': '127.0.1.1'}, 'epoch': 77, 'avg': 55, 'method': 'attention-decoder', 'num_paths': 100, 'nbest_scale': 0.5, 'exp_dir': PosixPath('conformer_ctc/exp'), 'lang_dir': PosixPath('data/lang_bpe_500'), 'lm_dir': PosixPath('data/lm'), 'rnn_lm_exp_dir': 'rnn_lm/exp', 'rnn_lm_epoch': 7, 'rnn_lm_avg': 2, 'rnn_lm_embedding_dim': 2048, 'rnn_lm_hidden_dim': 2048, 'rnn_lm_num_layers': 4, 'rnn_lm_tie_weights': False, 'full_libri': True, 'manifest_dir': PosixPath('data/fbank'), 'max_duration': 1, 'bucketing_sampler': True, 'num_buckets': 30, 'concatenate_cuts': False, 'duration_factor': 1.0, 'gap': 1.0, 'on_the_fly_feats': False, 'shuffle': True, 'drop_last': True, 'return_cuts': True, 'num_workers': 2, 'enable_spec_aug': True, 'spec_aug_time_warp_factor': 80, 'enable_musan': True, 'input_strategy': 'PrecomputedFeatures'}

2022-09-26 20:49:09,957 INFO [lexicon.py:176] Loading pre-compiled data/lang_bpe_500/Linv.pt

2022-09-26 20:49:10,017 INFO [decode.py:648] device: cuda:0

2022-09-26 20:49:13,570 INFO [decode.py:690] Loading G_4_gram.fst.txt

2022-09-26 20:49:13,570 WARNING [decode.py:691] It may take 8 minutes.

Traceback (most recent call last):

File "/icefall/egs/librispeech/ASR/./conformer_ctc/decode.py", line 823, in <module>

main()

File "/anaconda3/envs/icefall/lib/python3.9/site-packages/torch/autograd/grad_mode.py", line 27, in decorate_context

return func(*args, **kwargs)

File "/icefall/egs/librispeech/ASR/./conformer_ctc/decode.py", line 707, in main

G = k2.arc_sort(G)

File "/anaconda3/envs/icefall/lib/python3.9/site-packages/k2/fsa_algo.py", line 567, in arc_sort

ragged_arc, arc_map = _k2.arc_sort(fsa.arcs, need_arc_map=need_arc_map)

RuntimeError: CUDA out of memory. Tried to allocate 3.45 GiB (GPU 0; 10.92 GiB total capacity; 9.14 GiB already allocated; 1.23 GiB free; 9.14 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

Is there any workaround other than modulating --max-duration?

> Is there any workaround other than modulating --max-duration?

One workaround is to use a GPU with a larger RAM, e.g., 32 GB.

Another workaround is to prune the 4-gram. You can use https://github.com/kaldi-asr/kaldi/pull/4594 to do that.

(Note: It is a single self-contained Python script without any external dependencies.)
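Before pruning, it may help to see how many n-grams each order contributes. Standard ARPA files list these counts in a \data\ header (lines like ngram 4=...), so they can be read without loading the whole model.

The sketch below stops at the first n-gram section. The function name is hypothetical. The file name in the usage line is also an assumption, since this thread only shows the FST text version, G_4_gram.fst.txt.

```python
def arpa_ngram_counts(lines):
    """Read the \\data\\ header of an ARPA LM and return {order: count}."""
    counts = {}
    in_header = False
    for raw in lines:
        line = raw.strip()
        if line == "\\data\\":
            in_header = True
            continue
        if in_header:
            if line.startswith("ngram "):
                order, count = line[len("ngram "):].split("=")
                counts[int(order)] = int(count)
            elif line.startswith("\\"):  # e.g. "\1-grams:" ends the header
                break
    return counts
```

Usage might look like: with open("4-gram.arpa") as f: print(arpa_ngram_counts(f)). Because it stops at the first section marker, it reads only the first few lines even of a multi-gigabyte file.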

> One workaround is to use a GPU with a larger RAM, e.g., 32 GB.

I am currently using 3 GPUs, each with 12GB RAM. However, it seems like decode.py only uses the first GPU instead of utilizing all three of them.

> I am currently using 3 GPUs, each with 12GB RAM. However, it seems like decode.py only uses the first GPU instead of utilizing all three of them.

In that case, another workaround is to move G to a separate device. https://github.com/k2-fsa/icefall/blob/9ae2f3a3c5a3c2336ca236c984843c0e133ee307/egs/librispeech/ASR/conformer_ctc/decode.py#L706

Please make sure that inputs for later computations are on the same device.

> However, it seems like decode.py only uses the first GPU instead of utilizing all three of them.

Yes, by default only GPU 0 is used for computation.

> In that case, another workaround is to move G to a separate device.

I changed the device on line 706 to torch.device("cuda", 1):

G = k2.Fsa.from_fsas([G]).to(torch.device("cuda", 1))

But I am still getting OOM...

Traceback (most recent call last):

File "/icefall/egs/librispeech/ASR/./conformer_ctc/decode.py", line 824, in <module>

main()

File "/anaconda3/envs/icefall/lib/python3.9/site-packages/torch/autograd/grad_mode.py", line 27, in decorate_context

return func(*args, **kwargs)

File "/icefall/egs/librispeech/ASR/./conformer_ctc/decode.py", line 708, in main

G = k2.arc_sort(G)

File "/anaconda3/envs/icefall/lib/python3.9/site-packages/k2/fsa_algo.py", line 567, in arc_sort

ragged_arc, arc_map = _k2.arc_sort(fsa.arcs, need_arc_map=need_arc_map)

RuntimeError: CUDA out of memory. Tried to allocate 3.45 GiB (GPU 1; 10.92 GiB total capacity; 8.08 GiB already allocated; 2.29 GiB free; 8.08 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

Is GPU 1 used exclusively by you?

Yes.
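GPU 1 holds only G, so the 8.08 GiB already allocated there is presumably G itself. k2.arc_sort then asks for another 3.45 GiB on top of that.

Doing the arithmetic with the figures from the two OOM messages in this thread shows why neither placement fits on a 10.92 GiB card, whatever the batch size. Fragmentation is ignored, and the variable and function names are illustrative only.

```python
# Figures copied from the two OOM messages in this thread (GiB).
TOTAL_CAPACITY = 10.92
ARC_SORT_REQUEST = 3.45  # the single allocation that failed inside k2.arc_sort
ATTEMPTS = {
    "GPU 0, model and G together": {"allocated": 9.14, "free": 1.23},
    "GPU 1, G alone": {"allocated": 8.08, "free": 2.29},
}


def shortfall(free, request):
    """GiB missing for one allocation of `request` GiB (0.0 if it fits; fragmentation ignored)."""
    return max(0.0, round(request - free, 2))


for name, mem in ATTEMPTS.items():
    peak = round(mem["allocated"] + ARC_SORT_REQUEST, 2)
    print(
        f"{name}: needs about {peak} of {TOTAL_CAPACITY} GiB, "
        f"short by {shortfall(mem['free'], ARC_SORT_REQUEST)} GiB"
    )
```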

Your GPU has about a third of the memory of ours. Try setting --max-duration much smaller.


I set --max-duration 50 and got OOM. I will try --max-duration 10.

I got the same error even with --max-duration 10:

RuntimeError: CUDA out of memory. Tried to allocate 3.45 GiB (GPU 1; 10.92 GiB total capacity; 8.08 GiB already allocated; 2.29 GiB free; 8.08 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

Ah, it's the arc sorting of G that is failing.

You could do that on the CPU, I guess. At that point G may already be arc-sorted, so it may be possible to simply comment out that line. If it is not already arc-sorted, you may be able to run that command while G is still on the CPU.
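To decide whether the k2.arc_sort call can simply be dropped, one can check the property it enforces: within each state, arcs are ordered by label. The sketch below checks this on arcs given as (src, dest, ilabel, olabel) tuples in file order, for example as parsed from an OpenFst text file.

It is plain Python, not a k2 API, and is_arc_sorted is a hypothetical name. It also ignores any tie-breaking k2 may apply among arcs with equal labels.

```python
def is_arc_sorted(arcs):
    """Check that, for each source state, arcs appear in non-decreasing input-label order.

    `arcs` is an iterable of (src, dest, ilabel, olabel) tuples in file order.
    """
    last_label = {}  # source state -> label of the most recent arc seen from it
    for src, _dest, ilabel, _olabel in arcs:
        if src in last_label and ilabel < last_label[src]:
            return False
        last_label[src] = ilabel
    return True
```

Running this over the 7.3G G_4_gram.fst.txt in pure Python would be slow. It is meant to illustrate the check, not to replace k2.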

Problem solved.

I changed "cuda", 0 on line 646 to "cuda", 3:

https://github.com/k2-fsa/icefall/blob/9ae2f3a3c5a3c2336ca236c984843c0e133ee307/egs/librispeech/ASR/conformer_ctc/decode.py#L646

while also keeping this G on the CPU:

https://github.com/k2-fsa/icefall/blob/9ae2f3a3c5a3c2336ca236c984843c0e133ee307/egs/librispeech/ASR/conformer_ctc/decode.py#L706

Thank you very much for your help!

I've finished training the LibriSpeech Conformer CTC model. However, whenever I try to decode this model, the following error message comes up.

I've tested other methods and found that ctc-decoding is the only method that is currently working. How can I fix this issue?