Thank you for sharing the excellent analysis, @brandond. Still, if k3s had a way to modify the default periods of its control loops (I suspect health checks, node updates, and so on), that load could drop drastically, at the expense of slower response times.

Those parameters are configurable on all the subsystems that k3s embeds.

For example, see the kube-controller-manager flags listed here [1]; the kubelet, kube-proxy, and the other components are tunable in the same way.

Is it possible to access those settings from k3s?

--horizontal-pod-autoscaler-cpu-initialization-period duration

--horizontal-pod-autoscaler-downscale-stabilization duration

--horizontal-pod-autoscaler-initial-readiness-delay duration

--horizontal-pod-autoscaler-sync-period duration

--leader-elect-lease-duration duration

--leader-elect-renew-deadline duration

--leader-elect-retry-period duration

--log-flush-frequency duration

--mirroring-endpointslice-updates-batch-period duration

--namespace-sync-period duration

--node-monitor-grace-period duration

--node-monitor-period duration

--node-startup-grace-period duration

--pod-eviction-timeout duration

--pvclaimbinder-sync-period duration

--resource-quota-sync-period duration

--route-reconciliation-period duration

[1] https://kubernetes.io/docs/reference/command-line-tools-reference/kube-controller-manager/
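To make the question concrete, here is a rough sketch of what I have in mind. It assumes the `--kube-controller-manager-arg` pass-through flag of `k3s server` (which takes `key=value` pairs without the leading dashes) is available in the installed version. The two durations are hypothetical values picked only for illustration, not tested recommendations. The script only builds and prints the argument string; the actual install line is left commented out.

```shell
#!/bin/sh
# Sketch: build an INSTALL_K3S_EXEC string that forwards longer loop periods
# to the embedded kube-controller-manager.
# ASSUMPTION: `k3s server` accepts --kube-controller-manager-arg key=value.
# ASSUMPTION: the durations below are illustrative, not tuned values.

K3S_EXEC="server --no-deploy traefik"

for kv in \
    "node-monitor-period=10s" \
    "horizontal-pod-autoscaler-sync-period=60s"
do
    # Each controller-manager flag is forwarded as its own pass-through argument.
    K3S_EXEC="${K3S_EXEC} --kube-controller-manager-arg ${kv}"
done

# Print the result so it can be reviewed before installing anything.
echo "INSTALL_K3S_EXEC=${K3S_EXEC}"

# To apply it, the string would be handed to the installer, for example:
# curl -sfL https://get.k3s.io | INSTALL_K3S_EXEC="${K3S_EXEC}" sh -
```

If this works as assumed, the same pattern should extend to the other embedded components, but I have not measured how much idle CPU it would actually save.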

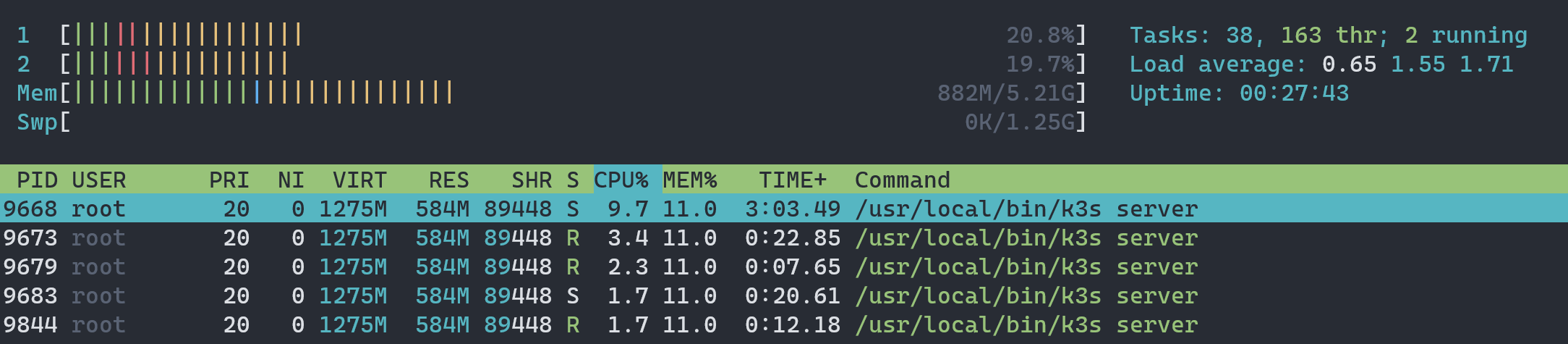

Environmental Info:

K3s Version: k3s version v1.18.8+k3s1 (6b595318)

Running on CentOS 7.8

Node(s) CPU architecture, OS, and Version: Linux k3s 3.10.0-1127.19.1.el7.x86_64 #1 SMP Tue Aug 25 17:23:54 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux

AMD GX-412TC SOC with 2GB RAM

Cluster Configuration: Single node installation

Describe the bug:

When deploying the latest stable k3s on a single node, CPU and memory usage is significant. I understand that Kubernetes isn't lightweight by definition, but k3s is really interesting for creating and deploying appliances. On small (embedded) systems, the baseline CPU and memory usage matters (I'm not talking about modern servers here). Is there a way to reduce this resource usage, or at least to understand what k3s is spending resources on when nothing is deployed?

Steps To Reproduce:

curl -sfL https://get.k3s.io | INSTALL_K3S_EXEC="server --no-deploy traefik" sh

Expected behavior:

Ideally, lower CPU and memory usage when nothing is deployed and running.

Actual behavior:

500 MB of memory used and 5% CPU usage on each core (4-core CPU) when idle.

Additional context / logs: