It turns out this is due to precision loss in draw_markers(). Here's a temporary fix: instead of applying the transform matrix in float32 on the GPU, apply it in float64 on the CPU.

    def draw_markers(self, gc, marker_path, marker_trans, path,
                     trans, rgbFace=None):
        positions = path.vertices
        if len(positions) == 1:  # single markers don't need a particle shader to draw
            positions = trans.transform(positions)
            translation = Affine2D().translate(*positions[0])
            return self.draw_path(gc, marker_path, marker_trans + translation, rgbFace)

        marker_path = marker_trans.transform_path(marker_path)
        polygons = self.path_to_poly(marker_path, rgbFace is not None)

        # for precision: transform the positions on the CPU (float64)
        # and only then cast them to float32 for the GPU
        positions = trans.transform(positions)
        arr_data = numpy.array(positions).astype(numpy.float32).tobytes()
        pos_vbo = self._gpu_cache(self.context, hash(arr_data), VBO, arr_data)

        with ObjectContext(self.particle_shader) as program, ClippingContext(gc):
            program.bind_attr_vbo("shift", pos_vbo)
            # positions are already transformed, so the shader gets an identity matrix
            program.set_uniform3m("trans", Affine2D().get_matrix(), transpose=True)
            program.set_attr_divisor("pos", 0)
            program.set_attr_divisor("shift", 1)

            for polygon in polygons:
                arr_data = numpy.array(polygon).astype(numpy.float32).tobytes()
                poly_vbo = self._gpu_cache(self.context, hash(arr_data), VBO, arr_data)
                program.bind_attr_vbo("pos", poly_vbo)

                if rgbFace is not None and len(polygon) >= 3:
                    col = get_fill_color(gc, rgbFace)
                    program.set_uniform4f("color", *col)
                    glDrawArraysInstanced(GL_POLYGON, 0, len(polygon) - 1, len(positions))

                if gc.get_linewidth() > 0:
                    with StrokedContext(gc, self):
                        col = get_stroke_color(gc, self)
                        program.set_uniform4f("color", *col)
                        glDrawArraysInstanced(GL_LINE_STRIP, 0, len(polygon), len(positions))
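
To illustrate why doing the transform in float64 first helps, here is a rough NumPy-only sketch. It does not use mplopengl or OpenGL at all. The helper names, the 800-pixel axis, the 1e-3 data interval and the `offset` values are all assumptions made up for this illustration, not taken from the original report. It compares casting the data to float32 before the affine transform (roughly what a float32 GPU path would do) with transforming in float64 and casting only the final pixel coordinates.

```python
import numpy as np


def to_pixels_f32(x, scale, shift):
    """Hypothetical GPU-like path: cast data and matrix terms to float32 first."""
    x32 = x.astype(np.float32)
    return x32 * np.float32(scale) + np.float32(shift)


def to_pixels_f64(x, scale, shift):
    """CPU path: transform in float64, cast to float32 only at the very end."""
    return (x * scale + shift).astype(np.float32)


def max_pixel_error(offset, width=1e-3, pixels=800.0, count=50):
    """Return (float32-path error, float64-path error) in pixels.

    The data covers the small interval [offset, offset + width] and is
    mapped linearly onto [0, pixels]. All values are illustrative.
    """
    x = np.linspace(offset, offset + width, count)
    scale = pixels / width
    shift = -offset * scale
    reference = x * scale + shift  # float64 reference result
    err32 = np.max(np.abs(to_pixels_f32(x, scale, shift).astype(np.float64) - reference))
    err64 = np.max(np.abs(to_pixels_f64(x, scale, shift).astype(np.float64) - reference))
    return err32, err64


for offset in (1.0, 1000.0, 100000.0):
    err32, err64 = max_pixel_error(offset)
    print(f"offset={offset:>9}: float32 path error {err32:.3g} px, "
          f"float64-then-cast error {err64:.3g} px")
```

Under these assumptions, the float32 path's error grows with the offset, because float32 can no longer resolve the small interval once the values are large, while the float64-then-cast path stays well below a pixel. That is the same idea as the workaround above: transform first, then cast.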

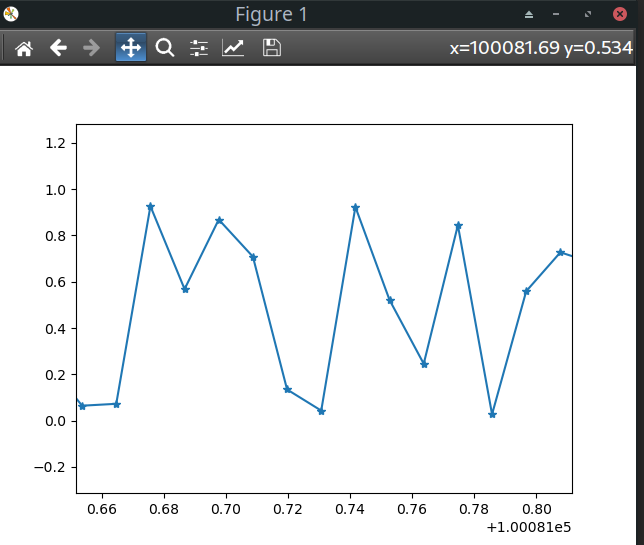

Markers are not displayed properly on small intervals when using mplopengl. When n = 1000, the markers are slightly offset. When n = 100000, the markers are totally broken.

On the Qt5Agg backend, there is no such issue.